The Salesforce Unified Intelligence Platform (UIP) team is building a shared, central, internal data intelligence platform. Designed to drive business insights, UIP helps improve user experience, product quality, and operations. At Salesforce, Trust is our number one company value and building in robust security is a key component of our platform development. In this blog, I’ll share our experience and learnings relating to security design, covering topics such as data classification, data encryption, network security, authentication, data access, multi-tenancy, data environments, and third-party software. If you also work on data platforms, I hope this blog will provide some ideas!

Background

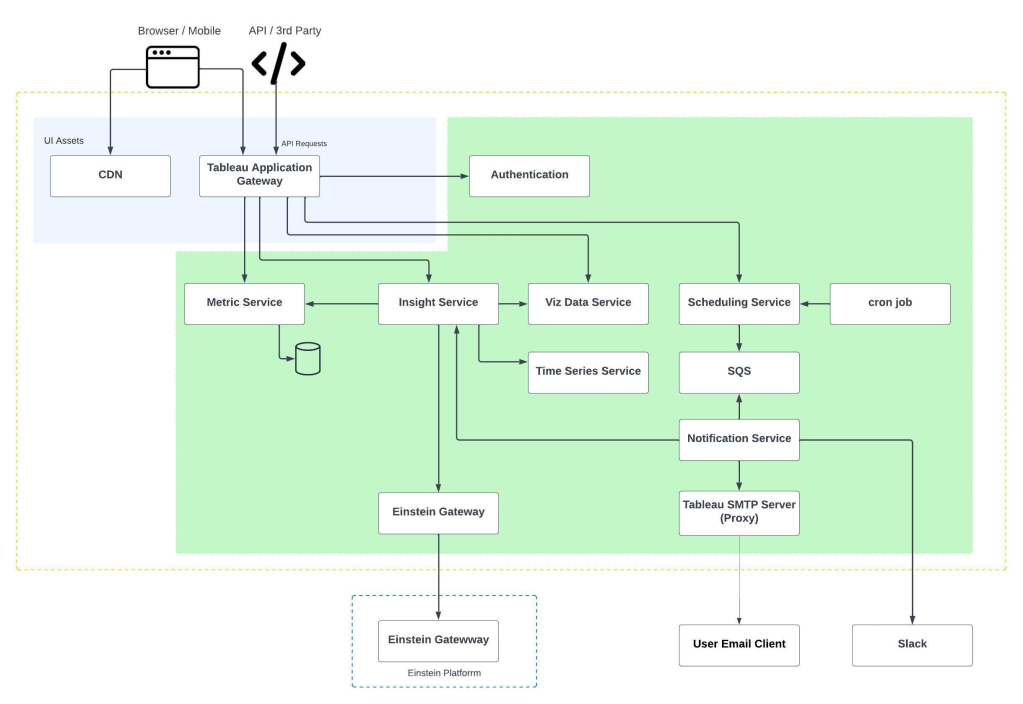

UIP’s predecessor was a huge Hadoop cluster that stored Salesforce’s internal data and provided internal services (such as HDFS, Hive, and Spark) to teams across the company. It ran in our first-party data centers and, as a result, faced several challenges around capacity, scalability, and feature agility. To overcome those challenges, and with help from several infrastructure teams, we redesigned the system and built UIP from the ground up to run in public clouds. It is still a work in progress, but the diagram shows the architecture we envision.

When we look at our internal users, their usage scenarios typically follow a data lifecycle in logical order. Looking at the diagram from bottom to top:

- First, our app developers produce internal logs, which are transported into object stores. Some users also need a data warehouse built from the object stores.

- With this massive amount of data, infrastructure engineers need ETL to transform and prepare data and metadata; data stewards need to do data cataloging to power discovery and to do data governance such as access and retention control.

- Then users can compute the data. Data analysts and product managers like to run interactive queries; data engineers like to run batch jobs and to orchestrate the jobs; data scientists like to run machine learning jobs.

- Finally, all users can drive business insights from the compute results. They can visualize them, share them in team notebooks, or use IDEs, customized tools, and automations to further consume the results.

Overall, UIP enables all personas along the data lifecycle. We’ve designed it so that users of the current system, as well as new users, can migrate to public clouds as soon (and in as frictionless a way) as possible.

There is a whole lot more I could share about UIP, but in an effort to keep this blog short, I’ll only talk about the security aspect here.

Security-Driven Design

You might think that, as an internal-facing platform, UIP wouldn’t need to be that concerned with security, but that’s not the case. Trust is our #1 company value, from the inside out, and UIP’s design was guided by security considerations from the beginning. Why didn’t we design the functionality first and add the security bits at the end? Because we didn’t want to hit last-minute surprises or discover security vulnerabilities that required an architectural redesign, forcing us to throw away previous design and implementation work. Imagine you’ve just built a new house, and then the city inspector comes and requires you to change it from a split-level to a rambler, to change the walls from wood to concrete, and to replace the underground iron plumbing pipes with copper. The disruption from such a late redesign would be huge! This is particularly true for a data platform, which tends to be more exposed to security risks. Data-related security issues, such as data leaks or data tampering, can be detrimental to a company and its customers, with long-lasting effects. So we not only started with security design, but also kept security as the main theme throughout our development lifecycle. This practice aligns with the modern security development lifecycle (SDL) method.

Next, I’ll select a few components of our security design and talk about each of them.

Data Classification

One lesson we learned is that data classification has a huge ripple effect on architectural design, so it requires consideration early on. Different data sensitivity levels mean different compliance requirements and legal liabilities, which affected our technical choices for authentication and authorization mechanisms, data sharing restrictions, data recovery strategy, and even the way our infra engineers access the platform and troubleshoot the hosts. To revisit our earlier building analogy, the construction of a storage vault would be completely different depending on whether it’s for a park, a bank, or an armory.

We reviewed the data to be stored in UIP with our legal and security teams in order to classify it. During data ingestion, we combine many techniques, such as schema checks, anonymization, compliance scans, incident handling, and more, in order to meet the requirements of the target data classes. In addition, UIP follows the design principle of “zero-trust infrastructure” to protect against accidental data leaks by internal users and services as much as against external threats. More details on some of the data protection mechanisms are in the sections below.
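As a rough illustration of how a schema check and an anonymization step might be chained at ingestion time, here is a minimal Python sketch. The field names, the `EXPECTED_SCHEMA` mapping, and the choice of keyed hashing (HMAC-SHA256) for pseudonymization are assumptions made for this example, not a description of UIP’s actual pipeline.

```python
import hashlib
import hmac

# Hypothetical schema for an ingested log record: field name -> required type.
EXPECTED_SCHEMA = {"user_id": str, "event": str, "latency_ms": int}

# Hypothetical set of fields treated as sensitive for the target data class.
SENSITIVE_FIELDS = {"user_id"}


def check_schema(record: dict) -> None:
    """Reject records with missing, unexpected, or wrongly typed fields."""
    if set(record) != set(EXPECTED_SCHEMA):
        mismatched = sorted(set(record) ^ set(EXPECTED_SCHEMA))
        raise ValueError(f"missing or unexpected fields: {mismatched}")
    for name, expected_type in EXPECTED_SCHEMA.items():
        if not isinstance(record[name], expected_type):
            raise ValueError(f"field {name!r} should be {expected_type.__name__}")


def pseudonymize(value: str, secret: bytes) -> str:
    """Replace a sensitive value with a keyed hash (HMAC-SHA256, hex encoded)."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()


def ingest(record: dict, secret: bytes) -> dict:
    """Validate a record, then pseudonymize its sensitive fields."""
    check_schema(record)
    return {
        name: pseudonymize(value, secret) if name in SENSITIVE_FIELDS else value
        for name, value in record.items()
    }
```

Because the hash is keyed, the same user maps to the same token across records (so joins still work), while the original identifier cannot be recovered without the secret.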

Data Encryption

Here I’ll focus on at-rest encryption (more on in-transit encryption later). Encryption encodes information to obscure its meaning: data is encrypted when it’s stored and decrypted when it’s retrieved. Encryption methods use cryptography and a key management system (KMS) to add an extra layer of security on top of the identity and access management (IAM) system, so that even if intruders somehow gain access to the data, they will not be able to decode it. A real-world analogy is safe deposit boxes at a bank: the bank keeps the boxes inside a locked vault, and each individual box also has its own key.

Since UIP resides in public clouds, data encryption is particularly important to us. There are multiple levels of encryption to choose from. At the lowest level, you rely on the cloud vendor to transparently manage encryption and decryption for you, and to manage the cryptographic keys on your behalf. That is to say, you trust the cloud vendor not to give the keys to anyone, including their own employees. At the middle level, you still rely on the cloud vendor to perform encryption and decryption, but you control the keys yourself rather than letting the vendor manage them. The tradeoff is that you need to manage key rotation and lifecycle yourself. The strictest level is client-side (as opposed to server-side) encryption, which means you encrypt the data on-premises before it ever enters the cloud, so the cloud vendor has no way to decrypt your data.

There are more options but, in general, there are tradeoffs between the strictness level and engineering/operational costs, as well as implications for query performance and query quota. A best practice is to adopt the middle level, using server-side encryption with dedicated customer-managed keys, to achieve a good balance between security requirements and engineering implications.
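To make the middle level concrete, here is a minimal sketch that assumes the object store is Amazon S3 accessed through boto3. That is an assumption for illustration only, since this post does not tie UIP to a particular vendor. The sketch only builds the request parameters: `ServerSideEncryption` and `SSEKMSKeyId` are parameters of S3’s `PutObject` call, while the bucket name, object key, and key ID are hypothetical placeholders.

```python
def sse_kms_put_params(bucket: str, key: str, body: bytes, kms_key_id: str) -> dict:
    """Build keyword arguments for an S3 PutObject request that asks for
    server-side encryption with a customer-managed KMS key."""
    if not kms_key_id:
        raise ValueError("a customer-managed key ID is required")
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ServerSideEncryption": "aws:kms",  # the vendor encrypts, using KMS
        "SSEKMSKeyId": kms_key_id,          # with a key that we control
    }


# Hypothetical bucket, object key, and KMS key ID, for illustration only.
params = sse_kms_put_params(
    bucket="example-uip-bucket",
    key="logs/part-0000.parquet",
    body=b"example payload",
    kms_key_id="1234abcd-12ab-34cd-56ef-1234567890ab",
)
# With boto3 installed and credentials configured, the request would be:
#   boto3.client("s3").put_object(**params)
```

The application never handles the data key itself; it only names which customer-managed key the vendor should use, which is what keeps the engineering cost lower than client-side encryption.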

Network Security

Many common design principles apply to network security; I’ll pick the few that are most relevant to a data platform.

- Disable outbound access to the internet by default, since we are an internal-facing platform and don’t want data egress. We do, however, allowlist certain ports provided by cloud vendors in order to use their services.

- Apply network segmentation using subnets, so that even if intruders break into a less restricted subnet, they still won’t be able to access the more restricted subnets. UIP places load balancers that receive user requests in public subnets, and places big data computing resources in private subnets.

- Use security groups for finer-grained access control. For example, within a single big data computing subnet, we have different clusters for Spark, Presto, Airflow, notebooks, and so on; each cluster may have different groups of nodes, such as controller nodes and worker nodes. We place the clusters and nodes into different security groups, so we can configure the minimal set of allowed inbound rules for each group separately.

- Implement in-transit encryption for data exchanged over the wire. This is used together with data encryption at rest in order to achieve data encryption end-to-end. In-transit encryption makes sure data is not sent in clear text, so it’s difficult for intruders to intercept the communications and steal the data. An analogy would be to use sealed envelopes instead of postcards to communicate. UIP uses TLS with private certificates, so the hosts can authenticate and securely talk to each other.
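The security-group idea in the list above can be sketched as a default-deny lookup: traffic is allowed only if an explicit inbound rule names its source group and port. The group names and port numbers below are hypothetical, and real security groups are configured through the cloud vendor rather than in application code. This is only a model of the evaluation logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    source_group: str  # security group the traffic comes from
    port: int          # destination port that is opened


# Hypothetical groups and ports. Anything not listed here is denied.
INBOUND_RULES = {
    "spark-controller": {InboundRule("load-balancer", 8443)},
    "spark-worker": {InboundRule("spark-controller", 7078)},
}


def is_allowed(source_group: str, dest_group: str, port: int) -> bool:
    """Default deny: allow only traffic that matches an explicit inbound rule."""
    return InboundRule(source_group, port) in INBOUND_RULES.get(dest_group, set())
```

In this model the load balancer can reach the Spark controller but not the workers directly, so a compromised front end does not automatically gain access to worker nodes.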

Kerberos Authentication

UIP uses the Salesforce single sign-on (SSO) infrastructure to authenticate users. When a user uses a service, that service generally triggers more services under the hood to finish the job. This requires service-to-service authentication, so that services can trust each other and avoid sending data to identities forged by intruders. Some services provided by UIP come from the Hadoop ecosystem, such as Hive, Hive Metastore, and Spark, and they use Kerberos as the standard authentication method. So, we store these services’ principals in a Kerberos key distribution center (KDC) and kerberize the corresponding clusters. One problem we encountered was that we want different users on a cluster to run under their own identities, not the shared service principal, in order to achieve role-based access control (more on that later) and user auditing. So, we enabled user impersonation: services such as notebooks pass Kerberos authentication when talking to Spark, but run queries in the name of the individual user who started the notebook. An analogy is passing the airport boarding gate by swiping your boarding pass, which is a temporary ticket granted in the name of your ID.
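The impersonation flow can be sketched as two checks: the service must authenticate as itself, and it must be permitted to act for the requested user. The rule table below is loosely modeled on Hadoop’s `hadoop.proxyuser.<name>.groups` setting. The principal, group, and user names are hypothetical, and the `authenticated` flag stands in for a real Kerberos handshake, which this sketch does not perform.

```python
# Hypothetical proxy-user rules: which groups of end users each service
# principal is allowed to impersonate.
PROXY_RULES = {"notebook-svc": {"analysts", "data-scientists"}}

# Hypothetical group membership for end users.
USER_GROUPS = {"alice": {"analysts"}, "bob": {"contractors"}}


def effective_user(service_principal: str, authenticated: bool, run_as: str) -> str:
    """Return the identity a query should run under.

    The service must first authenticate as itself (represented here by the
    `authenticated` flag). Only then may it act on behalf of a user who
    belongs to a group it is allowed to impersonate.
    """
    if not authenticated:
        raise PermissionError(f"{service_principal} failed authentication")
    allowed_groups = PROXY_RULES.get(service_principal, set())
    if not (USER_GROUPS.get(run_as, set()) & allowed_groups):
        raise PermissionError(f"{service_principal} may not impersonate {run_as}")
    return run_as
```

Downstream authorization and audit logs then see the end user (for example `alice`) rather than the shared service principal.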

Data Access

Data is the single most important asset of any data platform, including UIP. Data access, or authorizing who can access what data, is a critical piece of security design. The challenge we faced is this: UIP has a massive amount of data, connects to many tools hosted in clouds, on-premises, and by other vendors, and serves many internal users from different product lines and with different clearance levels. We need a unified and coherent data permissions control strategy, so that no user and no tool can access a dataset they’re not supposed to. By analogy, it’s like a hotel key card system that needs to decide who can access which rooms and facilities, including guests, maids, and other staff. Therefore, we adopted several design principles:

- Role-based access control (RBAC). We assign a user to one or more LDAP groups. Each group is typically (but not necessarily) a subset of members from a team, such as “teamX_general,” “teamX_restricted,” “teamX_contractors,” and so on. We map the groups to roles, and define policies controlling which datasets, tables, and bucket folders each role can access. We create these groups, roles, and policies upon user request, and rely on group admins to manage membership themselves from then on. At this time, we’re also considering finer-grained access controls via attributes (a.k.a. attribute-based access control, or ABAC), such as user attributes, data tags, environmental variables, and so on. Both open source communities and public cloud vendors provide related services; we have also developed our own customized solutions for more flexibility, and plan to open source them later.

- Least privilege. For example, through RBAC, users only get the permissions they explicitly need, and don’t have access to datasets they’re not authorized for. As another example, not every service needs to write to all the buckets and databases; we can explicitly reduce the scope of resources and actions for each service via policies.

- Data agility. Managing least privilege is a never-ending job; sometimes it can require cumbersome restrictions and extra approval requests, and become counterproductive. We don’t want our users to feel frustrated that their hands are tied when they need to access a given dataset. Therefore, we need a balance between least privilege and data agility, which sometimes requires a creative design. For example, we provide a sandbox space for each team and grant the team higher privileges in that sandbox. Users can test our platform and play with sanitized, temporary data in the sandbox and refine their jobs before they put them into production spaces.
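The group-to-role-to-policy chain described in the list above can be sketched as a default-deny lookup. All names below (users, groups, roles, datasets) are hypothetical, and a real deployment would resolve groups from LDAP and policies from a policy engine rather than from in-memory dictionaries.

```python
# Hypothetical mappings: user -> LDAP groups, group -> roles, role -> policies.
LDAP_GROUPS = {"alice": {"teamX_general"}, "bob": {"teamX_contractors"}}
GROUP_ROLES = {
    "teamX_general": {"teamX_reader", "teamX_sandbox_writer"},
    "teamX_contractors": {"teamX_sandbox_writer"},
}
# Each policy entry is a (resource, action) pair that the role may perform.
ROLE_POLICIES = {
    "teamX_reader": {("teamX.events", "read")},
    "teamX_sandbox_writer": {("teamX_sandbox.scratch", "read"),
                             ("teamX_sandbox.scratch", "write")},
}


def can_access(user: str, resource: str, action: str) -> bool:
    """Least privilege: deny unless some role of the user explicitly allows it."""
    for group in LDAP_GROUPS.get(user, set()):
        for role in GROUP_ROLES.get(group, set()):
            if (resource, action) in ROLE_POLICIES.get(role, set()):
                return True
    return False
```

Here the sandbox role carries broader permissions than the production reader role, which mirrors the balance between least privilege and data agility: contractors can write to the team sandbox without gaining any access to production tables.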

Multitenancy

As a data platform, we provide users with access to various big data clusters such as Presto, Spark, and Airflow. One decision we make for each type of cluster is whether it’s single-tenant or multi-tenant. This applies to other types of resources as well, but decisions at the cluster level are the most important. Single tenancy means we launch many clusters, one for each team of users; multitenancy means we launch a cluster that serves multiple teams. The benefits of multi-tenant clusters include:

- A shared cluster tends to cost less than multiple dedicated clusters,

- Fewer clusters means there is less operational overhead required to manage, deploy, patch, and support them.

The benefits of single-tenant clusters include:

- Each team’s data is securely isolated from others,

- Teams won’t compete for cluster resources,

- A team can have certain customizations in its cluster.

The drawbacks of each model mirror the benefits of the other. To make an analogy, an apartment building can choose to install a washer/dryer within each unit, or one big washer/dryer in the lobby shared by all units.

For UIP, decisions are made independently for each type of cluster and, so far, the majority of our clusters are multi-tenant. The primary motivations are to optimize for cost and operations. We address the concerns regarding multi-tenancy as follows:

- Data isolation is achieved via RBAC,

- Cluster resources can be given a quota for each tenant based on Kubernetes namespaces or resource queues, and

- We can always launch a dedicated customized cluster for selected tenants if there’s a strong business case.
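The per-tenant quota idea can be modeled with a small admission check. This is a toy sketch with hypothetical tenant names and CPU counts; in practice the limits would be enforced by the cluster itself (for example through Kubernetes namespace quotas or scheduler resource queues) rather than by code like this.

```python
from typing import Dict


class SharedCluster:
    """Toy model of a multi-tenant cluster with a per-tenant CPU quota."""

    def __init__(self, cpu_quotas: Dict[str, int]) -> None:
        self._quotas = dict(cpu_quotas)                    # tenant -> CPU limit
        self._used = {tenant: 0 for tenant in cpu_quotas}  # tenant -> CPUs in use

    def submit(self, tenant: str, cpus: int) -> bool:
        """Admit a job only if it fits in the tenant's remaining quota."""
        if tenant not in self._quotas:
            return False  # unknown tenants get nothing by default
        if self._used[tenant] + cpus > self._quotas[tenant]:
            return False  # would exceed this tenant's share
        self._used[tenant] += cpus
        return True

    def release(self, tenant: str, cpus: int) -> None:
        """Return CPUs to the tenant's quota when a job finishes."""
        self._used[tenant] = max(0, self._used[tenant] - cpus)


# Hypothetical tenants and limits.
cluster = SharedCluster({"teamX": 8, "teamY": 4})
cluster.submit("teamX", 8)  # admitted: teamX is now at its limit
cluster.submit("teamX", 1)  # rejected: teamX has no quota left
cluster.submit("teamY", 4)  # admitted: teamY is unaffected by teamX's usage
```

The point of the model is that one tenant exhausting its share cannot starve another, which is the resource-competition concern that single-tenant clusters would otherwise solve.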

Data Environment

As is common practice, we have separate dev, staging, and production environments. As a data platform, we should store real data assets in the production environment only, not in dev or staging. This means using synthetic data to test our data pipelines and computing services before they are shipped to production. An analogy is a home seller who fills the house with staging furniture to show how the layout could work, but does not fill it with real treasures. One challenge of using synthetic data is that we won’t know the actual user-experienced performance of our services before deploying them. To mitigate that, we first ran some lightweight smoke tests with synthetic data in the dev or staging environment to better understand performance, such as the query time breakdown and the performance impact of different encryption options. Next, we initially released to production only for a selected team of pilot users, so they could perform user acceptance tests by running real-world stress queries and concurrent queries. This greatly helped us identify performance bottlenecks and other issues, so we could address them before opening the platform to a broader set of users.
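As a small illustration of the synthetic-data approach, the sketch below generates reproducible fake log records for a smoke test. The record fields and event types are hypothetical and are not UIP’s real log schema.

```python
import random

# Hypothetical event types for the synthetic log records.
EVENT_TYPES = ["login", "query", "logout"]


def synthetic_records(count: int, seed: int = 0) -> list:
    """Generate `count` fake log records; the same seed gives the same data."""
    rng = random.Random(seed)  # local generator, so runs are reproducible
    return [
        {
            "user_id": f"synthetic-user-{rng.randrange(1000):04d}",
            "event": rng.choice(EVENT_TYPES),
            "latency_ms": rng.randint(1, 500),
        }
        for _ in range(count)
    ]
```

Seeding the generator keeps smoke tests repeatable across dev and staging runs, and the `synthetic-user-` prefix makes it obvious that no record refers to a real person.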

Third-Party Licenses and Security

In this open-source era, many big data services are based on open source. But software under different licenses can carry different legal implications and restrictions regarding distribution, committing, and so on, so you can’t just grab whatever is “free” online. Apache, MIT, and BSD licenses may have fewer restrictions than some other licenses but, regardless, we still did thorough security scans and license reviews with our third-party review team before we included any software in our data platform. We will also do periodic reviews of already included software, in case any of it changes its license in the future. Even more subtle than services are dynamically installed libraries; for example, data scientists who use notebook services often need to try out new packages on the fly and decide whether to keep them, and it’s not a good idea for them to fetch freely from the public internet, for two reasons:

- The license concerns mentioned earlier, and

- Packages from the internet might be harmful. There have been many cases where the official Python package repo, PyPI, contained malicious modules, such as this example.

So instead, we maintain our own internal repo of packages that are scanned and reviewed. For common libraries such as PySpark, we bake them into our Docker images. When users ask for a new package to be included in the repo, we do a review first. An analogy is checking at the doorstep whether a delivered package is legitimate or suspicious before bringing it into the house.
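A gate in front of such an internal repo can be sketched as a lookup against a catalog of reviewed packages. The catalog structure and the function name are hypothetical, and the entries are examples only. In practice the installer would also be pointed at the internal index (pip supports this through its `--index-url` option) so that unreviewed packages are simply not available.

```python
# Hypothetical catalog of packages that have passed security and license review.
REVIEWED_PACKAGES = {
    "numpy": {"versions": {"1.18.1"}, "license": "BSD-3-Clause"},
    "requests": {"versions": {"2.22.0"}, "license": "Apache-2.0"},
}


def check_install_request(name: str, version: str) -> str:
    """Return 'approved' for reviewed name/version pairs, else 'needs-review'."""
    entry = REVIEWED_PACKAGES.get(name.lower())
    if entry is not None and version in entry["versions"]:
        return "approved"
    return "needs-review"
```

Matching on the exact name and version means a look-alike name or an unreviewed release falls through to manual review instead of being installed automatically.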

Conclusion

In this blog, I have shared our experience and learnings about some of the security design aspects of building a data intelligence platform at Salesforce. I hope this gives you some ideas, and we welcome any comments or suggestions! Lastly, this is a learning journey, as we are still in the process of building UIP, and there are many more topics that I’ll have to share later in separate blogs. Stay tuned!

Thanks to Trish Fuzesy, Laura Lindeman, George Hill, Loren Taylor, Threat Intelligence Researchers, Kurt Brown et al from Salesforce for proofreading. Thanks to the UIP team and Salesforce infrastructure teams for their amazing contributions!