In our “Engineering Energizers” Q&A series, we examine the inspiring paths of engineering leaders who have made remarkable strides in their respective fields. Today, we meet Harini Nallan Chakrawarthy, Vice President of Software Engineering, who leads the development of Tableau Pulse. This new Salesforce feature uses generative AI to deliver real-time insights in natural language and visual formats, enabling faster, better-informed decisions based on data.

Join Harini’s team as they revolutionize Tableau, tackling AI/ML integration challenges and scalability obstacles to bring analytics to new customers.

What’s your team’s mission?

Our team’s mission is to empower business users with smart, contextual, AI-driven data insights. We solve the challenge of putting rich analytics directly into their hands, enabling data-based decisions without extensive manual data analysis. We aim to integrate these insights seamlessly into their workflow, whether through Slack, email, or any other tool they use, so that accessing and using the data is effortless. This streamlined process improves efficiency and enables faster decision-making for our customers.

Additionally, we focus on transforming the language of data into a conversational one, ensuring that it is user-friendly and accessible to our customers.

Harini describes how she manages her team and increases productivity.

Can you elaborate on the technical challenges you encountered during the integration of AI/ML capabilities into Tableau Pulse?

Our objective was to offer customers advanced technology that enables conversational experiences, allowing them to engage with their data and rely on data analysis that supports critical business decisions. The major hurdle was determining the appropriate use of large language models (LLMs) for generating insights. Because LLMs can produce misleading information, we established guidelines for their use to ensure the highest level of accuracy and maintain the trust of our Tableau customers. As a result, we use LLMs for their strengths in generating natural language queries and matching intents, while relying on our in-house AI/ML mathematical models to generate the insights themselves.

Testing the relevance of the AI models also remains a challenge. While we have made progress with both manual and automated testing, there is still work to do before this aspect is fully solved. We continue to invest in our test suites to improve test coverage and validate the accuracy and precision of the models, and we are introducing additional ML-specific automation to better track the models over time.

How do the Einstein Trust Layer and metadata integration ensure accurate, reliable, and trustworthy AI insights when you integrate your models with Tableau?

When integrating our AI/ML models with Tableau, we took key measures to ensure the accuracy and reliability of the insights generated, while also addressing the challenge of hallucination.

One key aspect is the use of metadata, which is highly valued within Salesforce. Understanding the context and story behind the data reduces the risk of hallucination. During setup, we actively engage with users to gather metadata and learn about their specific business context, which helps us tailor our AI insights and minimize the chance of inaccurate or unreliable results. The Einstein Trust Layer also plays a significant role in this process, generating summaries based on the insights we calculate.
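To illustrate the division of labor described here, with numbers computed in code and a language model only putting them into words, the sketch below computes a simple period-over-period insight and then checks that every number cited in a generated summary appears among the computed facts. This is a hypothetical guardrail, not Tableau Pulse's actual implementation; `period_over_period` and `numbers_grounded` are illustrative names, and a real check would also need to handle units, dates, and rounding conventions.

```python
import re

# Matches integers and decimals such as "120", "20.0", "-3.5".
NUMBER = re.compile(r"-?\d+(?:\.\d+)?")


def period_over_period(current: float, previous: float) -> dict[str, float]:
    """Deterministic insight (hypothetical): the numbers come from code, not the LLM."""
    change = current - previous
    pct = round(100 * change / previous, 1) if previous else float("nan")
    return {"current": current, "previous": previous, "change": change, "pct_change": pct}


def numbers_grounded(summary: str, facts: dict[str, float]) -> bool:
    """Reject a generated summary that cites any number not among the computed facts.

    Magnitudes are compared (so "fell by 20" matches a change of -20) after
    rounding to one decimal place; thousands separators are stripped first.
    """
    allowed = {round(abs(v), 1) for v in facts.values()}
    cited = {round(abs(float(n)), 1) for n in NUMBER.findall(summary.replace(",", ""))}
    return cited <= allowed
```

A summary such as "Sales rose 20.0% to 120 from 100." passes for `period_over_period(120.0, 100.0)`, while "Sales rose 25% to 120." is rejected because 25 was never computed.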

Additionally, to ensure trust, we pass the customer’s data through the Einstein Trust Layer, which validates that no personally identifiable information (PII) or other customer data leaks, and that no biased or toxic language gets through.
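As a rough illustration of one such safeguard, the following sketch masks obvious PII with placeholder tokens before text is sent to a model and restores it afterward. It is not how the Einstein Trust Layer is implemented; the two regular expressions and the `mask_pii`/`unmask` helpers are hypothetical, and production PII detection needs far richer detectors than regexes.

```python
import re

# Hypothetical, deliberately simple detectors (email and US-style phone numbers).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def mask_pii(text: str) -> tuple[str, dict[str, str]]:
    """Replace PII spans with tokens like <EMAIL_0>; return text and token map."""
    mapping: dict[str, str] = {}
    for label, pattern in PII_PATTERNS.items():
        def _replace(match: re.Match, label: str = label) -> str:
            token = f"<{label}_{len(mapping)}>"  # index keeps tokens unique
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(_replace, text)
    return text, mapping


def unmask(text: str, mapping: dict[str, str]) -> str:
    """Restore the original values after the model has responded."""
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text
```

Only the masked text would leave the trusted boundary; the token map stays local so the response can be re-personalized afterward.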

Can you discuss the scalability challenges your team faced and how you addressed them?

During the pilot phase, an unexpected influx of customers and increased user demand for email digests required us to scale our processing from 500 to 20,000 email digests per day. Combined with Tableau’s flexibility in letting users bring their own data and access patterns, this necessitated a significant investment in scaling Tableau Pulse, key Tableau services, and web concurrency.

To overcome these challenges, we applied both horizontal and vertical scaling, optimizing processing bottlenecks and scaling up running instances. We also invested in load balancing that optimizes cache hits while keeping the API stateless. On the UI side, we improved progressive loading so that users see the most important information quickly. At launch, we supported thousands of internal and external users.
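The interview does not say which routing technique Pulse uses. One common way to get cache affinity from stateless routers is rendezvous (highest-random-weight) hashing, sketched below under that assumption: every router independently computes the same instance for the same key, so repeat requests for a metric reach the instance whose cache is already warm. The instance names and `pick_instance` are hypothetical.

```python
import hashlib


def _score(key: str, instance: str) -> int:
    """Deterministic pseudo-random weight for a (key, instance) pair.

    SHA-256 is used instead of Python's built-in hash(), which is randomized
    per process and would make different routers disagree.
    """
    digest = hashlib.sha256(f"{key}|{instance}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def pick_instance(key: str, instances: list[str]) -> str:
    """Rendezvous hashing: route the key to the instance with the highest score."""
    if not instances:
        raise ValueError("no instances available")
    return max(instances, key=lambda inst: _score(key, inst))


# Hypothetical usage: route requests for a metric to a cache-owning instance.
# pick_instance("metric:sales-by-region", ["insights-1", "insights-2", "insights-3"])
```

Compared with `hash(key) % n`, removing an instance only remaps the keys that instance owned; every other key keeps its warm cache.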

Our long-term goal is to serve the global business user base of approximately 3 billion people. To get there, we prioritize continuous investment in scaling and system management, and we monitor Pulse adoption and scaling through Salesforce Monitoring Cloud and Tableau Pulse.

Harini describes the culture of her Tableau engineering team.

Can you explain the composition of your AI team and how you collaborated with them during the development of Tableau Pulse?

During development, our Tableau AI team consisted of more than 10 engineers, including AI/ML engineers, prompt engineers, and engineers from the insights teams.

We collaborated closely with the Einstein Trust Layer team to ensure seamless orchestration and to address issues related to bias, toxicity, and data privacy. That team also provided an orchestration layer that enabled us to communicate with multiple components while ensuring the security and privacy of user data. They also helped us with rate limiting and with optimizing summary generation to minimize costs.
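Rate limiting of this kind is often implemented with a token bucket. The sketch below assumes that technique, which the interview does not confirm; `TokenBucket` and its parameters are hypothetical. It allows short bursts of up to `capacity` calls and a sustained rate of `refill_per_sec` calls per second, and the injectable `clock` makes it easy to test.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket rate limiter (hypothetical sketch)."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self._clock = clock
        self._tokens = capacity  # start full so an initial burst is allowed
        self._last = clock()

    def _refill(self) -> None:
        # Add tokens in proportion to elapsed time, never exceeding capacity.
        now = self._clock()
        elapsed = now - self._last
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_per_sec)
        self._last = now

    def try_acquire(self, cost: float = 1.0) -> bool:
        """Consume `cost` tokens if available; False means the caller should back off."""
        self._refill()
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False
```

A caller that fails to acquire a token could, for example, defer the request or reuse a previously generated summary instead of paying for a new one.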

Additionally, the Salesforce AI Research team played a key role in developing Tableau Pulse. They kept us updated on the latest models and technologies, validated our approach, and provided guidance on best practices. Their expertise helped us ensure that the models we used were accurate and reliable, since these models are the basis for the insights we provide to our customers.

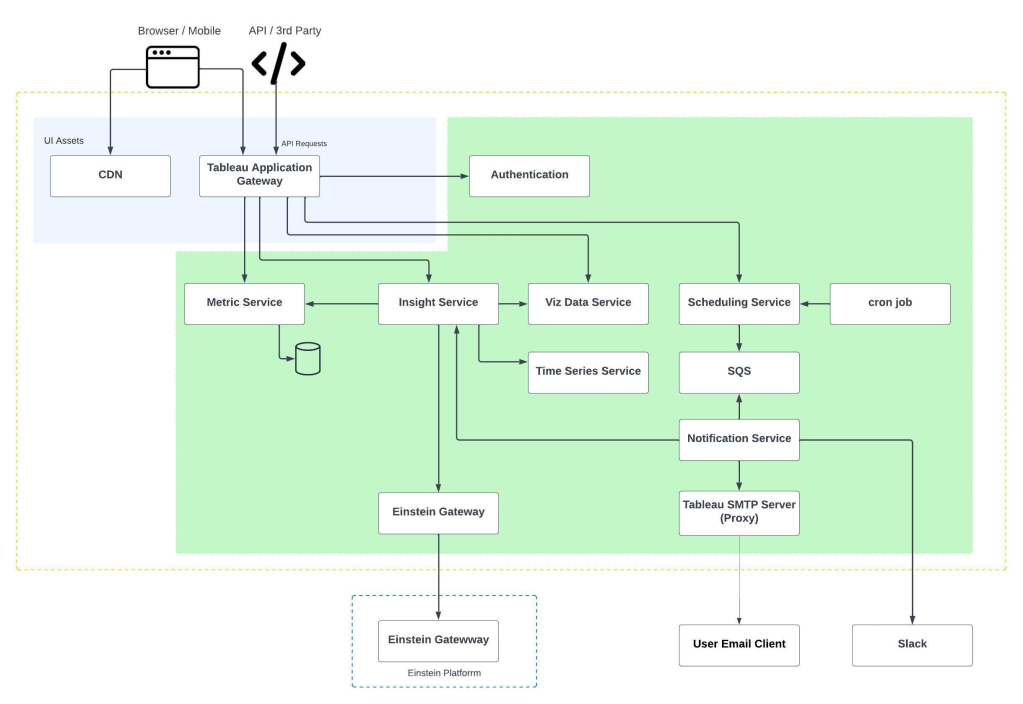

Tableau Pulse’s architecture for generating insights and serving business users.

How do you envision the future of Tableau Pulse in terms of innovation and growth?

These three areas form the foundation of our vision for the future of Tableau Pulse, driving innovation and growth to empower users in their data analysis and decision-making processes:

- Expanding Data Sources and Integration: We aim to expand the range of data sources and integrate public data seamlessly. This expansion will enable us to offer comprehensive insights without requiring users to manually connect the dots. Our vision is to establish relevant insights and metric relationships, empowering users to understand the impact of various factors on their data.

- Leveraging AI Capabilities: We continue to leverage the latest generative AI capabilities to enhance Tableau Pulse’s functionality, including conversational interfaces and smart context learning that enable more intuitive, interactive experiences for users.

- Predictive Metrics: We are exploring the potential of Einstein Discovery to provide predictive metrics. Once we have established confidence in our lookback capabilities, this feature will let users gain insight into future trends.

Learn More

- Read this blog to learn how Tableau’s feature development team overcomes technical challenges to ensure the smooth functioning of various Tableau products

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.