Salesforce is guided by its core values of trust, customer success, innovation, equality, and sustainability. These values are reflected in its commitment to responsibly develop and deploy new technologies like generative AI on behalf of stakeholders — from shareholders to customers to the planet.

The Large Language Models (LLMs) that power generative AI require enormous compute resources, resulting in negative environmental impacts such as carbon emissions, water depletion, and resource extraction within the supply chain. As the world sets “emissions and temperature records, which intensify extreme weather events and other climate impacts across the globe,” the need to reduce planet-warming emissions has never been more urgent. At a time when every additional ton of carbon emitted matters, the development of AI technologies should not exceed planetary boundaries.

While the hypothetical long-term sustainability benefits of AI are significant, with the potential to reduce global emissions 5 to 10% by 2030, Salesforce also remains focused on minimizing environmental impacts in the short term.

Boris shares his view on sustainability and explains his sustainability role at Salesforce.

Read on to learn about Salesforce’s strategies for creating sustainable AI, developed as a collaboration between the AI Research, Sustainability, and Office of Ethical and Humane Use teams.

Optimizing models

The energy needed to train a model depends on the number of its parameters and the size of the training data. For the last few years, the trend has been to significantly increase both factors, resulting in exponential growth (750x growth every 2 years) in energy requirements for transformers, the leading LLM architecture. In fact, this trend seems to be dwarfing Moore’s Law, suggesting that hardware efficiency improvements alone won’t keep up with increased computational demand.

The amount of compute needed to train various models as compared to Moore’s Law. Source: Amir Gholami
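The growth rates in the paragraph above compound quickly. The sketch below assumes the 750x-every-two-years figure for transformer training compute and models Moore’s Law as a doubling every two years, then prints how far apart the two curves drift. It also includes a widely used rule of thumb from the scaling-law literature, that training a dense transformer costs roughly 6 FLOPs per parameter per training token, to show why energy tracks both model size and data size. That approximation is not Salesforce’s own accounting, and the model and dataset sizes are hypothetical.

```python
def compound_growth(factor_per_period: float, years: float, period_years: float = 2.0) -> float:
    """Total growth after `years` if a quantity multiplies by `factor_per_period` every `period_years`."""
    return factor_per_period ** (years / period_years)


def approx_training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training cost for a dense transformer: ~6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


if __name__ == "__main__":
    for years in (2, 4, 6):
        demand = compound_growth(750, years)  # transformer training compute (figure from the text)
        hardware = compound_growth(2, years)  # Moore's Law, modeled as 2x every two years
        print(f"{years} years: demand x{demand:,.0f}, hardware x{hardware:,.0f}, gap x{demand / hardware:,.0f}")

    # Hypothetical sizes: doubling either parameters or tokens doubles the estimated compute.
    base = approx_training_flops(7e9, 1e12)
    print(f"{base:.2e} FLOPs")
    print(approx_training_flops(14e9, 1e12) / base, approx_training_flops(7e9, 2e12) / base)
```

Because the estimate is linear in both factors, scaling parameters and data together multiplies compute (and, at fixed hardware efficiency, energy) by the product of the two increases.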

Though there has been a trend toward large, general-purpose models, is it really necessary to write code with the same model that can create Shakespearean sonnets? Salesforce AI Research remains focused on developing domain-specific models, customized to their intended applications.

For example, the team’s CodeGen model, initially released in 2022, was one of the first LLMs to let users “translate” natural language, such as English, into programming languages, such as Python. The latest version, CodeGen 2.5, has been optimized for efficiency through multi-epoch training and FlashAttention. The result is a model that performs as well as larger models at less than half their size. Beyond sustainability, the team found that smaller models are more cost-effective, easier to fine-tune, and faster, which improves the user experience.
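One concrete reason a smaller model is cheaper and faster to serve is that the memory needed just to hold its weights grows linearly with parameter count. The sketch below compares a hypothetical 7-billion-parameter model with a hypothetical 16-billion-parameter one at common numeric precisions. It counts weights only and ignores activations, caches, and runtime overhead, so real memory footprints are larger.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(n_params: float, dtype: str = "fp16") -> float:
    """Memory needed to store the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    small, large = 7e9, 16e9  # hypothetical parameter counts
    for name, n in (("small", small), ("large", large)):
        print(f"{name}: {weight_memory_gb(n):.0f} GB in fp16, {weight_memory_gb(n, 'int8'):.0f} GB in int8")
    print(f"size ratio: {small / large:.2f}")
```

A model that fits on fewer or smaller accelerators, or on a single consumer machine, needs less hardware per request, which is where the cost and speed benefits described above come from.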

Utilizing efficient hardware

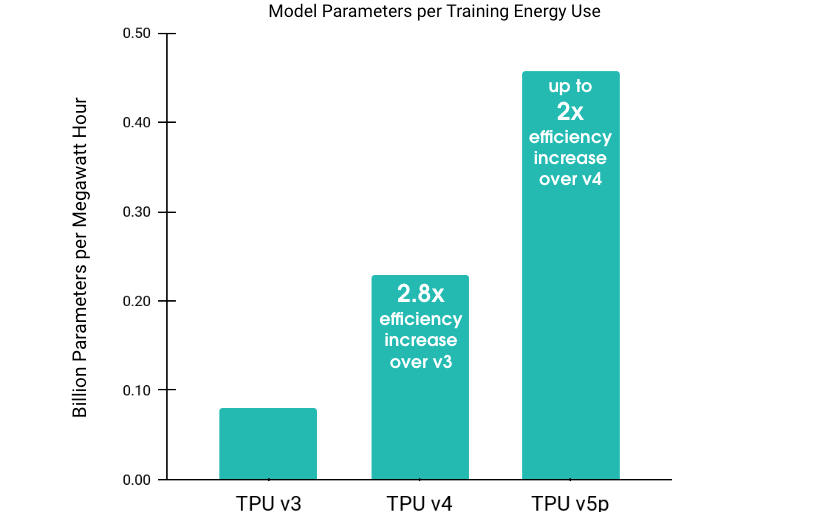

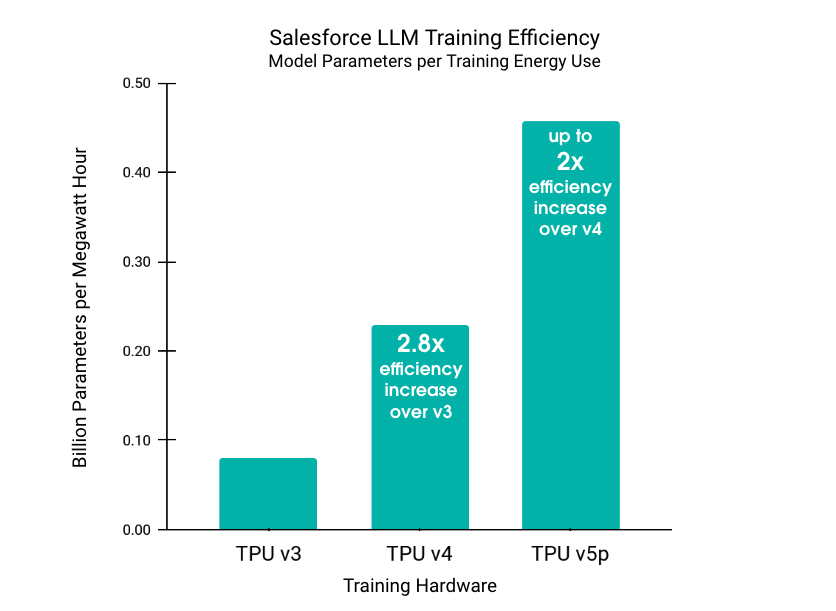

Once the model has been optimized, it’s important to choose efficient hardware to train and deploy AI. AI hardware manufacturers frequently unveil new versions with substantial efficiency improvements. Google’s AI hardware, the Tensor Processing Unit (TPU), has become more efficient with each new generation, and Salesforce AI Research has leveraged these advancements for sustainability. The team’s more recent models are trained on TPU v4, while some older models were trained on TPU v3. Google estimates that TPU v4 is 2.7x more efficient than TPU v3, and the team’s calculations confirmed this claim, showing a 2.8x efficiency increase (measured as the average number of model parameters per unit of training energy consumed). Furthermore, our initial tests have found that the brand-new “TPU v5p outperforms the previous generation TPU v4 by as much as 2x”.
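The efficiency metric described above can be sketched as follows: compute parameters per kilowatt-hour for each training run, average per hardware generation, and take the ratio. The source does not specify the exact averaging, so a simple arithmetic mean is assumed, and the parameter counts and energy figures in the example are hypothetical placeholders rather than Salesforce’s measurements.

```python
from statistics import mean


def params_per_kwh(runs: list[tuple[float, float]]) -> float:
    """Average of (parameters / training energy in kWh) over (n_params, energy_kwh) runs."""
    return mean(n_params / energy_kwh for n_params, energy_kwh in runs)


def efficiency_gain(new_runs: list[tuple[float, float]], old_runs: list[tuple[float, float]]) -> float:
    """How many times more parameters per kWh the newer hardware achieves."""
    return params_per_kwh(new_runs) / params_per_kwh(old_runs)


if __name__ == "__main__":
    # Hypothetical (parameter count, training energy in kWh) pairs for each TPU generation.
    tpu_v3_runs = [(2e9, 40_000.0), (6e9, 100_000.0)]
    tpu_v4_runs = [(7e9, 45_000.0), (16e9, 110_000.0)]
    print(f"TPU v4 vs v3: {efficiency_gain(tpu_v4_runs, tpu_v3_runs):.2f}x")
```

Normalizing by parameter count lets models of different sizes trained on different generations be compared, although it ignores differences in training data volume between runs.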

These efficiency increases are expected to continue. For example, Nvidia claims that their forthcoming H200 GPUs will achieve 18x the performance of the A100 GPUs that are widely used today.

Beyond GPU/TPU hardware specialized for AI, there is also progress in deploying AI on the more traditional CPUs found in consumer-grade computers. CodeGen 2.5 can already be deployed locally, which decreases energy use, increases speed, enhances security, and enables personalization.

Prioritizing low-carbon data centers

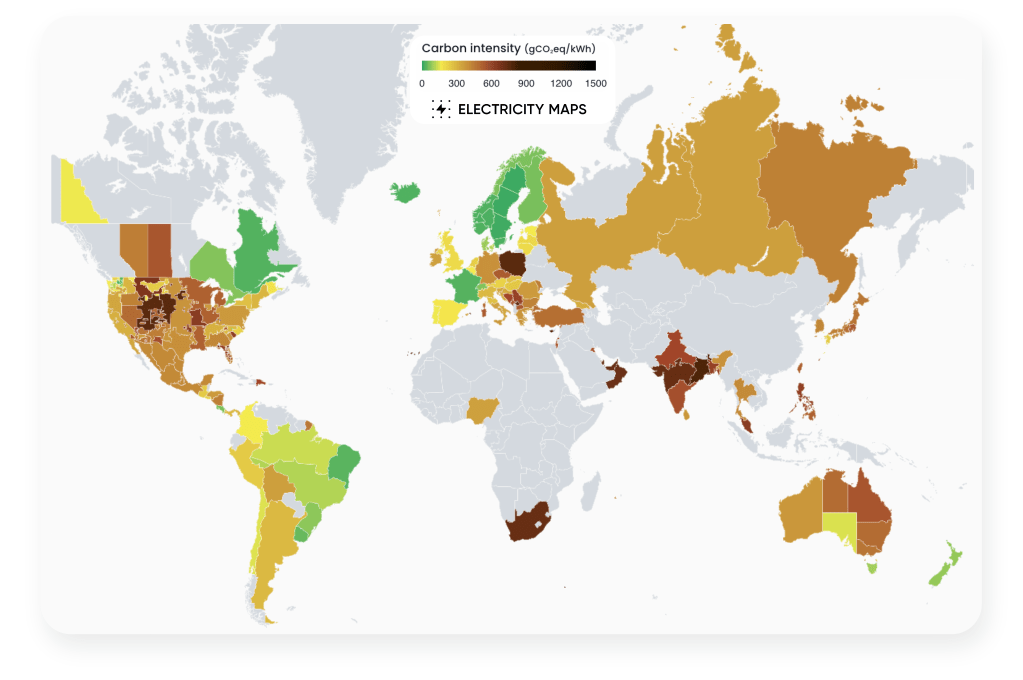

Data centers’ emissions vary widely as they are powered by local electric grids. Depending on the grids’ reliance on fossil fuels, their carbon intensity can vary dramatically region by region (see graphic below).

The varying carbon intensity of the world’s electric grids (source: electricitymaps.com)

With this in mind, it’s important to be deliberate about which data centers are used to train and deploy AI. To reduce our AI model emissions, Salesforce trained its models in lower-carbon data centers, powered by electricity that emits 68.8% less carbon than the global average. This resulted in 105 fewer tons of carbon dioxide equivalents (tCO2e) than if data centers with global average carbon intensity had been used for training.1
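The figures in footnote 1 can be checked with a little arithmetic. Using the stated carbon factors (136 gCO2e/kWh for Salesforce pre-training versus a 436 gCO2e/kWh global average), the sketch below recovers the 68.8% reduction and back-solves the electricity use implied by 105 tCO2e avoided. The implied energy is derived from those numbers, not a figure reported in the source.

```python
SALESFORCE_G_PER_KWH = 136.0  # weighted aggregate carbon factor (footnote 1)
GLOBAL_AVG_G_PER_KWH = 436.0  # global average carbon intensity (footnote 1)
AVOIDED_TCO2E = 105.0         # avoided emissions stated in the text


def percent_reduction(actual: float, baseline: float) -> float:
    """Percentage by which `actual` carbon intensity is below `baseline`."""
    return 100.0 * (1.0 - actual / baseline)


def implied_energy_kwh(avoided_t: float, actual: float, baseline: float) -> float:
    """Energy E (kWh) such that E * (baseline - actual) grams equals the avoided tonnes."""
    return avoided_t * 1e6 / (baseline - actual)


def emissions_t(energy_kwh: float, g_per_kwh: float) -> float:
    """Emissions in tonnes CO2e for a given energy use and grid intensity."""
    return energy_kwh * g_per_kwh / 1e6


if __name__ == "__main__":
    energy = implied_energy_kwh(AVOIDED_TCO2E, SALESFORCE_G_PER_KWH, GLOBAL_AVG_G_PER_KWH)
    print(f"reduction: {percent_reduction(SALESFORCE_G_PER_KWH, GLOBAL_AVG_G_PER_KWH):.1f}%")
    print(f"implied energy: {energy / 1000:.0f} MWh")
    print(f"at 136 g/kWh: {emissions_t(energy, SALESFORCE_G_PER_KWH):.1f} tCO2e")
    print(f"at 436 g/kWh: {emissions_t(energy, GLOBAL_AVG_G_PER_KWH):.1f} tCO2e")
```

The implied energy is about 350 MWh, and at 136 gCO2e/kWh that comes to roughly 47.6 tCO2e, consistent with the aggregate 48 tCO2e pre-training figure reported under Measuring impact.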

Beyond AI model development: Sustainable AI techniques

The team’s AI sustainability approach doesn’t end with the development and deployment of models. As with all Salesforce products, the team works to ensure that its AI products are designed with sustainability at their core, from the architecture to the UX. For instance, when multiple AI models are available, we default to the most efficient and appropriate option for each use case.1
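Defaulting to the most efficient appropriate model can be sketched as a simple selection rule: among models whose capabilities cover the task, pick the one with the lowest energy cost per request. The source does not describe how Salesforce ranks models, so the catalog entries, capability tags, and relative energy costs below are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    capabilities: frozenset[str]
    relative_energy: float  # hypothetical energy cost per request (lower is better)


def pick_default(models: list[ModelOption], required: set[str]) -> ModelOption:
    """Return the lowest-energy model whose capabilities include every required one."""
    suitable = [m for m in models if required <= m.capabilities]
    if not suitable:
        raise ValueError(f"no model supports {sorted(required)}")
    return min(suitable, key=lambda m: m.relative_energy)


if __name__ == "__main__":
    # Hypothetical catalog: one large general model and two smaller specialized ones.
    catalog = [
        ModelOption("large-general", frozenset({"chat", "code", "summarize"}), 10.0),
        ModelOption("small-code", frozenset({"code"}), 1.0),
        ModelOption("medium-summarize", frozenset({"summarize", "chat"}), 3.0),
    ]
    print(pick_default(catalog, {"code"}).name)               # small-code
    print(pick_default(catalog, {"chat", "summarize"}).name)  # medium-summarize
    print(pick_default(catalog, {"code", "summarize"}).name)  # large-general
```

The large general-purpose model is only chosen when no smaller model covers the request, which mirrors the domain-specific approach described under Optimizing models.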

Additionally, prompt engineering techniques built into Prompt Builder optimize for customer satisfaction, cost, and environmental sustainability, ensuring our AI systems deliver top results with minimal resource usage. By caching both prompts and outputs so that responses to common queries can be stored and reused, the team significantly cuts down on repetitive computation, which speeds up response times and reduces energy consumption. The team also builds mechanisms like usage tracking and rate limits into its Einstein 1 Platform, built on Hyperforce, to promote mindful, responsible, and secure use of our AI systems.
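Prompt Builder’s internals are not described here, so the following is only a generic sketch of the two mechanisms the paragraph names: a least-recently-used cache that returns a stored response when a prompt repeats, and a token-bucket rate limiter. The class names, the whitespace normalization used for cache keys, and the `fake_model` stand-in are hypothetical. A production cache would also need expiry and should only reuse answers that don’t depend on per-user context.

```python
import time
from collections import OrderedDict
from typing import Callable


class ResponseCache:
    """LRU cache that reuses a stored response when the same prompt comes in again."""

    def __init__(self, generate: Callable[[str], str], max_entries: int = 1024):
        self._generate = generate  # the expensive model call, supplied by the caller
        self._store: "OrderedDict[str, str]" = OrderedDict()
        self._max = max_entries
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Collapse runs of whitespace so trivially different prompts share one entry.
        return " ".join(prompt.split())

    def get(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            self._store.move_to_end(key)  # mark as most recently used
            return self._store[key]
        self.misses += 1
        response = self._generate(prompt)  # the model only runs on a cache miss
        self._store[key] = response
        if len(self._store) > self._max:
            self._store.popitem(last=False)  # evict the least recently used entry
        return response


class TokenBucket:
    """Rate limiter allowing `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self._clock = clock
        self._tokens = capacity
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never above capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False


if __name__ == "__main__":
    calls: list[str] = []

    def fake_model(prompt: str) -> str:  # hypothetical stand-in for an LLM call
        calls.append(prompt)
        return f"answer to: {prompt.strip()}"

    cache = ResponseCache(fake_model)
    cache.get("What is Hyperforce?")
    cache.get("What  is   Hyperforce? ")  # same prompt after whitespace normalization
    print(cache.hits, cache.misses, len(calls))  # prints: 1 1 1
```

Injecting the clock into `TokenBucket` keeps it deterministic to test; in production the default monotonic clock would be used.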

Measuring impact

Incorporating these strategies into the AI Research team’s development approach has resulted in significantly lower-carbon AI models. To understand these impacts, the team calculated the energy and carbon emissions of model pre-training, by far the most impactful stage of the AI model lifecycle:

Salesforce AI Model Pre-Training Environmental Impacts

In aggregate, pre-training these models resulted in 48 tCO2e: 11 times less than the emissions of GPT-3. Beyond the training phase, the ongoing usage impact of our models has been disclosed in the Hugging Face and ML.Energy leaderboards.
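Footnotes 2 and 3 describe how energy and carbon were estimated for each training run. The sketch below restates those two multiplications. It assumes training time is expressed in processor-hours (summed across all chips) and TDP in watts; every input in the example run is hypothetical.

```python
def training_energy_kwh(processor_hours: float, tdp_watts: float, pue: float) -> float:
    """Energy = training time x processor TDP x data center PUE (footnote 2)."""
    return processor_hours * tdp_watts * pue / 1000.0  # Wh -> kWh


def training_carbon_t(energy_kwh: float, grid_g_per_kwh: float) -> float:
    """Carbon = energy x grid carbon intensity (footnote 3), returned in tonnes CO2e."""
    return energy_kwh * grid_g_per_kwh / 1e6  # grams -> tonnes


if __name__ == "__main__":
    # Hypothetical run: 256 chips for 10 days at 200 W TDP, PUE 1.1, grid at 136 gCO2e/kWh.
    chip_hours = 256 * 24 * 10
    energy = training_energy_kwh(chip_hours, tdp_watts=200, pue=1.1)
    print(f"{energy:,.0f} kWh, {training_carbon_t(energy, 136):.2f} tCO2e")
```

Using TDP rather than measured power draw gives an upper-bound style estimate, which matches the conservative assumption noted in footnote 2; per footnote 4, embodied hardware emissions are outside this calculation.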

The team believes that transparency is key to Trusted AI and remains dedicated to sharing its environmental impacts and lessons learned, hoping to see other actors in the AI ecosystem follow suit. To follow along as we progress on this journey, check out AI Research updates and explore the models on Hugging Face and GitHub.

A holistic approach to sustainability

Salesforce’s commitment to sustainability is not just about reducing impact; it’s about leveraging AI to create tangible environmental benefits. Innovations in AI are enhancing ESG reporting within our Net Zero Cloud, illustrating the growing intersection between technology and corporate sustainability. CodeGen, which powers Einstein for Developers and Einstein for Flow, enhances code efficiency, with third-party research indicating a 2.5x improvement. This enhanced efficiency extends to Salesforce’s customers as well, with AI-powered tools enabling them to analyze and automatically reduce the carbon emissions of their Apex code.

Additionally, the environment is a key focus area for the AI Research team. The team is pioneering AI for Global Climate Cooperation, using AI to craft negotiation protocols and climate agreements that could shape future environmental policies. Its ProGen AI language model, designed to generate new artificial proteins, is also making strides in the development of compostable materials from carrot starches. Another notable project is SharkEye, which employs AI to detect great white sharks, contributing to a vital database for scientists, conservationists, and local communities.

Learn more

- Check out this blog to dive deeper into how Salesforce responsibly develops AI.


___________________________________

1 Salesforce trained its models in data centers whose electricity had 68.8% lower carbon intensity than the global average. The weighted aggregate carbon factor for Salesforce model pre-training is 136 gCO2e/kWh. The 68.8% reduction and 105 tCO2e avoided are relative to the 436 gCO2e/kWh global average (source).

2 Energy is calculated by multiplying training time, processor TDP (thermal design power, provided by the processor manufacturer and used as a conservative assumption), and data center PUE (power usage effectiveness, provided by the data center owner).

3 Carbon is calculated by multiplying energy by electric grid carbon intensity (provided by data center owner).

4 Carbon emissions due to electricity only. While embodied carbon data is not currently provided by manufacturers, we hope to expand the calculation scope in the future.

5 Through a combination of renewable energy procurement and high-quality carbon credits, all residual emissions are entirely offset by Salesforce and/or our suppliers.