By Vera Vetter, Zeyuan Chen, Ran Xu, and Scott Nyberg

In our “Engineering Energizers” Q&A series, we examine the professional journeys that have shaped Salesforce Engineering leaders. Meet Vera Vetter, Product Management Director for Salesforce AI Research and a co-Product Manager for Einstein for Flow, a game-changing AI product that is revolutionizing Salesforce workflow automation. Vera works closely with several teams across Salesforce to identify customer needs, define product goals, meet milestones, and deliver value to users.

Read on to learn how Vera and her team successfully drive cross-team collaborative development to solve highly complex AI developmental challenges.

How would you describe Einstein for Flow?

Einstein for Flow is a groundbreaking generative AI tool that leverages large language models (LLMs) to power process automation across various Salesforce products. The tool enables Salesforce admins, regardless of their programming experience, to easily create functional Salesforce Flows via natural language prompts. This removes the need to perform manual configuration, significantly streamlines and scales the Flow automation process, and educates users on Flow creation.

A look at Einstein for Flow’s generative experience in action.

Which Salesforce teams helped develop Einstein for Flow?

To create Einstein for Flow, four specialized Salesforce teams brought their unique expertise, collaborating closely as a cross-cloud Scrum team to complete the project in just nine months:

- Automation and Integration team: This team maintains Flow Builder, where Einstein for Flow resides. They defined user requirements, tackled automation challenges, and played a pivotal role in driving the project’s development. Additionally, they led data acquisition and labeling efforts, which helped the AI model learn and efficiently generate Flows.

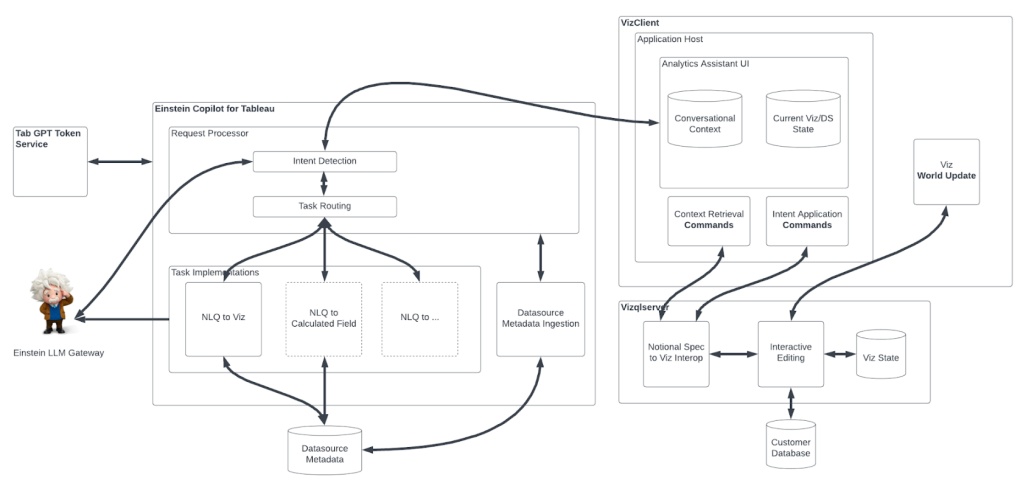

- Marketing Cloud Einstein team: This team was responsible for delivering the feature and building the entire backend application of Einstein for Flow from the ground up. Leveraging their significant engineering experience, they defined the overall system architecture. This involved building the complex platform app service and Marketing Cloud’s Einstein LLM service which, in turn, interfaces with the LLM Gateway.

- AI Platform team: Drawing on their experience hosting and serving in-house AI applications and LLMs, this team powered the robust performance of Einstein for Flow in production. They also played a central role in optimizing the LLM serving stack, delivering higher throughput and lower latency.

- AI Research team: Specializing in generative AI development, this team built Einstein for Flow’s AI capabilities from scratch, including the in-house LLM that powers Einstein for Flow.

During development, the teams worked together through iterative feedback loops, shared stand-ups, coordinated planning, demos, and more. This drove rapid iteration and let the teams fold feedback into the development process quickly.

Vera shares her experience working at Salesforce and supporting Einstein for Flow.

What were the biggest challenges for developing Einstein for Flow?

As the team developed Einstein for Flow, they addressed several key issues:

- Understanding user-specific flow-building requests. During development, the team learned that users describe their process automation requirements in many different ways. In response, Einstein for Flow’s generative AI engine was designed to be foundational, enabling it to address a wide range of process automation requirements across varied scenarios.

- Data availability. Data remains crucial for creating effective generative AI models. However, unlike publicly available data, which is used in generic LLM-based chatbots, enterprise-built Flow data is limited. The team effectively resolved this issue by closely collaborating with internal Flow experts and practitioners, who provided relevant flow data and natural language descriptions of processes that users would want to automate. This highlighted, for example, the diverse ways users described similar requirements in their prompts.

- Innovating new generative AI. Salesforce internally developed the entire generative AI stack, including the large language model — a considerable challenge given the state of the generative AI industry.

Vera highlights why engineers should join Salesforce.

What was the process for training Einstein for Flow?

The training process involved two key stages: pre-training and instructional fine-tuning.

During the pre-training stage, a massive model was trained on a huge dataset. This enabled the team to build a foundational model that served as a launching point for additional development.

During the instructional fine-tuning stage, the team improved the model’s understanding of user commands by fine-tuning it on expert-crafted pairs of prompts and Flow metadata. The result is a more intuitive AI that can quickly create precise Flow metadata from user instructions. This fine-tuning improves the accuracy of the generated Flows, making the model a more reliable tool for users seeking efficient Flow construction.
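To make the idea of prompt and Flow metadata pairs concrete, here is a minimal sketch of how one such pair might be turned into a training record. Everything in it is an assumption for illustration: the `FlowExample` class, the `to_training_record` helper, the text template, and the simplified metadata keys are hypothetical and do not reflect the team's actual data format or the real Flow metadata schema.

```python
import json
from dataclasses import dataclass


@dataclass
class FlowExample:
    """One hypothetical fine-tuning pair (not the team's real format)."""
    prompt: str          # natural language description of the automation
    flow_metadata: dict  # target Flow definition; keys here are illustrative


def to_training_record(example: FlowExample) -> dict:
    """Serialize a pair into an input/target text record for fine-tuning."""
    return {
        "input": f"Instruction: {example.prompt}\nFlow metadata:",
        # sort_keys keeps the target text stable across runs
        "target": json.dumps(example.flow_metadata, sort_keys=True),
    }


# Illustrative pair; the metadata shape is made up for this sketch.
example = FlowExample(
    prompt="When an Opportunity is marked Closed Won, create a follow-up Task.",
    flow_metadata={
        "trigger": {"object": "Opportunity", "event": "record_updated"},
        "actions": [{"type": "create_record", "object": "Task"}],
    },
)

record = to_training_record(example)
print(record["input"])
print(record["target"])
```

Keeping the target as serialized structured text means a generated Flow can be parsed and validated before it is shown to a user, which is one plausible reason to train on metadata rather than free-form descriptions.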

How does the team incorporate customer feedback to improve Einstein for Flow?

Customer feedback plays a key role in continually enhancing Einstein for Flow. The team implemented a straightforward mechanism that lets users quickly rate the results they receive. For example, if Einstein for Flow generates the Flow the user asked for, the user can click a thumbs-up button. If the model misses the mark, the user can click thumbs-down and provide free-form feedback, which helps the team understand what went wrong.

Using this feedback, along with implicit performance data, the team examines the specifics of each prompt to determine where Einstein for Flow may have misunderstood the user’s intent. This analysis is pivotal for supporting the program’s continuous improvement goals.

This thumbs-up/thumbs-down process also helps the team identify and eliminate model hallucinations, which are instances where the model delivers incorrect or irrelevant outputs.
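The feedback loop described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Feedback` record, its field names, and the `thumbs_up_rate` and `prompts_to_review` helpers are hypothetical, and the sample entries are invented; the source does not describe how feedback is actually stored or analyzed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Feedback:
    """Hypothetical record of one user rating on a generated Flow."""
    prompt: str
    thumbs_up: bool
    comment: Optional[str] = None  # free-form text, typically on thumbs-down


def thumbs_up_rate(items: list[Feedback]) -> float:
    """Share of generations that users rated positively."""
    if not items:
        return 0.0
    return sum(f.thumbs_up for f in items) / len(items)


def prompts_to_review(items: list[Feedback]) -> list[Feedback]:
    """Thumbs-down entries, which a team would inspect prompt by prompt."""
    return [f for f in items if not f.thumbs_up]


# Invented sample data for the sketch.
feedback = [
    Feedback("Create a Task when a Case is closed", thumbs_up=True),
    Feedback("Email the owner when a Lead is created", thumbs_up=True),
    Feedback("Update Account rating on big deals", thumbs_up=False,
             comment="Flow updated the wrong field"),
    Feedback("Show a screen to collect contact info", thumbs_up=True),
]

print(thumbs_up_rate(feedback))
for item in prompts_to_review(feedback):
    print(item.prompt, "->", item.comment)
```

Pairing each rating with the original prompt is what makes the later analysis possible: the thumbs-down entries point directly at the wording the model may have misread.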

Einstein for Flow’s customer feedback mechanism in action.

Looking ahead, what is the team’s roadmap for Einstein for Flow?

We are very excited to have just launched the pilot for Einstein for Flow, which supports standard objects, record-triggered Flows, and screen Flows. Next, we will expand Flow coverage by incorporating custom objects and fields and by increasing the diversity and complexity of the Flows covered.

Following that, we plan to release “Flow evolution”, an editing capability that lets users modify their Flows through a dynamic, chat-like experience. This feature will support both manually created Flows and Flows built with Einstein for Flow. The conversational interaction will be multi-turn, meaning that users will be able to add or remove elements over successive messages.

Learn more

- Hungry for more AI stories? Read this blog to learn how Salesforce engineers built the model for Einstein for Developers, a set of advanced AI tools for improving how developers write code.

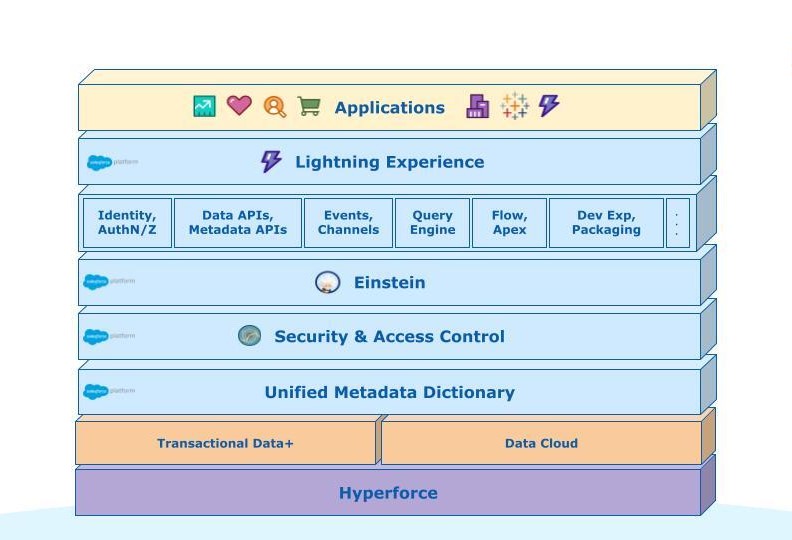

- Explore the new Einstein Copilot, a conversational AI assistant for CRM that uses company data to generate trusted responses and automate tasks.

- Stay connected — join our Talent Community and check out our Technology and Product teams to learn how you can get involved.