By Vivienne Wei, Geeta Shankar, and Shiva Nimmagadda.

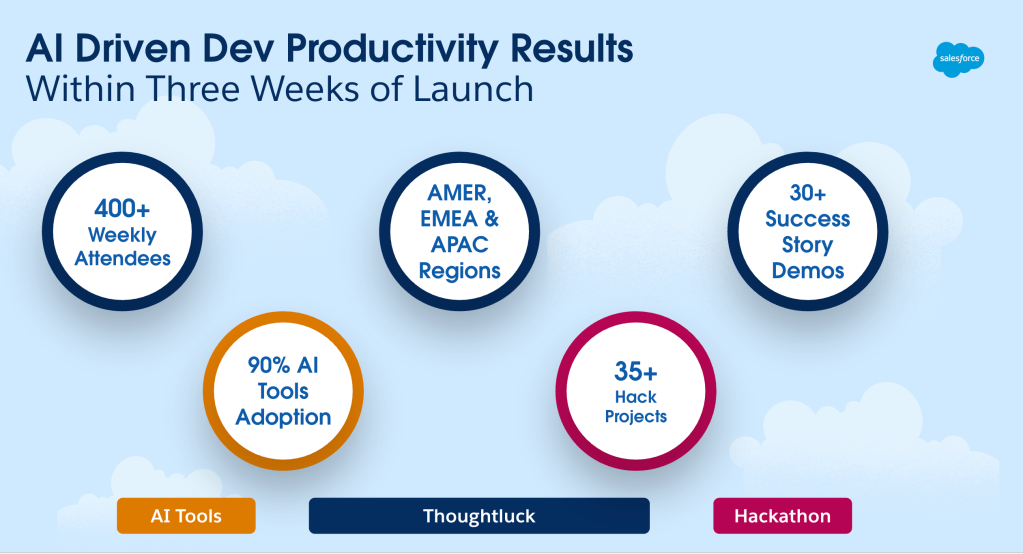

At Salesforce Engineering, AI isn’t a side project; it’s a new way of working. The team recently launched a company-wide productivity shift by setting a new AI tooling baseline for all development teams. Rather than treating AI as optional or experimental, teams were directed to integrate AI tools into their daily workflows. The result: adoption passed 90% across six major engineering clouds within three weeks and held there for six more consecutive weeks, with thousands of engineers using the tools consistently and seeing measurable improvements in development speed.

Here’s a closer look at the systems, metrics, and use cases that drove this transformation, highlighting how AI tooling was operationalized at scale.

Establishing a New AI Baseline

To move AI from isolated experimentation to an engineering-wide standard, Salesforce Engineering introduced a formal productivity baseline. Cursor and CodeGenie were designated as the default coding agents, with GitHub Copilot and Gemini integrated alongside them. Agentforce-based Slack agents were deployed to reduce repetitive questions across teams.

These tools were incorporated into both front-end and back-end workflows. For instance, Gemini supported infrastructure scaffolding and documentation summarization, while Cursor handled code editing, test generation, and large-scale migration support. Agentforce agents such as Engineering Agent handled most knowledge and support scenarios on Slack. The directive emphasized rapid prototyping, AI-assisted problem solving, and prompt chaining as essential skills for modern development at Salesforce.

Geeta dives deeper into the AI tools that boost her team’s productivity.

Measuring What Matters: Instrumenting Adoption with Engineering 360

To gauge the impact of AI tools, Salesforce Engineering expanded the scope of Engineering 360 to track AI adoption. The metrics they focused on included:

- Token Usage Volume: Measured per user and organization to understand how frequently AI tools were being used.

- Tool-Specific Activity: Signals such as the number of Cursor prompts, AI-influenced code acceptances, and Copilot engagement.

- Adoption Heatmaps: Visualizations of active versus inactive clusters within each cloud, showing where AI adoption was thriving and where it lagged.

- Slack Agent Interactions: Agentforce-based bots logged over 18,000 queries, showing how much these tools were reducing repetitive tasks.

The dashboards provided insights into usage patterns and highlighted top-performing teams. This allowed engineering leadership to pinpoint areas that needed more support or additional training. By treating token-based metrics as part of the standard performance dashboards, Salesforce ensured that AI adoption was not just a cultural shift but a measurable engineering outcome.
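
The per-user, per-org, and per-tool token metrics above reduce to simple group-by aggregations, and heatmap labels can be derived from the share of each org's engineers with any recorded usage. The sketch below is a minimal illustration, not Engineering 360's actual implementation: the `UsageEvent` record, its field names, and the 50% "active" cutoff are hypothetical assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageEvent:
    """One hypothetical AI-tool usage record (field names are illustrative)."""
    user: str
    org: str
    tool: str    # e.g. "cursor", "copilot", "gemini"
    tokens: int


def sum_tokens_by(events, key):
    """Total tokens grouped by one UsageEvent attribute: "user", "org", or "tool"."""
    totals = defaultdict(int)
    for e in events:
        totals[getattr(e, key)] += e.tokens
    return dict(totals)


def classify_orgs(events, headcount, active_threshold=0.5):
    """Label each org "active" or "inactive" for a heatmap.

    An org counts as active when the share of its engineers with any
    recorded token usage meets active_threshold (an assumed cutoff).
    """
    users_by_org = defaultdict(set)
    for e in events:
        if e.tokens > 0:
            users_by_org[e.org].add(e.user)
    labels = {}
    for org, total in headcount.items():
        share = len(users_by_org[org]) / total if total else 0.0
        labels[org] = "active" if share >= active_threshold else "inactive"
    return labels
```

Keeping aggregation separate from labeling means the same event stream can feed both the token-volume dashboards and the heatmap without recomputing anything upstream.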

Increasing Cursor Adoption

The shift drove a rapid increase in AI tool adoption: Cursor usage grew 300% in just a few weeks, and more than 475 engineers exceeded their default token limits and were upgraded to higher tiers. GitHub Copilot usage followed a similar trend, as did Gemini prompt activity in supported organizations.

All six major engineering clouds (Data Cloud, Platform, Agentforce, MuleSoft, Tableau, and Heroku) reached over 90% engagement, with daily usage reflected in consistent prompt volumes and shifting acceptance rates.
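
For readers reproducing figures like these, engagement is read here as the share of a cloud's engineers with recorded AI-tool usage, and a 300% increase, taken literally, means usage reached four times its starting level rather than three. The helpers below are a hypothetical sketch under that assumed definition; the function names and the sample counts in any usage are illustrative, not Salesforce data.

```python
def engagement_rate(active_engineers: int, total_engineers: int) -> float:
    """Fraction of a cloud's engineers with recorded AI-tool usage (assumed definition)."""
    if total_engineers <= 0:
        raise ValueError("total_engineers must be positive")
    return active_engineers / total_engineers


def percent_growth(before: float, after: float) -> float:
    """Relative growth in percent; 300% growth means after == 4 * before."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (after - before) / before * 100.0


def clouds_meeting_target(counts, target=0.90):
    """Return, sorted by name, the clouds whose engagement is strictly over target.

    counts maps a cloud name to an (active_engineers, total_engineers) pair.
    """
    return sorted(
        cloud
        for cloud, (active, total) in counts.items()
        if engagement_rate(active, total) > target
    )
```

The strict `>` comparison mirrors the "over 90%" wording; a cloud sitting at exactly 90% would not be counted.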

Operational Use Cases Across Teams

As AI adoption grew, several key workflows emerged, showcasing the tools’ versatility and impact:

- Test Automation and Q4 Readiness: Cursor helped teams auto-generate Q4 performance test templates. These templates were shared across orgs, cutting setup time and avoiding redundant work. Standard prompts were also created to streamline test cases that previously took significant manual effort.

- Migration Workflows: During internal migrations, teams used Cursor to rewrite Splunk dashboards in Trino. AI-assisted code scaffolding made the process much faster, especially when dashboards needed to be restructured syntactically while keeping their original logic. Without AI, these rewrites would have taken days of manual work.

- Selenium Script Generation: Functional testing teams relied on Cursor to generate baseline Selenium scripts from spec files. This sped up regression testing and made it easier to onboard new test cases.

- AI-Driven Documentation and Summarization: Gemini proved especially valuable for summarizing complex documentation, drafting Jira tickets, and updating outdated PR descriptions. Teams even chained several Gemini prompts together to build comprehensive summaries spanning multiple performance reports.

- Agentforce-Based Developer Support Agents: Salesforce rolled out Agentforce-based Slack bots that were integrated with Engineering 360 data. These bots answered FAQs, looked up engineering policies, and provided best practices for AI tools. This reduced repetitive questions and made onboarding smoother for new developers.
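
Prompt chaining, as used above for Gemini summaries of multiple performance reports, feeds the output of one prompt into the next. A minimal two-stage sketch follows: summarize each report, then merge the partial summaries. The `complete` callable is a hypothetical stand-in for any LLM client (no specific Gemini API is assumed), and the prompt wording is illustrative.

```python
from typing import Callable, Sequence

# Hypothetical model interface: takes a prompt string, returns the model's text.
Complete = Callable[[str], str]


def summarize_reports(reports: Sequence[str], complete: Complete) -> str:
    """Two-stage prompt chain: summarize each report, then merge the summaries."""
    # Stage 1: one prompt per report, each producing a short summary.
    partials = [
        complete(f"Summarize the key findings of this performance report:\n\n{r}")
        for r in reports
    ]
    # Stage 2: the stage-1 outputs become the input of a single consolidation prompt.
    joined = "\n\n".join(
        f"Report {i + 1} summary:\n{s}" for i, s in enumerate(partials)
    )
    return complete(
        "Combine these summaries into one overview, noting regressions "
        f"and improvements that appear across reports:\n\n{joined}"
    )
```

Passing `complete` in as a parameter keeps the chain testable with a stub model and lets a team swap providers without touching the chaining logic.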

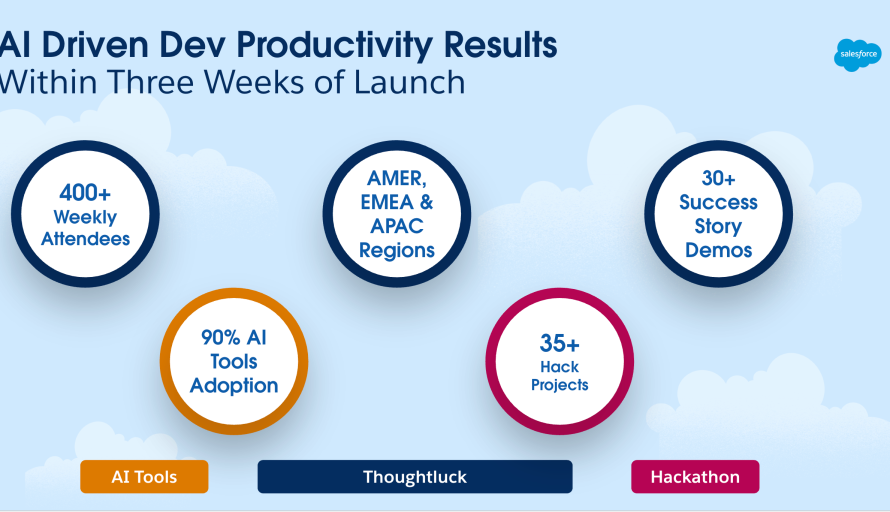

Bringing Repeatable Patterns Through the AI Productivity Thoughtluck

To speed up knowledge transfer, Salesforce launched an internal demo series called the AI Productivity Thoughtluck. Engineers from various orgs presented short, technical demos showing how they integrated AI into their workflows. In the first session:

- Six distinct use cases were showcased by six different teams.

- Over 400 engineers attended.

- Follow-up discussions on Slack led to multiple teams reusing shared prompt patterns and scripts.

The Thoughtluck sessions highlighted reusable solutions for common tasks, such as generating test plans, rewriting legacy dashboards, converting documentation to structured data, and summarizing architecture decisions. These insights are now forming the foundation of emerging internal playbooks.

From Local Adoption to Peer-Driven Scale

The adoption of AI tools wasn’t driven by top-down enforcement alone but also by peer behavior and engineering curiosity. Junior developers who started experimenting with Cursor early on became internal enablers, helping their teammates with prompt-writing strategies, debugging flows, and creating repeatable prompt chains.

In multiple teams, AI power users emerged naturally. They tracked performance, iterated on prompt templates, and maintained internal knowledge bases through Slack recordings and shared documents. The tools gained traction not merely because they were mandated, but because they significantly improved workflow efficiency.

Productivity Gains: What 90% Adoption Looks Like

With more than 90% of engineers actively using AI tools, the organization began to see significant changes in how work got done:

- Development Velocity: Cursor was used to generate boilerplate logic, allowing engineers to focus on higher-order architecture and more complex tasks.

- Testing Throughput: AI-generated test templates standardized Q4 readiness, reducing test case duplication and ensuring consistency across teams.

- Workflow Acceleration: Slack agents handled over 18,000 queries, helping to break down knowledge silos and reduce bottlenecks.

- Code Quality: Prompt chaining improved refactor coverage by suggesting additional test cases and edge-path logic, leading to more robust code.

Ultimately, rising token consumption and AI usage went hand in hand with faster development and more standardized processes.

Looking Ahead: Toward Standardized AI Development Playbooks

Salesforce Engineering is now entering the next phase: formalizing reusable playbooks. These playbooks include:

- Prompt Templates: For performance testing and CI/CD scripts.

- Migration Blueprints: For internal tooling shifts.

- Policy Guides: For secure and trust-centric AI integration.

Although still in the early stages, these playbooks mark a transition from experimentation to operational maturity. The goal is to minimize redundant work, streamline onboarding, and boost engineering output without compromising trust or code quality.
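
Shared prompt templates are one way to make the standard test-generation prompts described earlier reusable across teams. The sketch below uses Python's standard-library `string.Template`; the template text, placeholder names, and the `render_prompt` helper are hypothetical examples, not an actual Salesforce playbook.

```python
from string import Template

# Hypothetical shared template for generating performance tests.
PERF_TEST_PROMPT = Template(
    "Generate a $framework performance test for the $service service.\n"
    "Target load: $rps requests per second for $duration_min minutes.\n"
    "Fail the test if p95 latency exceeds $p95_ms ms."
)


def render_prompt(template: Template, **fields: object) -> str:
    """Fill a shared prompt template, failing loudly on missing fields."""
    # substitute() raises KeyError if any placeholder is left unfilled,
    # so an incomplete prompt never reaches the model.
    return template.substitute(**fields)
```

`Template.substitute` raises `KeyError` for a missing placeholder, whereas `safe_substitute` would silently leave `$name` in the output; failing fast is usually the safer choice for prompts that feed CI/CD jobs.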

Salesforce’s approach to AI tooling wasn’t about novelty; it was about operational scale, instrumentation, and sustained engineering engagement. By tying AI adoption to measurable metrics and real developer workflows, teams made these tools part of their daily work rather than treating them as standalone extras.

As usage continues to grow and playbooks become standardized, AI is now seen as a core part of the engineering infrastructure, not just an add-on.
