In our “Engineering Energizers” Q&A series, we highlight the innovators driving the future of enterprise technology. Today, we feature Adam Evans, Executive Vice President and General Manager of the Salesforce AI Platform. Adam leads product management for Agentforce, an advanced AI orchestration layer designed to integrate intelligent agents into real-world business environments. His deep engineering mindset, shaped by years of hands-on technical leadership, guides his approach.

Dive into how Adam’s team managed the shift to probabilistic AI, guided engineers through unlearning decades of legacy practices, and engineered a flexible, multi-model orchestration system that balances adaptability with rigorous testing and control.

What is your team’s mission with Agentforce, and how is it architected to handle enterprise-grade AI use cases?

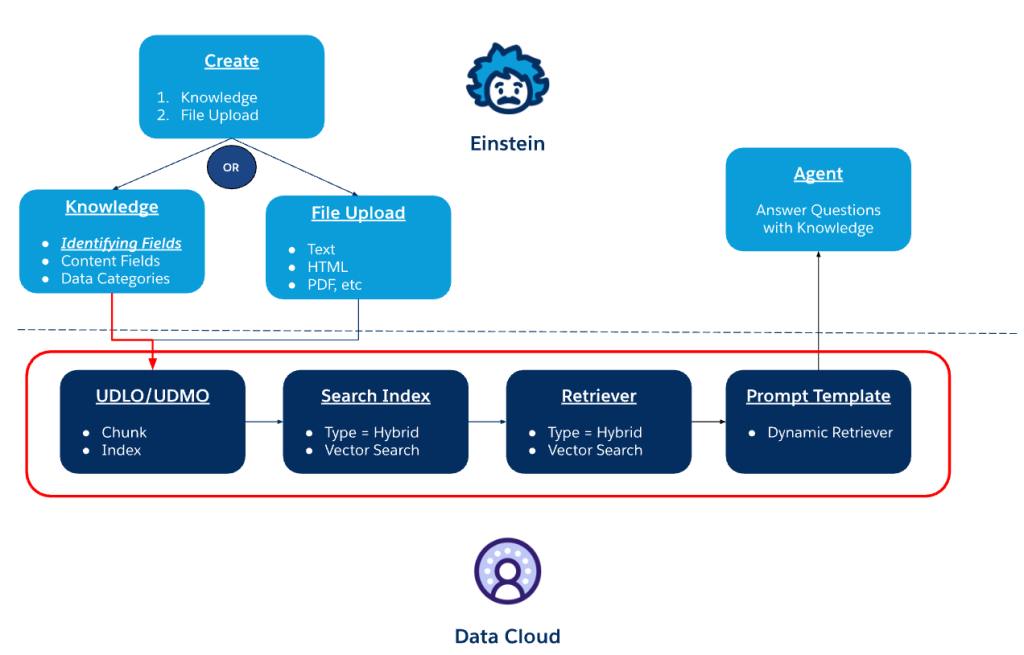

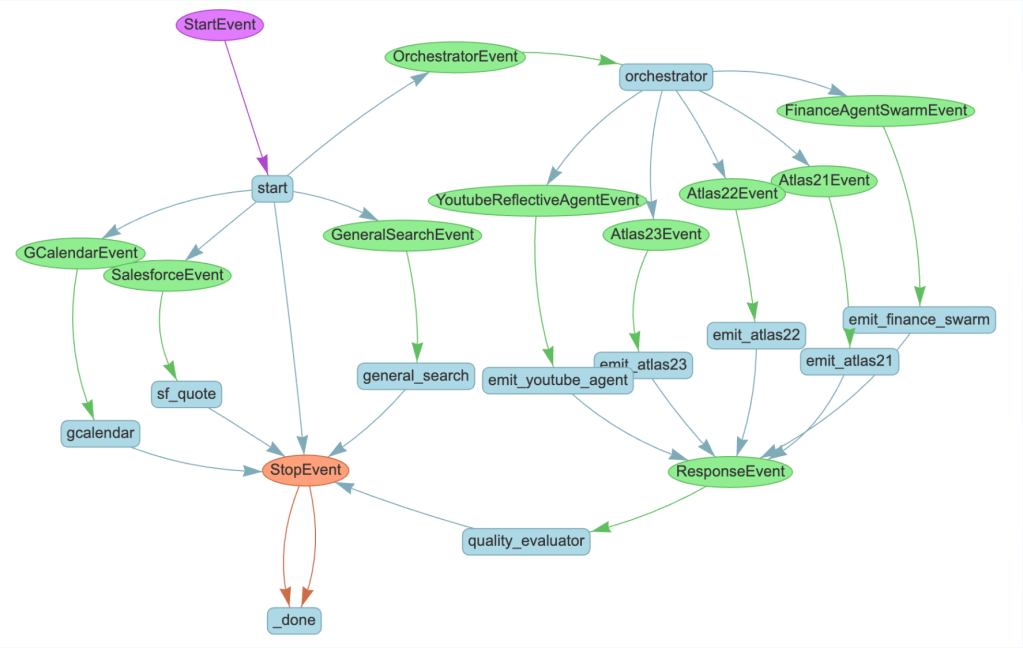

The mission with Agentforce is to reimagine how AI agents can operate across real-world enterprise environments—at scale, with trust, and with a direct impact on productivity. Agentforce sits at the orchestration layer, connecting user inputs to business logic and back-end data while leveraging multiple AI models under the hood. Architecturally, the design focuses on modularity and interoperability. Soon, different agents will have the flexibility to use different models — some excel at reasoning, others at summarization or coding — and Agentforce will coordinate these in a flexible, plug-and-play fashion. This abstraction layer allows for the swapping of models as the landscape evolves, while maintaining consistent application behavior.

The goal is not just to build one-off LLM proofs of concept, but to deploy LLMs into mission-critical customer environments, such as auto manufacturers requiring AI-driven search across thousands of PDF manuals. The task is to transform raw model capability into structured, governed, and auditable business outcomes. The result is a system that supports real user workflows while keeping the architecture adaptable, performant, and secure.

What’s been the most challenging technical hurdle in developing Agentforce?

One of the most significant challenges in developing Agentforce has been the transition from deterministic software to probabilistic, non-deterministic systems. In traditional engineering, predictability is key — code is written, executed, and debugged when issues arise. However, with AI, the flexibility that makes the technology powerful also introduces variability, which can be unsettling for engineers accustomed to hardcoded logic. To tackle this, the team has had to fundamentally rethink the approach to building, testing, and evaluating systems.

Instead of fixing bugs with simple code changes, the team relies on comprehensive evaluation suites that assess agent performance across various dimensions, such as instruction-following, accuracy, latency, and cost. These evaluation suites help identify regression points and set quality thresholds, even with the unpredictable nature of AI outputs.
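To make the idea of quality thresholds and regression points concrete, here is a minimal sketch of such a check. It is not Agentforce's actual evaluation code: the `EvalResult` fields, the threshold values, and the 2% tolerance are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalResult:
    """Aggregate metrics from one evaluation run (field names are hypothetical)."""
    instruction_following: float  # fraction of cases where instructions were followed
    accuracy: float               # fraction of correct answers
    p95_latency_s: float          # 95th percentile latency in seconds
    cost_per_task_usd: float      # average cost per task


# Hypothetical quality bar: "min" metrics must stay above the limit,
# "max" metrics must stay below it.
THRESHOLDS = {
    "instruction_following": ("min", 0.95),
    "accuracy": ("min", 0.90),
    "p95_latency_s": ("max", 3.0),
    "cost_per_task_usd": ("max", 0.02),
}


def failed_thresholds(result: EvalResult) -> list[str]:
    """Return the metrics that miss the absolute quality bar."""
    failures = []
    for metric, (kind, limit) in THRESHOLDS.items():
        value = getattr(result, metric)
        if (kind == "min" and value < limit) or (kind == "max" and value > limit):
            failures.append(metric)
    return failures


def regressions(baseline: EvalResult, candidate: EvalResult,
                tolerance: float = 0.02) -> list[str]:
    """Return metrics where the candidate is worse than the baseline
    by more than the relative tolerance (an assumed 2% by default)."""
    regressed = []
    for metric, (kind, _) in THRESHOLDS.items():
        old, new = getattr(baseline, metric), getattr(candidate, metric)
        if kind == "min" and new < old * (1 - tolerance):
            regressed.append(metric)
        elif kind == "max" and new > old * (1 + tolerance):
            regressed.append(metric)
    return regressed
```

Separating the absolute bar (`failed_thresholds`) from the relative comparison (`regressions`) lets a change be flagged even when it still clears the bar but is measurably worse than the previous version.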

Another major hurdle was learning to approach familiar problems from a different angle. For instance, rather than spending months developing a complex UI for query building, the focus shifted to leveraging an LLM to infer SQL from natural language. This shift in mindset, which means challenging default engineering instincts, has been crucial for accelerating innovation.

How are you building out the Agent Development Lifecycle (ADLC), and what parallels does it share with traditional software development?

The ADLC is an internal framework designed to treat AI agents as first-class software constructs, with all the rigor and discipline expected by engineers. Proven concepts from traditional software development have been borrowed and adapted to the unique behavior of AI systems. The foundation starts with evaluation harnesses. For each agent, use-case-specific evaluation criteria are defined, and agents are run through test suites to measure performance against these criteria. Some evaluations test model behavior in isolation, while others assess how the agent performs across an entire workflow. Given the non-deterministic nature of LLMs, multiple iterations are run, and statistical scoring is used, rather than just pass/fail checks.
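One way to picture statistical scoring in place of pass/fail checks is sketched below. It is an illustration under stated assumptions rather than the ADLC's real harness: `score_agent`, the default of 50 trials, and the use of a Wilson score interval are choices made for this example. The agent is modeled as any callable that maps a prompt to text.

```python
import math
from typing import Callable


def wilson_lower_bound(passes: int, trials: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for a pass rate.

    z = 1.96 corresponds to a roughly 95% confidence level.
    """
    if trials == 0:
        return 0.0
    p = passes / trials
    denom = 1 + z * z / trials
    centre = p + z * z / (2 * trials)
    margin = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials))
    return (centre - margin) / denom


def score_agent(agent: Callable[[str], str],
                prompt: str,
                check: Callable[[str], bool],
                trials: int = 50) -> dict[str, float]:
    """Run the same prompt many times and summarize how often the output passes."""
    passes = sum(1 for _ in range(trials) if check(agent(prompt)))
    return {
        "pass_rate": passes / trials,
        "lower_bound": wilson_lower_bound(passes, trials),
    }
```

Gating on the lower bound rather than the raw pass rate is a conservative choice: an agent that passes 9 of 10 runs and one that passes 900 of 1,000 have the same pass rate, but the second gives far more confidence that the true rate is near 90%.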

The team is also introducing constructs like version control for agents, regression testing against evolving model APIs, and per-customer evaluation suites. These systems help move from ad hoc prototyping to production-grade deployment. This work enables teams — and eventually customers — to develop agents with the same discipline applied to software for decades, while still embracing the flexibility and speed that AI offers.

How does Agentforce orchestrate multiple AI models from different vendors, and what are the engineering tradeoffs of that approach?

Supporting a multi-model approach necessitated a significant shift in engineering principles. Instead of hardwiring business logic into a single model, each model is treated as a swappable module within a larger orchestration graph. The key to making this work is defining clear input/output contracts: functional boundaries that specify how each model behaves, the expected input, and the returned output.
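An input/output contract of this kind could look roughly like the following sketch. The names `ModelRequest`, `ModelResponse`, `ModelModule`, and the two stand-in models are hypothetical and are not Agentforce types; real modules would call a vendor API behind the same interface.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class ModelRequest:
    """Expected input for every module (illustrative fields)."""
    task: str
    prompt: str


@dataclass(frozen=True)
class ModelResponse:
    """Returned output for every module (illustrative fields)."""
    text: str
    model_name: str


class ModelModule(Protocol):
    """The contract: any object with a name and a matching invoke() qualifies."""
    name: str

    def invoke(self, request: ModelRequest) -> ModelResponse: ...


class EchoModel:
    """Stand-in model that returns the prompt unchanged."""
    name = "echo-v1"

    def invoke(self, request: ModelRequest) -> ModelResponse:
        return ModelResponse(text=request.prompt, model_name=self.name)


class UppercaseModel:
    """A second stand-in, swappable for EchoModel without touching callers."""
    name = "upper-v1"

    def invoke(self, request: ModelRequest) -> ModelResponse:
        return ModelResponse(text=request.prompt.upper(), model_name=self.name)


def run_step(model: ModelModule, prompt: str) -> ModelResponse:
    """Caller code depends only on the contract, never on a concrete model."""
    return model.invoke(ModelRequest(task="summarization", prompt=prompt))
```

Because `run_step` is written against the protocol, swapping `EchoModel()` for `UppercaseModel()` changes the output but not the calling code, which is the property the contract is meant to protect.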

Internally, abstraction layers have been developed to decouple prompt templates, model selection, function-calling logic, and agent composition. This architecture will enable Agentforce to dynamically route tasks to the most suitable model, whether optimized for reasoning, summarization, or speed. When a new model enters the market, the evaluation pipelines can run the model through existing use cases, and integration occurs if the model meets performance standards.
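The routing and admission flow described above might be sketched as follows. This is a simplified illustration, not the production design: `Router`, `admit`, `pass_rate`, and the exact-match scoring are assumptions, and a model is reduced to a plain function from prompt to text.

```python
from typing import Callable

Model = Callable[[str], str]   # hypothetical contract: prompt in, text out
EvalCase = tuple[str, str]     # (prompt, expected output)


def pass_rate(model: Model, cases: list[EvalCase]) -> float:
    """Fraction of existing use cases the model answers exactly as expected."""
    if not cases:
        raise ValueError("at least one evaluation case is required")
    return sum(model(prompt) == expected for prompt, expected in cases) / len(cases)


class Router:
    """Routes each task type to a model that has cleared the evaluation bar."""

    def __init__(self, min_pass_rate: float) -> None:
        self.min_pass_rate = min_pass_rate
        self._routes: dict[str, Model] = {}

    def admit(self, task: str, model: Model, cases: list[EvalCase]) -> bool:
        """Integrate a new model for a task only if it meets the standard."""
        if pass_rate(model, cases) < self.min_pass_rate:
            return False
        self._routes[task] = model
        return True

    def route(self, task: str, prompt: str) -> str:
        """Send the prompt to whichever model currently serves this task."""
        return self._routes[task](prompt)
```

For example, `Router(min_pass_rate=0.9).admit("shout", str.upper, [("a", "A")])` admits the model, while a candidate that fails the same cases is rejected and the existing route stays in place. Exact string matching is used here only to keep the sketch short; real evaluations of LLM output would need softer scoring.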

The tradeoff is increased complexity. Robust testing, clear module boundaries, and strict governance are essential to prevent failures when swapping components. However, the benefit is long-term adaptability and the ability to leverage new innovations without overhauling the entire architecture. This adaptability is foundational to how Agentforce manages orchestration across a dynamic, multi-model AI landscape.

How does the Agentforce team balance innovation speed with enterprise stability — especially when piloting experimental features?

Balancing speed and stability is a critical challenge when delivering AI solutions to some of the world’s largest and most regulated businesses. To address this, the Agentforce team has formalized beta and pilot programs as integral parts of the product development process. Instead of deploying experimental features directly into production, small, dedicated teams are formed to co-develop with customers. These teams, consisting of engineers, product managers, and researchers, work in tight feedback loops with real users, creating a sandboxed environment where iteration is safe, feedback is rapid, and the path to production is well-defined.

This approach goes beyond simple A/B testing. These are structured engagements designed to explore high-risk, high-reward concepts, such as new agent modalities (e.g., voice), orchestration techniques, or application domains. By narrowing the scope and increasing the depth of these engagements, the technical feasibility and customer value can be thoroughly validated before a wider rollout.

This method combines the agility of a startup with the enterprise-grade rigor of Salesforce. This approach directly shapes how Agentforce evolves, ensuring that innovation is balanced with the governance required for production AI systems.

What technical lessons shaped Agentforce as you transitioned from deterministic software to non-deterministic AI systems?

One of the most significant lessons has been adapting to a world where absolute certainty is no longer a given. In traditional software, bugs are consistent and solutions are clear-cut. With AI, the same input can yield slightly different outputs, requiring engineers to adopt a probabilistic mindset.

To manage this, we’ve adopted a modular design approach. By breaking down systems into well-defined, independent components with clear contracts and performance thresholds, we can handle variability while ensuring overall system reliability. This modular design also allows us to seamlessly integrate specialized components, such as real-time rule-adherence models, without overhauling the entire system.

Another critical lesson is that experience can sometimes be a double-edged sword. Teams with extensive backgrounds in legacy systems might be inclined to fall back on familiar solutions, like graphical query builders or complex forms. However, LLMs offer more efficient and intelligent alternatives, and it’s crucial for teams to remain open to new approaches and abandon outdated “best practices” when necessary.

This shift in mindset isn’t easy, but it’s vital for developing the next generation of enterprise software. It’s a core principle that guides the design of Agentforce — embracing the probabilistic nature of AI while maintaining the structure and modularity needed for robust enterprise solutions.
