By Yingbo Zhou and Scott Nyberg

In our “Engineering Energizers” Q&A series, we examine the professional experiences that have shaped Salesforce Engineering leaders. Meet Yingbo Zhou, a Senior Director of Research for Salesforce AI Research, where he leads his team in advancing AI, focusing on natural language processing and software intelligence.

Read on to learn about the risks his team faces in engineering new AI solutions, their biggest technical challenge, how Yingbo drives scale for his team, and much more.

What is your AI team’s mission?

Our team has two primary areas of focus: natural language processing and software intelligence. Both areas aim to derive efficient and effective representations from data to improve performance across a variety of tasks. Our ultimate goal is to advance the state of the art in these areas and to democratize AI, making it accessible and beneficial to everyone.

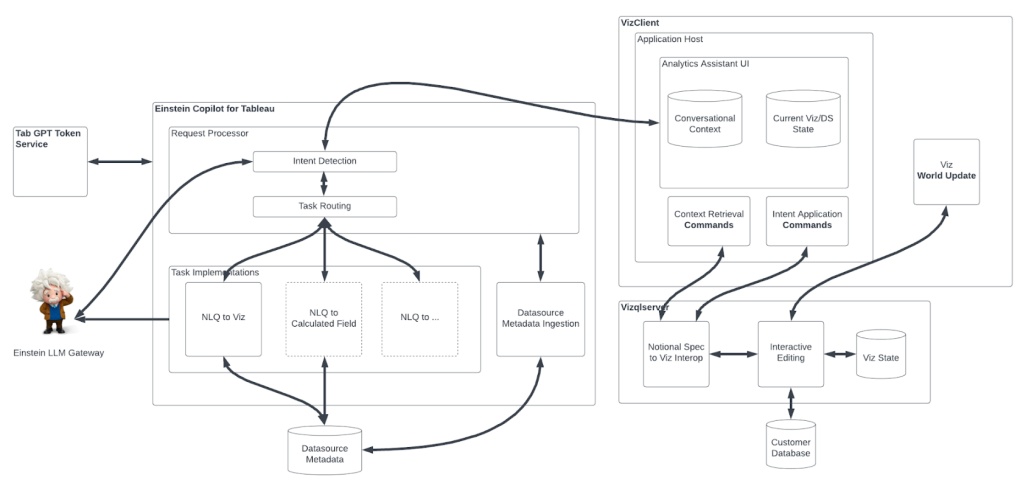

My team built and open-sourced the CodeGen large language model (LLM), which serves as the backbone for Einstein for Developers. We also collaborated with other teams to bring Salesforce’s foundational LLM — XGen to life.

Yingbo dives deeper into his team.

What risks does your team face in engineering AI solutions?

One risk is that LLMs can generate incorrect or misleading information. To address this, we apply modeling techniques and focus on grounded generation, which means providing the model with relevant background information to support its responses. This reduces the likelihood of misleading output.

We also prioritize reliability by collaborating closely with product teams, gathering user feedback, and improving data collection so that our models are trained on relevant information.

Additionally, we guard against over-engineering, which adds unnecessary complexity to product design at the expense of users’ needs. We do this by aligning our approach with customer needs and priorities, informed by customer feedback sessions and user studies.

What is the biggest technical challenge your team has faced, and how did you overcome it?

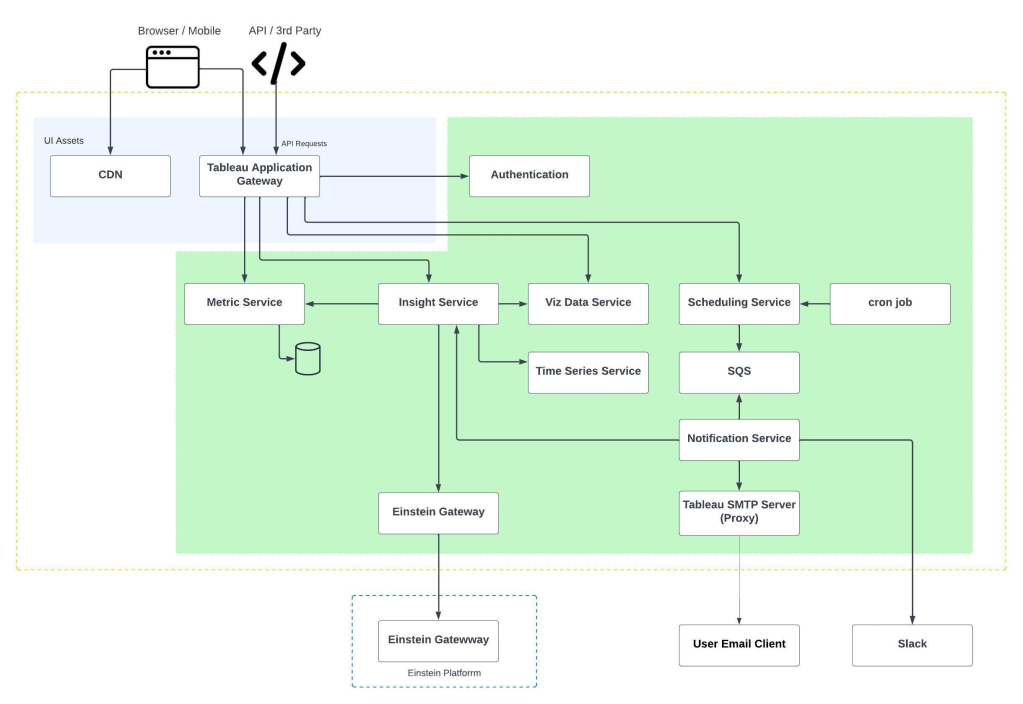

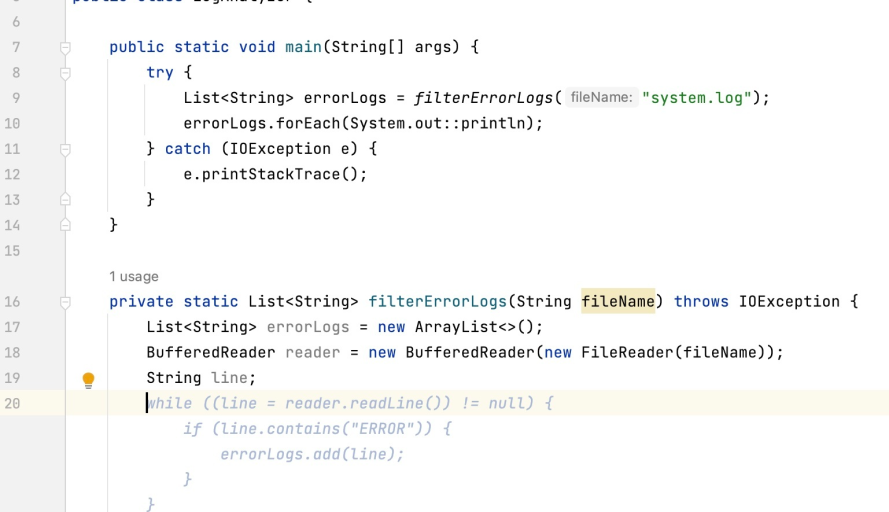

One of the biggest technical challenges we faced was with our incubation project, CodeGenie — an autocompletion tool to improve internal developer productivity. Initially, the product appeared fine from a development perspective, but user feedback was disappointing as users were not very satisfied with the suggestions that the product provided.

To overcome this challenge, we partnered closely with other teams inside Salesforce and gathered detailed feedback. This collaboration helped us identify areas for improvement in both modeling and user experience. We also partnered with an engineering team to enhance the product engineering, which helped our team to focus on improving the model’s performance. Last but not least, we received assistance from user experience researchers who conducted more user interviews, which delivered insights on how customers used the tool and their pain points.

After a year of dedicated effort, we have seen significant improvements, in terms of product output quality measured in terms of product metrics and adoption. This experience taught us the importance of collaboration, user-centric design, and continuous iteration to overcome technical challenges and deliver a better product.

A look at CodeGenie.

How does your team address AI challenges such as noisy user feedback data?

My team understands that users’ roles and preferences can change over time, which makes it hard to draw meaningful conclusions from their feedback in a short period. As a result, iterating on product design with raw user feedback data is slow. To address this, we constructed a benchmark that closely resembles the targeted use case, which allows faster product iteration.

By leveraging this benchmark, we can work around the noise in user data and make informed improvements quickly. This approach, combined with our ongoing collaboration with other teams and our commitment to academic rigor, helps us navigate challenges and make progress in both AI research and product development.

How do you drive scale for your AI team?

To drive scale, we focus on automating repetitive tasks and processes, which significantly reduces development time and increases efficiency. For example, we automate data preprocessing, which saves time and ensures consistency.

We are also automating the model evaluation process, enabling non-technical stakeholders to explore and interact with our models easily. This bridges the communication gap and helps others understand and use our work more effectively.

Additionally, we maintain shared repositories within our team, such as libraries and documentation, so that we can reuse existing work, drive cross-project collaboration, and accelerate development.

Lastly, we hold regular team meetings to share our progress and insights. These meetings foster an open, collaborative environment that maximizes productivity and makes the most of our collective knowledge and resources.

Yingbo shares why his role excites him.

What have you learned about leadership since joining Salesforce?

Initially, I viewed leadership as primarily managing people and tasks. However, I have learned that it is much more than that: it is about treating team members as unique individuals, valuing their contributions, believing in their abilities, and building trust to create a meaningful work environment.

Ultimately, the most significant lesson I have learned is the importance of empowering people to do their best work and helping them overcome the challenges they face.

Learn more

- Hungry for more AI stories? Read this blog to learn how Salesforce’s AI Research team built XGen, a series of groundbreaking LLMs of different sizes that support distinct use cases, spanning sales, service, and more.

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.