This post is in response to feedback on my previous post, “Monitoring Microservices: Divide and Conquer”. A friend of mine told me that the post was interesting but that he didn’t have the context for what it was all about. I’d love to add some context now.

There are many good posts around the internet about the meanings of observability and monitoring, such as “Monitoring and Observability” by Cindy Sridharan. For me, the best definition of observability is that it’s the love and care that the creators of a product give to those who operate it in production, even if the creators operate it themselves. A product isn’t necessarily software; it can be a machine as well (think of the red parking brake indicator in your car). Here, we will focus on software systems and a bit on the underlying hardware.

My favorite framework for explaining something new is the Heilmeier Catechism, so let’s apply it to explaining what observability is.

What are you trying to do? Articulate your objectives using absolutely no jargon.

I am trying to make a complex system as transparent as possible to those who operate it.

To decide what’s wrong with a system, the operators need to know as much as possible about the internal state of the system. The state itself is too bulky: it includes all data in memory, on disk, in network wires and devices, etc. I am trying to expose enough information about the state so the operators can build a theory of what the state is and, using that theory, plan actions to bring the state back to normal.

Compared to the full state, the volume of data produced by monitoring components is relatively small, so its history can be kept. I am trying to give the operators information about how the state changes over time so they can identify trends.
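To make “identify trends” concrete, here is a minimal Python sketch, assuming a metric sampled at a fixed interval: a least-squares slope over the recent samples estimates how fast the value changes and, from that, roughly when it will hit a limit. The function `linear_trend` and the disk-usage numbers are hypothetical, chosen for illustration.

```python
from typing import Sequence


def linear_trend(samples: Sequence[float], interval_s: float = 60.0) -> float:
    """Return the least-squares slope of the samples, in units per second.

    Assumes (hypothetically) that samples were taken every `interval_s` seconds.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to estimate a trend")
    times = [i * interval_s for i in range(n)]
    mean_t = sum(times) / n
    mean_v = sum(samples) / n
    cov = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, samples))
    var = sum((t - mean_t) ** 2 for t in times)
    return cov / var


# Hypothetical disk-usage percentages, sampled once per hour.
disk_pct = [61.0, 62.5, 64.0, 65.5, 67.0]
slope_per_hour = linear_trend(disk_pct, interval_s=3600) * 3600
hours_to_full = (100 - disk_pct[-1]) / slope_per_hour
print(f"{slope_per_hour:.1f}% per hour, full in about {hours_to_full:.0f} h")
# prints "1.5% per hour, full in about 22 h"
```

A single reading of 67% says little. The same reading with a steady upward slope tells the operators they have roughly a day to act.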

A system can run on many computers in different geographical locations at the same time. I am trying to give the operators a big picture of the global state of the system across all locations and all computers.

I am trying to give operators signals for known failure modes so they can follow standard procedures for reacting to those failures.

How is it done today, and what are the limits of current practice?

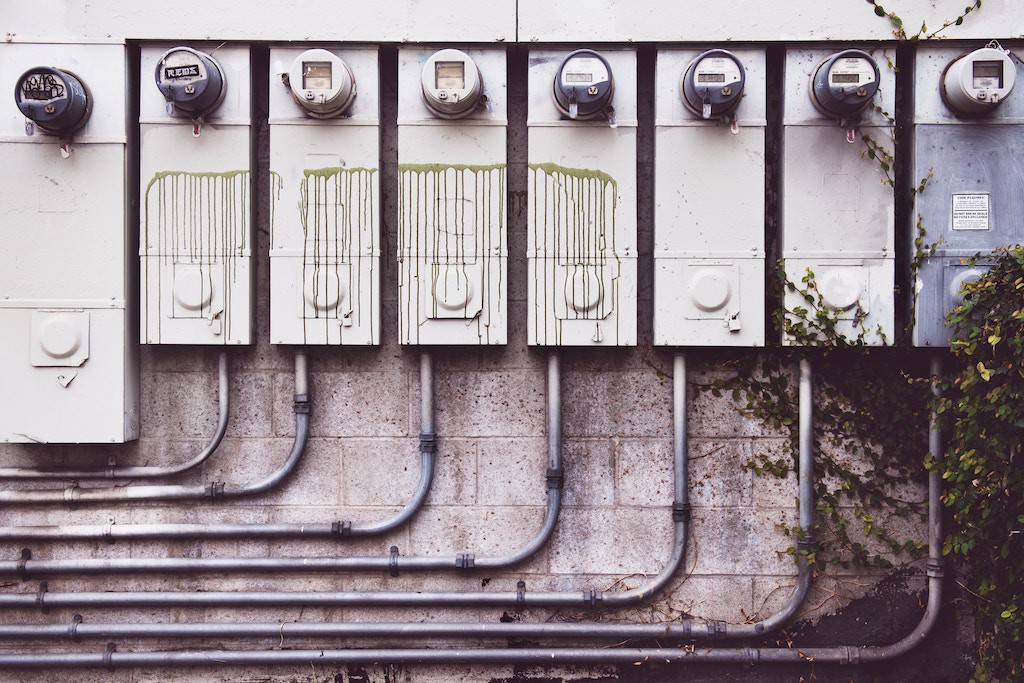

Some basic monitoring functionality is built into the computers we use. If we have no access to the console of the computer where our program runs but do have physical access to the computer itself, we can observe two parameters right away: the noise level of the CPU fan and the blink rate of the RX/TX indicators on the network card. CPU fan noise tells us roughly how busy the CPU is. Blinking RX/TX indicators tell us that there is network traffic. We can also estimate disk activity from the noise of the read/write heads of an HDD, if there is one, of course. A phone call from an angry customer can tell us about ongoing problems with the program.

If we can log in to the computer, we can use OS tools to learn more about what’s going on in the system. The OS can report CPU, RAM, disk, and network usage by each process, along with some history for these parameters. It can also tell us the total capacity of these resources.
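On a Unix-like system, a program can read a few of these numbers itself through Python’s standard library. This is a minimal sketch, not a replacement for OS tools: `os.getloadavg` is unavailable on Windows, the stdlib has no portable call for RAM or network usage, and per-process figures would need tools such as `top` or a third-party library. The function name `resource_snapshot` is hypothetical.

```python
import os
import shutil


def resource_snapshot(path: str = "/") -> dict:
    """Collect a few OS-level resource numbers (Unix-only because of getloadavg)."""
    load1, _load5, load15 = os.getloadavg()  # run-queue averages over 1, 5, 15 minutes
    cpus = os.cpu_count() or 1
    disk = shutil.disk_usage(path)            # total, used, free, in bytes
    return {
        "cpus": cpus,
        "load_1m_per_cpu": load1 / cpus,
        "load_15m_per_cpu": load15 / cpus,
        "disk_total_bytes": disk.total,
        "disk_used_pct": 100.0 * disk.used / disk.total,
    }


if __name__ == "__main__":
    for name, value in resource_snapshot().items():
        print(f"{name}: {value}")
```

Dividing the load average by the CPU count makes the number comparable across machines of different sizes; values well above 1.0 per CPU suggest work is queuing.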

The main limit is always that we know nothing about what really happens inside a box. The box can be a computer, a process in the operating system, or a piece of code running as part of the process. And if there are many boxes, it’s hard to collect data from all of them and aggregate it.

Without enough data about the current state of a system, operators cannot build a proper action plan fast enough to react to failure modes, and often they cannot even tell that the system is in a failure mode until it is too late.

What is new in your approach and why do you think it will be successful?

My approach is to add sensors (metrics and logs) at the control points of a system to record their state. I also want to establish side channels at all layers of the system to collect telemetry from the program itself, from the OS and hardware it runs on, and from the network devices it uses to communicate. I will then ship the telemetry to storage where operators can query it later. They can slice the data along different dimensions to better understand the state of the program and the underlying hardware at different points in time.
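Here is a minimal Python sketch of what “sensors” and “slicing along dimensions” can mean for metrics. Each reading carries labels (host, region, endpoint), and a query filters on some labels and groups by another. `TelemetryStore`, `record`, `sum_by`, the metric name, and the labels are all hypothetical; a real system would ship points over the network to a time-series database instead of keeping a list in memory.

```python
import time
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Point:
    name: str
    value: float
    labels: Dict[str, str]
    ts: float = field(default_factory=time.time)  # when the sensor took the reading


class TelemetryStore:
    """Hypothetical in-memory stand-in for a real telemetry backend."""

    def __init__(self) -> None:
        self.points: List[Point] = []

    def record(self, name: str, value: float, **labels: str) -> None:
        # A sensor at a control point calls this with its current reading.
        self.points.append(Point(name, value, labels))

    def sum_by(self, name: str, dimension: str, **filters: str) -> Dict[str, float]:
        # Slice: keep points matching every filter, then group by one dimension.
        totals: Dict[str, float] = defaultdict(float)
        for p in self.points:
            if p.name != name:
                continue
            if any(p.labels.get(k) != v for k, v in filters.items()):
                continue
            totals[p.labels.get(dimension, "unknown")] += p.value
        return dict(totals)


store = TelemetryStore()
store.record("http_errors", 3, host="web-1", region="eu", endpoint="/login")
store.record("http_errors", 5, host="web-2", region="eu", endpoint="/cart")
store.record("http_errors", 1, host="web-3", region="us", endpoint="/login")
print(store.sum_by("http_errors", "region"))                   # {'eu': 8.0, 'us': 1.0}
print(store.sum_by("http_errors", "host", endpoint="/login"))  # {'web-1': 3.0, 'web-3': 1.0}
```

The same raw points answer both “which region is failing?” and “which hosts fail on `/login`?”. This is why labels are attached when the data is recorded rather than decided at query time.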

This approach will be successful because, with all the system information at hand, operators will be able to choose better corrective actions faster and keep the system stable. And because the same failure mode often calls for the same corrective action, such as removing logs older than N days when disk utilization goes above 80%, many of these corrective actions can be automated.
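The log-cleanup rule above can be automated in a few lines. This is a minimal Python sketch, assuming log files end in `.log` and sit directly in one directory. The names `cleanup_old_logs` and `disk_used_pct` are hypothetical. The 80% threshold and the age limit N come from the rule; the disk-usage check is injectable so the rule can be tested without filling a disk.

```python
import os
import shutil
import time
from typing import Callable, List


def disk_used_pct(path: str) -> float:
    """Percentage of the filesystem holding `path` that is in use."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total


def cleanup_old_logs(
    log_dir: str,
    max_age_days: float,
    threshold_pct: float = 80.0,
    used_pct: Callable[[str], float] = disk_used_pct,
) -> List[str]:
    """Delete *.log files older than max_age_days if disk usage exceeds threshold_pct.

    Returns the paths that were removed (hypothetical helper, for illustration).
    """
    if used_pct(log_dir) <= threshold_pct:
        return []  # disk is healthy: do nothing
    cutoff = time.time() - max_age_days * 86400
    with os.scandir(log_dir) as entries:
        # Collect candidates first so we never delete while iterating the directory.
        stale = [
            e.path
            for e in entries
            if e.is_file() and e.name.endswith(".log") and e.stat().st_mtime < cutoff
        ]
    for path in stale:
        os.remove(path)
    return stale
```

In practice a scheduler or an alert handler would call this, and the returned paths would be logged, so an automated action is itself observable.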

Who cares? If you are successful, what difference will it make?

Business cares. Many problems can be caught before they become critical for the stability of a system. With proper and timely execution of mitigation plans, customers will never know that something was wrong. And as a system grows, the chance increases that something is always wrong somewhere in it.

Operators care. A properly designed and implemented monitoring system offers alerts and a runbook with instructions on what to do when an alert fires. The alerts and the runbook let operators react to problems in a standard way, which speeds up response time and spares them a full investigation of what the system is, what it consists of, where it runs, and so on.
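An alert paired with its runbook entry can be as simple as a threshold, a duration, and a link. This is a minimal Python sketch: `AlertRule`, its fields, and the runbook URL are hypothetical. Real alerting systems express the same idea in their own rule formats.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class AlertRule:
    name: str
    threshold: float
    for_samples: int   # consecutive samples that must breach before firing
    runbook_url: str   # where the operator finds the standard procedure

    def evaluate(self, samples: Sequence[float]) -> Optional[str]:
        """Return an alert message if the last `for_samples` values all exceed the threshold."""
        recent = samples[-self.for_samples:]
        if len(recent) < self.for_samples:
            return None  # not enough data yet
        if all(v > self.threshold for v in recent):
            return (f"ALERT {self.name}: last {self.for_samples} samples above "
                    f"{self.threshold}; runbook: {self.runbook_url}")
        return None


disk_almost_full = AlertRule(
    name="DiskAlmostFull",
    threshold=80.0,
    for_samples=3,
    runbook_url="https://wiki.example.com/runbooks/disk-almost-full",  # hypothetical
)
print(disk_almost_full.evaluate([75.0, 82.0, 79.0, 81.0]))  # None: one dip below 80
print(disk_almost_full.evaluate([78.0, 81.0, 83.5, 86.0]))  # fires, with the runbook link
```

Requiring several consecutive breaches trades a little detection delay for fewer alerts on short spikes, and putting the runbook link in the message means the operator starts from the standard procedure instead of from scratch.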

What are the risks?

Alerts and a runbook can cover only a subset of failure modes. Using the rest of the collected data, some additional failure modes can be identified. But some failure modes won’t be covered by alerts or data, so operators should always have a Plan B. Plan B is a generic solution. It isn’t precise at all and always begins with saying “we don’t know what’s wrong.” The action part of the plan sounds like “let’s reboot everything” or “let’s restore everything from backup.” Plan B often means downtime and unhappy customers.

Collecting too little data or having poorly defined alerts means that more failure modes fall into the “Plan B” bucket and that plan is executed more often.

If too much data is collected, navigating it becomes harder and less efficient, and storage and processing costs skyrocket.

The main risk here is losing the balance: either overcollecting data, which overwhelms the operators, or executing Plan B frequently, which lowers customer satisfaction.

How much will it cost?

There are three components of cost:

- Adding sensors to the code and maintaining them as the code evolves. This requires extra work from developers and testers.

- Creating alerts and a runbook, and maintaining them as the code evolves and new failure modes are found. This means extra work for developers, testers, and operators.

- Adding resources to ship, store, and process telemetry, such as network channels and the hardware and software to store and analyze metrics, logs, traces, memory dumps, etc.

How long will it take?

For as long as the system remains in production. With modern Agile software development practices, code changes all the time, and so does the infrastructure it runs on. Ideally, an observability program begins at the same moment as development of the product or, at worst, after reading this post. It ends only when the product is decommissioned.

What are the mid-term and final “exams” to check for success?

A good test of the observability program’s success, and an indicator that it’s on the right track, is the continued ability to diagnose problems with a software system and find their root cause without accessing the servers where it runs.

Conclusions

I hope you agree that it’s important for a system, especially a complex and distributed one, to have a high degree of observability. Observability allows operators to make decisions faster and catch growing problems earlier, avoiding hard system failures. Some of the decisions can be automated to make reaction time almost instantaneous.

Observability isn’t free, but in most cases the cost of an outage or data loss is much higher than the cost of this program.

And if you want to evaluate how good your new idea is, try the Heilmeier Catechism. I use it a lot.