In our “Engineering Energizers” Q&A series, we highlight the engineering minds driving innovation across Salesforce. Today, we feature Anubhav Dhoot, Senior Vice President of Software Engineering, who is spearheading the transformation of Hyperforce into a platform that can operate seamlessly across different cloud substrates, including AWS and Google Cloud Platform (GCP).

Explore how Anu’s team has revolutionized service mesh architecture for secure cross-service communication, streamlined hundreds of fragile CI/CD pipelines into a declarative, self-healing system, and leveraged AI to enable predictive autoscaling, speed up legacy code refactoring, and automate incident response.

What’s your team’s mission — and how does it support Salesforce’s shift to AI-ready, multi-substrate infrastructure?

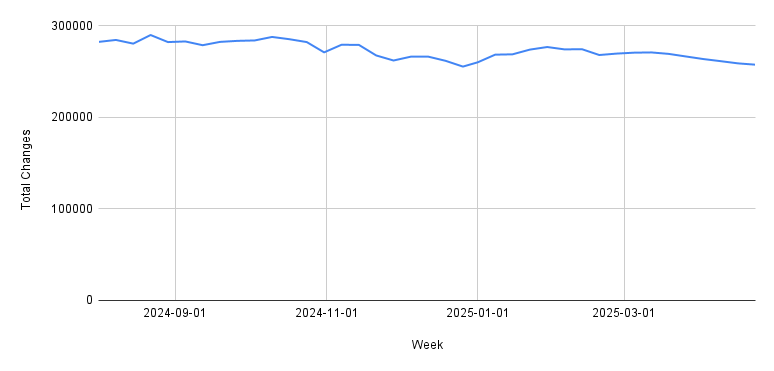

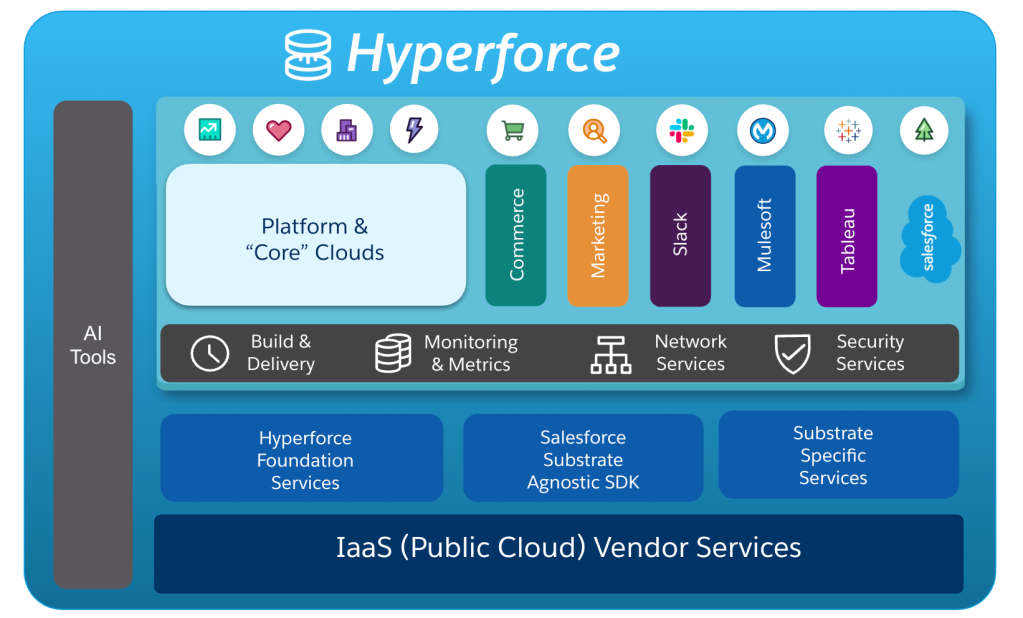

The mission is to transform Hyperforce into an enterprise-scale infrastructure platform capable of operating seamlessly across multiple public cloud substrates, including AWS, GCP, and Alibaba Cloud. This initiative transcends traditional cloud deployment by rebuilding foundational infrastructure to support our long-term goals of scale, agility, trust, and AI enablement.

Drawing from our five years of experience operating Hyperforce on AWS, we have developed a cleaner, more flexible architecture for GCP. Our focus areas include network design, service provisioning, and the abstraction of substrate-specific logic. This ensures that product teams, from Agentforce to Data Cloud, enjoy a consistent and cloud-agnostic platform experience.

This architectural shift simplifies the infrastructure and unlocks intelligent patterns. By creating substrate-agnostic SDKs and centralizing managed services, we abstract the underlying complexity, enabling scalable AI systems to operate consistently across the platform. The result is a future-proof foundation that supports cloud-agnostic, AI-native application development and platform operations, positioning Salesforce at the forefront of innovation.

What was the hardest infrastructure challenge when redesigning Hyperforce for GCP — and how did your AWS experience influence the solution?

The most significant challenge we faced involved rethinking service isolation and trust boundaries. In the AWS-based implementation, the emphasis was on strong separation across business units to minimize the blast radius and enforce security. While this approach was effective, it slowed internal collaboration and cross-product innovation by limiting inter-service communication.

The GCP rearchitecture presented a blank-slate opportunity to redesign key architectural elements. One of the most critical decisions was to rebuild the service mesh model. In the AWS environment, mesh boundaries were product-scoped, which limited service integration across Salesforce clouds. In contrast, the new GCP design supports a unified mesh architecture, enabling secure, resilient, high-trust communication across platform services such as Sales Cloud, Data Cloud, and Agentforce.
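To make the difference between the two mesh models concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `Service` type, the service names, and the `ALLOWED_CALLS` policy table are hypothetical and do not describe how Hyperforce actually enforces mesh policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    name: str
    product: str


def product_scoped_allows(src: Service, dst: Service) -> bool:
    """Product-scoped mesh: the mesh boundary is the product boundary."""
    return src.product == dst.product


# Hypothetical allow-list of (caller, callee) pairs for cross-product traffic.
ALLOWED_CALLS = {("agentforce-planner", "datacloud-query")}


def unified_mesh_allows(src: Service, dst: Service) -> bool:
    """Unified mesh: one mesh, with cross-product calls gated by explicit policy."""
    return src.product == dst.product or (src.name, dst.name) in ALLOWED_CALLS


planner = Service("agentforce-planner", "agentforce")
query = Service("datacloud-query", "data-cloud")
billing = Service("sales-billing", "sales-cloud")
```

In this sketch the planner can reach the query service only under the unified model, while a pair with no policy entry, such as planner to billing, stays blocked in both. That is one way to read the balance between trust and agility.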

This mesh redesign strikes a balance between trust and agility. It supports integrated communication and system interoperability across the stack while maintaining the isolation and resilience necessary for enterprise-scale deployment. The lessons learned from our AWS experience informed this new approach, but the GCP implementation goes beyond mere replication to deliver a more forward-looking infrastructure foundation.

How is AI being used to accelerate infrastructure modernization — including CI/CD refactoring, autoscaling, and SDK abstraction?

AI is integrated throughout the infrastructure stack. One key use case is the development of a substrate-agnostic blob storage SDK. Instead of teams manually rewriting interactions with S3 or GCS, AI models help translate SDK calls and modify application code to use a unified abstraction.
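A substrate-agnostic storage abstraction of this kind might look roughly like the following Python sketch. The `BlobStore` interface, the `open_blob_store` factory, and the in-memory backend are all hypothetical names chosen for illustration; the real SDK is not described in this interview, and a real adapter would wrap an S3 or GCS client rather than a dictionary.

```python
from abc import ABC, abstractmethod


class BlobStore(ABC):
    """Hypothetical substrate-neutral interface (not the actual Hyperforce SDK)."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class InMemoryBlobStore(BlobStore):
    """Stand-in backend; a real adapter would call the cloud provider here."""

    def __init__(self, substrate: str) -> None:
        self.substrate = substrate
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def open_blob_store(substrate: str) -> BlobStore:
    """Select a backend by substrate name ("aws" or "gcp" in this sketch)."""
    if substrate not in {"aws", "gcp"}:
        raise ValueError(f"unsupported substrate: {substrate}")
    return InMemoryBlobStore(substrate)


def save_report(store: BlobStore, report_id: str, body: bytes) -> None:
    # Application code depends only on BlobStore, never on a cloud SDK.
    store.put(f"reports/{report_id}", body)
```

Because `save_report` only sees the interface, the same application code runs unchanged whichever substrate the factory selects.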

AI is also speeding up CI/CD transformation. Legacy pipelines, often built on Spinnaker, have brittle, hand-written logic for provisioning, deployment, and integration. These are being replaced with a declarative control plane where teams define infrastructure intent, and the platform handles orchestration. AI aids this transition by analyzing pipeline patterns, converting them into declarative templates, and ensuring compliance.

Another major application is predictive autoscaling. AI models trained on CRM traffic patterns predict peak usage and pre-warm compute capacity before demand spikes. This proactive approach reduces cold-start latency and enhances system responsiveness. Across these use cases, AI improves developer velocity, operational efficiency, and cost at every level of the infrastructure.
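As a rough illustration of the pre-warming idea, the sketch below swaps the trained model for a much simpler stand-in: it averages the traffic seen at the same hour on previous days, then sizes the fleet with some headroom. The function names, the 20% headroom, and the traffic numbers are assumptions made up for this example.

```python
import math
from statistics import mean


def forecast_next_hour(history: list[list[float]], hour: int) -> float:
    """Average the requests/sec observed at `hour` across previous days."""
    return mean(day[hour] for day in history)


def replicas_needed(expected_rps: float, rps_per_replica: float,
                    headroom: float = 0.2, min_replicas: int = 2) -> int:
    """Size the fleet for the forecast plus a safety margin."""
    target = expected_rps * (1 + headroom) / rps_per_replica
    return max(min_replicas, math.ceil(target))


# Three days of hourly traffic (requests/sec) with a morning peak at 09:00.
history = [
    [100.0] * 8 + [400.0, 900.0] + [100.0] * 14,
    [120.0] * 8 + [420.0, 950.0] + [120.0] * 14,
    [110.0] * 8 + [380.0, 850.0] + [110.0] * 14,
]

# At 08:00, provision for the 09:00 peak before it arrives.
expected = forecast_next_hour(history, hour=9)
prewarm = replicas_needed(expected, rps_per_replica=50.0)
```

The key design point is the timing: capacity is computed from the forecast for the upcoming hour, not from current load, so instances are already warm when the spike lands.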


What’s been the toughest part of embedding AI into platform engineering workflows — and where has it delivered the most value so far?

Embedding AI across the software development lifecycle brings both opportunities and complexities. The main challenge is moving beyond novelty to identify areas where AI significantly enhances system operation or developer productivity.

One success area is AI-driven incident analysis. Custom agents ingest telemetry, detect anomalies, and summarize root causes, which shortens mean time to resolution (MTTR) and reduces the need for manual investigation. AI also powers autonomous remediation, where recurring failure patterns trigger predefined corrective actions without human intervention.
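The remediation pattern, where a recurring failure triggers a predefined action, can be sketched as a lookup from failure signature to handler. This is a simplified illustration, not the team's implementation: the `RUNBOOK` table, the signatures, the handlers, and the threshold of three occurrences are all hypothetical.

```python
from collections import Counter
from typing import Callable


def restart_pod(service: str) -> str:
    return f"restarted {service}"


def rotate_cert(service: str) -> str:
    return f"rotated certificate for {service}"


# Hypothetical runbook: failure signature -> predefined corrective action.
RUNBOOK: dict[str, Callable[[str], str]] = {
    "OOMKilled": restart_pod,
    "CertExpired": rotate_cert,
}


def remediate(events: list[tuple[str, str]], threshold: int = 3) -> list[str]:
    """Run the runbook action for each (service, signature) seen `threshold`+ times."""
    actions = []
    for (service, signature), count in Counter(events).items():
        action = RUNBOOK.get(signature)
        if count >= threshold and action is not None:
            actions.append(action(service))
    return actions


events = [("checkout", "OOMKilled")] * 3 + [("search", "CertExpired")] + [("feed", "Unknown")] * 5
actions = remediate(events)
```

Failures that are infrequent or have no runbook entry produce no action here, which leaves them for human investigation.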

Developer workflows have been enhanced by AI IDE tools like Cursor, which assist with legacy code analysis, unit test generation, and refactoring. Adoption has been rapid, and the tools have made onboarding and debugging faster.

AI agents now also summarize sprint output, a task previously handled by engineering leads. These summaries streamline communication between product and leadership teams without disrupting the engineering flow. Overall, these use cases show AI’s potential to improve both reactive operations and proactive planning within platform teams.


What technical hurdles emerged during the shift from pipeline-driven CI/CD to a declarative, self-healing infrastructure model — and how is AI helping?

Transitioning to a modern infrastructure exposed the challenge of managing a large, varied network of custom CI/CD pipelines. Many of these legacy workflows, built with Spinnaker, had unique provisioning steps, deployment processes, and service integrations. The sheer number of variations made it hard to standardize and increased the risk of operational issues.

To address this, we developed a declarative control plane for intent-based provisioning. Instead of writing detailed scripts, teams simply specify what they want the infrastructure to do, and the platform handles the rest. This approach gets rid of brittle scripts and speeds up the delivery process.
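One common way to build intent-based provisioning is a reconciliation loop: compare the declared state with the observed state and derive the steps needed to close the gap. The sketch below assumes that pattern; the interview does not say how the control plane is implemented, and the `plan` and `reconcile` functions and the service names are hypothetical.

```python
def plan(desired: dict[str, int], actual: dict[str, int]) -> list[str]:
    """Derive the steps that move `actual` (service -> replicas) to `desired`."""
    steps = []
    for name, replicas in sorted(desired.items()):
        if name not in actual:
            steps.append(f"create {name} replicas={replicas}")
        elif actual[name] != replicas:
            steps.append(f"scale {name} {actual[name]}->{replicas}")
    for name in sorted(actual.keys() - desired.keys()):
        steps.append(f"delete {name}")
    return steps


def reconcile(desired: dict[str, int], actual: dict[str, int]) -> list[str]:
    """Apply the plan (simulated here by updating `actual` in place)."""
    steps = plan(desired, actual)
    actual.clear()
    actual.update(desired)
    return steps


desired = {"api": 3, "worker": 2}       # what the team declares
actual = {"api": 1, "legacy-cron": 1}   # what is currently running
first_pass = reconcile(desired, actual)
second_pass = reconcile(desired, actual)
```

Teams only edit `desired`. Running the loop repeatedly is what gives the self-healing behavior: once the states match, a further pass produces no steps, and any later divergence is corrected on the next pass.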

AI is key to this transformation. AI models analyze the old pipelines to find common deployment patterns, convert them into declarative formats, and highlight any inconsistencies. We store Terraform and Helm configurations in Git alongside the application code, which supports engineer-friendly GitOps workflows. AI agents also help detect infrastructure drift, enforce policies, and generate templates, ensuring everything stays compliant without overwhelming developers.
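Drift detection in a GitOps setup comes down to comparing the configuration declared in Git with what is actually running. The following sketch shows only that rule-based comparison, on plain nested dictionaries, and involves no AI. The `find_drift` function and the sample values are assumptions for illustration, not the team's tooling.

```python
def find_drift(declared: dict, live: dict, prefix: str = "") -> list[str]:
    """List dotted paths where the live state differs from the declared config."""
    drift = []
    for key in sorted(declared.keys() | live.keys()):
        path = f"{prefix}{key}"
        if key not in live:
            drift.append(f"{path}: missing in live state")
        elif key not in declared:
            drift.append(f"{path}: not declared in Git")
        elif isinstance(declared[key], dict) and isinstance(live[key], dict):
            drift.extend(find_drift(declared[key], live[key], f"{path}."))
        elif declared[key] != live[key]:
            drift.append(f"{path}: declared {declared[key]!r}, live {live[key]!r}")
    return drift


declared = {"image": "api:1.4", "resources": {"cpu": "500m", "memory": "1Gi"}}
live = {"image": "api:1.4", "resources": {"cpu": "2", "memory": "1Gi"}, "debug": True}
report = find_drift(declared, live)
```

Here the report flags the manually changed CPU setting and the undeclared `debug` flag. A policy step could then either revert the live state or open a change against the Git configuration.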

The end result is a more robust and scalable infrastructure that supports continuous delivery, reduces complexity and errors, and minimizes platform friction.

