In our latest article for our “Engineering Energizers” Q&A series, we meet Christopher Williams, Vice President of Software Engineering. With over 17 years at Salesforce under his belt, Christopher today leads the development of Einstein for Developers. From eliminating time-consuming tasks to providing intelligent code completions, this groundbreaking tool empowers developer productivity and revolutionizes the way developers work.

Join Chris’s team as they revolutionize Einstein, overcoming tough technical challenges and pioneering exciting new features that give developers on the platform a critical edge.

How does Einstein for Developers empower developer productivity?

Einstein for Developers is a next-gen AI tool that eliminates the need for developers to spend time on tasks like debugging syntax errors or writing scaffolding code. It transforms the way developers work by providing features such as code completions, generating tests against existing code, converting natural language prompts into code, and facilitating project kickoffs. By freeing up time for developers to focus on code design, it not only boosts productivity but also allows them to enjoy their work.

Chris dives deeper into Einstein for Developers and how his team harnesses AI.

Have there been any instances where you had to make trade-offs between speed of deployment and ensuring the quality of Einstein for Developers?

Speed of deployment has been a challenge for this project due to the rapid iteration of feature sets and capabilities. For example, we aimed to launch the test case generation and inline autocomplete internally in December, with a plan to go live in January to a broad user group.

However, considering that these were new capabilities, we decided to gather more feedback and refine the experience to avoid any potential gaps. We made the call to delay the launch in order to meet our quality standards and ensure a positive first impression for our external developer group.

This decision proved to be the right one as our developer community loved the capabilities when we showcased them at Salesforce’s annual developer conference, TrailblazerDX. One team member even overheard someone saying, “This can’t be real,” during our demo, which was a great validation of our work.

How do you prioritize and decide on the features and improvements to be included in each release of Einstein for Developers?

Partnering with Salesforce AI Research, we prioritize addressing the challenges faced by developers and identifying tasks where AI can provide efficient solutions. For instance, we give priority to features like inline completions, which help developers quickly complete code and fill in variables and expressions with relevant information from class files. We also consider the need for fast test writing to meet code coverage requirements and ensure comprehensive test coverage for new features.

Chris describes his role as an AI leader.

How do you ensure that the models in Einstein for Developers meet the specific needs and requirements of developers?

We employ a comprehensive approach that involves various feedback channels:

- Internal Pilot Group: Engages in pair programming sessions, providing real-time feedback and suggestions. Their continuous interaction delivers immediate feedback on new capabilities and identifies areas for improvement.

- External Pilot Group: These distinguished customers explore new capabilities and share their thoughts and suggestions, helping us refine the user experience and address any issues.

- UX Research Team: Conducts surveys and gathers feedback from developer groups, providing valuable data points for decision-making. Our collaboration with this team runs continuously, even after a capability goes live, ensuring that we stay attuned to the evolving needs and preferences of developers.

Throughout the process, we rely on success metrics to evaluate the effectiveness of each phase. These metrics serve as benchmarks to assess whether we have met the predefined criteria for a successful launch. If we fall short of these criteria, we carefully evaluate the situation and make informed decisions on whether further improvements are necessary before proceeding.

Can you share any success stories or examples of how Einstein for Developers has significantly improved developer productivity or efficiency?

One of the key features that developers have found immensely helpful is inline autocomplete. This feature allows the model to provide multi-line responses, saving developers valuable time.

Additionally, the test case generation capability has delivered a significant productivity boost. Developers have generated tests for their methods and accepted them on their first try, resulting in a remarkable acceptance rate of 75%. This high acceptance rate is a testament to the accuracy and usefulness of the generated tests. We were pleasantly surprised to see that the acceptance rate exceeded our anticipated range of 40-50%.

This feature has addressed one of the top complaints of platform developers, which is the time-consuming task of writing tests. By providing a ready-made code block, developers can focus on refining and adding to the generated tests, saving them a significant amount of time and effort.

Chris shares why engineers should join Salesforce.

How does your team collaborate with Salesforce AI Research to shape the capabilities of Einstein for Developers?

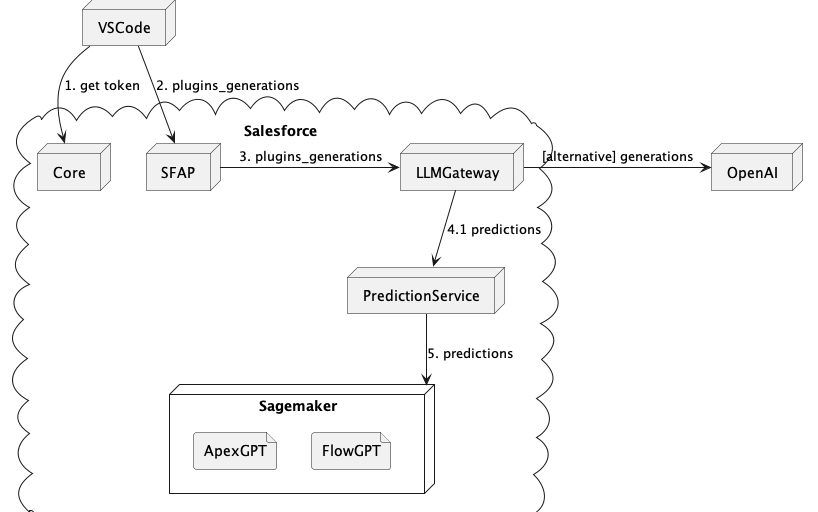

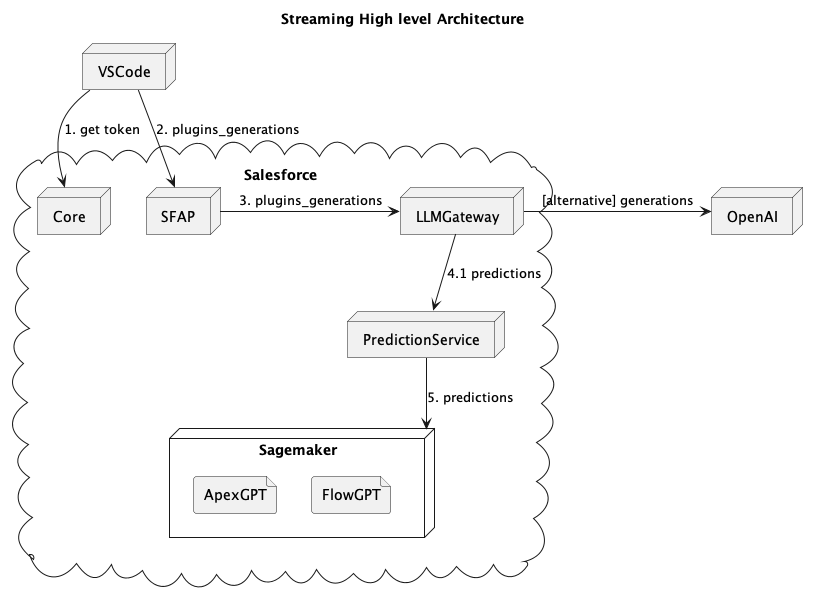

We collaborate with the Salesforce AI Research team to identify the required capabilities for the model and assist in tasks like training set preparation and evaluation.

The AI Research team is responsible for model development, which includes test case generation support, model annotation, training, and providing training set data.

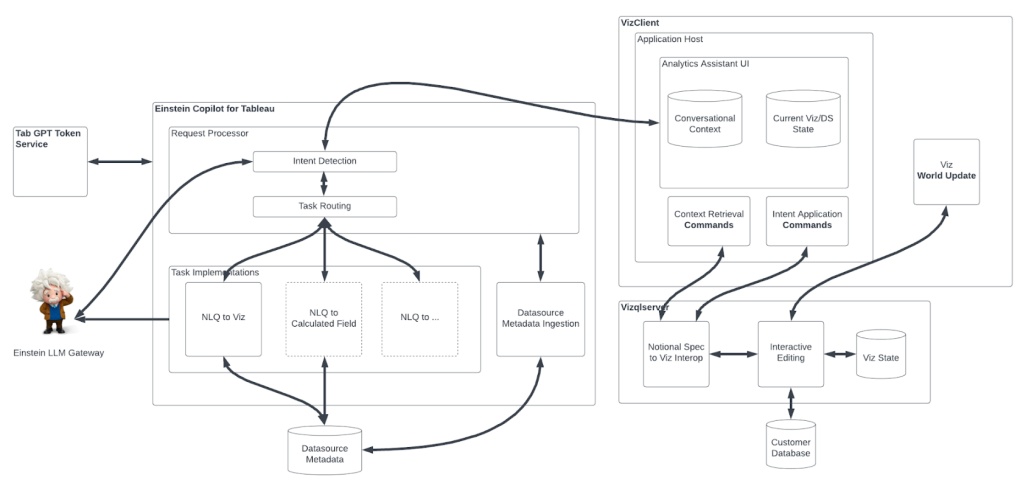

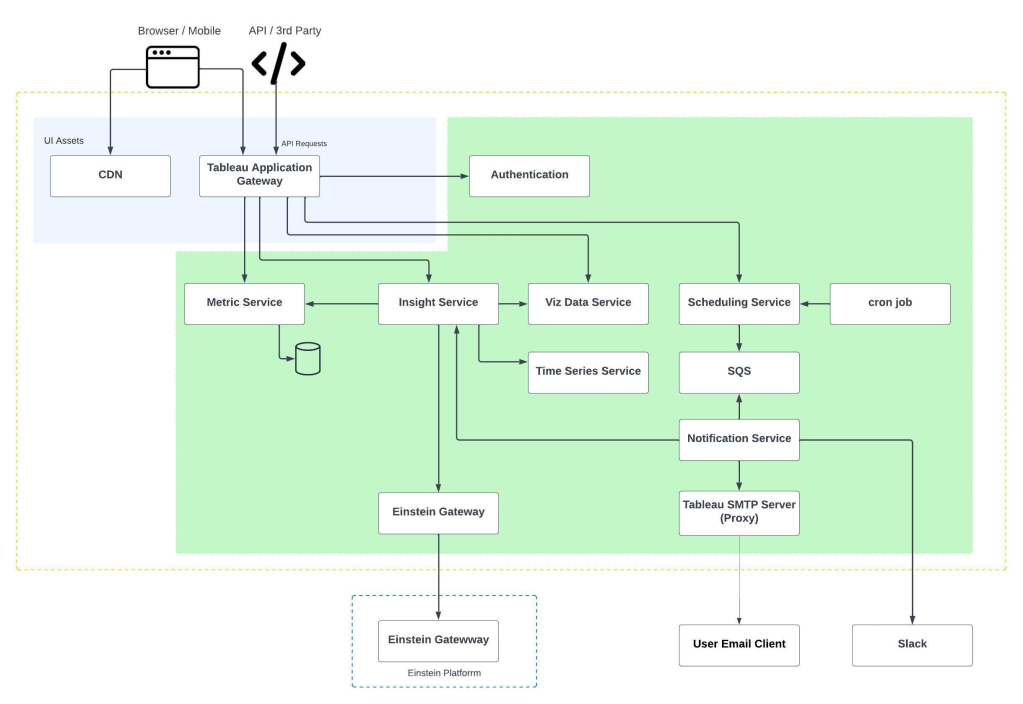

My team primarily focuses on enhancing the user experience and platform interactions, surfacing the model’s outputs through the platform and ensuring seamless integration with various environments, such as SageMaker or Google Cloud Platform. We also handle user authentication and access control so that only valid platform users can reach the model through endpoints such as our API gateway and LLM gateway, which support the deployment of the AI Research team’s models on SageMaker.
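To make the access-control idea concrete, here is a minimal sketch of a gateway that lets only valid platform users reach a model endpoint. It is an illustration under stated assumptions, not the team's implementation: `User`, `AccessDenied`, `make_gateway`, and `echo_model` are all hypothetical names, and the model is a local stand-in rather than anything hosted on SageMaker.

```python
from dataclasses import dataclass
from typing import Callable

# All names below are hypothetical and exist only for illustration.


@dataclass(frozen=True)
class User:
    user_id: str
    is_valid_platform_user: bool


class AccessDenied(Exception):
    """Raised when a caller is not allowed to reach the model endpoint."""


def make_gateway(model_endpoint: Callable[[str], str]) -> Callable[[User, str], str]:
    """Wrap a model endpoint so that only valid platform users can call it."""

    def gateway(user: User, prompt: str) -> str:
        # Reject the request before it ever reaches the model.
        if not user.is_valid_platform_user:
            raise AccessDenied(f"user {user.user_id} is not a valid platform user")
        return model_endpoint(prompt)

    return gateway


def echo_model(prompt: str) -> str:
    # Stand-in for a hosted model: it simply echoes the prompt.
    return f"completion for: {prompt}"


gateway = make_gateway(echo_model)
```

Calling `gateway(User("u1", True), "write a test")` returns the stand-in completion, while the same call with an invalid user raises `AccessDenied`. Keeping the check in a wrapper means the model endpoint itself never has to know about users.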

A look inside the architecture of multi-turn chat.

What is an exciting ongoing development project related to Einstein for Developers?

Einstein for Developers’ multi-turn chat is an upcoming feature that will greatly enhance our current chat capabilities. After developers write a prompt and receive a response from the model, they can have an interactive conversation with the model by asking it to make specific updates, provide more details, or answer additional questions. For example, they can ask the model to describe their code, document code, refactor code, or even generate tests.

Ultimately, this will deliver a more dynamic and iterative process than the traditional prompt-response approach, letting developers explore different possibilities and refine their interactions with the model. Multi-turn chat will launch later this year.
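The difference between a single prompt-response exchange and a multi-turn conversation can be sketched in a few lines. The example below is a simplified illustration, not the Einstein for Developers design: `ChatSession` and `fake_chat_model` are hypothetical, and the sketch assumes the common pattern of sending the full message history to the model on every turn so that follow-up requests can build on earlier ones.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A message is a dict such as {"role": "user", "content": "..."}.
Message = Dict[str, str]


@dataclass
class ChatSession:
    """Keeps the running conversation so each request can build on earlier turns."""

    model: Callable[[List[Message]], str]  # hypothetical model call
    history: List[Message] = field(default_factory=list)

    def send(self, prompt: str) -> str:
        self.history.append({"role": "user", "content": prompt})
        # The model receives a copy of the whole conversation, not just the latest prompt.
        reply = self.model(list(self.history))
        self.history.append({"role": "assistant", "content": reply})
        return reply


def fake_chat_model(messages: List[Message]) -> str:
    # Stand-in model: reports how many user turns it has seen so far.
    user_turns = sum(1 for m in messages if m["role"] == "user")
    return f"reply to turn {user_turns}: {messages[-1]['content']}"


session = ChatSession(model=fake_chat_model)
first = session.send("Generate a test for my method")
second = session.send("Now document the code")
```

After these two calls, `session.history` holds four messages (two from the user, two from the assistant), which is what allows a follow-up such as "Now document the code" to refer back to the earlier turn.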

Learn More

- Want to dive deeper into Einstein for Developers? Go behind the scenes with AI Research in this blog.

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.