Kubernetes is arguably the most sought-after technical skill of the year: job searches for it grew 173 percent from 2018 to 2019. As one of the most successful open-source projects, it boasts contributions from thousands of organizations, contributions that ultimately help businesses scale their cloud computing systems.

Many large enterprises have adopted Kubernetes, Salesforce among them. Salesforce implemented the open-source platform to integrate with the Apache Spark analytics engine, and has been working with Spark running on Kubernetes for about three and a half years.

As Salesforce Systems Engineering Architect Matt Gillham explains in his Spark on Kubernetes webinar, he and his team began working with the analytics engine back in 2015.

“We wanted the ability to provide Spark clusters on demand, particularly for streaming use cases. We were looking at other uses for the engine as well.”

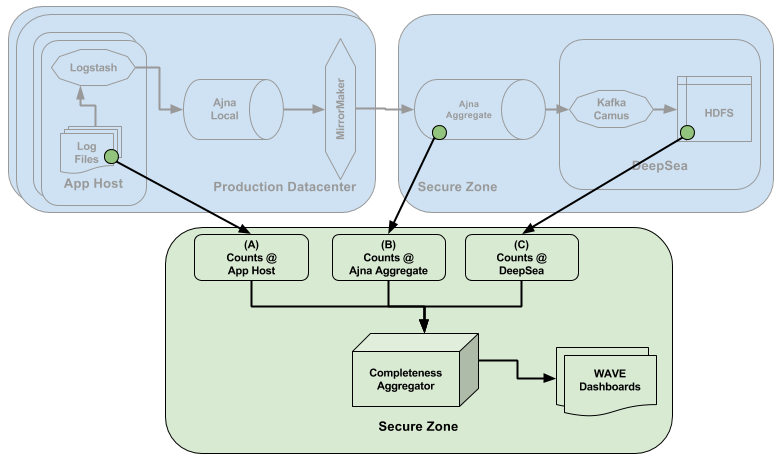

The first use case the team took on was processing telemetrics and application logs, streams that ran through a set of Apache Kafka clusters.

“We wanted to create a separate Spark cluster for each of our internal users to maximize the isolation between them. So, we had some choices to make about what cluster manager to use.”

The team considered several cluster managers, with YARN, Kubernetes, and Mesos emerging as the top candidates. At the time, Spark had first-class support for YARN and Mesos but not for Kubernetes, so there were tradeoffs to evaluate.

“At the end of the day, we chose Kubernetes largely due to the backing and momentum it had and who was behind the project. And it seemed to be the most forward-looking cluster manager for cloud-native applications.”

While the team focused on the success of its initial internal customers, it also prototyped some other big data systems running atop Kubernetes. Meanwhile, the open source community was continually refining Kubernetes. One community project involved making Spark natively compatible with the Kubernetes scheduler, eliminating the overlap between what the Spark master components did and what the Kubernetes scheduler and control plane could already manage.
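To make the "native" idea concrete, here is a minimal sketch of how a job can be submitted straight to the Kubernetes API server, with no standalone Spark master in between. It assumes a Spark release that includes the native Kubernetes scheduler backend. The helper name `native_k8s_submit_args` and all example values (API server address, image, namespace, jar path) are hypothetical and are not taken from the webinar; the `spark.*` keys are standard Spark configuration properties.

```python
from typing import List


def native_k8s_submit_args(
    api_server: str,
    image: str,
    namespace: str,
    executors: int,
    app_resource: str,
) -> List[str]:
    """Build a spark-submit argument list for Spark's native Kubernetes mode.

    Hypothetical helper for illustration only. The Kubernetes API server
    plays the role of the cluster manager, so the master URL uses the
    k8s:// scheme instead of pointing at a Spark master.
    """
    if executors < 1:
        raise ValueError("executors must be at least 1")
    return [
        "spark-submit",
        # The k8s:// prefix tells Spark to talk to the Kubernetes API server.
        "--master", f"k8s://{api_server}",
        # In cluster mode the driver itself runs in a pod.
        "--deploy-mode", "cluster",
        # A namespace per team is one possible isolation boundary (assumption).
        "--conf", f"spark.kubernetes.namespace={namespace}",
        "--conf", f"spark.kubernetes.container.image={image}",
        "--conf", f"spark.executor.instances={executors}",
        # Application jar or script, e.g. a local:// path inside the image.
        app_resource,
    ]


if __name__ == "__main__":
    args = native_k8s_submit_args(
        api_server="https://k8s.example.internal:6443",
        image="example/spark:latest",
        namespace="team-a",
        executors=3,
        app_resource="local:///opt/spark/examples/jars/example.jar",
    )
    print(" ".join(args))
```

The function only assembles arguments; it does not launch anything. In this mode the driver and executors are ordinary pods placed by the Kubernetes scheduler, which is the redundancy the community project set out to remove.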

Matt and his team learned a lot from these first efforts, and that knowledge has guided and shaped their subsequent work with the Spark and Kubernetes platforms. In the Spark on Kubernetes webinar, Matt digs into some of that hard-earned knowledge. He explains in detail why:

- Distributed data processing systems are harder to schedule (in Kubernetes terminology) than stateless microservices. For example, some systems, such as ZooKeeper and Kafka, require each peer to have a unique and stable identifier.

- Kubernetes is not a silver bullet for fault tolerance. (Hint: It’s really important to understand the lifecycle of a Kubernetes pod. We wrote more about building a fault-tolerant data pipeline here.)

- It’s in everyone’s best interest for Salesforce to align its Kubernetes efforts with those of the broader Kubernetes community. As a relatively early adopter of Kubernetes, Salesforce sometimes built solutions that overlapped with ones the Kubernetes community was introducing.
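The first point, about stable identifiers, is easier to see with an example. The sketch below mirrors the naming convention Kubernetes StatefulSets use for their pods: an ordinal suffix on the pod name, plus a per-pod DNS name under a headless service. It is an illustration only. The webinar summary does not say which mechanism the team used, and the function name, set name, service name, and namespace here are hypothetical. The `cluster.local` suffix assumes the default cluster domain.

```python
from typing import List, NamedTuple


class PeerIdentity(NamedTuple):
    ordinal: int
    pod_name: str
    dns_name: str


def stable_peer_identities(
    set_name: str,
    service: str,
    namespace: str,
    replicas: int,
) -> List[PeerIdentity]:
    """Return the identities a StatefulSet-style controller would assign.

    Hypothetical helper: pods are named <set_name>-<ordinal>, and each one
    gets a DNS name under the governing headless service. The names depend
    only on the inputs, so a rescheduled pod keeps the same identity.
    """
    peers = []
    for ordinal in range(replicas):
        pod_name = f"{set_name}-{ordinal}"
        # Assumes the default cluster domain, cluster.local.
        dns_name = f"{pod_name}.{service}.{namespace}.svc.cluster.local"
        peers.append(PeerIdentity(ordinal, pod_name, dns_name))
    return peers


if __name__ == "__main__":
    for peer in stable_peer_identities("kafka", "kafka-headless", "data", 3):
        print(peer.ordinal, peer.pod_name, peer.dns_name)
```

A stateless microservice can be replaced by any interchangeable replica, whereas a broker or quorum member has to come back under the same name so its peers can find it again. That requirement is part of what makes these systems harder to schedule.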

Matt and his team are continually pushing the Spark-Kubernetes envelope. Right now, one of their challenges involves maximizing the performance of very high-throughput batch jobs running through Spark.

“We’re constantly discovering new issues running Spark at scale, solving them, and contributing those solutions to the Spark and Spark Operator projects.”

To learn more about our work with Spark on Kubernetes, watch the webinar.

And if you’re interested in working with a company that likes to push the envelope with all its software technologies, please check out our open roles in software engineering. Join us and engineer much more than software.