by Chi Wang and Scott Nyberg

In today’s data-powered world, leveraging customer data to improve AI capabilities remains key to providing highly personalized consumer experiences. In fact, 43% of customers believe AI has improved their lives, and 54% are willing to provide their anonymized data to improve AI-related products. However, more than half of customers expressed concerns about how companies use their personal information in AI development.

Salesforce’s Interactive Data Science (IDS) team tackles this complex AI trust challenge by creating cutting-edge technologies that power Salesforce Einstein AI development. This includes their trusted notebook solution — a three-phase, context-based data access control process.

Their solution reinforces trust — Salesforce’s number one value — by implementing robust privacy measures. This empowers customers with full control over their data usage and ensures responsible and secure data handling.

Additionally, the solution improves AI development efficiency — enabling Salesforce developers to provide high-quality information to customers faster than ever.

Which factors led the IDS team to innovate their trusted notebook solution?

Historically, Salesforce AI data scientists had to work through several data approval processes and permission check mechanisms that often slowed model development. These challenges stemmed from various factors.

First, Salesforce imposes a high trust benchmark for internal machine learning development with customer data. For example, Salesforce customers maintain full control over their data usage. This created a stringent and time-consuming data collection consent and approval process — incorporating legal reviews and external auditing — to ensure customer data remained protected.

Additionally, numerous AI tools used during AI development required permission control for customer data, making data scientists’ work more complex.

Seeking to help data scientists better navigate these logistical processes and speed up their AI development, IDS pioneered their trusted notebook solution.

What’s the engine that powers IDS’s trusted notebook solution?

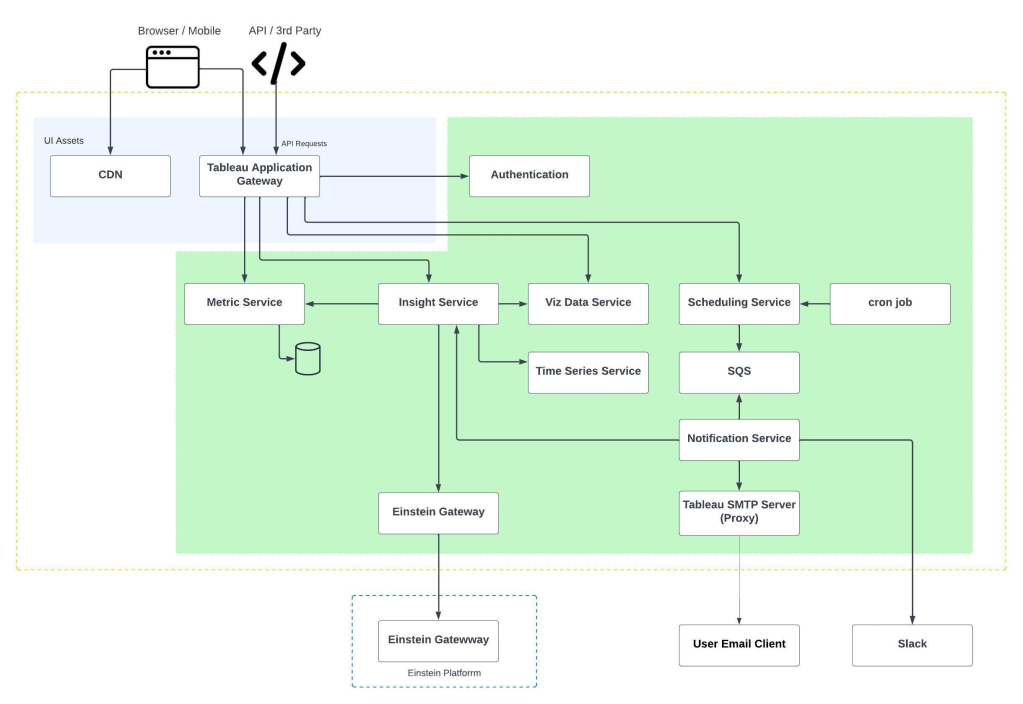

To address the challenges of acquiring customer data, the IDS team introduced a three-phase, highly simplified permission control workflow, as shown in the diagram above.

Diving deeper, here is a closer look at each phase within the illustration:

Phase 1: A data scientist (Jennie) logs into the notebook (NB) admin system using a web UI and submits a data access request (steps 1, 2). Her request specifies the purpose (for what application or what task), tenants (which customers), and data sources (what types, categories, and locations of data). Finally, a data administrator (Tian) reviews and approves Jennie’s request (step 3).
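To make Phase 1 concrete, here is a minimal sketch of what such a request might look like as a data structure. The class, field, and status names are hypothetical and chosen only for illustration; the actual request schema used by the NB admin system is not described in this post.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class RequestStatus(Enum):
    PENDING = "pending"
    APPROVED = "approved"


@dataclass
class DataAccessRequest:
    """Hypothetical shape of a Phase 1 request (all names are assumptions)."""
    requester: str
    purpose: str             # for what application or task
    tenants: List[str]       # which customers
    data_sources: List[str]  # what types, categories, and locations of data
    status: RequestStatus = RequestStatus.PENDING
    reviewed_by: Optional[str] = None

    def approve(self, admin: str) -> None:
        """Record a data administrator's approval (step 3)."""
        if self.status is not RequestStatus.PENDING:
            raise ValueError("only pending requests can be approved")
        self.status = RequestStatus.APPROVED
        self.reviewed_by = admin


# Jennie submits a request, and Tian approves it.
request = DataAccessRequest(
    requester="jennie",
    purpose="lead-scoring",
    tenants=["tenant-a"],
    data_sources=["crm/leads"],
)
request.approve(admin="tian")
```

Capturing purpose, tenants, and data sources as explicit fields is what allows the later phases to turn the approval into machine-checkable scopes.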

Phase 2: The NB admin system verifies Jennie’s data request against customer consent and provides the compute resources (normally a JupyterLab instance) that she needs for her AI development (step 4). As part of resource provisioning, an auth (JWT) token is created that encapsulates the data scopes and data access purposes.
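The consent check and token creation in Phase 2 could be sketched roughly as follows. This is an illustration under stated assumptions, not the IDS implementation: the claim names (`purpose`, `tenants`, `data_sources`), the HS256 signing algorithm, the shape of the consent record, and all function names are hypothetical. The sketch uses only the Python standard library; a production system would more likely rely on a maintained JWT library and managed keys.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWT segments require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    """Decode a base64url segment, restoring the stripped padding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def consent_covers(consent: dict, tenants, purpose: str) -> bool:
    """Assumed consent record: tenant -> set of purposes the customer allows."""
    return all(purpose in consent.get(tenant, set()) for tenant in tenants)


def mint_token(secret: bytes, subject: str, purpose: str, tenants, data_sources,
               ttl_seconds: int = 3600, now=None) -> str:
    """Create an HS256-signed JWT carrying the approved scopes."""
    now = int(time.time()) if now is None else now
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {
        "sub": subject,
        "purpose": purpose,
        "tenants": list(tenants),
        "data_sources": list(data_sources),
        "iat": now,
        "exp": now + ttl_seconds,
    }
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url(signature)


def verify_token(secret: bytes, token: str, now=None) -> dict:
    """Return the claims if the signature and expiry check out, else raise ValueError."""
    header_b64, claims_b64, signature_b64 = token.split(".")
    expected = hmac.new(
        secret, f"{header_b64}.{claims_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("invalid signature")
    claims = json.loads(_b64url_decode(claims_b64))
    now = int(time.time()) if now is None else now
    if claims["exp"] <= now:
        raise ValueError("token expired")
    return claims
```

In this sketch, a token would only be minted after `consent_covers` returns `True` for every requested tenant, so the scopes embedded in the token never exceed what customers have agreed to.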

Phase 3: When Jennie writes code to explore customer data or prototype a model algorithm in her notebook instance (provisioned at steps 4 and 5), all outbound requests from her code attach the auth (JWT) token and go through the reverse proxy hosted in the notebook admin system (steps 6, 7). The notebook admin system enforces every external interaction from Jennie’s notebook according to the data scope and service operation scope defined in the auth (JWT) token.
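The enforcement step in Phase 3 might look something like the sketch below. It assumes the proxy has already verified the token's signature and decoded its claims, that the token travels in an `Authorization: Bearer` header, and that the claims carry `tenants`, `data_sources`, and `operations` lists. All of these details, along with the function names, are assumptions made for illustration.

```python
from typing import Mapping, Optional, Tuple


def extract_bearer_token(headers: Mapping[str, str]) -> Optional[str]:
    """Pull the token out of an 'Authorization: Bearer <token>' header."""
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not token.strip():
        return None
    return token.strip()


def authorize(claims: Mapping[str, list], tenant: str, data_source: str,
              operation: str) -> Tuple[int, str]:
    """Compare one outbound request with the scopes in the decoded claims.

    Returns an HTTP-style status code and a short reason.
    """
    if tenant not in claims.get("tenants", []):
        return 403, "tenant outside approved scope"
    if data_source not in claims.get("data_sources", []):
        return 403, "data source outside approved scope"
    if operation not in claims.get("operations", []):
        return 403, "operation outside approved scope"
    return 200, "ok"


# Claims as they might appear after the proxy verifies Jennie's token.
claims = {
    "tenants": ["tenant-a"],
    "data_sources": ["crm/leads"],
    "operations": ["read"],
}
allowed = authorize(claims, "tenant-a", "crm/leads", "read")
denied = authorize(claims, "tenant-b", "crm/leads", "read")
```

Because the check runs in the proxy rather than in the notebook, code running in Jennie's notebook cannot bypass it by simply skipping a client-side check.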

Ultimately, this process generates constrained access tokens and validates them when Salesforce data scientists access customer data. Everything happens in real-time and interactively, enabling the team to maintain a high trust level without sacrificing AI development efficiency.

How does the data control process integrate with external AI platforms like AWS SageMaker?

The team’s three-phase data control process is designed to be versatile, delivering a seamless and secure experience for data scientists whether they work with Salesforce internal AI services, data storage, or external AI platforms such as AWS SageMaker.

The process involves translating the approved data and service scope, as captured in the auth (JWT) token created during step 5, into Identity and Access Management (IAM) roles and policies within an external machine learning platform.

For example, when Jennie works in SageMaker, the notebook admin system provisions three key components:

- Compute resources and services in SageMaker

- An IAM role that represents Jennie and her approved service scope

- IAM policies that authorize Jennie to work in SageMaker

The team extends its trust enforcement principles into SageMaker by aligning the access scope defined in Jennie’s project request with permission controls set up in AWS IAM. For illustration, an AWS attribute-based access control (ABAC) policy can identify Jennie’s project role and grant her access to an S3 folder, while also verifying her project application context against the resource’s application context.
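As a rough sketch of what such an ABAC policy could look like, the snippet below builds an IAM policy document as a Python dictionary. The bucket name and the tag keys (`project`, `application`) are hypothetical, and this is not the team's actual policy. It relies on standard IAM features: the `${aws:PrincipalTag/...}` policy variable and the `s3:ExistingObjectTag/...` condition key.

```python
import json


def build_abac_policy(bucket: str) -> dict:
    """Build an illustrative ABAC policy for a project-scoped S3 folder.

    Assumes the IAM role representing the data scientist is tagged with
    'project' and 'application', and that S3 objects carry an
    'application' tag (all tag names are assumptions).
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadOwnProjectFolder",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                # The folder name is resolved from the role's 'project' tag.
                "Resource": f"arn:aws:s3:::{bucket}/${{aws:PrincipalTag/project}}/*",
                "Condition": {
                    "StringEquals": {
                        # The object's application tag must match the role's.
                        "s3:ExistingObjectTag/application": "${aws:PrincipalTag/application}"
                    }
                },
            }
        ],
    }


policy = build_abac_policy("example-notebook-data")
print(json.dumps(policy, indent=2))
```

With a policy of this shape, a single document can serve many projects: access is decided by the tags on the role and the object rather than by a separate policy for each data scientist.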

Through the power of their trusted notebook solution, Salesforce’s IDS team streamlines data access controls for Salesforce internal AI systems and synergizes them with external AI platforms. This empowers data scientists to deliver high-quality information to customers with increased speed while effectively safeguarding customer data. This innovation marks a major advancement in improving customer experiences through AI.
