Introduction

Spinnaker has been gaining popularity as a Continuous Deployment (CD) solution. It offers many useful features for deployment pipelines, including access permission control and both automatic and manually gated promotion from one phase to the next. That said, if you are operating a complex legacy CI/CD system, what do you need to consider before migrating to Spinnaker? How can you make sure the process is smooth for all stakeholders?

This post is a recap of my presentation at Spinnaker Summit 2020 in which I discussed the challenges we encountered in our legacy deployment orchestration system and how we migrated to Spinnaker.

Background

We (the build and release team) previously used TeamCity as both the CI and CD solution for many of our platform services. However, as our build and release activities expanded over the years, the challenges facing CI/CD became more complex.

For example, TeamCity was able to access production environments, which violated the principle of least privilege. Build and deployment projects were configured separately to accommodate growing engineering activity, making it difficult to enforce consistent permission and deployment policies. When it came to release management, gating deployments from dev to staging to production required constant monitoring, manual review, and approval. As a result, improving deployment orchestration became a much higher priority.

Before

After

- TeamCity remains a CI tool while Spinnaker manages CD.

How

Once we decided to migrate our legacy CI/CD to Spinnaker, the question became, “Where to start?!” We went through a few phases to ensure a smooth transition for all stakeholders, including developers, SREs, quality engineers, performance engineers, and project managers. These phases were Spinnaker installation, preparing deployment configuration changes, and constructing new deployment pipelines.

Spinnaker Installation Key Features

The core features of Spinnaker, including Application Management, Application Deployment, Pipelines and Kubernetes Provider, are configured by infrastructure engineering.

In addition, we added authorization (Authz) with Role Based Access Control (RBAC). This feature restricts access to applications, pipelines, and accounts, and provides custom permissions for automatic and manual pipeline triggers. One key reason we adopted this feature was to fulfill our security requirements.

To meet our change control requirements, we added Pipeline as Code as well. This feature allows deployment pipeline configuration to be defined in GitHub. As a result, pipeline changes require pull request reviews and validation. In addition, we are able to restrict who can apply a pipeline code change.

Preparation

Given that many deployment configurations were operating in TeamCity for years, how did we move them over to Spinnaker while minimizing interruption?

First, we identified all active deployment jobs in TeamCity. TeamCity held deployment configurations for 20+ applications/services across 5+ environments, including dev, staging, performance, and production. Among the deployment jobs, we looked for configurations whose build steps called Helm commands. From these configurations, we identified the Helm chart paths for the services to deploy. These same Helm charts are now used by Spinnaker.
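As a rough illustration of that discovery step, the sketch below scans the text of a build-step script for `helm install` / `helm upgrade` commands and collects the chart argument. It is not the tooling we used: the function names are hypothetical, it assumes Helm 3 argument order (release name first, chart second), and the list of value-taking flags is illustrative rather than complete.

```python
import shlex
from typing import List, Optional

# Helm flags that consume the following token as their value, so that value
# is not mistaken for a positional argument. Illustrative, not exhaustive.
FLAGS_WITH_VALUE = {
    "-n", "--namespace", "-f", "--values",
    "--set", "--version", "--kube-context",
}


def chart_path(command: str) -> Optional[str]:
    """Return the chart argument of a `helm install`/`helm upgrade` command.

    Assumes Helm 3 syntax: `helm upgrade [RELEASE] [CHART] [flags]`.
    Returns None for anything that is not such a command.
    """
    try:
        tokens = shlex.split(command)
    except ValueError:
        # Unbalanced quotes or similar: treat the line as not parseable.
        return None
    if len(tokens) < 2 or tokens[0] != "helm":
        return None
    if tokens[1] not in {"install", "upgrade"}:
        return None

    positional = []
    skip_next = False
    for token in tokens[2:]:
        if skip_next:
            skip_next = False          # this token is a flag's value
        elif token in FLAGS_WITH_VALUE:
            skip_next = True
        elif not token.startswith("-"):
            positional.append(token)   # release name, then chart
    return positional[1] if len(positional) >= 2 else None


def chart_paths(script: str) -> List[str]:
    """Collect the distinct chart paths used in a multi-line build script."""
    found = set()
    for line in script.splitlines():
        path = chart_path(line.strip())
        if path:
            found.add(path)
    return sorted(found)
```

Running `chart_paths` over the script text of each deployment job would yield a per-service list of chart paths to carry over. Scripts that build the Helm command from variables would still need manual review.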

Once we had a solid understanding of the actual TeamCity configurations we needed to migrate, the next step was a very important aspect of migration: communicating and collaborating with all stakeholders.

We presented a proposal to all stakeholders about changes in architecture, policies, and process and engaged stakeholders about detailed configuration changes and expectations. We emphasized the following key points in communications:

- Standardization: The Build and Release team owns pipeline templates, making it easier to support the organization, onboard new services, and audit.

- Opinionated Permissions: We provide well-defined user groups and access control lists (ACLs) by configuring opinionated permissions.

- Pipelines as Code: Changes to configurations are reviewed through the Pull Request process.

- Separate CI & CD: Use TeamCity for build & test only; remove the ability to deploy. CD is only managed in Spinnaker, drastically reducing the chances of an unintended change against production.

- Automate Releases: We integrate End-to-End tests into pipelines, coupled with rollbacks and issue tracking integration.

Construction

Once the migration proposal had gone through discussion, feedback, and revision, we were ready to construct our matching deployment pipelines in Spinnaker. Pipelines in Spinnaker must meet certain conditions.

For each active application/service in production, pipelines are constructed from standardized templates. They must carry forward and enforce the same release process as before migration. The move to Spinnaker made this much easier to enforce.

Generally speaking, pipeline triggers are implemented as follows:

- Dev pipelines auto-deploy when a new dev build is published.

- Staging pipelines trigger if dev deployment tests pass.

- Prod pipeline trigger conditions include passing staging deployment tests and manual judgment by a release manager or other authorized personnel.
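The trigger rules above can be summarized as a small decision function. This is only a sketch of the logic, not Spinnaker configuration: in Spinnaker these conditions are expressed through pipeline triggers and a Manual Judgment stage, and the `ReleaseState` type and `should_trigger` function here are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReleaseState:
    """Facts about a release candidate that the gates depend on."""
    dev_build_published: bool = False
    dev_tests_passed: bool = False
    staging_tests_passed: bool = False
    manual_judgment_approved: bool = False


def should_trigger(environment: str, state: ReleaseState) -> bool:
    """Return True if the pipeline for `environment` is allowed to run."""
    if environment == "dev":
        # Dev deploys automatically on every published dev build.
        return state.dev_build_published
    if environment == "staging":
        # Staging is gated on the dev deployment tests.
        return state.dev_tests_passed
    if environment == "prod":
        # Prod needs both passing staging tests and a human approval.
        return state.staging_tests_passed and state.manual_judgment_approved
    raise ValueError(f"unknown environment: {environment!r}")
```

For example, `should_trigger("prod", ReleaseState(staging_tests_passed=True))` is `False` until a release manager's approval is also recorded.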

To enforce pipeline change control, we configured Pipeline as Code and the Dinghy service. Pipeline code is defined in GitHub. New pipeline commits automatically apply changes to Spinnaker pipelines. To safeguard pipeline code, we enabled Pull Request (PR) review with validation tests. Only authorized personnel are allowed to approve and merge PRs.
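The exact validation tests are beyond the scope of this post, but as an illustration of the kind of check a PR could run, the sketch below verifies that a pipeline definition's stage graph is internally consistent. It assumes the pipeline has already been rendered to a Python dict and that stages carry `refId` and `requisiteStageRefIds` fields as in Spinnaker pipeline JSON; the `validate_pipeline` function is a hypothetical helper, not part of Spinnaker or Dinghy.

```python
from typing import Dict, List


def validate_pipeline(pipeline: Dict) -> List[str]:
    """Return a list of human-readable problems; an empty list means OK.

    Checks that every stage has a refId, that refIds are unique, and that
    every dependency points at a stage that exists in the same pipeline.
    """
    errors = []
    stages = pipeline.get("stages", [])

    seen = set()
    for index, stage in enumerate(stages):
        ref_id = stage.get("refId")
        if ref_id is None:
            errors.append(f"stage #{index} has no refId")
        elif ref_id in seen:
            errors.append(f"duplicate refId: {ref_id}")
        else:
            seen.add(ref_id)

    for stage in stages:
        for dependency in stage.get("requisiteStageRefIds", []):
            if dependency not in seen:
                errors.append(
                    f"stage {stage.get('refId')} depends on "
                    f"unknown refId: {dependency}"
                )
    return errors
```

A CI job on the pipeline repository could fail the PR whenever this function returns a non-empty list.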

To help standardize pipelines, we defined common pipeline stage modules including Bake, Create Namespace, Deploy Manifest, Restricted Window, Integration Tests. These modules can be shared between applications and pipelines.

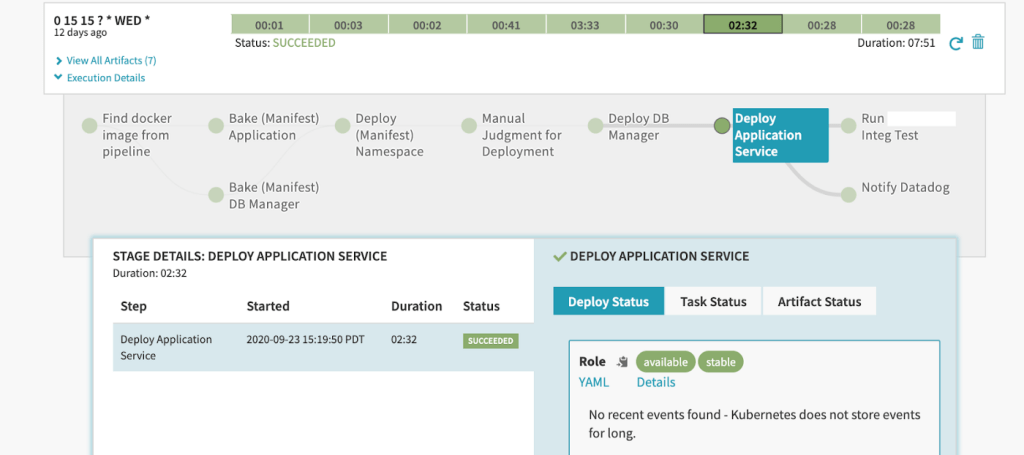

Here is an example of a typical deployment pipeline. It includes common stages such as Bake, Manual Judgment, Deploy Manifest, Post deployment testing, and Datadog notification. These stages are generally similar among pipelines except for pipeline-specific artifacts and accounts.
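To show how shared stage modules keep pipelines similar while only artifacts and accounts vary, here is a minimal sketch that composes a pipeline from reusable stage builders. Our actual modules are defined as pipeline code applied through Dinghy, not Python. The helper names are hypothetical, and the field names (`refId`, `requisiteStageRefIds`, `type`, `account`) and stage types (`bakeManifest`, `manualJudgment`, `deployManifest`) follow Spinnaker pipeline JSON as I understand it, so treat them as assumptions to verify against your Spinnaker version.

```python
import json
from typing import Dict, Iterable


def stage(ref_id: str, name: str, stage_type: str,
          depends_on: Iterable[str] = (), **extra) -> Dict:
    """Build one stage dict; `extra` carries stage-specific fields."""
    return {
        "refId": ref_id,
        "name": name,
        "type": stage_type,
        "requisiteStageRefIds": list(depends_on),
        **extra,
    }


# Reusable "modules": each returns a stage with its defaults filled in.
def bake(ref_id: str) -> Dict:
    return stage(ref_id, "Bake", "bakeManifest")


def manual_judgment(ref_id: str, depends_on: Iterable[str]) -> Dict:
    return stage(ref_id, "Manual Judgment", "manualJudgment", depends_on)


def deploy_manifest(ref_id: str, depends_on: Iterable[str],
                    account: str) -> Dict:
    # The account is the pipeline-specific part of this module.
    return stage(ref_id, "Deploy Manifest", "deployManifest",
                 depends_on, account=account)


def build_pipeline(application: str, account: str) -> Dict:
    """Compose the shared modules into one deployment pipeline."""
    return {
        "application": application,
        "name": f"deploy-{account}",
        "stages": [
            bake("1"),
            manual_judgment("2", ["1"]),
            deploy_manifest("3", ["2"], account),
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_pipeline("my-service", "staging"), indent=2))
```

Because every pipeline is produced by the same builders, a fix to one module (for example, a new default on the deploy stage) reaches all pipelines that use it.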

Next, we coordinated moving deployment jobs from TeamCity to Spinnaker. Our general approach was to start by testing a Spinnaker pipeline. Once that worked, we disabled its corresponding deployment job in TeamCity and informed the service dev team about the pipeline update. We started with a pilot program covering a few services. Once the pilot program succeeded, we implemented Spinnaker pipelines for the rest of the services under our purview. During the migration, we announced our progress to all stakeholders on a regular basis.

While working on pipeline code, we noticed varied complexity due to service dependencies and requirements. Therefore, we had to revisit and improve our shareable modules to allow easier module re-use.

While communicating progress to all dev teams, we also gave them instructions on how pipelines work in Spinnaker compared to TeamCity. In particular, we highlighted the benefits offered by Spinnaker deployments, including an improved release cadence, streamlined processes for ad hoc and hotfix releases, automatic post-deployment validation tests, and protection against the unexpected release failures caused by the lack of change control in legacy CI/CD. As a result, we are able to achieve more reliable and repeatable deployments.

Conclusion

The main goal of this journey, which was a success, was to separate CI and CD. TeamCity remains the CI tool while CD is managed by Spinnaker. This allows for easier enforcement of deployment security policies, better change control, and standardized pipelines, all of which streamline the release process for both scheduled and unscheduled deployments.