In our “Engineering Energizers” Q&A series, we highlight the engineering minds driving innovation across Salesforce. Today we feature Zak Zimmerman, Director of Software Engineering, leading the Data Cloud Performance Engineering team. Zak has transformed the way his team analyzes large-scale performance data, responds to critical incidents, and builds automation systems using AI-powered development tools.

Learn how they tackled the challenge of keeping up with customers who process trillions of data points, leveraged MCP (Model Context Protocol) with Cursor to pinpoint issues in vast datasets, and significantly boosted productivity during critical incidents where every second matters for mission-critical applications.

What is your team’s mission?

The team serves as the guardians of performance, scalability, and reliability within Data Cloud. The mission is to act as the last line of defense, ensuring that everything operates efficiently at scale before it reaches our customers. This role is critical, as the team integrates with every aspect of Data Cloud — from data ingestion to internal processing to data extraction. Our job is to provide both internal feature delivery teams and developers with the confidence they need in the performance of what they’re building, while also assessing the system’s ability to handle specific volumes of data.

Think of it like buying a car and seeing the “miles per gallon” rating. This is a benchmark that everyone understands, but the raw details are influenced by various factors such as fuel type, car size, acceleration patterns, wind conditions, and weather. Similarly, performance engineering in Data Cloud involves more than just rows and bytes. There are numerous nuances to consider, such as how often data updates, whether customers are using formula fields, and the data types they work with, like CSVs versus Parquet files, as well as the compression status of the data. Understanding these complexities requires a blend of science, engineering, and experimentation, and that’s where our team steps in to ensure everything runs smoothly and efficiently.

What analysis bottlenecks consumed hours during extreme Data Cloud load testing, and how did AI transform performance data processing?

The challenge is staying ahead of customers who are pulling in trillions, maybe even quadrillions, of data points very quickly, each with different patterns. When a single team has to keep pace with the entire world using Data Cloud, emulating what customers do becomes incredibly complex.

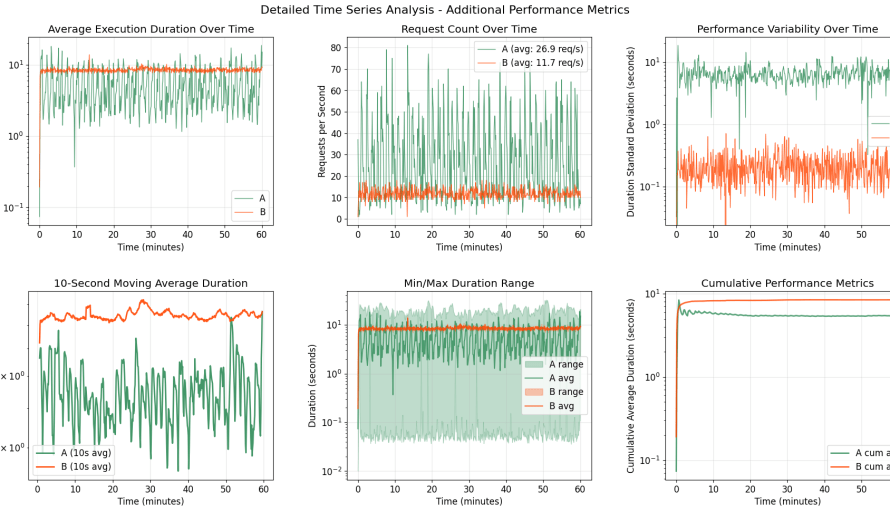

To address this, we conduct monthly exercises called “BLAST” (Big Load and Stress Test). During a two-hour window, our goal is to push Data Cloud to its limits until it tips over in controlled test environments. With millions of events to sift through and needle-in-a-haystack performance problems to find, manually combining and dissecting all that data was overwhelming.

AI has significantly cut down the time we spend building important visualizations for the data we collect and the experiments we run. We can ask Cursor to inspect our data and help identify patterns. AI assists us in creating Jupyter notebooks to display our findings, and when we need to analyze millions of events to come up with action items, AI helps us figure out potential solutions for the next iteration.

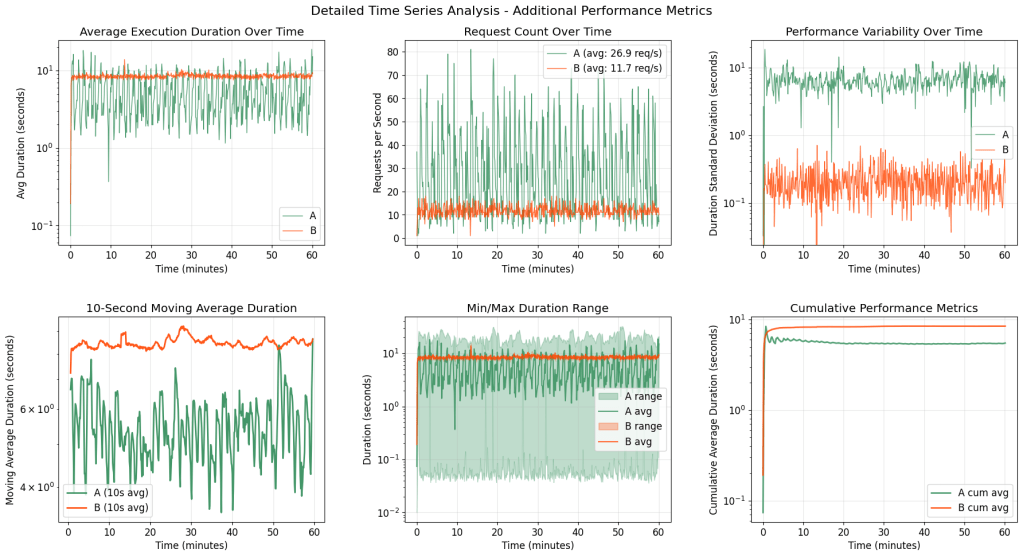

Cursor-generated Jupyter Notebook Visualizations against performance metrics (A vs. B).
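As a rough illustration of the kind of A vs. B comparison such a notebook might contain, here is a minimal Python sketch. It is not the team's actual notebook code: the function names (`summarize`, `compare_runs`) and the latency samples are invented for this example, and it assumes each run is simply a list of latencies in milliseconds.

```python
from statistics import median, quantiles

def summarize(latencies_ms):
    """Return median (p50) and 95th-percentile (p95) latency for one run."""
    # quantiles(n=20) yields 19 cut points; the last one is the 95th percentile.
    # method="inclusive" interpolates within the observed range of the data.
    p95 = quantiles(latencies_ms, n=20, method="inclusive")[-1]
    return {"p50": median(latencies_ms), "p95": p95}

def compare_runs(run_a, run_b):
    """Percent change from run A to run B for each summary statistic."""
    a, b = summarize(run_a), summarize(run_b)
    return {key: round(100.0 * (b[key] - a[key]) / a[key], 1) for key in a}

# Hypothetical latency samples in milliseconds, invented for illustration.
run_a = [100, 110, 105, 120, 115, 108, 112, 400, 109, 111]
run_b = [102, 111, 107, 119, 118, 110, 113, 820, 108, 114]

change = compare_runs(run_a, run_b)
for stat, pct in change.items():
    print(f"{stat}: {pct:+.1f}% from A to B")
```

With these made-up samples the median barely moves while the tail latency grows sharply, which is the sort of signal that points at a small set of slow queries rather than a system-wide slowdown.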

The system scales up programmatically, so the team needs automation to emulate customer patterns. AI helps us both define these patterns and analyze the resulting performance data efficiently, ensuring we can keep up with the demands of our customers and maintain the highest levels of performance and reliability.

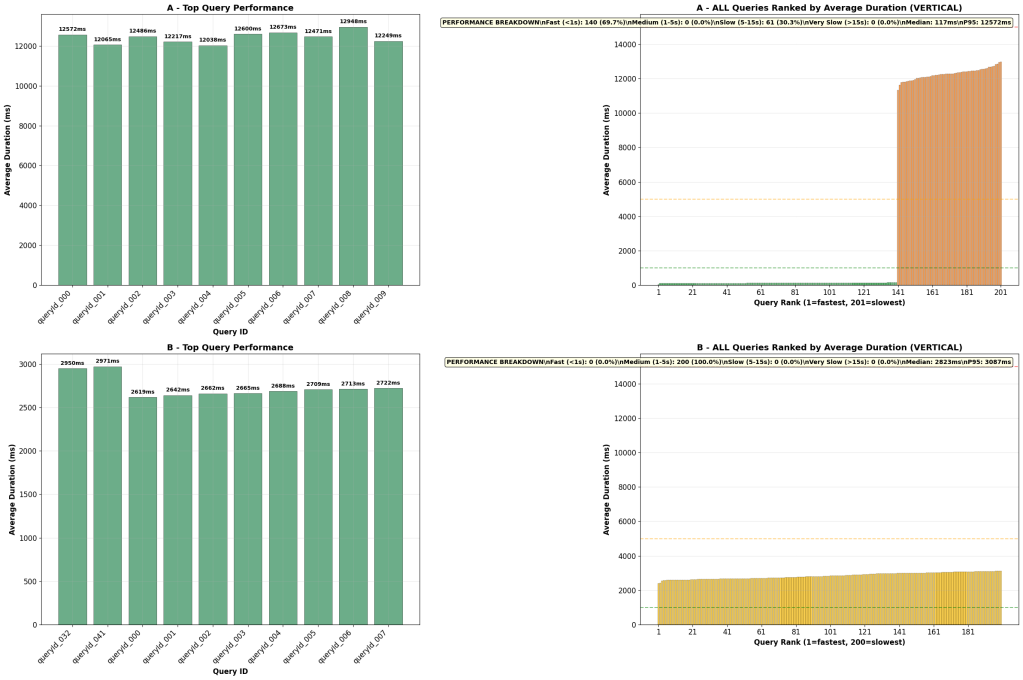

Cursor-generated performance analysis recommendation showcasing a consistent pattern with only certain queries.

What productivity barriers slowed Data Cloud performance pattern investigation?

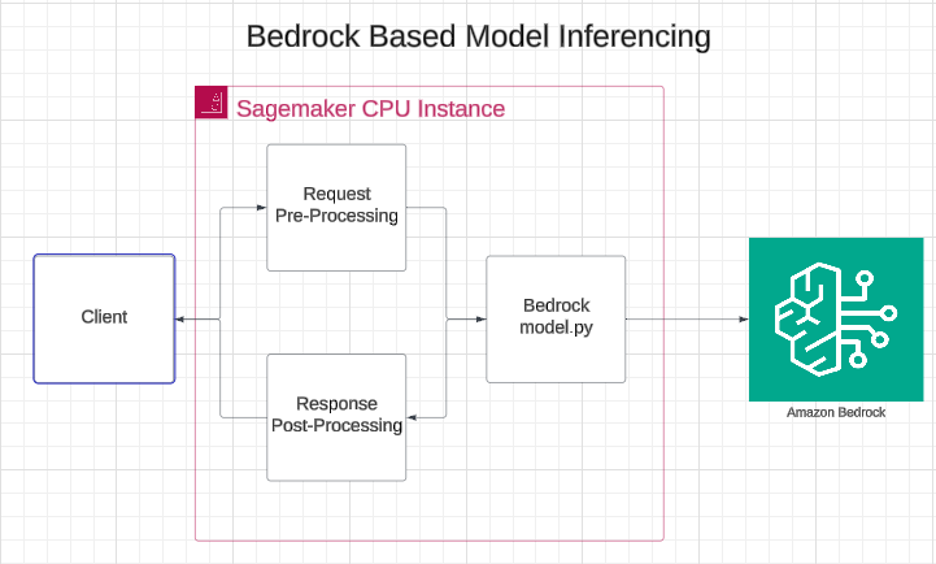

Engineers handle millions of events per second, including logs, usage metrics (metadata about customer activities), and Argus telemetry about machine CPU utilization. The sheer volume of data is overwhelming and is a common challenge in managing a platform as complex as Data Cloud. Performance engineers need to extract valuable insights about customer usage patterns and determine if performance is regressing.

The breakthrough came with the implementation of MCP (Model Context Protocol) using Cursor. This allows engineers to set up internal MCP servers that can access internal data. Performance specialists can now use human language to ask questions like, “Which customer is doing the largest thing?” In the past, engineers had to manually find tables, read metadata to understand what metrics meant, build queries, and then create dashboards.

Now, engineers can simply ask human questions. The system has context about the data and metadata that describe what the data means. Instead of engineers struggling through manual processes, AI and performance teams can iterate on investigations using natural language, and the system can reason through requests rather than just providing point answers.

This has saved engineering teams a tremendous amount of time. Analysis is now 3-4 times faster because engineers no longer have to repeatedly establish context and navigate multiple systems manually.
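To make the idea of a tool with "context about the data and metadata" more concrete, here is a minimal sketch in Python. It does not use the MCP SDK and is not how the team's internal MCP servers are built. It only shows the shape of a function that an MCP server could plausibly expose as a tool. The catalog, the `usage_daily` table, the `top_tenants` function, and all rows are hypothetical, with an in-memory SQLite database standing in for an internal usage store.

```python
import sqlite3

# Hypothetical metric catalog: metadata that tells a tool what each column means.
METRIC_CATALOG = {
    "rows_ingested": "Rows written by ingestion jobs, per tenant per day",
    "query_seconds": "Total query execution time in seconds, per tenant per day",
}

def top_tenants(conn, metric, limit=3):
    """Return (tenant, total) pairs for the tenants with the highest total of `metric`."""
    if metric not in METRIC_CATALOG:
        # Only cataloged column names are ever interpolated into the SQL text.
        raise ValueError(f"unknown metric {metric!r}; known: {sorted(METRIC_CATALOG)}")
    sql = (
        f"SELECT tenant, SUM({metric}) AS total FROM usage_daily "
        "GROUP BY tenant ORDER BY total DESC LIMIT ?"
    )
    return conn.execute(sql, (limit,)).fetchall()

# In-memory table with invented rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE usage_daily (tenant TEXT, rows_ingested INTEGER, query_seconds REAL)"
)
conn.executemany(
    "INSERT INTO usage_daily VALUES (?, ?, ?)",
    [
        ("acme", 5_000, 12.5),
        ("globex", 90_000, 3.0),
        ("acme", 7_000, 8.0),
        ("initech", 20_000, 40.0),
    ],
)

result = top_tenants(conn, "rows_ingested", limit=2)
print(result)
```

The design point is that the catalog carries the meaning of each metric, so an assistant can map a vague question such as "who is doing the largest thing?" onto a specific, validated metric instead of guessing at table and column names.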

Zak shares what keeps him at Salesforce.

What incident response challenges did AI solve?

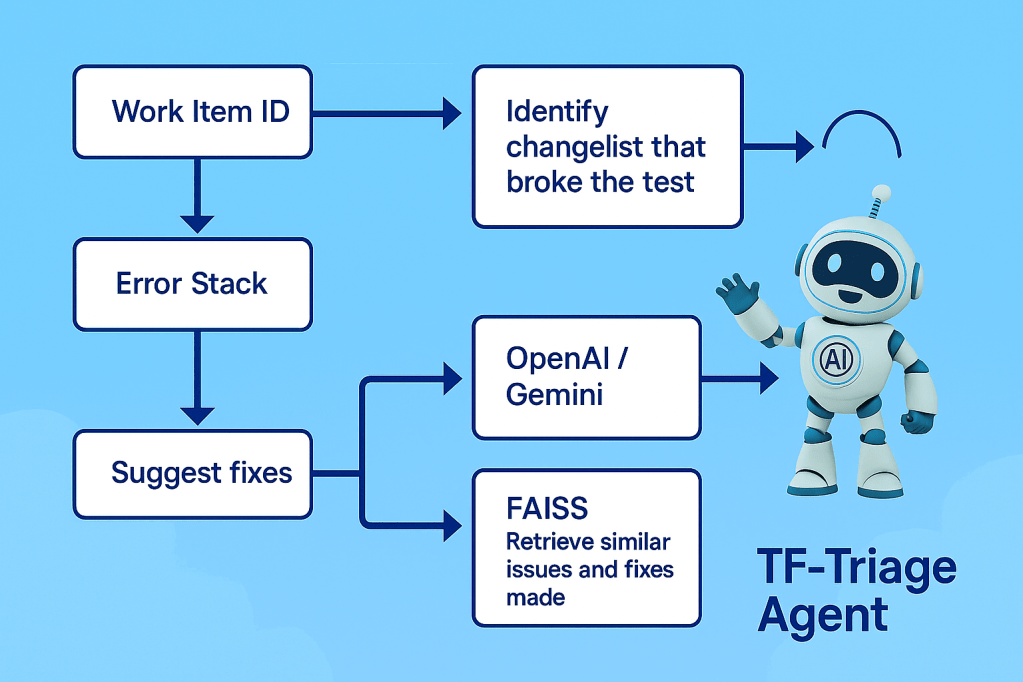

During live site incidents, every second is critical. Engineers are often on calls with multiple team members, sharing screens and findings, which can be incredibly stressful and challenging. The system is so extensive that finding a specific issue can feel like searching for a needle in a haystack, especially when time is against them. Data Cloud manages mission-critical, time-sensitive applications, such as vehicle emergency contact systems, where immediate response is essential for customer safety. If the systems go down, every second counts.

The technical challenge is compounded by the need to work with multiple query languages. Splunk has its own language and context, Argus uses a different query language for server telemetry, and usage metadata requires SQL expertise. Translating between these languages while trying to diagnose issues can be extremely stressful and time-consuming.

AI has revolutionized this process by helping engineers build complex, context-aware queries to quickly identify the root of problems. Engineers use AI daily to construct these queries during customer incidents, which has dramatically improved their productivity. Query building time is reduced by up to 90%, and engineers are now 2x faster, if not more, during incidents. The team maintains Cursor repositories specifically designed for these scenarios, complete with rules and pre-existing queries that enable rapid iteration and problem-solving.

Zak explains how Cursor has improved his team’s productivity.

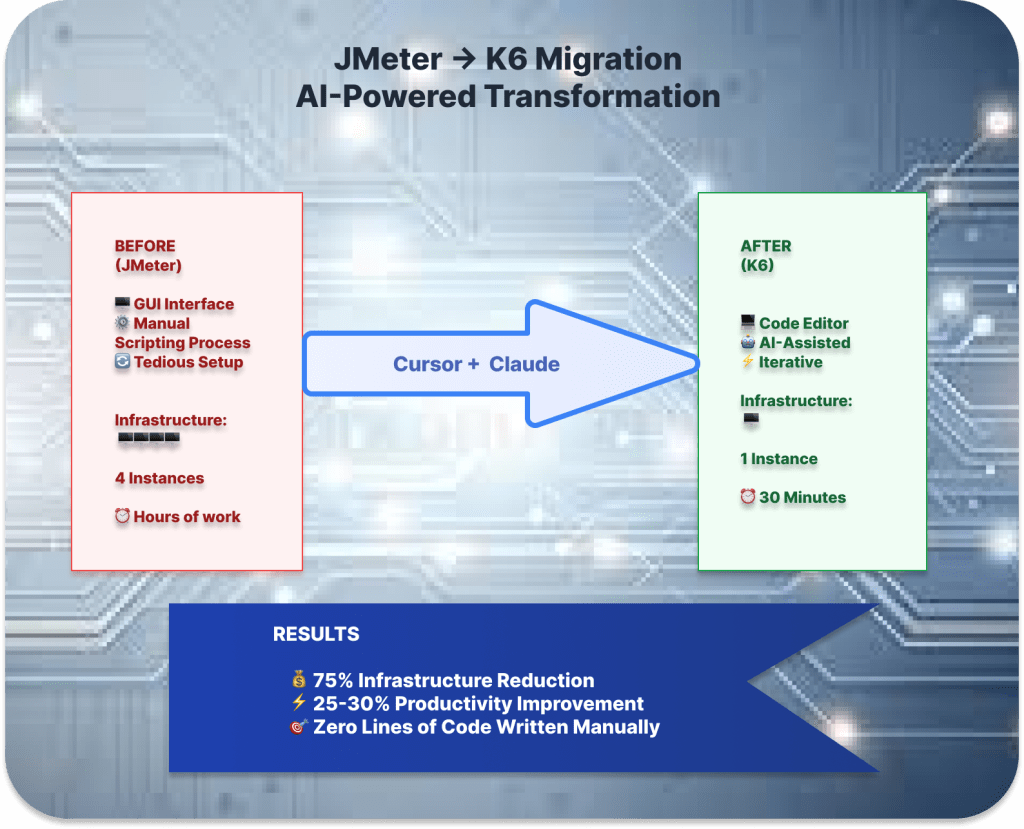

What automation development constraints limited performance monitoring systems, and how did AI overcome these barriers?

The team faces limitations due to the physical constraints of manpower and daily capacity. As Data Cloud’s platform grows exponentially, the team’s responsibilities expand organically. They need to continuously push the boundaries to achieve higher, better, faster, and stronger outcomes. It’s not just about implementing new features; it’s also about ensuring these features scale and integrate seamlessly into the Data Cloud ecosystem.

To reclaim valuable time, the team heavily invests in automation. This automation is crucial for pushing the system to its limits, configuring Data Cloud to align with customer needs, generating load, and analyzing results. Without high-quality code-driven automation, the team would struggle to scale effectively.

Additionally, the team maintains robust regression monitoring systems, which provide a continuous performance check for Data Cloud. They monitor this “heartbeat” daily to ensure that Data Cloud performs and scales appropriately, even under synthetic workloads.
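A daily "heartbeat" check of this kind can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the team's monitoring system: the `is_regression` function, the three-sigma threshold, and the baseline numbers are all invented here, and the metric is assumed to be a daily p95 latency where higher is worse.

```python
from statistics import mean, stdev

def is_regression(history, today, sigmas=3.0):
    """Flag `today` if it exceeds the historical mean by more than `sigmas` standard deviations."""
    if len(history) < 2:
        raise ValueError("need at least two historical points to estimate spread")
    threshold = mean(history) + sigmas * stdev(history)
    return today > threshold

# Hypothetical daily p95 latencies (ms) from a synthetic workload, invented for illustration.
baseline = [210, 205, 215, 208, 212, 207, 211]

for value in (214, 240):
    status = "REGRESSION" if is_regression(baseline, value) else "ok"
    print(f"today={value} ms -> {status}")
```

A simple mean-plus-deviation threshold like this is easy to reason about. A real system would likely also need to account for trends, seasonality, and noisy test environments.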

Cursor plays a significant role in all of these automation efforts. The team uses Cursor to write detailed analysis pieces, speed up automation development, create APIs, and develop notebooks for performance result analysis. The challenge lies in maintaining high standards of quality, as the team must meet stringent performance and scale requirements, not just basic functionality.
