By Karunakar Komirishetty, Bhumika Sethi, and Shiva Rama Pranav Joolaganti.

In our Engineering Energizers Q&A series, we highlight the engineering minds driving innovation across Salesforce. Today, we feature Bhumika Sethi, a software engineer on Data 360’s Personalization team, which delivers real-time, large-scale personalization by unifying customer profiles, engagement signals, and metadata across Salesforce, including intent-based recommendations for Agentforce.

Explore how the team brought personalization into Agentforce: using AI to transform free-form conversations into structured user intent, solving cold-start relevance through semantic catalog modeling, and re-architecting hybrid machine learning systems to fuse real-time intent with behavioral signals. The work draws on Agentforce actions, Data 360 real-time ingestion, and deep learning models operating at platform scale.

What is your team’s mission as it relates to building intent-based recommendations for Agentforce?

The Data 360 Personalization team focuses on delivering personalization capabilities, ensuring real-time, relevant recommendations across Salesforce experiences. We unify customer profiles, engagement signals, and metadata at scale, creating the essential foundation for providing personalized recommendations within dynamic environments such as Agentforce.

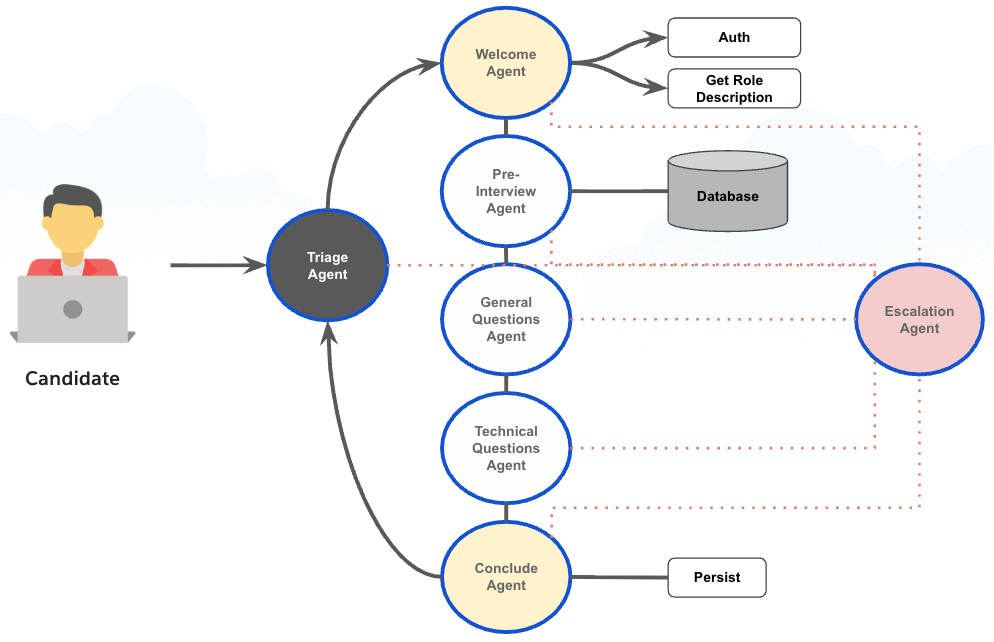

In agentic conversations, personalization based only on past engagement behavior cannot produce recommendations that are relevant to the user’s specific query. Unless the system accounts for the user’s conversational intent, the experience suffers. Intent-based recommendations aim to improve this experience by grounding each recommendation in what the user is actually asking for.

As Agentforce and autonomous agents advance, personalization moves from a mere enhancement to a core requirement. An agent that only retrieves information, without understanding user context, preferences, or intent, simply cannot deliver meaningful outcomes. The team’s mission is to ensure agents understand the user context and provide personalized responses in real time, considering:

- Who the user is

- What they are trying to accomplish

- How their intent evolves during a conversation

To support this, the team treats conversational intent as a primary engagement signal. Unlike traditional web personalization, which often relies on clicks and page views, conversational interfaces depend on what users say. By engineering systems that extract intent directly from conversations and combining it with existing engagement data, the team empowers Agentforce to deliver personalized recommendations that remain relevant throughout an interaction.

What architectural constraints shaped the effort to adapt web-based personalization systems for Agentforce?

Existing recommendation systems were originally designed for web experiences, where implicit signals such as clicks and views typically drive personalization. Agentforce introduced a fundamentally different interaction model, one that requires the system to interpret explicit, real-time user intent directly from conversational text while maintaining low latency.

One significant challenge involved integrating unstructured conversational input into a system originally optimized for structured engagement data, without adding latency. The architecture also needed to balance immediate conversational intent against long-term behavioral history, so that what the user asks for in the moment carries more weight than past interactions while overall personalization is preserved.

Another constraint arose from the variability across customer datasets. The system needed to function reliably across organizations with vastly different data volumes, including those with little to no historical engagement data. To address this, the team designed a flexible architecture that operates at scale and handles both high-volume environments and cold-start scenarios without compromising recommendation quality.

What challenges did you face extracting reliable user intent from free-form agent conversations, and how did you solve them?

Free-form conversation introduces a level of ambiguity that structured data systems are not naturally equipped to handle. Users often express their intent across multiple turns, use informal language, or rely on implicit context. This makes it difficult to determine what they are actually trying to accomplish at any given moment.

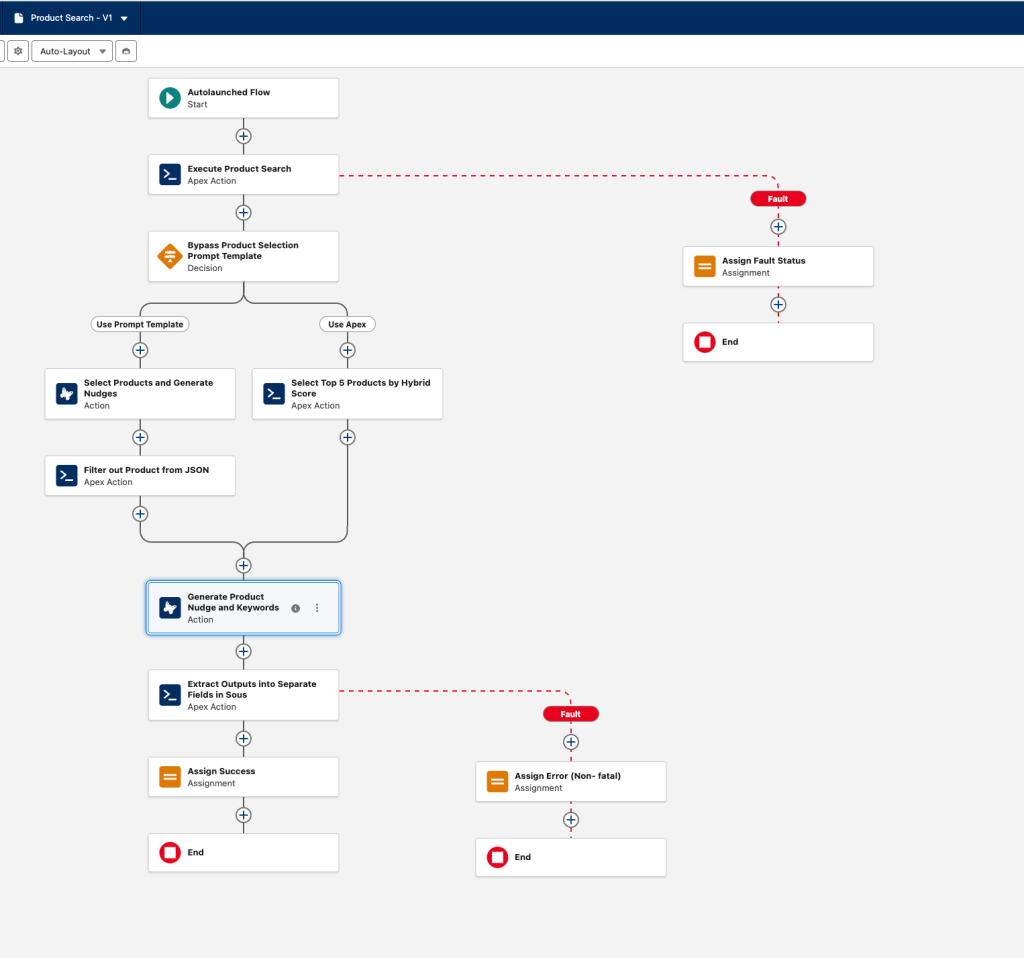

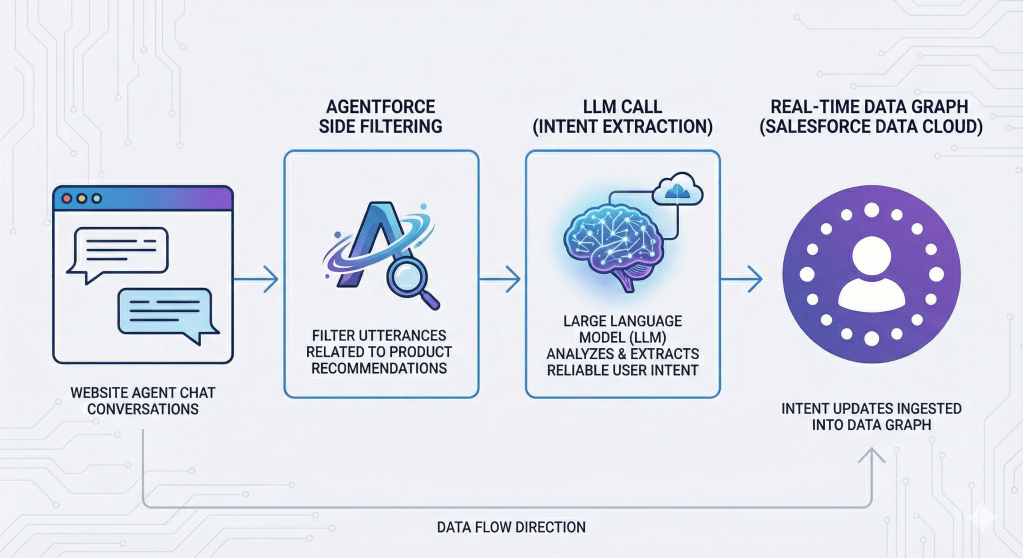

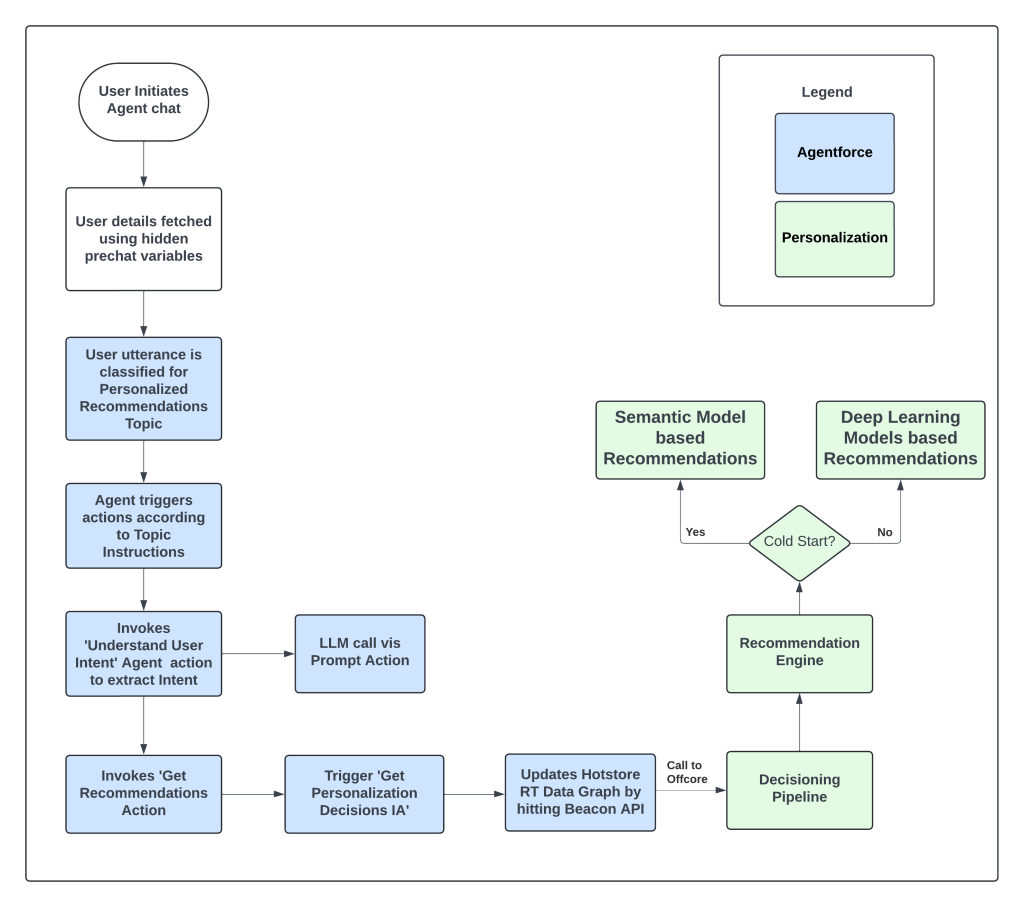

To address this, the team developed a dedicated Agentforce action called Understand User Intent. This action scans the conversation history, filters out noise, and identifies the utterances most relevant to the user’s intent. Rather than passing raw text downstream, the action uses large language models (LLMs) to extract concise, structured intent signals and emits them as standardized JSON that the real-time personalization layer can consume.

This approach also bridges vocabulary and language gaps. By mapping user language to catalog terminology and supporting multiple languages, the system ensures intent extraction remains consistent across global use cases. Treating intent as structured data allows it to flow through existing personalization pipelines alongside other engagement signals without disrupting latency or reliability.
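To make the flow concrete, here is a minimal sketch of the three steps described above: filtering noisy turns, asking an LLM for structured intent, and normalizing the result onto catalog vocabulary before emitting JSON. It is illustrative only. The `IntentSignal` schema, the regex noise filter, the `CATALOG_SYNONYMS` lookup table (a simple stand-in for the LLM-based vocabulary mapping), and all function names are hypothetical, and the LLM is injected as a plain function so no real model API is assumed.

```python
import json
import re
from dataclasses import asdict, dataclass, field
from typing import Callable, Dict, List

# Hypothetical intent schema; field names are illustrative assumptions,
# not the actual output format of the Understand User Intent action.
@dataclass
class IntentSignal:
    category: str
    keywords: List[str] = field(default_factory=list)
    language: str = "en"

# Crude noise filter (assumption): drop greetings and turns of two words or fewer.
NOISE = re.compile(r"^(hi|hello|thanks|thank you|ok|okay)\b", re.IGNORECASE)

# Hypothetical synonym table mapping user phrasing (any language) to catalog terms.
# A lookup table stands in here for the LLM-based mapping described in the text.
CATALOG_SYNONYMS: Dict[str, str] = {
    "sneakers": "athletic shoes",
    "trainers": "athletic shoes",
    "zapatillas": "athletic shoes",  # Spanish
    "turnschuhe": "athletic shoes",  # German
}

def relevant_utterances(history: List[Dict[str, str]], max_turns: int = 3) -> List[str]:
    """Keep the most recent user turns that look substantive."""
    user_turns = [t["text"].strip() for t in history if t["role"] == "user"]
    kept = [u for u in user_turns if not NOISE.match(u) and len(u.split()) > 2]
    return kept[-max_turns:]

def to_catalog_terms(keywords: List[str]) -> List[str]:
    """Map keywords onto catalog vocabulary, de-duplicating while preserving order."""
    mapped: List[str] = []
    for kw in keywords:
        norm = kw.strip().lower()
        term = CATALOG_SYNONYMS.get(norm, norm)  # unknown terms pass through unchanged
        if term not in mapped:
            mapped.append(term)
    return mapped

def extract_intent(history: List[Dict[str, str]], llm: Callable[[str], str]) -> str:
    """Ask an LLM (injected as a plain function) for intent; return validated JSON."""
    prompt = (
        "Return JSON with keys category, keywords, language describing what "
        "the user wants:\n" + "\n".join(relevant_utterances(history))
    )
    raw = json.loads(llm(prompt))
    signal = IntentSignal(
        category=str(raw["category"]),
        keywords=to_catalog_terms([str(k) for k in raw.get("keywords", [])]),
        language=str(raw.get("language", "en")),
    )
    return json.dumps(asdict(signal), sort_keys=True)
```

With a stub LLM that returns `{"category": "running shoes", "keywords": ["Trainers", "waterproof", "sneakers"]}`, the emitted JSON carries the catalog term `athletic shoes` once instead of two user-specific synonyms, which is the kind of normalization that lets intent flow through existing pipelines as ordinary structured data.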

Intent Extraction Steps.

How did you address cold-start scenarios when no historical engagement data was available?

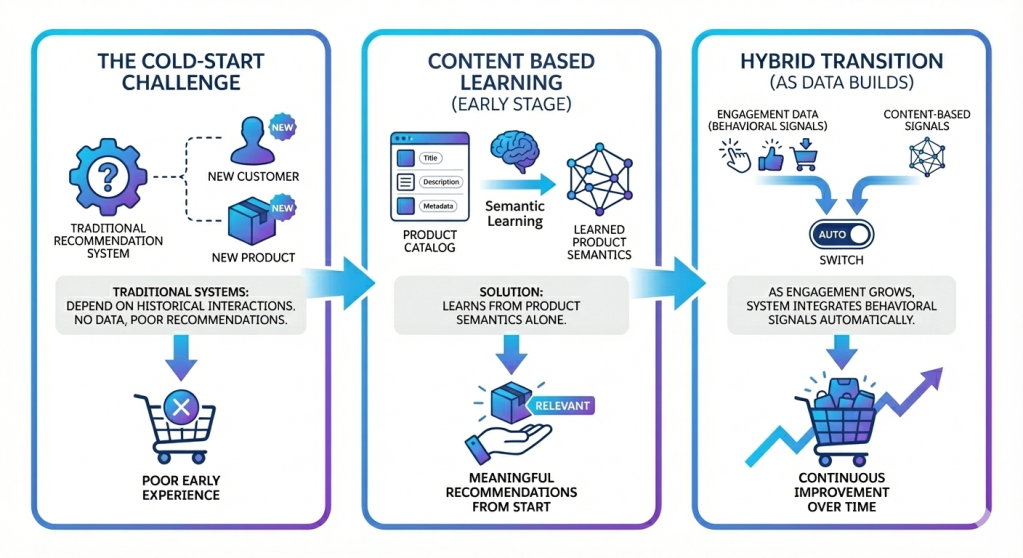

Cold-start scenarios pose a significant challenge for agent experiences, primarily because traditional recommendation systems depend heavily on historical interactions. When a new customer or product catalog is introduced, those crucial signals simply do not exist yet. This creates a hurdle for delivering meaningful recommendations right from the start.

To tackle this, the team shifted its approach from behavior-based modeling to a more content-oriented understanding. Instead of relying on how users have interacted in the past, the system now analyzes the semantic attributes within the product catalog itself. This includes titles, descriptions, and other contextual metadata. These attributes are then transformed into high-dimensional vector embeddings, which allows for recommendations based on the relationships between items, rather than just prior engagement.
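The content-based idea can be illustrated with a small sketch that ranks catalog items against an intent phrase by cosine similarity. It assumes a toy sparse term-frequency vector in place of the learned high-dimensional embeddings described above, so it captures only word overlap, not true semantic similarity. The `embed`, `cosine`, and `cold_start_rank` names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

def embed(text: str) -> Counter:
    """Stand-in for a learned text-embedding model: a sparse term-frequency vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def cold_start_rank(intent: str, catalog: Dict[str, str], k: int = 3) -> List[Tuple[str, float]]:
    """Rank catalog items by similarity between the intent and each item's text.

    No interaction history is used: only titles, descriptions, and other
    catalog metadata concatenated into `catalog[item_id]`.
    """
    query = embed(intent)
    scored = [(item_id, cosine(query, embed(text))) for item_id, text in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

For a catalog of `"sku-1": "Waterproof trail running shoe with aggressive grip"`, `"sku-2": "Lightweight road running shoe"`, and `"sku-3": "Insulated winter parka"`, the intent `"waterproof trail shoe"` ranks `sku-1` first and `sku-2` second, even though no user has interacted with any of them yet.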

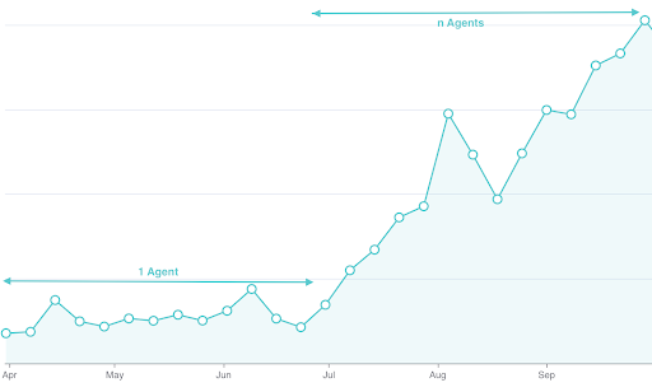

As engagement data accumulates, we gather user feedback on our recommendations directly from these interactions, and the system transitions smoothly to a hybrid approach that progressively integrates behavioral signals alongside content-based signals.

An internal switching mechanism detects when sufficient interaction data becomes available. As a result, recommendations continue to improve over time without manual intervention and without compromising early-stage relevance.
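One way to picture the switching mechanism is as a blend weight that stays at zero until there are enough interactions, then ramps toward behavioral scores. This is a hypothetical sketch: the thresholds, the linear ramp, the weight cap, and the `BlendPolicy` and `hybrid_score` names are assumptions for illustration, not the production switching logic.

```python
from dataclasses import dataclass

@dataclass
class BlendPolicy:
    """Hypothetical policy: content-only below min_interactions, then ramp toward behavior."""
    min_interactions: int = 100        # assumed threshold before behavioral signals are used
    full_interactions: int = 1000      # assumed point where the ramp reaches its cap
    max_behavior_weight: float = 0.7   # assumed cap so content signals never vanish entirely

    def __post_init__(self) -> None:
        if self.full_interactions <= self.min_interactions:
            raise ValueError("full_interactions must exceed min_interactions")

    def behavior_weight(self, interactions: int) -> float:
        """Weight given to behavioral scores for a given interaction count."""
        if interactions < self.min_interactions:
            return 0.0  # cold start: content-based only
        span = self.full_interactions - self.min_interactions
        progress = min(1.0, (interactions - self.min_interactions) / span)
        return progress * self.max_behavior_weight

def hybrid_score(content: float, behavior: float, interactions: int, policy: BlendPolicy) -> float:
    """Convex blend of content-based and behavior-based scores."""
    w = policy.behavior_weight(interactions)
    return (1.0 - w) * content + w * behavior
```

Because the weight is a function of observed data volume rather than a manual flag, the transition from cold start to hybrid happens automatically as interactions accumulate.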

Periodic retraining cycles further enhance this process, ensuring that our recommendation models continuously learn from user behavior and feedback.

Cold-Start Strategy.

What changes were required to integrate real-time user intent with existing interaction-based recommendation models?

Traditional interaction-based recommendation models typically optimize for sequences of user actions, such as views and clicks. In agent-driven experiences, however, users explicitly state what they want. Favoring historical patterns over this stated intent can often lead to less relevant recommendations.

To resolve this mismatch, the team modified the machine learning architecture to treat conversational intent as a primary engagement signal. Text embedding models were integrated directly into the feature pipeline, translating unstructured utterances into vector representations that are fully compatible with downstream models.

The system was re-architected to support multi-modal input: it now ingests both real-time intent and historical interaction sequences into a single hybrid model. Explicit intent guides the recommendations while the broader context of the user’s behavior is preserved, improving relevance without discarding valuable historical signals.
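The fusion of intent and history can be sketched as follows. This is a deliberately simplified stand-in: the hybrid model described above is a learned deep learning model, whereas this sketch uses a fixed linear blend of an intent vector with a recency-weighted summary of history vectors. The `alpha` and `decay` values and all function names are hypothetical.

```python
from typing import Dict, List, Sequence

Vector = List[float]

def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))

def recency_weighted_mean(vectors: Sequence[Vector], decay: float = 0.8) -> Vector:
    """Average history item vectors, weighting recent items more (last = most recent)."""
    if not vectors:
        return []
    n = len(vectors)
    weights = [decay ** (n - 1 - i) for i in range(n)]
    total = sum(weights)
    return [
        sum(w * v[d] for w, v in zip(weights, vectors)) / total
        for d in range(len(vectors[0]))
    ]

def fuse(intent: Vector, history: Sequence[Vector], alpha: float = 0.7) -> Vector:
    """Blend intent with the history summary; alpha > 0.5 lets stated intent dominate."""
    summary = recency_weighted_mean(history)
    if not summary:  # no history yet: rely on intent alone
        return list(intent)
    return [alpha * i + (1 - alpha) * h for i, h in zip(intent, summary)]

def rank(user_vec: Vector, items: Dict[str, Vector]) -> List[str]:
    """Order item IDs by dot product with the fused user representation."""
    return sorted(items, key=lambda item_id: dot(user_vec, items[item_id]), reverse=True)
```

Setting `alpha` above 0.5 encodes the design goal from the text: what the user asks for now outweighs past behavior, but history still shifts the ranking when intent is ambiguous.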

What integration challenges arose across Data 360, personalization systems, and Agentforce, and how were they addressed?

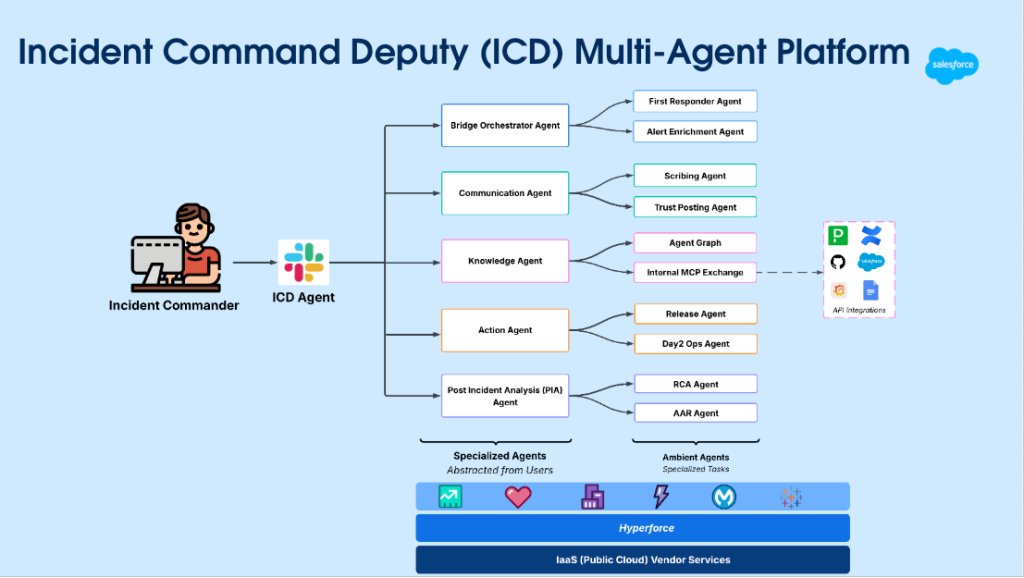

Coordinating multiple systems presented challenges around data flow, latency, and operational boundaries. The team needed to move data seamlessly across Data 360’s batch and real-time layers, Salesforce core systems, Agentforce orchestration, and internal model hosting services, without introducing performance bottlenecks.

To address these issues, the team used Data 360’s real-time ingestion capabilities to ensure sub-second availability of engagement and intent signals. Agentforce actions orchestrated data flow across the various layers, reducing processing overhead. In addition, the intent-understanding and engagement-embedding models were hosted together, minimizing network hops and streamlining inference.

This design allowed the system to meet strict latency requirements while maintaining accuracy and reliability, even as data moved through multiple layers of the Salesforce ecosystem.
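Why co-hosting models helps can be shown with simple latency arithmetic: every remote hop adds a roughly fixed network and serialization cost on top of compute time. The sketch below models that with hypothetical stage names and placeholder millisecond figures chosen only for illustration; they are not measured Salesforce values.

```python
from typing import List, NamedTuple

class Stage(NamedTuple):
    name: str
    compute_ms: float
    remote: bool  # True if reaching this stage crosses a service boundary

def end_to_end_ms(stages: List[Stage], hop_overhead_ms: float) -> float:
    """Total latency = sum of compute time + a fixed overhead per remote hop."""
    compute = sum(s.compute_ms for s in stages)
    hops = sum(1 for s in stages if s.remote)
    return compute + hops * hop_overhead_ms

def within_budget(stages: List[Stage], hop_overhead_ms: float, budget_ms: float) -> bool:
    return end_to_end_ms(stages, hop_overhead_ms) <= budget_ms

# Placeholder figures for illustration only; not measured values.
SEPARATE = [
    Stage("intent model", 40.0, True),
    Stage("engagement embedding model", 15.0, True),
    Stage("ranking", 10.0, True),
]
CO_HOSTED = [
    Stage("intent model", 40.0, True),
    Stage("engagement embedding model", 15.0, False),  # same host as the intent model
    Stage("ranking", 10.0, True),
]
```

With an assumed 20 ms per hop, `SEPARATE` totals 125 ms and `CO_HOSTED` totals 105 ms, so only the co-hosted layout fits a hypothetical 110 ms budget even though the compute work is identical.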

Intent-Based Recommendations: End-to-End Flow

What validation and acceleration challenges did you face, and how did AI help address them?

Validating correctness across a multi-system architecture proves difficult before live user feedback exists. The team required a method to evaluate intent extraction and agent behavior early in the development lifecycle, ensuring the system could accurately interpret complex user needs without relying solely on traditional, static metrics.

To address this, the team integrated AI directly into the validation and data preparation pipelines:

- Refining Intent Extraction: LLMs were used to generate synthetic conversational scenarios, allowing iterative testing and refinement of intent extraction strategies across diverse domains and edge cases.

- Catalog Enrichment: AI-generated structured datasets were used to fill critical gaps in the catalog, ensuring the recommendation engine had a comprehensive foundation from which to operate.

- LLM-as-a-Judge: An LLM-as-a-judge framework was introduced to score recommendation relevance and system responses, establishing a consistent performance baseline before human feedback loops became available.
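An LLM-as-a-judge loop can be sketched as a rubric prompt, a parsed numeric verdict, and aggregate metrics over a set of test cases (which, in the approach above, could come from LLM-generated synthetic conversations). This is a minimal sketch under stated assumptions: the rubric wording, the 1 to 5 scale, the pass threshold, and the function names are hypothetical, and the judge model is injected as a plain function rather than a specific LLM API.

```python
import json
import statistics
from typing import Callable, Dict, List

# Hypothetical rubric; the real evaluation criteria are not described in the text.
RUBRIC = (
    "Rate from 1 (irrelevant) to 5 (highly relevant) how well the recommendations "
    'match the user\'s request. Reply as JSON: {"score": <int>, "reason": <str>}.'
)

def judge_case(case: Dict[str, object], judge: Callable[[str], str]) -> int:
    """Ask the judge model to score one case and validate the returned score."""
    prompt = f"{RUBRIC}\nUser: {case['utterance']}\nRecommendations: {case['recommendations']}"
    verdict = json.loads(judge(prompt))
    score = int(verdict["score"])
    if not 1 <= score <= 5:
        raise ValueError(f"judge returned out-of-range score {score}")
    return score

def evaluate(cases: List[Dict[str, object]], judge: Callable[[str], str], pass_at: int = 4) -> Dict[str, float]:
    """Aggregate judge scores into a mean score and a pass rate."""
    scores = [judge_case(c, judge) for c in cases]
    return {
        "mean_score": statistics.mean(scores),
        "pass_rate": sum(s >= pass_at for s in scores) / len(scores),
    }
```

Validating the judge's output (JSON shape and score range) matters in practice, because a malformed verdict should fail loudly rather than silently skew the baseline.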

AI-assisted tooling also helped accelerate development across heterogeneous systems. We used AI-assisted deep research to vet technical approaches before finalizing system designs. During implementation, tools like Cursor and workspace AI helped generate boilerplate code, unit tests, and design artifacts. This combination of AI-driven validation and research-backed acceleration enabled the team to maintain high confidence in system correctness while significantly increasing iteration speed.

Learn more

- Intent Based Recommendation is now generally available. Visit the Agentforce help documentation to get started.

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.