An important transition is underway in machine learning (ML), with companies gravitating from a research-driven approach towards a more engineering-led process for applying intelligence to their business problems. We see this in the growing field of ML operations, as well as in the shift in skillsets that teams need to successfully drive ML and artificial intelligence (AI) end-to-end (from data scientists to data and ML engineers). The need for maturity and robustness has only accelerated recently, as intelligent solutions are increasingly applied to critical business areas and core products.

Salesforce is a company that caters to the enterprise space. We have unique use cases in machine learning products for our business customers, who, in many ways, are relatively unsophisticated with regard to ML fluency. We also prioritize automating our ML products and making them as robust as possible. We have to, because we typically deploy at least one model per customer; since we have tens of thousands of customers, we quickly run into a fundamental challenge. Across their lifecycle (training, validation, monitoring, and so forth), we need to deal with hundreds of thousands of managed pipelines and models. Those intimidating numbers are a forcing function in how we see ML automation and how we enable it at scale for all of our tenants/customers. In particular, we also focus on automation for building models (autoML), given that it is impossible to have teams that handcraft models for that many customers (which is still the typical process in most of our industry). Having no human in the loop while building and training models requires an extra layer of automation in every other aspect of the lifecycle; in particular, in how we approach the quality of those models, which ultimately determines what gets deployed, what gets left behind, and for how long.

We have built and operated sophisticated systems for supporting data (Data Lakes, Feature Store) and model management (Model Store) for some time. Recently, we introduced another critical component covering an often overlooked aspect of the ML lifecycle: testing. Enter our Evaluation Store. Our philosophy for ML is that the ML lifecycle is just a specific manifestation of the software engineering lifecycle, with the twist of data thrown in. We think of the process of building and deploying models in similar terms to building and deploying software, in particular on the aspect of quality assurance. Most ML platform solutions deal with quality via a combination of metric collection/calculation systems (typically hooked into classic metrics/observability components like OpenTSDB, Datadog, Splunk, Grafana, etc.) and a set of batch metric datasets/stores for offline or ad hoc querying via query engines (Presto, Athena, etc.). While we also deeply care about metrics and treat them as core entities, the sheer number of models we work with has forced us to think about quality in a much more structured way. Namely, we think in terms of tests/evaluations that have a clear pass/fail outcome, very similar to how we view software engineering quality in terms of test coverage, test types, and behavioral scenarios. In our case, the primary approach to quality is to define/express it as a series/suite of tests (not metrics), involving invariants in data, code, parameters, and threshold functions, along with clearly expressed expectations.

Metric vs. Test-Centric ML — The Paradigm Shift

The shift of perspective from metrics to tests has major benefits in multiple areas of the ML lifecycle. Capturing tests in a structured way for each model/pipeline allows us to track consistently what test data was used, including test segments within datasets with particular requirements, and to monitor performance along multiple dimensions for these entities. It also allows us to track consistently the more intangible aspects of tests that would otherwise be lost if we thought purely in terms of simple metrics. These include the tester's expectations, which are sometimes represented as static or historic thresholds, as well as the test code itself (exactly what test/benchmark we are performing, and which other models are performing the very same test).

The contrast between metrics and tests goes deeper. A metric on its own, such as 95% accuracy for an OCR model, does not tell ML engineers a lot. It’s the context, whether implicit or explicit, associated with that metric that makes it meaningful. In the OCR example, we need to know, among other things, what dataset that metric came from, something like MNIST, a standard dataset for handwritten digits. Anyone who has trained simple models on MNIST can tell that 95% is not necessarily a good result, because they can compare it with previous results on the same dataset. The point is that it is always the comparison with some expectation, rather than the raw metric, that tells the tester whether something is of good quality or not, and whether it meets the desired criteria. Metrics should in that sense be considered raw data that requires interpretation. In clear terms:

metric-centric ML platforms are human driven/piloted

The key reason the majority of ML platforms take a metric-centered approach to quality is their deeply embedded assumption that they cater to a small, human-manageable number of models. That assumption originates mostly from a research-centric worldview. On the other hand:

test-centric ML platforms allow full automation and can be driven on auto pilot.

The reason underpinning this stark contrast between a metric-centric and a test-centric approach is simple. Metrics always require (human) interpretation to be actionable. Tests are immediately actionable by automated processes (e.g., validation, deployment gates, experiment progressions, monitoring, etc.). Test-centered ML platforms are closer to the world of engineering products driving business cases, especially if they require industrial scale. In that sense they are much closer to an engineering-centric perspective. At Salesforce this represents a fundamental paradigm shift and lies at the core of our ML platform philosophy, displayed throughout our ML stack and quality management processes.
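To make the contrast concrete, here is a minimal Python sketch of how a raw metric turns into an actionable test once an expectation is attached to it. The names (`CheckResult`, `accuracy_test`, `deployment_gate`) and the baseline and tolerance values are hypothetical, chosen purely for illustration; this is not the Evaluation Store API.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class CheckResult:
    """Outcome of one test: the raw metric plus the expectation it was held to."""
    name: str
    observed: float
    threshold: float
    passed: bool


def accuracy_test(observed_accuracy: float,
                  baseline_accuracy: float,
                  tolerance: float = 0.01) -> CheckResult:
    """Pass only if accuracy is within `tolerance` of a known baseline.

    The baseline stands in for the "previous results on the same dataset"
    that a human would otherwise compare against by hand (an assumption
    of this sketch).
    """
    threshold = baseline_accuracy - tolerance
    return CheckResult(
        name="accuracy_vs_baseline",
        observed=observed_accuracy,
        threshold=threshold,
        passed=observed_accuracy >= threshold,
    )


def deployment_gate(results: Iterable[CheckResult]) -> bool:
    """An automated process can act on tests directly: deploy only if all pass."""
    return all(r.passed for r in results)


# 95% accuracy looks fine in isolation, but fails against a 99% baseline.
result = accuracy_test(observed_accuracy=0.95, baseline_accuracy=0.99)
print(result.passed)              # False
print(deployment_gate([result]))  # False
```

The metric (0.95) is unchanged; what makes it actionable without a human is the explicit expectation it is compared against.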

We also have many cases where we don’t have the luxury of using a lot of data to test but can only test and rely on specific, highly curated prototypes/single representative points. Here the outcome is not a metric (aggregate), but a label instead. Tests on prototypes again challenge the reliance on metrics for determining quality. As a result, in our store we can express datasets, segments, and prototypes as integral parts of the evaluation context. These various levels of data coverage, along with curated scenarios and sequences of evaluation tasks can provide drill down insights and more holistic and interpretable assessments of quality that are easily lost with aggregate metrics.

Through the Evaluation Store, we allow our ML engineers to express their tests/evaluations (we use both words as synonyms) via a (Python) library that has similar interfaces to vanilla testing libraries in software development. In fact, our library is inspired by, and borrows concepts heavily from, Jest (a modern JavaScript testing library). The library captures inputs and results of the tests along with other metadata and takes care of reporting them to the Evaluation Store via APIs. The key concepts we organize our testing on are:

- Evaluation: maps to an entire test suite/scenario covering a full behavioral suite. As mentioned, the direct mapping in software testing is that of a test suite. Among other metadata, we capture here in a structured way the datasets used, and the code used for the test, plus what is being tested (i.e. models, pipelines or data).

- Task(s): a particular check; here we also capture which data segments or prototypes we are performing the task on. Segments represent interesting subsets of the dataset (for example, all women age 18–35 in a dataset). Tasks represent a single test in software testing terms.

- Expectation(s): typically a boolean expression contained within a task. Parameters and variables can be referenced here to be materialized during execution/runtime, and compared against what is expected by the tester. We have a few types of expectation definitions, mostly guided by simple DSLs (e.g., SQL flavors and similar).
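The relationship between these three concepts can be sketched with plain Python dataclasses. This is a simplified illustration, not the actual library: every class, field, and value below is an assumption, and expectations are modeled as Python callables rather than DSL expressions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Values materialized at runtime and referenced by an expectation.
Context = Dict[str, float]


@dataclass
class Expectation:
    """A boolean check over values materialized at runtime."""
    description: str
    predicate: Callable[[Context], bool]

    def check(self, context: Context) -> bool:
        return bool(self.predicate(context))


@dataclass
class Task:
    """A single test, optionally scoped to a data segment or prototype."""
    name: str
    segment: Optional[str] = None
    expectations: List[Expectation] = field(default_factory=list)

    def run(self, context: Context) -> bool:
        return all(e.check(context) for e in self.expectations)


@dataclass
class Evaluation:
    """A test suite: what is tested, on which dataset, with which tasks."""
    name: str
    target: str
    dataset: str
    tasks: List[Task] = field(default_factory=list)

    def run(self, measurements: Dict[str, Context]) -> Dict[str, bool]:
        # `measurements` maps each task name to its runtime values.
        return {t.name: t.run(measurements[t.name]) for t in self.tasks}


min_accuracy = Expectation(
    "accuracy >= min_accuracy",
    lambda c: c["accuracy"] >= c["min_accuracy"],
)
evaluation = Evaluation(
    name="churn_model_suite",      # hypothetical suite name
    target="model:churn-v2",       # hypothetical model identifier
    dataset="holdout",             # hypothetical dataset name
    tasks=[
        Task("overall_accuracy", expectations=[min_accuracy]),
        Task("segment_accuracy", segment="women_18_35",
             expectations=[min_accuracy]),
    ],
)
results = evaluation.run({
    "overall_accuracy": {"accuracy": 0.91, "min_accuracy": 0.85},
    "segment_accuracy": {"accuracy": 0.78, "min_accuracy": 0.85},
})
print(results)  # {'overall_accuracy': True, 'segment_accuracy': False}
```

In this sketch the aggregate task passes while the segment task fails, which is the kind of drill-down signal a single aggregate metric would hide.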

Validation, Experimentation and Monitoring via ML Tests

With the Evaluation Store as a dedicated system to represent structured tests, processes in ML that we typically think of as isolated, like offline validation, online experimentation, and (model/drift) monitoring, can be expressed via tests and their relation to the deployment process. The processes above are all about managing quality, and they are underpinned by testing techniques and concepts. More importantly, they should be seen as a spectrum of quality checking and management processes that models progressively go through during their lifecycle journey, with tests as the foundational unifying primitive. Online experimentation cases (A/B tests, champion/challenger, etc.) are nothing but a deployment of multiple model versions that incorporate tests for progression. Monitoring for data drift can be expressed as a continuously running test (say, for KL divergence) after a model has been deployed, which triggers retraining or an alarm if its expectation fails, hence affecting/guiding the deployment lifecycle. Our perspective is that deployments in ML represent complex processes (we think of them as pipelines/flows) and are not singular atomic events; they ultimately have a lifecycle. A simple staggered deployment, for instance, involves deploying to 5% of traffic, then testing and proceeding to 10%, then 50%, and so on. Hence, this deployment type is not immediate and has a lifecycle involving repeated, time-delayed consultations with automated tests. Similarly, drift monitoring, a classic use case of model monitoring, can be expressed as continuously running or scheduled tests that affect the deployment lifecycle if test expectations are not met.
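As a rough illustration of both ideas, the Python sketch below expresses drift monitoring as a KL divergence test with a pass/fail outcome, and a staggered deployment as a loop that consults a test at every stage. The function names, the 0.1 threshold, the direction of the divergence, and the traffic stages are assumptions made for this example only.

```python
import math
from typing import Callable, List, Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float],
                  eps: float = 1e-12) -> float:
    """KL(p || q) for two discrete distributions over the same bins.

    `eps` guards against division by zero when a bin is empty in `q`.
    """
    return sum(pi * math.log((pi + eps) / (qi + eps))
               for pi, qi in zip(p, q) if pi > 0)


def drift_test(reference: Sequence[float], live: Sequence[float],
               max_kl: float = 0.1) -> bool:
    """Pass if live data has not drifted beyond `max_kl` from the reference.

    Measuring KL(live || reference) is a choice made for this sketch.
    """
    return kl_divergence(live, reference) <= max_kl


def staged_rollout(stages: Sequence[int],
                   gate: Callable[[int], bool]) -> List[int]:
    """Deploy stage by stage and stop at the first stage whose test fails.

    `gate(pct)` stands in for the automated tests consulted after the model
    has served `pct` percent of traffic. Returns the stages that passed.
    """
    passed: List[int] = []
    for pct in stages:
        if not gate(pct):
            break  # a real system would roll back or alarm here
        passed.append(pct)
    return passed


reference = [0.5, 0.5]
print(drift_test(reference, [0.5, 0.5]))  # True: identical distributions
print(drift_test(reference, [0.9, 0.1]))  # False: KL is roughly 0.37

# A hypothetical gate that starts failing once traffic reaches 50%.
print(staged_rollout([5, 10, 50, 100], gate=lambda pct: pct < 50))  # [5, 10]
```

In practice the gate would run a full evaluation against live traffic rather than a fixed rule, and a failed drift test would trigger retraining or an alarm.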

Human Evaluations/Evaluators

The Evaluation Store allows us to express human-in-the-loop decisions from end customers or even auditors, who may not be involved at all in the building of the model but who understand very well what good and bad predictions would be for their business cases. Human evaluations typically also involve interactive evaluation sessions with models, where just a few samples are tested, one at a time, each one representing a particular behavioral case from the customer. Cases like this are classic examples where the evaluation is not based on metrics but on raw predictions, whose outcome determines the human decision (pass/fail). These evaluators can provide a thumbs up or thumbs down on the model versions we enable for these tests. The separation of stakeholders, the difference in who builds vs. who tests, is critical in our view, and represents the future of testing in ML. Customers, whether in an enterprise setting or not, may not be aware of the latest transformer models, but they know very well what is a good vs. a bad prediction for them. As far as tests go, despite their lack of fluency in ML, customers can be very sophisticated, and they are right at home. In testing, they can speak their own business language. Hence, we have a strong case for customers providing tests along with test data themselves, rather than relying solely on those who build their models.

Evaluations of Models, Data, Pipelines and Deployments

An obvious benefit is that we are able to leverage the same concepts for establishing not just model quality standards and checks, but data quality ones as well, where expressing quality via tests has the same benefits as for models or pipelines (executable artifacts, in software terms). Data quality is a critical ingredient for good models and for a data-centric approach to ML. Data tests can be performed in a structured way, before training or even during the sourcing process in our data systems, to prevent problems in downstream tasks, like training or generation of derived datasets. Examples of such data tests relevant for downstream tasks include checking that datasets have a sufficient number of samples for training, checking for missing values in important features beyond historical norms, and more sophisticated tests around significant shifts in distributions. Similar to models or data, the Evaluation Store supports evaluations of entire pipelines, as well as what we call multi-tenant deployments, which represent deployments of hundreds or even thousands of different models. These capabilities allow us to have a representation of quality beyond just simple models.
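Two of the data tests mentioned above, sufficient sample count and missing values beyond historical norms, might look like the following in Python. This is a minimal sketch: the function names, the row representation (a list of dicts), and the thresholds are all assumptions for illustration.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def has_enough_samples(rows: List[Row], min_rows: int) -> bool:
    """Pass if the dataset is large enough to train on."""
    return len(rows) >= min_rows


def missing_rate(rows: List[Row], feature: str) -> float:
    """Fraction of rows where `feature` is absent or None."""
    if not rows:
        return 1.0
    missing = sum(1 for r in rows if r.get(feature) is None)
    return missing / len(rows)


def missing_within_norm(rows: List[Row], feature: str,
                        historical_rate: float,
                        tolerance: float = 0.05) -> bool:
    """Pass if the missing rate stays within `tolerance` of its historical norm."""
    return missing_rate(rows, feature) <= historical_rate + tolerance


def run_data_tests(rows: List[Row], feature: str, min_rows: int,
                   historical_rate: float) -> Dict[str, bool]:
    """Run both checks before training and report a pass/fail per test."""
    return {
        "enough_samples": has_enough_samples(rows, min_rows),
        "missing_within_norm": missing_within_norm(rows, feature,
                                                   historical_rate),
    }


rows = [{"age": 30}, {"age": None}, {"age": 41}, {"age": 25}]
report = run_data_tests(rows, feature="age", min_rows=3, historical_rate=0.10)
print(report)  # {'enough_samples': True, 'missing_within_norm': False}
```

Here a quarter of the `age` values are missing against a historical norm of 10%, so the second test fails and a downstream training task could be blocked before it starts.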

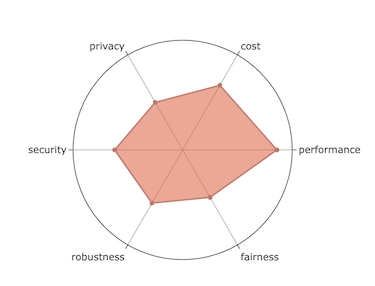

The richness of potential checks for ML applications and the large number of resulting tests, including functional tests as well as statistical ones for different data layers (segments, prototypes, full datasets), calls for standardizing test quality along a few dimensions. Specifically, we express tests along the following axes:

- performance tests (e.g., accuracy, RMSE-based, etc.)

- robustness tests (perturb inputs and check for changes, …)

- privacy tests (do we leak private, PII info…)

- security tests (adversarial attacks, poisonous observations…)

- fairness/ethical tests (e.g., under/overrepresented segments …)

- cost tests (memory, CPU, monetary benchmarks)

These major dimensions are an initial set, and the list may grow; however, we consistently structure quality along these six factors. We do this, in particular, to shift away from a unidimensional focus in evaluations (e.g., accuracy/performance) towards a more holistic view of model quality that allows ML engineers to assess the real-world behavior of their models, and to push them towards creative test scenarios.
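As one example beyond the performance dimension, a robustness test of the "perturb inputs and check for changes" kind could be sketched as follows in Python. The function name, the uniform noise model, and the thresholds are hypothetical, and the models are toy stand-ins.

```python
import random
from typing import Callable, List, Sequence


def robustness_test(predict: Callable[[Sequence[float]], int],
                    inputs: List[Sequence[float]],
                    noise: float = 0.01,
                    max_flip_rate: float = 0.05,
                    seed: int = 0) -> bool:
    """Pass if small input perturbations rarely change the predicted label.

    Each feature is shifted by uniform noise in [-noise, noise]; a "flip"
    is a prediction that differs from the one on the unperturbed input.
    """
    rng = random.Random(seed)  # seeded so the test is repeatable
    flips = 0
    for x in inputs:
        perturbed = [v + rng.uniform(-noise, noise) for v in x]
        if predict(perturbed) != predict(x):
            flips += 1
    return flips / len(inputs) <= max_flip_rate


def toy_model(x: Sequence[float]) -> int:
    """Toy classifier: label 1 when the features sum to more than 1.0."""
    return int(sum(x) > 1.0)


# Inputs far from the decision boundary are unaffected by small noise.
print(robustness_test(toy_model, [[0.0, 0.0], [2.0, 2.0]]))  # True
```

The same pass/fail shape applies to the other dimensions; only the measurement and the expectation change.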

Venturing into the future, we think that testing ML/AIs will increase in complexity and sophistication. Yet, at the same time, tests on models/AIs also need to become more transparent and accessible to customers in order to increase their trust in our intelligent solutions. As models are deployed in increasingly critical and high-stakes cases (health care, finance, autonomous driving/AVs, etc.), we cannot afford an unstructured approach to quality (e.g., would relying on just the train/test data split for AVs be good enough? How are they being tested?). While our industry currently seems focused on chasing (what may be marginal) improvements via bigger models, we also think that the process of structured testing may reveal important insights that then lead to significant improvements. More structured testing and test tooling is the area that we see as the most promising for establishing machine learning firmly as an engineering discipline and elevating it from its research roots.

Both data requirements and the procedure of testing ML/AI agents could become similar to testing processes for human agents, where the tested and the tester represent different stakeholders and the process is to go through a list of questions/tasks to make a decision. This sounds a lot like a typical human interview. Because of the difference in stakeholders, we also should not assume that tests will always have access to a global view of data on what is being tested. Instead, we will need to use/generate very few data points in addition to increasing reliance on trusted credentials.

Ultimately, we think there are major benefits to expressing ML quality in terms of tests and test suites, as opposed to just metrics. The difference between the two determines how ML platforms are shaped: reliance on metrics alone gears them towards scientific labs, churning out a few highly curated, high-human-touch ML models, while reliance on tests structures platforms towards industrial factories, churning out very large numbers of models (think millions!). We can, at the very least, adopt and borrow from the experience of software engineering and the testing processes and concepts it has developed over several decades. The Evaluation Store and its foundation on test concepts frame the ML quality strategy for our platform.