Introduction

In 2016, I started as a new-grad software engineer at a small startup called MetaMind, which was acquired by Salesforce. Since then, it has been quite a journey, and we have achieved a lot with a small team. I’m part of the Einstein Vision and Language Platform team. Our platform provides customers with the ability to upload and train datasets (images or text) to produce models that can be used for generating insights in real time. We serve internal Salesforce teams working on Service Cloud, Marketing Cloud, and Industries Cloud, as well as external customers and developers.

If you’ve ever interacted with a chatbot on a leading e-commerce apparel store, financial institution, healthcare organization, or even a government agency, then it’s likely that your request was processed on our platform to understand the question and provide an answer. For example, Sun Basket customers are able to track orders or packages, report any issues with delays or damage, and get a credit or refund. AdventHealth is able to provide patients with an interactive CDC COVID-19 assessment and educate them about the disease.

I’m frequently asked about my experience building an AI platform from the ground up and what it takes to run it successfully, which is what this blog post explores.

User Experience

Understand Your Customer | End-to-End User Experience

User experience is the key to our success. Our target users are developers and product managers. We provide a public-facing API to interact with our platform. As a team of product managers and developers, it was easy to imagine a fellow developer or product manager using an API to upload a tar file with images. But the challenge we faced was that not all of our users in these roles were familiar with deep learning or machine learning.

We had to educate our users by answering questions like: What’s a dataset? Why do you need a dataset? What are classes in a dataset? How many samples do we need in a dataset? What does training on a dataset mean? What is a model? How do I use a model? And that’s not all; in some cases, we were even asked, “What is machine learning, and why do I need it?”

We spent a considerable amount of time understanding our customer. We ran several in-house A/B tests and opened our platform to a small number of pilot customers. We also ran workshops and hands-on training sessions to educate customers about the model training process, challenges with quality and quantity of data, prediction accuracy, and so on. Ultimately, we realized that, based on the level of understanding and experience with machine learning we encountered, we needed multiple channels to onboard users to our platform. So, we introduced Einstein Support. This page points you to the relevant resources for working with Einstein Vision and Language Platform.

For a novice user who may not be familiar with machine learning concepts — such as dataset, training, prediction — we provide step-by-step self-service tutorials via Trailhead. One of my favorite guides is this Journey to Deep Learning.

To make it easier to get up and running, we also created Einstein Vision and Language Model Builder. This is an AppExchange package that provides a UI for the Einstein Vision and Language APIs, and it enables customers to quickly train models and start using them.

For a developer looking to dig into code, we have code snippets that show API usage (such as uploading datasets) in different languages and frameworks. For a product manager, we have API documentation with cURL command snippets to make API calls.

curl -X POST \
  -H "Authorization: Bearer <TOKEN>" \
  -H "Cache-Control: no-cache" \
  -H "Content-Type: multipart/form-data" \
  -F "type=image" \
  -F "path=https://einstein.ai/images/mountainvsbeach.zip" \
  https://api.einstein.ai/v2/vision/datasets/upload/sync
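For developers who prefer Python over cURL, the same request could be assembled roughly as follows. This is a minimal sketch that uses only the standard library and reuses the endpoint, headers, and form fields from the cURL command above. The helper name `build_upload_request` is made up for illustration, and the request is only built, not sent, so it runs without a token.

```python
import urllib.request
import uuid

UPLOAD_URL = "https://api.einstein.ai/v2/vision/datasets/upload/sync"


def build_upload_request(token, dataset_url, dataset_type="image"):
    """Build (but do not send) a multipart POST mirroring the cURL call."""
    boundary = uuid.uuid4().hex
    fields = {"type": dataset_type, "path": dataset_url}

    # Encode each form field as one multipart/form-data part.
    parts = []
    for name, value in fields.items():
        parts.append(
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
            f"{value}\r\n"
        )
    parts.append(f"--{boundary}--\r\n")
    body = "".join(parts).encode("utf-8")

    headers = {
        "Authorization": f"Bearer {token}",
        "Cache-Control": "no-cache",
        "Content-Type": f"multipart/form-data; boundary={boundary}",
    }
    return urllib.request.Request(
        UPLOAD_URL, data=body, headers=headers, method="POST"
    )


request = build_upload_request(
    "<TOKEN>", "https://einstein.ai/images/mountainvsbeach.zip"
)

# To actually send it (needs a valid token and network access):
# with urllib.request.urlopen(request) as response:
#     print(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes the call easy to inspect or unit test before any credentials are involved.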

In addition, we created a community of developers via forums and blogs. It’s so rewarding to see a developer blog post like this one.

To further improve the user experience and to provide a quick sense of our platform’s capabilities, we introduced pre-built models. These models are built by our team and are available to all customers. Users can sign up and quickly make a prediction. Within minutes, the user gets a sense of the value that the platform can provide.

Lastly, I want to mention that we’re obsessed with our API quality and follow strict processes for API changes and releases. Backwards compatibility is always a hotly debated topic of discussion across the team.

Team

Domain Expertise | Technical Expertise

Great products are built by great teams, and an AI platform is no exception. In my opinion, there are four team pillars on which an AI platform is successfully built: Product, Data Science, Engineering, and Infrastructure. Each of these teams has its own charter. But it’s important that each team has both domain expertise and technical expertise.

Domain expertise is required to understand the target market, business value, the use cases, and how a customer uses AI within their business processes. Technical expertise involves understanding the importance of data and data quality, how models are trained and served, and the infrastructure that’s the foundation of the platform. Unless all the stakeholders understand these challenges deeply, it’s difficult to drive conversations towards solutions for addressing customer use cases.

Data scientists are focused on conducting research and experiments, building models, and pushing the boundaries of model accuracy. Engineers are focused on building production-grade systems. But shipping a model from research to production is a non-trivial task. The more each team knows about both the product and technical aspects, the more efficient the team is and the better the solutions it creates.

For example, in a real-time chatbot session, the user expects to get an instantaneous response. A model may be highly accurate in its predictions, but those predictions lose value if they can’t be served within a certain timeframe to the end user. To put it into perspective, we have thousands of customers simultaneously training their own models and using them in real time. Our customers expect that, once their model is trained, it’s available for use and serves predictions within a few hundred milliseconds.
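To make the latency point concrete, here is a minimal sketch of enforcing a prediction deadline on the caller side. It is not how our platform is implemented: the function name `predict_with_deadline`, the 300 ms default, and the fallback of returning `None` are all assumptions chosen for illustration.

```python
import concurrent.futures
import time

# Shared worker pool so a timed-out call does not block the caller.
_EXECUTOR = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def predict_with_deadline(model_fn, payload, deadline_s=0.3):
    """Return model_fn(payload), or None if it misses the deadline."""
    future = _EXECUTOR.submit(model_fn, payload)
    try:
        return future.result(timeout=deadline_s)
    except concurrent.futures.TimeoutError:
        # Best effort only: a call that is already running is not interrupted.
        future.cancel()
        return None


# A fast "model" answers in time; a slow one is treated as a miss.
fast = predict_with_deadline(lambda text: text.upper(), "track my order")
slow = predict_with_deadline(
    lambda text: time.sleep(0.5) or text, "track my order", deadline_s=0.05
)
```

In a chatbot, a missed deadline would typically trigger a fallback reply rather than leave the user waiting.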

Building a platform that can support such requirements takes people who understand model architectures and their functional and compute requirements, who know how to use those models to serve customer requests, and who can design responses on which customers can build business logic and realize value. This requires data scientists, software engineers, infrastructure engineers, and product managers to collaborate closely in order to provide an end-to-end user experience.

Process

Communication | Collaboration

Given the cross-functional nature of our team organization, it took some iterating to land on a way for us to work together seamlessly. Active collaboration among all stakeholders (the applied research and data science team, the platform and infrastructure engineering team, and the product team) has to run from the first phase of identifying the requirements all the way to delivering the solution to production.

An effective process of communication and collaboration reduces friction between cross-functional teams, creates accountability, improves productivity, promotes exchange of ideas, and drives innovation.

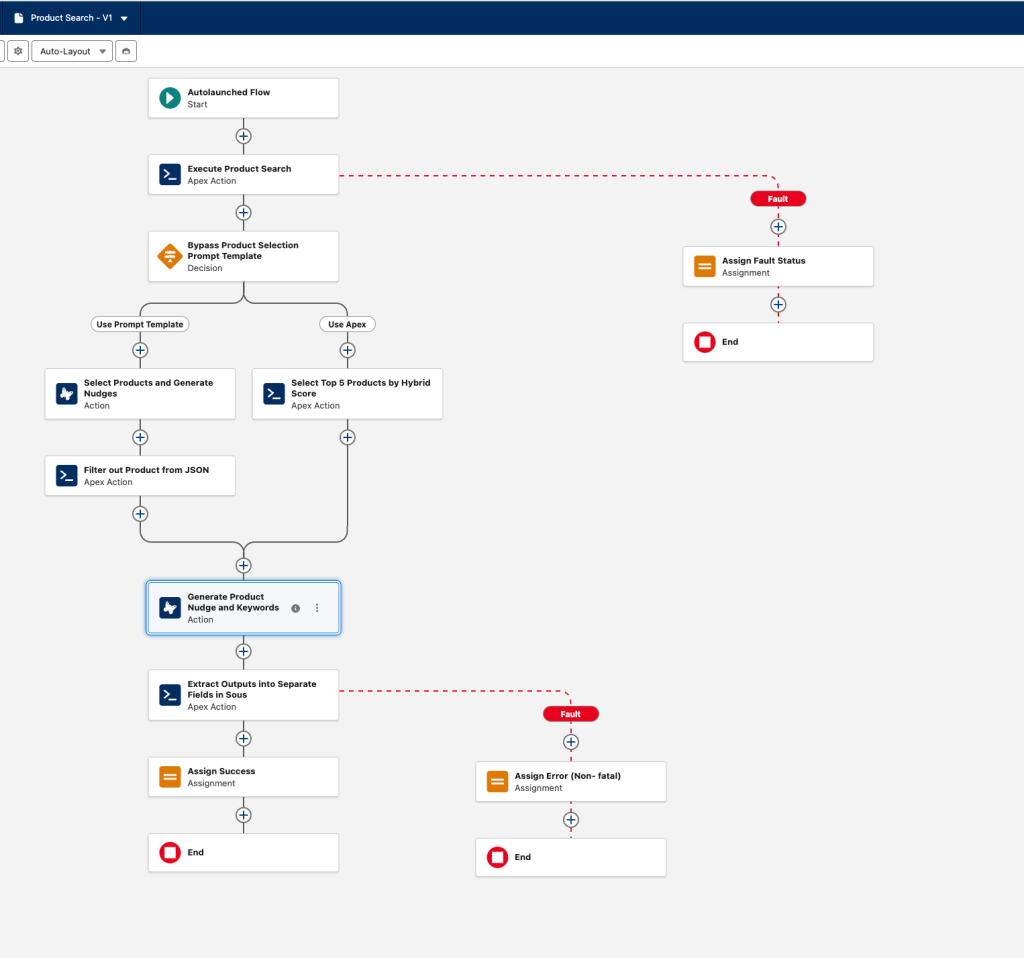

After iterating through different schedules of daily standup updates, weekly sync meetings, sprint demos, and retrospective meetings, we ultimately came up with an effective, end-to-end data science and engineering collaboration process, which you can see in the following timeline.

This is a high-level view of our collaboration process. The idea is that every use case involves active collaboration among stakeholders from multiple teams starting right at the beginning.

Data scientists/researchers, engineers and product managers work together to identify solutions and propose alternatives.

For example, in the Spiking phase, there are multiple teams working in parallel. Data scientists figure out what neural network architecture best fits the use case. Platform engineers identify potential API changes and data management changes, and set up training and prediction systems. Infrastructure engineers identify resource requirements: GPU/CPU clusters, CI/CD pipeline changes, and so on.

As part of each of the collaboration steps, we created a uniform way to track communication among these cross-functional teams and to record design and architecture artifacts and decisions using Quip documents.

This graphic shows a sample project home document that contains a project summary, lists the representatives from each team, and maintains records of all project-related artifacts throughout the collaboration process.

We created templates for all the artifacts so teams can simply fill in the details. Using templates ensures uniformity in recording information across teams and projects.

Using this process, we were able to ship the first version of one of our first research-to-production models, Optical Character Recognition (OCR), in a matter of two weeks.

Agility — Research to Production

Cloud Agnostic | ML Framework Agnostic

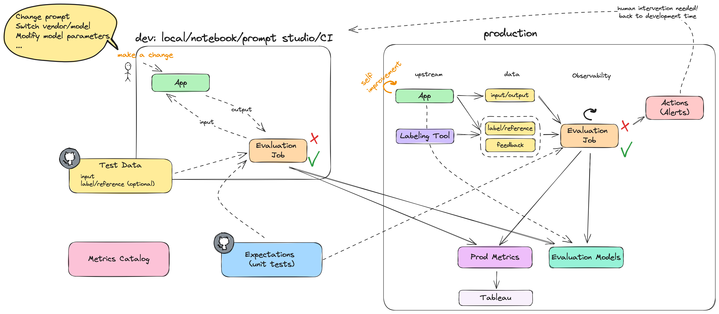

The ability to iterate and ship new features is an important attribute of an AI platform team. When it comes to identifying the right solution to solve an AI use case, data scientists and researchers need to experiment with datasets and neural networks. This involves running model training sessions with several different parameters and various feature extraction and data augmentation techniques. All of these operations are expensive in terms of time and compute resources. Most experiments require expensive GPUs for training, and it can still take several hours (or sometimes days), depending on the complexity of the problem.

So our teams needed an easy, consistent, flexible, and framework-agnostic approach for experimentation, as well as an established pipeline to launch features and models to production for customers.

Our platform provides a training and experimentation framework for researchers and data scientists to quickly launch experiments. We have published a training SDK that abstracts all the service and infrastructure-level components. The SDK also provides an interface to easily provide training parameters, pull datasets, push training metrics, and publish model artifacts. The framework also provides APIs to get the status of training jobs, organize experiments, and visualize experiment metadata.
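To give a feel for what such an abstraction buys you, here is a hypothetical sketch. It is not the actual training SDK: the class `InMemoryTrainingContext` and its methods `pull_dataset`, `push_metric`, and `publish_artifact` are invented names that mirror the four responsibilities described above, backed by in-memory structures so the example runs anywhere.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryTrainingContext:
    """Hypothetical stand-in for an SDK handle; not the real SDK."""

    params: dict
    dataset: list
    metrics: list = field(default_factory=list)
    artifacts: dict = field(default_factory=dict)

    def pull_dataset(self):
        # A real SDK would fetch from the data management service.
        return list(self.dataset)

    def push_metric(self, name, value, step):
        self.metrics.append((name, value, step))

    def publish_artifact(self, name, payload):
        self.artifacts[name] = payload


def run_training(ctx):
    """Toy 'training' that learns the mean label.

    Any framework (TensorFlow, PyTorch, ...) could sit inside this function;
    it only talks to the context, never to the infrastructure.
    """
    labels = [label for _, label in ctx.pull_dataset()]
    mean = sum(labels) / len(labels)
    for epoch in range(ctx.params.get("epochs", 1)):
        loss = sum((label - mean) ** 2 for label in labels) / len(labels)
        ctx.push_metric("loss", loss, step=epoch)
    ctx.publish_artifact("model.txt", f"mean={mean}".encode("utf-8"))
    return mean


ctx = InMemoryTrainingContext(
    params={"epochs": 2}, dataset=[("a", 1.0), ("b", 3.0)]
)
run_training(ctx)
```

Because the training code depends only on the context object, the same function could run on a laptop with an in-memory context or on a cluster with a service-backed one.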

To ship a model to production, the data science and research team uses an established Continuous Integration (CI) pipeline to build and package their training and model serving code as Docker images. The platform team also provides base Docker images for Python, TensorFlow, and PyTorch. The CI process publishes these Docker images to a central repository.

Similar to the training SDK, our prediction service acts as a sidecar: it uses a standard gRPC contract to interact with the model serving/inference container that the data science and research team publishes. This gRPC contract abstracts any pre/post-processing and model prediction-specific operations and provides a uniform way to provide input to the model and receive a prediction as output.
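The shape of such a contract can be sketched in plain Python. This is an in-process stand-in for illustration only, not our actual gRPC definition: the message types `PredictRequest` and `PredictResponse`, the `ModelServer` interface, and the toy keyword model are all hypothetical.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class PredictRequest:
    model_id: str
    payload: bytes


@dataclass(frozen=True)
class PredictResponse:
    label: str
    probability: float


class ModelServer(ABC):
    """What every model container would implement (hypothetical contract)."""

    @abstractmethod
    def predict(self, request: PredictRequest) -> PredictResponse:
        ...


class KeywordSentimentServer(ModelServer):
    """Toy model: pre/post-processing stays hidden behind predict()."""

    def predict(self, request):
        text = request.payload.decode("utf-8").lower()  # pre-processing
        positive = "great" in text                      # "inference"
        label = "positive" if positive else "negative"  # post-processing
        return PredictResponse(label=label, probability=0.9)


class PredictionService:
    """Routes requests to model servers without knowing their internals."""

    def __init__(self):
        self._servers = {}

    def register(self, model_id, server):
        self._servers[model_id] = server

    def predict(self, model_id, payload):
        request = PredictRequest(model_id=model_id, payload=payload)
        return self._servers[model_id].predict(request)


service = PredictionService()
service.register("sentiment-v1", KeywordSentimentServer())
response = service.predict("sentiment-v1", b"Great service, thanks!")
```

In a real deployment the call would cross a process boundary over gRPC, but the idea is the same: the prediction service sees only the request and response types.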

The data science and research team can then use platform-provided APIs to launch the new version of these training and model serving Docker images in production. After the Docker images are launched, customers can then train new models and use them for generating insights.

The key takeaway here is that our platform services are cloud-agnostic (we run on Kubernetes) and ML-framework-agnostic. As long as a new team member is familiar with Docker and API interfaces, they can be productive on day one. This drastically reduces the learning curve of using our platform, enables rapid prototyping, and shortens time to production.

Extensibility and Scalability

Standard Convention | Uniformity

One of the biggest lessons for us as a team over the past few years has been to design the platform for extensibility and scalability. Our platform has gone through several iterations of improvements. Initially, most of our services were designed for specific AI use cases, so some parts proved rigid as we onboarded new use cases.

Our data management service was initially built to handle image classification and text classification use cases. Naturally, all of our data upload, validation and verification, and update and fetch operations were tuned to those two data formats and schemas. As we started onboarding image detection, optical character recognition, and named entity recognition use cases, we realized that it was redundant and inefficient to implement dataset-specific pipelines for these operations. It was evident that we needed a dataset-type-agnostic system that could provide a uniform way to ingest, maintain, and fetch datasets.

We built a new data management system, which now allows our platform to support any type of dataset (images, text, audio) and provide a uniform way to access those datasets. The idea is to maintain a uniform “virtual” dataset that’s nothing but metadata of the dataset (number of examples, train set vs. test set, sample IDs) and to expose APIs that provide a simple and unified experience to access data regardless of the type.
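As a rough illustration of the "virtual" dataset idea, consider the sketch below. It is a hypothetical model, not our actual schema: the class `VirtualDataset`, its fields, and the dict-based `storage` are assumptions that capture only the metadata named above (number of examples, train vs. test split, sample IDs).

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class VirtualDataset:
    """Metadata only; the raw samples live elsewhere."""

    dataset_id: str
    data_type: str                # "image", "text", "audio", ...
    splits: Dict[str, List[str]]  # split name -> sample IDs

    @property
    def num_examples(self) -> int:
        return sum(len(ids) for ids in self.splits.values())

    def sample_ids(self, split: str) -> List[str]:
        return list(self.splits.get(split, []))


def fetch_samples(dataset, split, storage):
    """Resolve sample IDs to raw bytes; the caller never branches on type."""
    return [storage[sample_id] for sample_id in dataset.sample_ids(split)]


# The same access path works for any data type.
storage = {"s1": b"<jpeg bytes>", "s2": b"<jpeg bytes>", "s3": b"<jpeg bytes>"}
beaches = VirtualDataset(
    dataset_id="ds-1",
    data_type="image",
    splits={"train": ["s1", "s2"], "test": ["s3"]},
)
train_samples = fetch_samples(beaches, "train", storage)
```

Because callers only ever see IDs and splits, adding a new data type becomes a storage concern rather than an API change.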

As mentioned in the section above, our training and prediction services provide abstraction through the SDK and the gRPC contract, respectively. This allows the platform to be consistent and uniform regardless of the type of deep learning framework, tool, or compute requirements. Our training service can scale horizontally to launch several experiments and training sessions without having to address framework or language-specific challenges. Similarly, the prediction service can easily onboard new model serving containers and acquire predictions from them over gRPC contracts, unaware of any model-specific pre/post-processing and neural network-level operations.

Establishing a standard convention and abstracting the low-level details to create uniform interfaces has allowed our platform to be extensible and scalable.

Trust

Security-First Mindset | Ethical AI

Our #1 value at Salesforce is trust. For us, trust means keeping our customers’ data secure. Any product or feature we build follows a security-first mindset. Einstein Vision and Language Platform is SOC 2 and HIPAA compliant.

Every piece of code we write and every third-party or open-source library we use goes through threat and vulnerability scans. We actively partner with the product and infrastructure security teams, as well as the legal team at Salesforce, to conduct reviews of features before we deliver them to production. We implement service-to-service mTLS, which means all the customer data that our systems process is encrypted in transit. And, to secure data at rest, we encrypt the storage volumes.
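For readers unfamiliar with mTLS, the "mutual" part means the server also demands a certificate from the client. The sketch below shows that idea with Python's standard `ssl` module. It is an illustration under assumptions, not a description of our setup: the function name and the optional file-path parameters are hypothetical, and in practice this is often handled by the infrastructure layer rather than application code.

```python
import ssl


def build_mtls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Server-side TLS context that requires a client certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # The "mutual" in mTLS: reject clients without a valid certificate.
    context.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # This service's own identity, presented to callers.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The CA that signs the certificates of trusted peer services.
        context.load_verify_locations(cafile=ca_file)
    return context


# Paths are omitted here so the sketch runs without certificate files.
context = build_mtls_server_context()
```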

All customers are naturally sensitive about their data. The security processes we follow have been a critical part in gaining the trust of our customers. Being compliant also means we’re able to venture into highly data-sensitive verticals like healthcare.

Our research and data science teams follow ethical AI practices to ensure that the models we build are responsible, accountable, transparent, empowering, and inclusive.

Acknowledgement

Thanks to Shashank Harinath for key contributions in establishing the data science and engineering collaboration process and for keeping me honest about our systems and architecture, and to Dianne Siebold and Laura Lindeman for their review and feedback on this blog post. Also, I’d like to mention that it has been a wonderful learning experience working with the talented folks on the Einstein Vision and Language Platform and Infra teams.

Please reach out to me with any questions. I would love to hear your thoughts on the topic. If you’re interested in solving challenging deep learning and AI platform problems, we’re hiring.