Introduction

The Einstein Vision and Language Platform Team at Salesforce enables data management, training, and prediction for deep learning-based Vision and Language use cases. Consumers of the platform can use our API gateway to upload datasets, train those datasets, and ultimately use the models generated out of training to run predictions against a given input payload (for example, input text during a live chat). The platform is multitenant and supports all the above operations for different customers simultaneously.

Prediction Service is one of the Einstein Vision and Language Platform services that runs behind the scenes. As the name suggests, its job is to serve predictions. The nature of the predictions is real-time. For example, a customer may train a custom model to learn different intents from a set of chat messages. Then, during a live chat session, the utterances are sent to the Prediction Service where the service uses the customer-trained model to identify and return the intent of those utterances. Based on the intent returned by the model, the chatbot takes the appropriate decision (for example, checking the status of an order). Failing to return prediction result within the Service-Level Agreement (SLA) window may result in poor end-user experience.

In addition to being real-time, predictions are multitenant: multiple customers train one or more models and use them to get predictions. Together, real-time & multitenancy aspects of predictions make it an interesting challenge to solve. The real-time aspect of predictions implies that the time taken to serve a prediction request should be low — for us it depends on use-case; typically the SLA’s for latencies range from 150 ms to 500 ms. And the multitenancy aspect means managing models for thousands of tenants in a way that model access from memory to disk, and file store (AWS S3) to disk is minimized.

Typical Prediction Request/Response

Before we dive deeper, let’s take a look at a typical prediction request. This is what our service looks like to the consumer.

Request

The following example shows the request parameters for a language model (intent classification) prediction request. The modelId is the unique ID that’s returned after you train a dataset and create a model. The document parameter contains the input payload for prediction.

{

"modelId": "2ELVAO5BNVGZLBHMYTV7CGD5AY",

"document": "my password stopped working"

}

Response

After you send the request via an API call, you get a response that looks like the following JSON. The response consists of the predicted label—alternatively called category—for the input payload. Along with label, the model returns a probability for each label, which indicates the confidence of the prediction.

In the response below, the model is 99.04% confident that the input, my password stopped working, belongs to the label Password Help. Similarly, the rest of the probability scores indicate that the input doesn’t belong to other labels.

{

"probabilities": [

{

"label": "Password Help",

"probability": 0.99040705

},

{

"label": "Order Change",

"probability": 0.003532466

},

{

"label": "Shipping Info",

"probability": 0.003473858

},

{

"label": "Billing",

"probability": 0.0024010758

},

{

"label": "Sales Opportunity",

"probability": 0.00018560764

}

],

"object": "predictresponse"

}

Sending a prediction request is just a simple cURL call.

curl -X POST \

-H "Authorization: Bearer <TOKEN>" \

-H "Cache-Control: no-cache" \

-H "Content-Type: application/json" \

-d "{\"modelId\":\"2ELVAO5BNVGZLBHMYTV7CGD5AY\",\"document\":\"my password stopped working\"}" \

https://api.einstein.ai/v2/language/intent

You can quickly sign up for free and build your own model using your own data or one of the sample datasets in our API reference. Or try one of our pre-built models. Feel free to drop a note in comments and let us know about your experience using our API.

Lifecycle of a Prediction Request

To understand how predictions are handled in a multitenant environment, let’s look at the lifecycle of a single prediction request.

After you train a dataset, the model artifact is stored on an AWS S3 file store. The model metadata (for example, model ID, S3 location) is stored in the database via the Data Management Service (DM). To get the location of the model, Prediction Service queries DM service using the customer’s tenant ID and model ID. DM returns a pre-signed S3 URL with a temporary access token.

Prediction Service then downloads the model tar from AWS S3 and stores it on the Elastic File System (EFS) disk (if the model does not exist on the disk already). Then the model tar is extracted and the model is loaded into memory. Finally, the pre-processed input is run through the model (neural network) and the output is returned back.

An example of pre-processing is tokenization & embedding lookup. Typically, the prediction input (eg. live chat) for language models (eg. intent classification or Named Entity Recognition) is first tokenized where the input is split into words. Further, the words are then used to do a lookup to get their vector representations. This lookup table of words & their vector representations is called an embedding — glove is one type of embedding. This is what vector representation of word “salesforce” looks like — only first five dimensions shown:

salesforce 0.23245 -0.31159 0.28237 0.10496 0.3087 ....

The vector representation is then passed to the model for prediction. The model returns a set of labels and their probability scores as shown in example response above.

Note: Embeddings are intentionally kept separate from model artifact to reduce memory & disk footprint, which factors into overall performance.

Prediction Service Overview

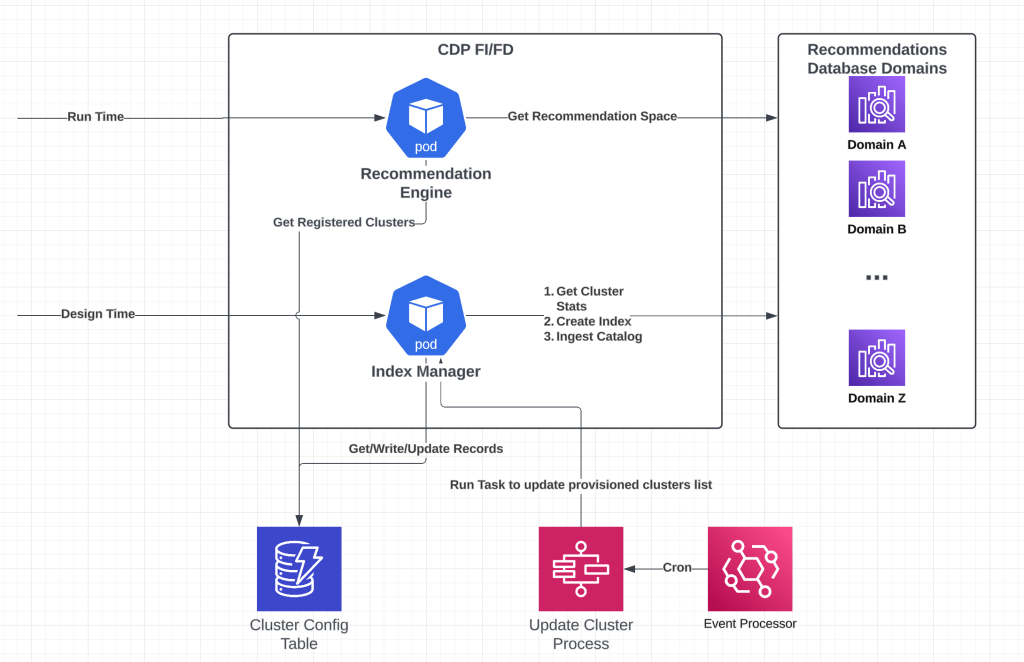

The Einstein Vision and Language Platform runs on a Kubernetes cluster. All services are deployed using Kubernetes Deployment to launch multiple replicas of each service as Pods which are exposed via cluster-scoped internal Kubernetes Service endpoints.

The diagram below shows language and vision predictors running on the Kubernetes cluster. Predictor is a group of pods responsible for carrying out prediction/inference and they are fronted by a Kubernetes service. The API Gateway acts as a proxy to the Prediction Service and routes requests across different predictors.

Predictor Pod

Let’s dive deeper into the Predictor. The Predictor is collection of pods fronted by a Kubernetes service that acts as a load balancer. Each pod in the Predictor consists of two containers: model management sidecar and inference.

sidecar container

- The sidecar container is a RESTful server and is responsible for model management and business logic flows. It accepts requests from the API Gateway and downloads the model (if not already present) and related metadata from the DM service. This container communicates with the inference server over gRPC and provides model metadata (for example, location on disk) and prediction input payload.

inference container

- The inference container is a gRPC server that accepts requests from the sidecar container. It performs pre-processing, prediction, and post-processing.

gRPC contract

- The Einstein Vision and Language Platform team published a standard gRPC contract which provides a way for all Data Science and Applied Research teams at Salesforce to onboard to the platform. The gRPC contract abstracts all of the model download and business logic flows so that data scientists and researchers can focus purely on pushing the boundaries of model quality. An example of gRPC protobuf used by one of our Intent Classification predictors looks like this:

syntax = "proto3";

package com.salesforce.predictor.proto.intent.v1;

option java_multiple_files = true;

option py_generic_services = true;

/*

* Intent Classification Predictor Service definition,

* accepts a model_id & document and returns labels with

* probabilities for each.

*/

service IntentPredictorService {

rpc predict(IntentPredictionRequest) returns (IntentPredictionResponse) {}

}

message IntentPredictionRequest {

string model_id = 1; // id of the model

bytes document = 2; // document to classify

}

message IntentPredictionResponse {

bool success = 1;

string error = 2;

repeated IntentPredictions probabilities = 3;

}

message IntentPredictions {

string label = 1; // predicted label

float probability = 2; // probability score

}

Conclusion

The blog presents an overview of Einstein Vision & Language Platform and discusses the architecture and components of Prediction Service. In that, the prediction request lifecycle describes the operations carried out in real-time to successfully serve a prediction request and thereby highlights the challenges involved in achieving low latency SLAs while managing hundreds of models from multiple tenants. Also, we briefly discussed gRPC protocol buffers as the means for smooth on-boarding and collaboration between Data Science & Platform teams. In a future blog post, we plan to discuss in detail our architecture for in-memory and on-disk model management and the challenges associated with it.

Please reach out to us with any questions, or if there is something you’d be interested in discussing that we haven’t covered.

Follow us on Twitter: @SalesforceEng

Acknowledgements

I would like to thank Shu Liu, Linwei Zhu & Gopi Krishnan Nambiar for contributing to various aspects of Prediction Service design and implementation, Justin Warner for refactoring & improving the state of integration tests for repeatable and isolated test environment, Shashank Harinath for championing the use of gRPC based contracts for effective Data Science & Platform collaboration & on-boarding and Vaishnavi Galgali for helping with migration to AWS EKS. Last but not the least, thanks to Ivaylo Mihov for the continuous support & inspiration.