Why Machine Translation

At Salesforce, our goal in introducing machine translation was to increase scalability and better serve our international customers. Advantages include:

- Innovating and acquiring know-how internally

- Reducing translation time by enhancing translators’ productivity

- Increasing content freshness by publishing more frequent updates

- Reinvesting savings into high-value content and products

When we explored commercially available solutions four years ago, we quickly realized that they didn’t offer the data privacy we needed or the customizability to support XML tags and terminology, and that the specificity of our technical domain was challenging. Driven by Kazuma Hashimoto, Lead Research Scientist at Salesforce Research, and Raffaella Buschiazzo, Director, Localization at R&D Localization, the team built a Neural Machine Translation (NMT) system for the Salesforce domain, using a language-agnostic architecture with models for each language. The system translates whole XML files from English into 16 languages: French, German, Japanese, Spanish, Mexican Spanish, Brazilian Portuguese, Italian, Korean, Russian, Simplified Chinese, Traditional Chinese, Swedish, Danish, Finnish, Norwegian, and Dutch.

Our primary target for machine translation (MT) was the Salesforce online help content, which had been localized by professional translators for 20 years. Salesforce international customers use our online help to resolve issues. If they can’t do so through the localized help because translation quality is poor, they escalate to in-country tech support. For this reason, high-quality machine translation is expected, but it is hard to achieve because new release content contains new feature and product terminology that is not included in our translation memories or in general training corpora.

Challenges

Challenges that we faced while building our system include:

- Tag handling. We built our end-to-end system on our publicly available dataset, and we filed a patent (US10963652B2, Structured text translation).

- Getting the “right” mix of customized training datasets and more general ones. We benchmarked our MT output against commercially available systems and observed that, while our model scored higher for sentences with content specific to Salesforce, the others provided better results for more generic content. That’s why we decided to switch from training on our Salesforce-only data to fine-tuning publicly available pre-trained models such as mBART and XLM-R.

- Quality tracking. When we started, we used Bilingual Evaluation Understudy (BLEU) scores and conducted human evaluations at each iteration. Translators evaluated 500 machine-translated strings using a 1/2/3 categorization (1: translation is ready for publication; 2: translation is useful but needs human post-editing; 3: translation is useless), and offered their overall feedback after post-editing 100K new words + 300K edits per major release. But this did not give us enough data to understand how we were doing. That’s why, in 2020, we started calculating the edit distance on every post-edited segment, using an algorithm based on the Damerau–Levenshtein edit distance. It counts the minimum number of operations needed to transform one string into the other, where an operation is an insertion, deletion, or substitution of a single character, or a transposition of two adjacent characters. Across languages and over five releases, an average of 36.03% of machine-translated segments needed no edits, which is encouraging considering that some languages, such as Finnish and Japanese, are still challenging for MT.

- MT API. When our model started producing good-quality output, we knew that it was time to integrate the MT API into our localization pipeline. This required security implementation, testing, and computing capacity.
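To make the edit-distance tracking described under "Quality tracking" more concrete, here is a minimal Python sketch. It is an illustration, not our production plugin: it implements the restricted "optimal string alignment" variant of the Damerau-Levenshtein distance, and the function names `osa_distance` and `unedited_share` are made up for this example.

```python
def osa_distance(a: str, b: str) -> int:
    """Restricted Damerau-Levenshtein (optimal string alignment) distance.

    Counts insertions, deletions, substitutions, and transpositions of two
    adjacent characters. Assumption: this simpler variant stands in for
    whatever exact algorithm a real quality-tracking tool uses.
    """
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i  # delete all i characters of a
    for j in range(cols):
        d[0][j] = j  # insert all j characters of b
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution or match
            )
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)  # transposition
    return d[-1][-1]


def unedited_share(pairs):
    """Percentage of (mt_output, post_edited) pairs that needed no edits."""
    if not pairs:
        return 0.0
    unchanged = sum(1 for mt, pe in pairs if osa_distance(mt, pe) == 0)
    return 100.0 * unchanged / len(pairs)
```

Running `unedited_share` over all post-edited segments of a release gives the kind of "no edits needed" percentage quoted above, while the raw distances show how heavily the remaining segments were edited.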

Technical Overview

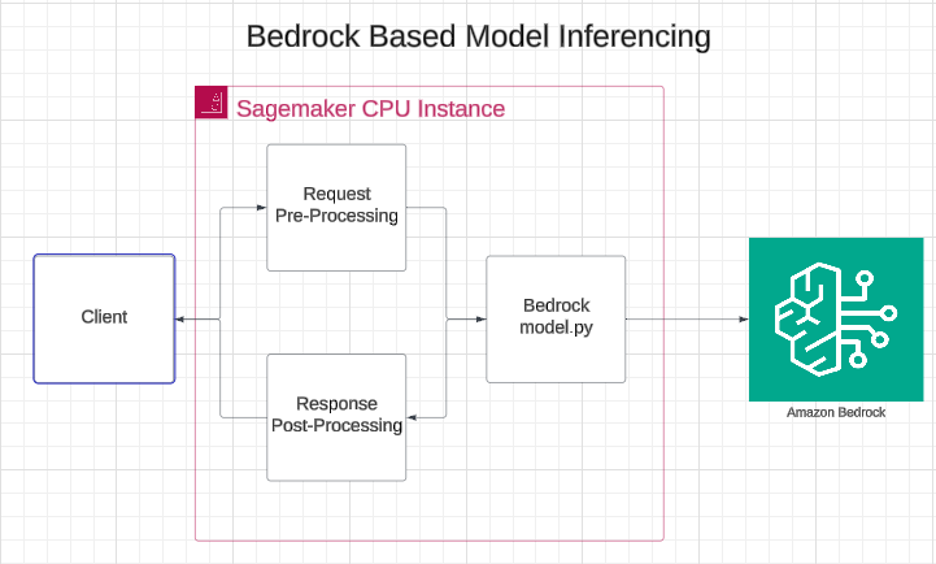

Our goal was to translate rich-formatted (XML-tagged) text, so we investigated how neural machine translation models could handle such tagged text.

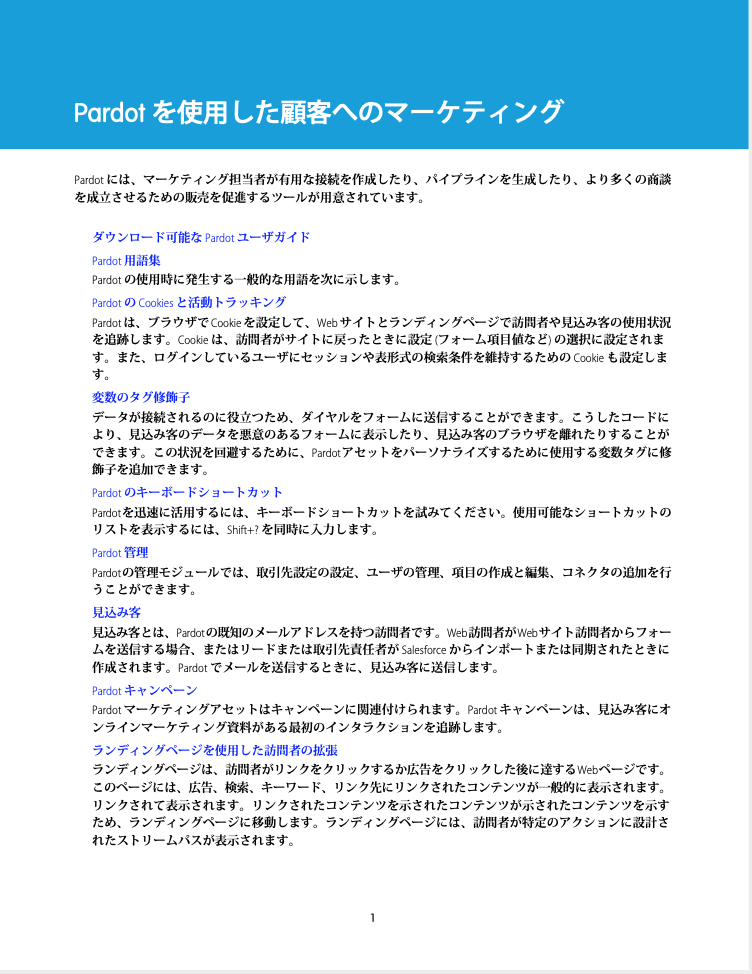

Here are two example pages from a PDF output from our English-to-Japanese system. We can see that the original styles are preserved because our MT model can handle XML-tagged text.

To build our system, we first extracted training data from the Salesforce online help, and we released the dataset for research purposes. Our model is based on a Transformer encoder-decoder architecture, where the input is XML-tagged text in English and the output is XML-tagged text in another language.

To explicitly handle XML-specific tokens, we use an XML-tag-aware tokenizer and train a seq2seq model. Our model also has a copy mechanism that allows it to directly copy special tokens, named entities, product names, URLs, email addresses, and so on. This copy mechanism is later used to align the positions of the XML tags between the two languages.
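As a rough illustration of what "XML-tag-aware" tokenization means, the sketch below keeps every tag as a single token instead of splitting it into angle brackets and letters. It is a simplification under stated assumptions: the regular expression and the name `tag_aware_tokenize` are hypothetical, and a real NMT tokenizer would typically also apply subword segmentation to the text between tags.

```python
import re

# One token per XML tag, per run of word characters, or per punctuation mark.
# Assumption: tags never contain a literal "<" or ">" inside attribute values.
TOKEN_PATTERN = re.compile(r"<[^<>]+>|\w+|[^\w\s<>]")


def tag_aware_tokenize(text: str) -> list[str]:
    """Split text into tokens while keeping each XML tag intact."""
    return TOKEN_PATTERN.findall(text)


tokens = tag_aware_tokenize("Click <b>Save</b>.")
# tokens == ['Click', '<b>', 'Save', '</b>', '.']
```

Treating each tag as one token gives the model, and a copy mechanism like the one described above, a single unit to copy and align per tag.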

For the entire system design, there are two phases: training and translation. At training time, we use the training data from the latest release, and we also incorporate release notes for the target release. Release notes describe new products or features in the particular release, so we can let the model learn how to translate the new terminology in the new context.

This is similar to providing a new grocery list, except that release notes can be seen as a set of contextualized groceries.

For the translation phase, we first extract new English sentences that have little overlap with our translation memory, remove metadata from XML tags, and run our trained model. We only run MT on these sentences because, if we can find a similar string in the translation memory, human translators can edit that match directly instead. In the end, we recover the metadata for each XML tag by using our copy mechanism for the tag position alignment. The translated sentences are verified and, if necessary, post-edited by our professional translators, and then finally published.
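The first step of the translation phase, deciding which sentences go to MT and which are left to translation-memory matches, could be sketched as follows. This is only an illustration: the similarity measure (Python's `difflib.SequenceMatcher`), the `0.75` threshold, and the function names are assumptions for the example, not the matching logic of our actual pipeline.

```python
from difflib import SequenceMatcher


def best_tm_similarity(sentence: str, tm_sources: list[str]) -> float:
    """Highest similarity ratio between a sentence and any TM source string."""
    return max(
        (SequenceMatcher(None, sentence, src).ratio() for src in tm_sources),
        default=0.0,  # an empty translation memory matches nothing
    )


def split_for_mt(new_sentences, tm_sources, threshold=0.75):
    """Route each new sentence either to MT or to a translation-memory match.

    threshold is a hypothetical cut-off: sentences at or above it are
    considered close enough to an existing TM entry to skip MT.
    """
    to_mt, to_tm = [], []
    for sentence in new_sentences:
        if best_tm_similarity(sentence, tm_sources) >= threshold:
            to_tm.append(sentence)
        else:
            to_mt.append(sentence)
    return to_mt, to_tm


tm = ["Click Save to apply your changes."]
new = [
    "Click Save to apply your change.",
    "Enable Einstein forecasting for your org.",
]
to_mt, to_tm = split_for_mt(new, tm)
# The near-duplicate goes to the TM path; the unseen sentence goes to MT.
```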

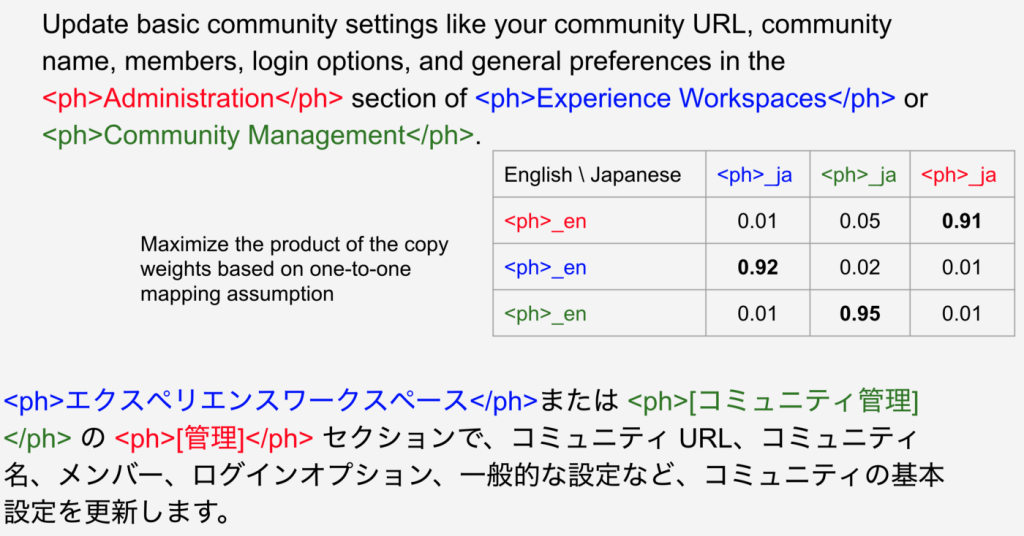

Here is an example of how our system’s pipeline works.

(1) Overview

An English sentence with markup tags is fed into our system, and then another sentence in a target language (here Japanese) is output. Those tags are represented as placeholders with IDs.

(2) Input preprocessing

Our system first preprocesses the input sentence by replacing the placeholders with their corresponding simplified tags (i.e., <ph>…</ph> tags). For the sake of simplicity, we do not include any metadata or attributes inside these tags, which makes it easier for our MT model to translate the tagged text.
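One way to picture the "no metadata or attributes" part of this step is a function that strips attributes from opening tags and remembers them for later. The sketch below is an assumption-laden illustration: the regular expression, the name `simplify_tags`, and the `id`/`class` attributes in the example are hypothetical and do not reflect our actual placeholder format.

```python
import re

# Matches an opening or self-closing tag: name, optional attributes, optional "/".
# Assumption: attribute values never contain "<" or ">".
OPEN_TAG = re.compile(r"<([A-Za-z][\w.-]*)(\s[^<>]*?)?(/?)>")


def simplify_tags(text: str):
    """Strip attributes from tags.

    Returns the simplified text plus a list of (tag_name, attributes)
    in source order, so the attributes can be restored after translation.
    """
    stripped = []

    def repl(match):
        name, attrs, slash = match.group(1), match.group(2) or "", match.group(3)
        stripped.append((name, attrs.strip()))
        return f"<{name}{slash}>"

    return OPEN_TAG.sub(repl, text), stripped


simple, meta = simplify_tags('Click <ph id="7" class="btn">Save</ph>.')
# simple == 'Click <ph>Save</ph>.'
# meta == [('ph', 'id="7" class="btn"')]
```

Closing tags are left untouched because they carry no attributes.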

(3) Translation by our model

We then run our MT model to translate the English sentence with the simplified tags. Here we can see a Japanese sentence (with the tags) output by our model.

(4) Tag alignment

To convert the translation result to the original format with the placeholder tags, we need to know which source-side (i.e., English-side) tags correspond to which target-side (i.e., Japanese-side) tags. We leverage the copy mechanism in our model to find a one-to-one alignment between the source-side and target-side tags. The details of the copy mechanism are described in our paper. The alignment matrix allows us to find the best alignment, as shown in the figure. In this example, tags with the same color correspond to each other.
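To show how an alignment matrix can yield a one-to-one alignment, here is a small sketch. It assumes a matrix `scores[t][s]` whose entries say how strongly target-side tag `t` is linked to source-side tag `s` (for example, copy weights), and it simply tries every one-to-one assignment and keeps the one with the highest total score. The exact procedure in our paper may differ; the name `align_tags` and the numbers are made up for illustration.

```python
from itertools import permutations


def align_tags(scores):
    """Return a list where result[t] is the source tag aligned to target tag t.

    Exhaustive search over one-to-one assignments; this is only practical
    because a single sentence usually contains few tags.
    """
    n_tgt = len(scores)
    n_src = len(scores[0]) if scores else 0
    if n_tgt > n_src:
        raise ValueError("more target tags than source tags")
    best = max(
        permutations(range(n_src), n_tgt),
        key=lambda perm: sum(scores[t][s] for t, s in enumerate(perm)),
    )
    return list(best)


# Hypothetical weights for three tags on each side.
scores = [
    [0.1, 0.8, 0.1],  # target tag 0 mostly points at source tag 1
    [0.7, 0.2, 0.1],  # target tag 1 mostly points at source tag 0
    [0.2, 0.1, 0.7],  # target tag 2 mostly points at source tag 2
]
alignment = align_tags(scores)
# alignment == [1, 0, 2]
```

Maximizing the total score, rather than picking the best source tag for each target tag independently, prevents two target tags from claiming the same source tag. For sentences with many tags, an assignment solver such as SciPy's `linear_sum_assignment` would be a more scalable choice than exhaustive search.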

(5) Output postprocessing

In the end, we replace the simplified tags with the original placeholders by following the tag alignment result. As a result, the translated sentence is output in the same format as the input.
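The postprocessing step can be pictured as the reverse of the tag simplification: each simplified tag in the translation is swapped for the original source-side tag it is aligned to. The sketch below is illustrative only; `restore_tags`, the `<ph id="…">` form of the original tags, and the example sentence are assumptions, not our real placeholder format.

```python
import re

SIMPLE_OPEN = re.compile(r"<ph>")


def restore_tags(translated: str, source_tags: list[str], alignment: list[int]) -> str:
    """Replace the t-th simplified <ph> with source_tags[alignment[t]].

    alignment[t] is the index of the source-side tag that the t-th
    target-side tag corresponds to.
    """
    if len(SIMPLE_OPEN.findall(translated)) != len(alignment):
        raise ValueError("alignment must cover every simplified tag")
    positions = iter(alignment)
    return SIMPLE_OPEN.sub(lambda m: source_tags[next(positions)], translated)


# Hypothetical source-side tags, in English word order.
source_tags = ['<ph id="1">', '<ph id="2">']
translated = "<ph>保存</ph>をクリックして<ph>設定</ph>を開きます。"
restored = restore_tags(translated, source_tags, alignment=[1, 0])
# restored == '<ph id="2">保存</ph>をクリックして<ph id="1">設定</ph>を開きます。'
```

Because word order changes between languages, the alignment, not the position of a tag in the sentence, decides which original tag goes where.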

In 2020, we succeeded in implementing Salesforce NMT as a standard localization process for our online help. Now 100% of our help is machine translated and then post-edited by our professional translators for all 16 languages we support. We developed a plugin to track MT quality systematically, trained our translators on MT post-editing best practices, and reduced training time for the MT models from 1 day to 2–3 hours per language. We achieved this reduction with a continual learning method: instead of training the models from scratch at every release, we quickly fine-tune our previous models using only the new additional data (such as release notes and new content added in the latest release).

Future Applications

We envision extending MT to 34 languages and using it to machine translate UI software strings and other content (e.g., knowledge articles, developer guides, and so on) in the near future. Having an in-house MT system also means that we could potentially use it for customer-facing products such as Salesforce case feed, Experience Cloud, Slack apps, etc. And maybe one day we could make the Salesforce MT API available to our customers as an out-of-the-box or trainable product. There is so much content out there that people need to understand, but not enough time and resources for humans to translate it all! That’s where the Salesforce NMT system can play a leading role.

Acknowledgements

This program wouldn’t have been possible without the leadership and support over the years of Caiming Xiong, Managing Director of Salesforce Research, Yingbo Zhou, Director of Salesforce Research, and Teresa Marshall, VP, Globalization and Localization.