In our Engineering Energizers Q&A series, we highlight the engineering minds driving innovation across Salesforce. Today, we feature Muralidhar Krishnaprasad (MK), President and CTO, C360 Platform, Apps, Industries, and Agentforce. His organization leads the unification of Salesforce’s core platform layers — data, agent, and applications — into a single, cohesive architecture that empowers real-time, AI-driven enterprise experiences.

Explore how MK’s team unified these Platform layers end-to-end: harmonizing fragmented systems of record inside the data foundation, engineering real-time context and activation at scale, and delivering consistent agentic execution across applications and channels — ultimately enabling Customer 360 to function as an integrated, Platform-wide engine for service, sales, marketing, and beyond.

What is your team’s mission in transforming the Agentforce 360 Platform into the unified, real-time foundation for enterprise applications, including Salesforce’s app and industry clouds?

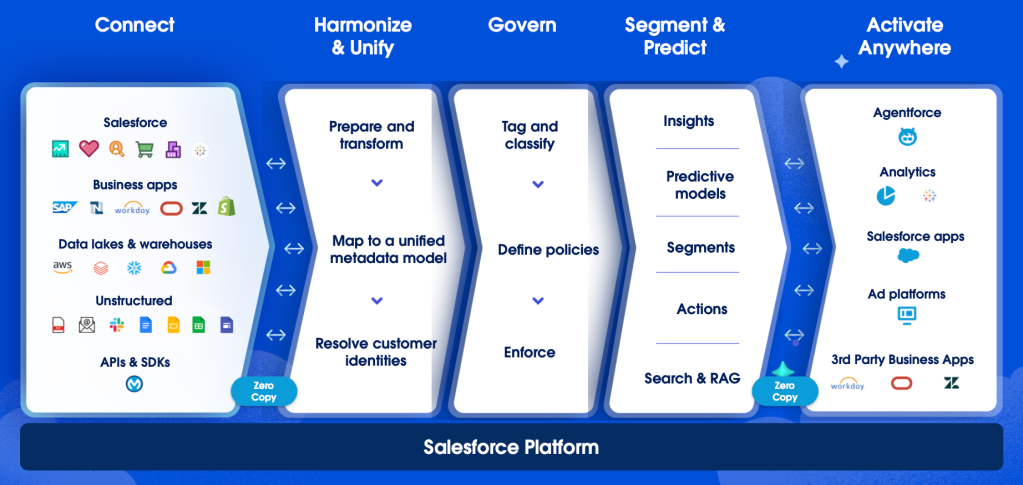

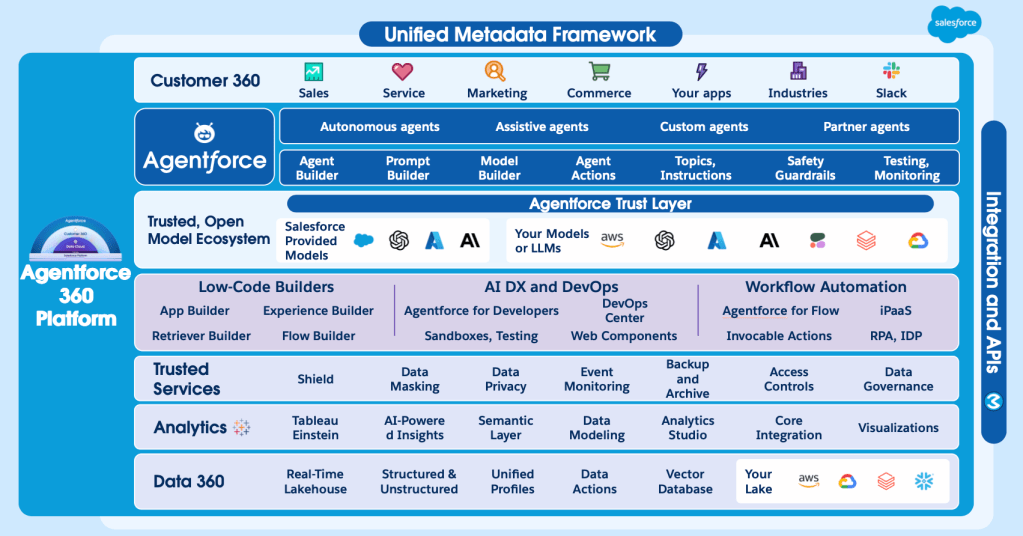

Our mission is to evolve the Agentforce 360 Platform into a uniquely integrated foundation that aligns the data, agent, and application layers into a single, unified stack. This provides consistent customer context, reliable reasoning, and deeply integrated execution across our application suite.

At its base, the Platform’s data layer serves as the harmonized backbone. It brings together the data and signals, metadata, and unified personal profiles that all higher layers depend on. The agent layer uses that deep context to deliver reasoning, actions, personalized context, and memory. The application layer then reimagines domain workloads, using these new capabilities to deliver business outcomes with greater accuracy and autonomy.

Our goal is not simply to add AI or connect data. It is to rethink how applications behave and what value they should deliver in an agentic world. We design workflows that assume unified context, real-time activation, and multi-surface execution. When these layers work together as one system, we deliver complete customer scenarios with more consistency, trust, and shared understanding, not only across traditional CRM workloads such as service, sales, and marketing but also across any custom business workload.

What architectural factors shaped your strategy for consolidating Salesforce’s historically fragmented application, data, metadata, and identity layers into a vertically unified Agentforce 360 stack?

Early limitations arose from point-solution approaches, especially agent frameworks relying on downstream connectors. These connectors had differing semantics and freshness characteristics. Without central control of ingest schedules, synchronization behaviors, and context interpretation, the same question could yield different answers on different surfaces, which could create fragmentation and erode trust.

To address this, we engineered the Platform’s unified data layer (powered by Data 360) as the universal “pane of glass” for all enterprise data. This allows us to harmonize signals across application clouds, external sources, and real-time events into a single, coherent semantic model. This approach lets us deliver shared freshness guarantees, unified correlation logic, and consistent contextual retrieval, regardless of where a question originates.

Building this vertical stack required breakthroughs in three architectural dimensions:

- Unified ontology modeling to eliminate competing interpretations of customer entities and other metadata.

- Centralized freshness and ingest control spanning streaming, batch, and zero-copy pathways.

- Unified search and discovery to ensure identical answers across agents and applications.

With these capabilities consolidated into the Platform’s data foundation, every consuming layer, from applications to Agentforce, can rely on a single, authoritative context engine.

What scalability and performance boundaries guided your evolution of real-time data, eventing, and storage systems as C360 expanded into the system-of-record for AI-driven applications?

As more systems of record migrated into the Platform’s unified data layer, the Platform encountered significant scaling pressures. These pressures focused on metadata, harmonization, and read/write performance. The prior metadata system could not handle the rapidly growing volume of entities, attributes, and cross-source relationships. This required rethinking metadata as data. We stored, indexed, and retrieved it using scalable data-domain patterns. This ensured each application or agent activated only what was relevant.

Harmonizing models across diverse systems also introduced complex operational boundaries. We solved this by defining a harmonization metamodel. These metadata types describe mappings, transformations, and runtime execution semantics. This allowed the system to generate canonical customer profiles from heterogeneous sources in a consistent, predictable way.
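As a rough illustration of mappings and transformations expressed as metadata, the sketch below builds a canonical profile from two source records. It is not the Platform's actual metamodel: the `FieldMapping` type, the `crm` and `commerce` source names, the field names, and the first-match precedence rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class FieldMapping:
    """One metadata record: where a canonical field comes from and how to transform it."""
    source: str            # hypothetical source system name
    source_field: str
    canonical_field: str
    transform: Callable[[Any], Any] = lambda value: value


# Hypothetical mappings; in a real system these would live in a metadata store.
MAPPINGS = [
    FieldMapping("crm", "Email", "email", str.lower),
    FieldMapping("crm", "FullName", "name", str.strip),
    FieldMapping("commerce", "email_addr", "email", str.lower),
    FieldMapping("commerce", "loyalty_pts", "loyalty_points", int),
]


def harmonize(records: dict[str, dict[str, Any]]) -> dict[str, Any]:
    """Build one canonical profile from per-source records, driven only by MAPPINGS."""
    profile: dict[str, Any] = {}
    for m in MAPPINGS:
        record = records.get(m.source, {})
        if m.source_field in record and m.canonical_field not in profile:
            # The first matching mapping wins, so list order encodes source precedence.
            profile[m.canonical_field] = m.transform(record[m.source_field])
    return profile


profile = harmonize({
    "crm": {"Email": "Ada@Example.com", "FullName": " Ada Lovelace "},
    "commerce": {"email_addr": "ada@example.com", "loyalty_pts": "120"},
})
print(profile)
```

Because the mapping rules are data rather than code, adding a source in this sketch means adding rows to `MAPPINGS`, not changing `harmonize`.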

We then optimized the data plane by balancing several critical performance dynamics:

- Freshness versus cost across large datasets that back both personalization and context activation.

- Scalable real-time event correlation to ensure that incoming signals immediately update the unified context.

Through iterative refinement driven by live customer usage, the Platform’s data layer matured into the unified system-of-record backing end-to-end AI scenarios.

Enriching data to drive insights and actions in the context of work.

What engineering challenges emerged while integrating the layers of Agentforce 360, and how did those considerations influence your strategy?

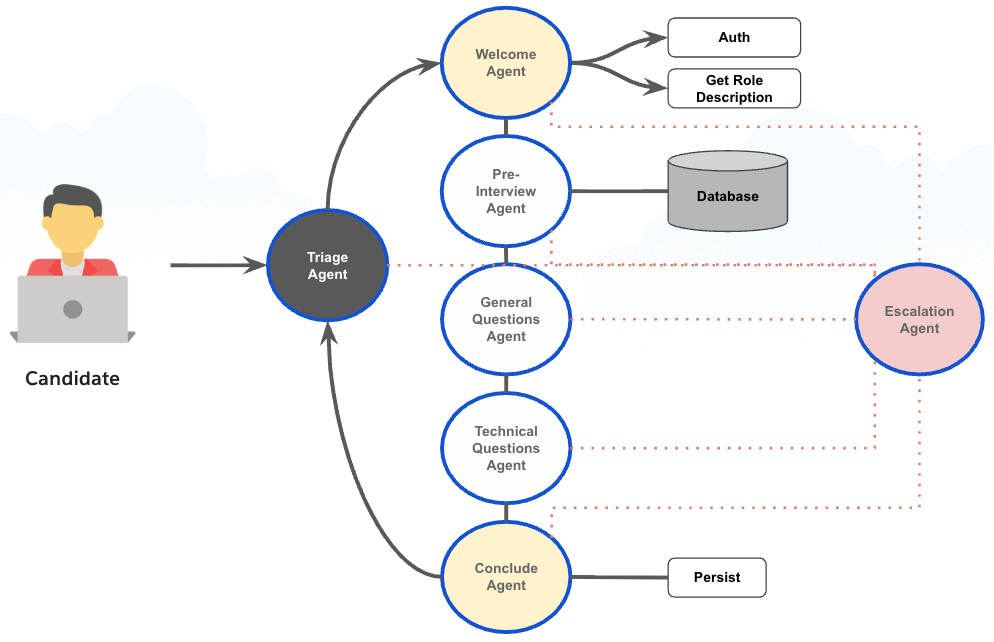

One of the most significant challenges involved building the Agentforce 360 Platform while application teams were building their first agents. This happened long before any invariants had emerged, that is, before APIs, Platform boundaries, or models had stabilized. Because the industry evolved rapidly, assumptions that were valid one month often became obsolete the next as model behavior improved or new Platform abstractions emerged.

This created a dynamic environment where:

- Application teams built temporary workarounds when Platform APIs did not yet exist.

- Interfaces required refactoring after the Platform matured.

- Guardrails, trust layers, and reasoning boundaries shifted as LLMs advanced.

- Deprecation constraints required cautious rollout of any externally visible API.

Clear separation eventually emerged through several mechanisms:

- We established early stable primitives (topics, actions) that survived rapid iteration cycles.

- We provided safe experimentation zones for app teams before Platform features reached GA.

- We developed Agent Script and Agent Graph as formal orchestration models shaped by early customer usage.

- We engineered the Platform centrally. This ensured every new capability worked seamlessly across the entire system, from customer sandboxes, Apex, and Flow to security features, testing centers, and developer experiences.

This iterative maturation process produced a well-defined boundary between agent Platform and applications — a foundational requirement for the Platform’s unified architecture.

Another thing we did in both data and AI was to build in a Platform-centric way, so every aspect of the Salesforce developer ecosystem that people love, such as sandbox, security, testing, and dev experiences, all just worked.

What governance, trust, and identity considerations shaped your approach to building a unified security and policy enforcement model across Data 360, Agentforce and Applications + Industries?

As the Agentforce 360 Platform took on mission-critical and AI-driven workloads, governance and identity requirements became deeply multi-layered. Customers needed consistent enforcement of data-access policies, user identities, and trust boundaries across applications, agents, and channels. Historically, these systems evolved independently, with different policy engines and enforcement semantics, making unified scenario execution difficult.

We addressed these challenges by centralizing policy evaluation in the data layer — transforming identity, trust, and governance into shared Platform responsibilities rather than cloud-specific concerns. By standardizing enforcement, every agent and application benefits from consistent governance, reducing fragmentation and ensuring policies behave predictably across real-time and multi-surface scenarios.
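To make the idea of a single enforcement point concrete, here is a minimal sketch in which an application surface and an agent surface both pass through the same policy check. The `Policy` shape, the role and field names, and the in-memory `POLICIES` set are hypothetical stand-ins, not the Platform's policy engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical rule: a role may read one field of one object type."""
    role: str
    obj: str
    field: str


# One shared policy set; in this sketch it stands in for a data-layer policy store.
POLICIES = frozenset({
    Policy("service_rep", "Contact", "email"),
    Policy("service_rep", "Case", "status"),
    Policy("marketer", "Contact", "email"),
})


def can_read(role: str, obj: str, field: str) -> bool:
    """The single enforcement point that every surface calls."""
    return Policy(role, obj, field) in POLICIES


def filter_record(role: str, obj: str, record: dict) -> dict:
    """Drop fields the role may not read before any app or agent sees them."""
    return {f: v for f, v in record.items() if can_read(role, obj, f)}


contact = {"email": "ada@example.com", "credit_limit": 5000}
app_view = filter_record("service_rep", "Contact", contact)    # application surface
agent_view = filter_record("service_rep", "Contact", contact)  # agent surface
print(app_view == agent_view, app_view)
```

Since neither surface carries its own rules, a policy change in this sketch takes effect everywhere at once.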

What are some of the challenges that were uncovered while enabling enterprise context from unified Data 360 for agentic workflows across applications?

The shift to agentic workflows exposed glaring boundary mismatches. Retrieval systems, application logic, and metadata models simply did not align. Early RAG pipelines often delivered inconsistent or low-confidence results. This stemmed from varied chunking strategies, inconsistent embedding quality, and even the customer’s own specialized vocabulary and poor data hygiene. The identical question could produce wildly different answers, depending on the source material’s structure. The team tackled this by investing in several key technical improvements to bolster retrieval fidelity:

- Stronger embedding models and support for multiple chunking strategies.

- Hybrid-index RAG that merges keyword and vector search.

- Context Intelligence for complex formats like tables, flows, and non-text elements.

- BYOC for domain-specific document structures.

- Initial work on Graph-RAG to maintain structured relationships.
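One common way to merge keyword and vector results is reciprocal rank fusion (RRF). The sketch below shows that general technique only. The interview does not say which fusion method the team uses, and the document IDs and the constant `k = 60` are illustrative assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked ID lists; each list contributes 1 / (k + rank) per document."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by fused score, highest first; break ties by ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


keyword_hits = ["doc_a", "doc_b", "doc_c"]  # hypothetical keyword-search ranking
vector_hits = ["doc_b", "doc_d", "doc_a"]   # hypothetical embedding-similarity ranking
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)  # ['doc_b', 'doc_a', 'doc_d', 'doc_c']
```

Documents that rank well in both lists rise to the top, which is why a hybrid index can be more robust to a customer's specialized vocabulary than either search mode alone.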

As applications evolved to become agentic, the need for a unified data runtime within the broader Platform became even more fundamental. This runtime now ensures agents, workflows, and applications operate with consistent semantics, even as the underlying retrieval systems, metadata, and personalization layers continue to evolve.

What factors shaped your approach to evolving the Agentforce 360 Platform capabilities and extensibility for internal and customer engineering teams, partner ISVs, and broader AI agent developers building on top of the unified stack?

Evolving the Platform’s capabilities and extensibility proved a formidable challenge because the work had to proceed alongside rapid Platform transformation, often while systems were already running at scale in production. As adoption accelerated, availability expectations climbed, demanding four-nines (99.99%) availability across apps, messaging, data activation, and agent execution. Delivering new capabilities under live load mandated thoughtful architectural governance, predictable release cycles, and tight team coordination.
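For a sense of scale, a four-nines target (99.99% availability) leaves a very small downtime budget. The short calculation below works it out. The function name and the 30-day window are choices made for this example.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, ignoring leap years


def downtime_budget_minutes(availability: float, period_minutes: int = MINUTES_PER_YEAR) -> float:
    """Maximum downtime allowed in the period at the given availability (0 to 1)."""
    return (1.0 - availability) * period_minutes


yearly = downtime_budget_minutes(0.9999)
monthly = downtime_budget_minutes(0.9999, 30 * 24 * 60)
print(f"{yearly:.1f} min/year, {monthly:.1f} min/30 days")  # 52.6 min/year, 4.3 min/30 days
```

With under an hour of allowable downtime per year, releases under live load need early detection and quick remediation.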

We built stability through:

- Structured technical governance, with local and rolled-up tech summits spanning Data 360, Agentforce, and applications.

- Cross-layer architectural reviews, ensuring Platform abstractions met real application requirements.

- Fast, safe release processes, using patch-style cadences for early detection and quick remediation.

- Closed-loop validation, where application and customer use directly shaped Platform features.

This approach maintained the agility necessary for rapid innovation. It also preserved coherence and extensibility across all layers of the unified Agentforce 360 Platform.

The Deeply Unified Platform.
