In our “Engineering Energizers” Q&A series, we highlight the engineering minds driving innovation across Salesforce. Today, we feature Phil Mui, SVP of Agentforce Software Engineering. Phil and his team led the development of agent graph, the core technology behind hybrid reasoning. This groundbreaking approach externalizes reasoning into design-time graphs, ensuring reliable behavior (enterprise “Standard Operating Procedures”) while preserving natural conversational flow.

Discover how his team tackles the “drop-off” issues common in enterprise implementations, squarely addressing the unpredictability of LLMs through graph-based reasoning for deterministic workflows. These advancements set the stage for major Dreamforce announcements, with a General Availability (GA) release planned for later this year.

Why is it hard to build reliable agents for enterprises?

Enterprises demand accuracy, control, and consistency at scale. Yet most agents rely on hand-crafted prompts that create opaque, unpredictable systems. Two fundamental problems emerge:

1) Agents are hard to control

- LLM stochasticity. Language models are trained and run as statistical systems over a very high-dimensional latent space, which makes it hard to obtain clear, repeatable input-output behavior.

- Language ambiguity. Natural language as input is inherently ambiguous.

- Brittle rule trees. Simply adding if/else rules or “flows” as agentic actions can devolve quickly into unmanageable, interdependent logic that’s hard to test and maintain.

- Inconsistent experiences. The result is uneven outcomes across channels, users, and sessions.

2) Monolithic, “one-agent-per-topic” designs


Reliable agents require architectural thinking beyond prompts:

- Topology level: define the graph of agents — what agentic nodes exist, the contracts between them, and the transitions that govern information flow, interrupts, and recovery.

- Node level: configure each node’s capabilities (model, tools, instructions, and lifecycle actions) so that the node exits deterministically despite its probabilistic internals. Example lifecycle actions include init_agent, before_reasoning, and tool_call.
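To make the two levels concrete, here is a minimal Python sketch, assuming a hypothetical in-memory runtime. The `Node`, `Graph`, `step`, and `log_entry` names are invented for illustration and are not Agentforce or Agent Script APIs. The topology is a table of declared transitions; each node carries its own model, tools, instructions, and lifecycle hooks, and its probabilistic reasoning step is stubbed by a function that returns an exit label. Only `before_reasoning` is wired up here; `init_agent` and `tool_call` would attach the same way.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical types for illustration only; these are not Agentforce APIs.
State = Dict[str, Any]


@dataclass
class Node:
    """Node-level configuration: model, tools, instructions, lifecycle hooks."""
    name: str
    model: str                 # model identifier (illustrative string)
    tools: List[str]
    instructions: str
    # Lifecycle hooks keyed by event name, e.g. "before_reasoning".
    hooks: Dict[str, Callable[[State], None]] = field(default_factory=dict)
    # Stand-in for the probabilistic LLM step; returns an exit label.
    reason: Callable[[State], str] = lambda state: "done"


@dataclass
class Graph:
    """Topology-level configuration: nodes plus declared transitions."""
    nodes: Dict[str, Node]
    # (node name, exit label) -> name of the next node
    edges: Dict[Tuple[str, str], str]

    def step(self, current: str, state: State) -> str:
        node = self.nodes[current]
        hook = node.hooks.get("before_reasoning")
        if hook is not None:
            hook(state)
        label = node.reason(state)
        # Deterministic exit: a node may only leave through a declared edge.
        if (current, label) not in self.edges:
            raise ValueError(f"{current!r} has no transition for exit {label!r}")
        return self.edges[(current, label)]


def log_entry(state: State) -> None:
    # Example hook: record that the lifecycle event fired.
    state.setdefault("log", []).append("before_reasoning")


graph = Graph(
    nodes={
        "collect_info": Node(
            name="collect_info",
            model="example-llm",
            tools=["lookup_customer"],
            instructions="Ask for the customer's email address.",
            hooks={"before_reasoning": log_entry},
            reason=lambda s: "complete" if s.get("email") else "missing",
        ),
        "confirm": Node("confirm", "example-llm", [], "Confirm the details."),
    },
    edges={
        ("collect_info", "complete"): "confirm",
        ("collect_info", "missing"): "collect_info",
        ("confirm", "done"): "end",
    },
)

state: State = {"email": "ada@example.com"}
print(graph.step("collect_info", state))  # prints: confirm
```

The point of the split is that the node picks a label, but the graph picks the destination; a label with no declared edge fails loudly instead of drifting.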

Why this matters: most agent designers today over-emphasize prompt tweaking, which leaves topology-level optimization opportunities untapped. Research consistently shows that reasoning and acting with tools, self-reflection, memory, and deterministic control planes (workflows with checkpoints) deliver more robust outcomes than prompt-only tuning. Agent graph makes these topological controls first-class. Recent announcements from OpenAI, ElevenLabs, Intercom, and others show that the industry as a whole is moving in this strategic direction.

What is your team’s mission for building enterprise-scale LLM agent control systems?

Our mission is to engineer enterprise-scale LLM agents that are both highly autonomous and reliably deterministic, resolving the core tension between agent autonomy and developer control. Our solution is hybrid reasoning, which uses an agent graph to guide the LLM’s reasoning.

Instead of monolithic agents, we decompose complex workflows into smaller cognitive tasks — each handled by a focused, steerable subagent (for chitchat detection, query rewriting, etc.). We leverage finite state machines (FSMs) to manage state transitions while preserving the LLM’s natural language understanding, enabling “guided determinism.”
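A minimal sketch of guided determinism, assuming hypothetical subagents: in Python, an `Enum` names the FSM states, and a transition table keyed by (state, label) decides what happens next. The `detect_chitchat` and `rewrite_query` functions are rule-based stand-ins for the focused LLM subagents mentioned above, so the example runs without a model; `Step`, `TRANSITIONS`, and `run` are likewise illustrative names.

```python
from enum import Enum, auto
from typing import Dict, List, Tuple


class Step(Enum):
    DETECT_CHITCHAT = auto()
    SMALL_TALK = auto()
    REWRITE_QUERY = auto()
    ANSWER = auto()
    DONE = auto()


# Hypothetical stand-ins for focused subagents. In a real system each would
# be a small, steerable LLM call; here they are simple rules so the sketch runs.
GREETINGS = {"hi", "hello", "hey", "thanks"}


def detect_chitchat(text: str) -> str:
    words = text.lower().split()
    first = words[0].strip("!,.?") if words else ""
    return "chitchat" if first in GREETINGS else "task"


def rewrite_query(text: str) -> str:
    # Normalize the user's request into a standalone question.
    return text.strip().rstrip("?.!") + "?"


# The FSM, not the model, owns the transitions: (state, label) -> next state.
TRANSITIONS: Dict[Tuple[Step, str], Step] = {
    (Step.DETECT_CHITCHAT, "chitchat"): Step.SMALL_TALK,
    (Step.DETECT_CHITCHAT, "task"): Step.REWRITE_QUERY,
    (Step.SMALL_TALK, "ok"): Step.DONE,
    (Step.REWRITE_QUERY, "ok"): Step.ANSWER,
    (Step.ANSWER, "ok"): Step.DONE,
}


def run(text: str) -> Tuple[List[Step], str]:
    """Drive one user turn through the FSM; return visited states and final query."""
    state, trace, query = Step.DETECT_CHITCHAT, [], text
    while state is not Step.DONE:
        trace.append(state)
        if state is Step.DETECT_CHITCHAT:
            label = detect_chitchat(query)
        elif state is Step.REWRITE_QUERY:
            query, label = rewrite_query(query), "ok"
        else:
            label = "ok"  # SMALL_TALK and ANSWER would call their own subagents here
        # A missing key raises KeyError, so undeclared transitions are rejected.
        state = TRANSITIONS[(state, label)]
    return trace, query


print(run("Hello!"))
print(run("reset my password"))
```

Swapping a stub for a real model call changes only the label a state produces, not which transitions are legal.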

This hybrid reasoning approach delivers agents with the intelligence to handle real-world complexity and the reliability enterprises require, allowing developers to inject custom logic at precise workflow moments while maintaining full LLM capabilities.

How did customers experience agent context loss in production AI deployments — and what engineering breakthrough solved reliability at scale?

Our most sophisticated customers are struggling to solve agent “drop-off” issues (or “goal drifts”), where agents lose track of their primary objectives when users ask tangential questions. For instance, if a major home builder’s agent is guiding users through filling out an inquiry form, but someone asks, “How’s the weather in Austin?” the agent can lose focus on the goal of completing the form. This challenge is common among customers who have implemented agents in complex, real-world scenarios, representing a fundamental engineering problem that the industry has been striving to address — maintaining context and objectives across dynamic conversational flows.

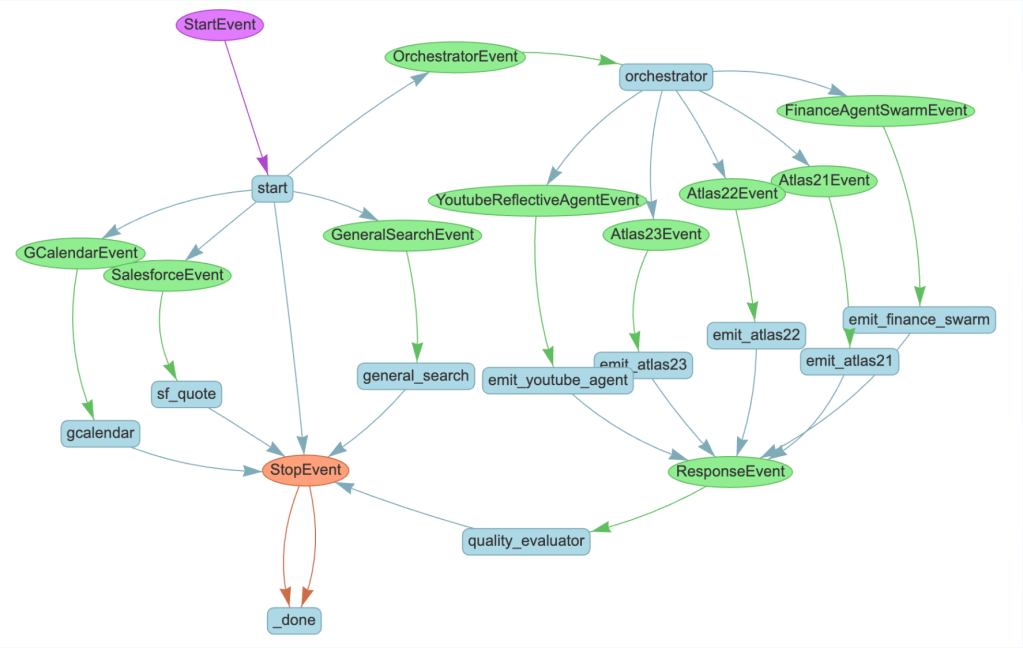

Our team tackled this issue with hybrid reasoning, which implements “guided determinism” through agent graph. A business workflow is modeled as a graph of nodes (discrete tasks) and edges (transitions). Instead of relying solely on conversational memory, the agent graph runtime explicitly manages persistent state, tracking the primary goal, the conversation history, and the current position in the conversation. This state is passed and shared between steps, so context is not lost. Consequently, Agentforce can now handle conversational diversions gracefully while remaining anchored to its goals. This offers a promising solution to agent drop-off at enterprise scale, setting a new standard for conversational AI in complex business environments.
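The home builder scenario can be sketched as explicit state that survives a diversion. This is a minimal Python illustration under stated assumptions: `ConversationState`, `handle_turn`, and the three-field form are hypothetical, and `is_off_topic` is a keyword check standing in for an LLM classifier. The primary goal and the current position live in the state object rather than in the prompt, so an off-topic turn is answered without moving the workflow.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

FORM_FIELDS = ["name", "email", "zip_code"]  # hypothetical inquiry form


@dataclass
class ConversationState:
    """State the runtime persists and passes between steps."""
    primary_goal: str
    position: str  # current node; here, the form field being collected
    history: List[Tuple[str, str]] = field(default_factory=list)
    form: Dict[str, str] = field(default_factory=dict)


def is_off_topic(message: str) -> bool:
    # Hypothetical stand-in for an LLM classifier.
    return "weather" in message.lower()


def next_missing_field(state: ConversationState) -> Optional[str]:
    for name in FORM_FIELDS:
        if name not in state.form:
            return name
    return None


def handle_turn(state: ConversationState, message: str) -> str:
    state.history.append(("user", message))
    if is_off_topic(message):
        # Acknowledge the diversion; goal and position are left untouched.
        reply = (f"Happy to help with that separately. To finish your "
                 f"{state.primary_goal}, what's your {state.position}?")
    else:
        state.form[state.position] = message
        nxt = next_missing_field(state)
        if nxt is None:
            state.position = "done"
            reply = "Thanks, your inquiry has been submitted."
        else:
            state.position = nxt
            reply = f"Got it. What's your {nxt}?"
    state.history.append(("agent", reply))
    return reply


state = ConversationState(primary_goal="inquiry form", position="name")
for msg in ["Ada", "How's the weather in Austin?", "ada@example.com", "78701"]:
    print(handle_turn(state, msg))
```

After the weather question, `position` is still `"email"`, so the next on-topic answer lands in the right field.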

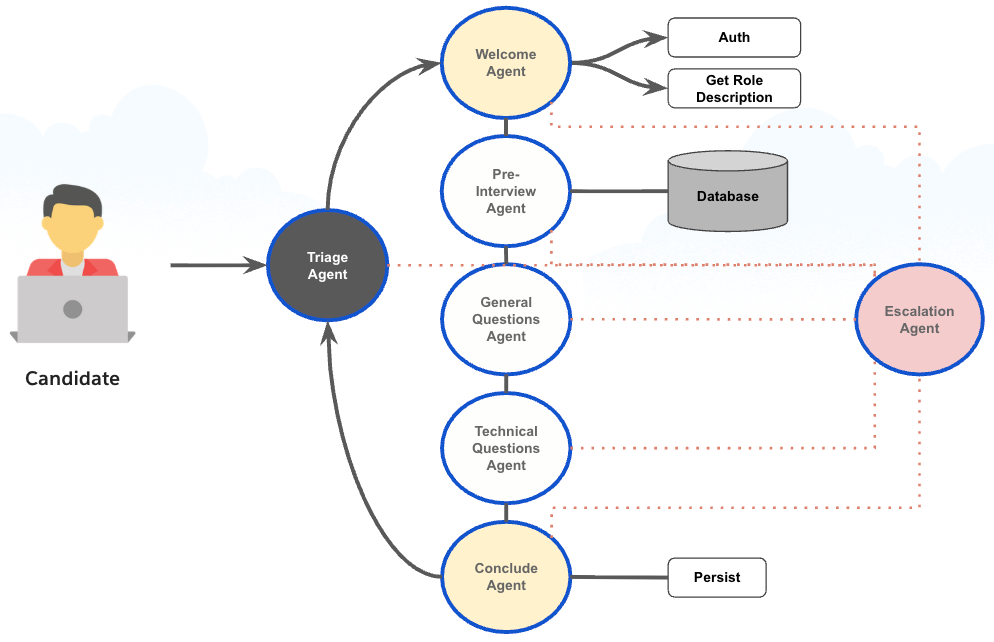

Example of an agent graph that models coordinating agents to provide end-to-end interview experiences for candidates.

What limitations constrained existing AI systems for scaling beyond rule-based architectures — and how did your team build next-generation graph architecture?

Traditional decision trees shatter under production’s weight. Add a product line, shift consumer preferences, and suddenly you’re rewriting brittle rules across sprawling workflows — a maintenance nightmare that doesn’t scale.

Enter the LLM era, and enterprises pivoted to “reasoning through massive prompts.” The result? Goal drift, unpredictable outputs, and zero auditability. Welcome to doom-prompting — that Sisyphean loop of tweaking instructions, refining data, and praying for consistency that never arrives.

The hard truth: LLM reasoning alone cannot carry enterprise load.

What we announced last week at Dreamforce is a natural evolution of our Atlas Reasoning Engine with hybrid reasoning. This style of reasoning takes advantage of a graph runtime that marries LLM intelligence with deterministic control. Agent graph’s customizable runtime operates on low-level graph metadata, now scriptable through Agent Script.

The future isn’t endless prompt refinement. It’s structured, auditable workflows that break the doom-prompting loop. OpenAI’s AgentKit, ElevenLabs’ Workflow, and tools like Flowise and n8n are converging on this insight: reliability demands architecture, not LLM alchemy.

What multi-agent workflow orchestration challenges required new coordination patterns — and how did engineers solve complex coordination?

Multi-agent orchestration confronts two hard realities: LLMs’ stochastic nature makes them unreliable partners in sequential workflows, and managing causality — ensuring dependent tasks execute in proper order with proper context — becomes treacherous when coordination relies on implicit runtime reasoning. The result: unreliable handoffs, orphaned context, broken task chains.

In hybrid reasoning, we introduced two coordination patterns as first-class primitives in the underlying agent graph: handoff (passing full conversation context between agents) and delegation (a “concierge” orchestrator farming subtasks to specialists, then synthesizing their returns).

Consider the causal query: “What’s the capital of France and how many people live there?” The orchestrator recognizes the dependency, delegates the first question, receives “Paris,” then reformulates the second as “How many people live in Paris?” Causality preserved through explicit structure, not model intuition.
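The delegation pattern and the causal query above can be sketched as an ordered plan whose subtasks declare their dependencies. In this minimal Python example, `Subtask`, `delegate`, and the two specialists are hypothetical; the decomposition is written by hand here, whereas in the described system the orchestrator would produce it. The demographics specialist returns a placeholder rather than a real population figure, and the handoff pattern (passing the full conversation to another agent) is not shown.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Subtask:
    template: str                     # may use {r0}, {r1}, ... for earlier results
    specialist: str
    depends_on: Tuple[int, ...] = ()  # indexes of earlier subtasks


def delegate(plan: List[Subtask],
             specialists: Dict[str, Callable[[str], str]]) -> List[str]:
    """Run subtasks in order, filling each template from declared dependencies."""
    results: List[str] = []
    for i, task in enumerate(plan):
        for dep in task.depends_on:
            if not 0 <= dep < i:
                raise ValueError(f"subtask {i} depends on non-earlier subtask {dep}")
        # Only declared dependencies are visible when reformulating the question.
        question = task.template.format(**{f"r{d}": results[d] for d in task.depends_on})
        results.append(specialists[task.specialist](question))
    return results


# Hypothetical specialists that record what they were asked.
asked: List[str] = []


def geography(question: str) -> str:
    asked.append(question)
    return "Paris" if question == "What is the capital of France?" else "unknown"


def demographics(question: str) -> str:
    asked.append(question)
    return f"<population answer for: {question}>"  # placeholder, no real data


PLAN = [
    Subtask("What is the capital of France?", "geography"),
    Subtask("How many people live in {r0}?", "demographics", depends_on=(0,)),
]
results = delegate(PLAN, {"geography": geography, "demographics": demographics})
# Synthesis step: the orchestrator combines the specialists' returns.
answer = f"The capital of France is {results[0]}; population: {results[1]}"
print(asked[1])  # prints: How many people live in Paris?
```

Because the second question is built from the first answer through a declared dependency, the specialist receives a self-contained question instead of relying on the model to infer what "there" refers to.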

The architecture’s key insight: orchestration as design-time configuration, not runtime improvisation. The graph runtime becomes the conductor in hybrid reasoning — coordination patterns flow from its topology, not from fragile prompt engineering. The industry is learning: reliable multi-agent systems demand explicit choreography, not more prompt incantations.

What technical challenges did the team face deploying enterprise AI agent systems at production scale — and how are you measuring breakthrough performance?

Transitioning from prototype to enterprise-scale production brought about fundamental challenges, particularly in handling edge cases and scaling the underlying architecture. We found that production environments often expose complex scenarios, such as users who escalate inappropriately. The line between legitimate complaints and problematic behavior can vary significantly depending on the industry, country, and time period.

To tackle these challenges, we combined architectural innovation with strategic acquisitions. We brought in specialized expertise through DAGWorks, Watto, and Truva, whose engineers have deep experience in event-driven workflows and graph-based reasoning.

Early Agentforce success at help.salesforce.com, where an agent-oriented approach replaced the traditional browse-oriented help center, led to significant improvements in both automated case resolution and customer satisfaction. This success validates our position as the first company to effectively address these fundamental agent reliability challenges at scale.

What’s next for agent graph?

We would like to help Salesforce teams and our customers optimize how agents think and how they are orchestrated. We are enabling manual customization as well as automatic optimization at both the prompt level (per node) and the topology level (the overall graph).

We are also experimenting with a higher-level language (Agent Script) that can bridge the gap between business users and the underlying metadata that controls the agent graph runtime. Agent Script is designed to be accessible to a variety of frontends and users. Whether or not you have expertise in graph languages, you will be able to use this new language to design your Agentforce system. This means you can benefit from the system’s advanced capabilities without needing to understand the intricate details of graph architecture.

We believe that agent graph and Agent Script, along with updated authoring experiences, mark a significant step forward in making deterministic agentic workflows accessible to all users. Our product teams are working hard to ship these and more Agentforce innovations in the months ahead!
