By Kristin Foss, Jason Nassi, and Vincent Nordell.

In our “Engineering Energizers” Q&A series, we highlight the engineering minds driving innovation across Salesforce. Today, meet Kristin Foss, VP of Software Engineering for Customer Centric Engineering (CCE), who led her 180-person Engineering team to revolutionize customer escalations with AI-powered agents and automation tools.

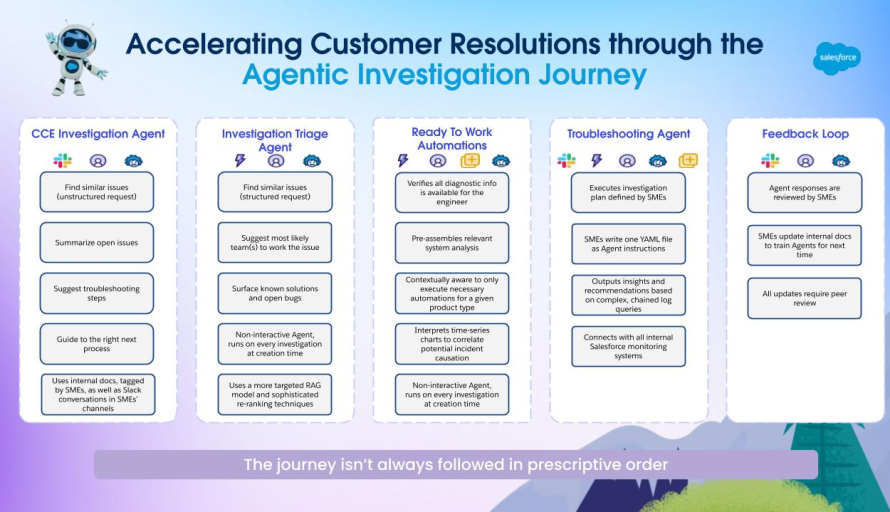

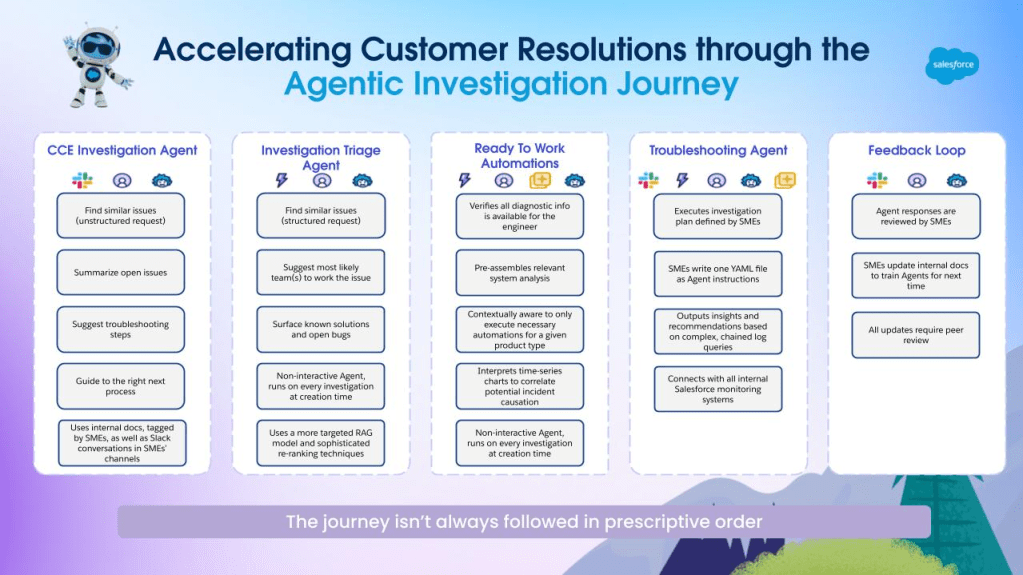

Discover how they increased efficiency by eliminating manual data collection, accelerated problem resolution through intelligent pattern recognition, and scaled specialized expertise across diverse product portfolios — achieving measurable performance gains and transforming reactive firefighting into proactive customer protection.

What is Customer Centric Engineering’s mission as the funded intermediary between customer support and engineering teams?

CCE is dedicated to resolving customer escalations when individual customers face issues that prevent them from completing their business processes, even though our services are running normally. We operate under a funded model, where scrum teams engage CCE to act as a protective barrier, handling escalations, known as investigations, that customer support is unable to resolve.

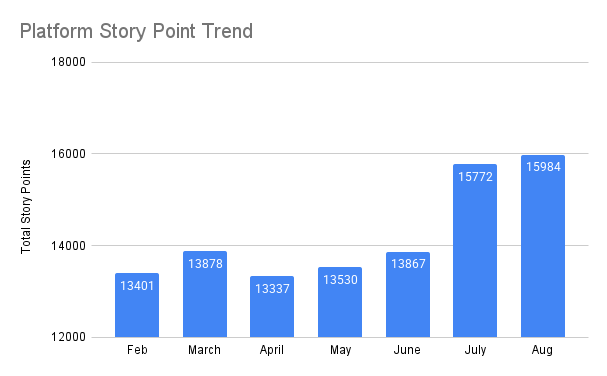

Our analysis has revealed that escalations fall into three distinct categories. About 33% are issues that customer support should have resolved, 25% are bugs discovered by customers before our testing caught them, and the remaining 42% involve complex integration problems. By shifting from average days-to-close metrics to P90 time-to-close analysis, we uncovered granular patterns that averages often mask. This shift revealed specific automation opportunities for each category that would significantly enhance our team’s efficiency.
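To illustrate why a P90 view can surface what an average hides, here is a minimal sketch. The data is invented for illustration, and the nearest-rank percentile is only one common definition; the source does not say how CCE computes its P90.

```python
import math
from statistics import mean

def p90(values):
    """Nearest-rank 90th percentile: the smallest value with at least
    90% of the observations at or below it."""
    ordered = sorted(values)
    rank = math.ceil(9 * len(ordered) / 10)  # 1-based rank
    return ordered[rank - 1]

# Hypothetical days-to-close for two escalation categories (not real CCE data).
steady = [9, 9, 9, 9, 9, 9, 9, 9, 9, 12]
long_tail = [1, 1, 2, 2, 2, 3, 3, 4, 30, 45]

for name, days in (("steady", steady), ("long_tail", long_tail)):
    print(f"{name}: mean={mean(days):.1f} days, P90={p90(days)} days")
```

Both hypothetical categories average 9.3 days, yet their P90 values differ sharply, which is the kind of pattern an average-only metric would not show.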

We function on two levels: directly solving customer problems when funded by specific scrum teams, and developing tools that enable scrum teams to address customer issues independently when funding is not available. Our mission extends beyond individual problem-solving to building infrastructure that scales customer issue resolution across the organization.

What manual data collection challenges were slowing your engineers, and how did automation eliminate these productivity bottlenecks?

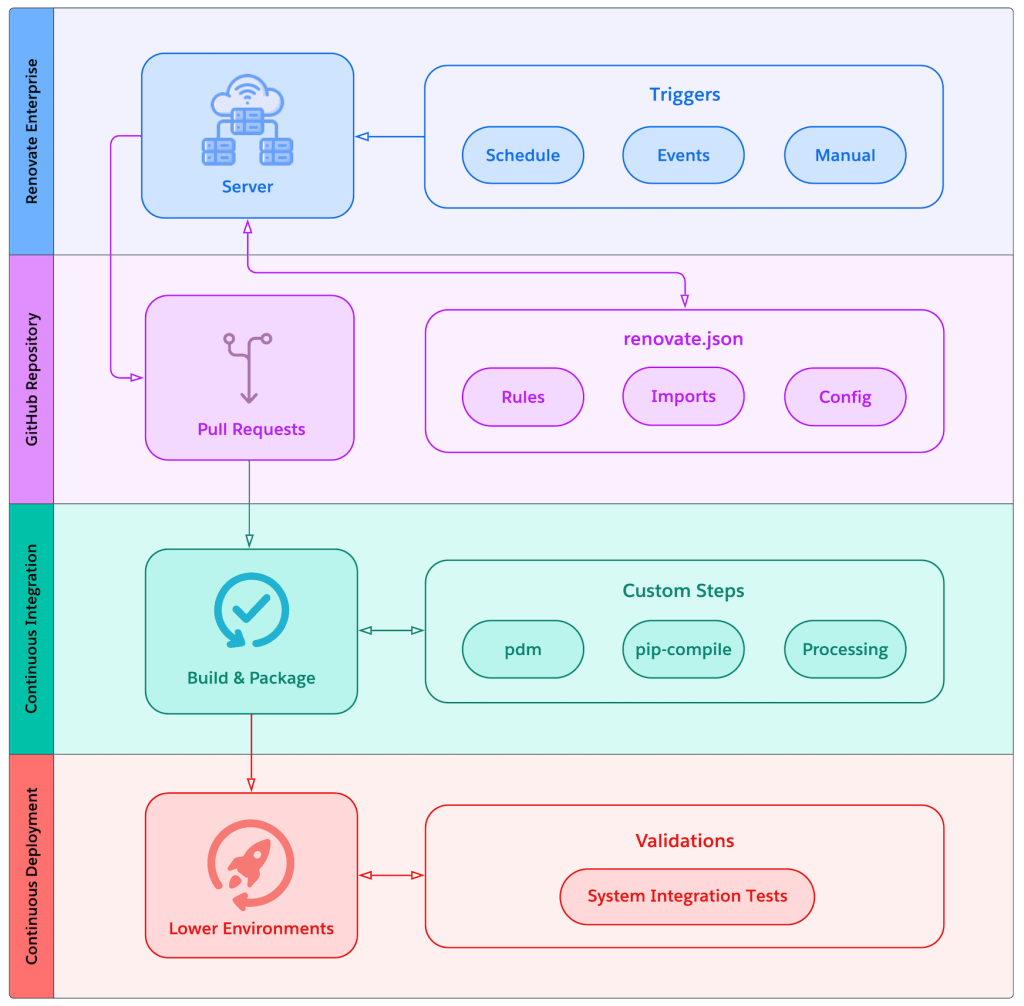

Manual data collection was a significant efficiency drain for our engineers, who often spent entire days gathering Splunk logs and diagnostic information before they could even start troubleshooting. This front-loaded inefficiency meant that a substantial amount of engineering effort was wasted on routine data assembly rather than addressing customer problems.

To tackle this issue, we developed what we call a “ready to work state.” Automated systems now collect all the data that engineers would typically gather manually, eliminating days of repetitive tasks. When engineers open investigation objects, they find comprehensive diagnostic information pre-assembled, including Splunk logs and relevant system data. This allows them to immediately begin problem-solving instead of hunting for data.

The automation is designed to understand that for specific product areas, engineers consistently collect particular data sets. Therefore, it proactively retrieves this information based on problem categorization. This breakthrough completely eliminates the hours engineers previously spent on manual assembly, enabling them to focus immediately on analysis and resolution. Now, what once required days of preparation can be accomplished in just minutes.
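One way to picture category-driven collection is a lookup from problem category to the collectors that should run. This is only a sketch under stated assumptions: the category names, collector functions, and return values below are hypothetical placeholders, not CCE's actual system or any real Splunk API.

```python
from typing import Callable, Dict, List

Collector = Callable[[str], str]

# Hypothetical collectors; real ones would query logging and diagnostic systems.
def collect_splunk_logs(investigation_id: str) -> str:
    return f"splunk-logs:{investigation_id}"

def collect_org_config(investigation_id: str) -> str:
    return f"org-config:{investigation_id}"

def collect_integration_traces(investigation_id: str) -> str:
    return f"integration-traces:{investigation_id}"

# Assumed mapping from problem category to the data engineers usually gather.
COLLECTORS_BY_CATEGORY: Dict[str, List[Collector]] = {
    "integration": [collect_splunk_logs, collect_integration_traces],
    "configuration": [collect_splunk_logs, collect_org_config],
}

def prepare_ready_to_work(investigation_id: str, category: str) -> List[str]:
    """Run every collector registered for the category; fall back to logs only."""
    collectors = COLLECTORS_BY_CATEGORY.get(category, [collect_splunk_logs])
    return [collect(investigation_id) for collect in collectors]

print(prepare_ready_to_work("INV-001", "integration"))
```

Keeping the mapping as data means a new product area can be supported by adding an entry rather than changing the collection logic.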

What technical challenges did you face building AI pattern recognition systems that connect current customer problems with past solutions?

The efficiency challenge we faced centered on engineers repeatedly solving identical problems because each customer described issues differently, forcing teams to reinvent solutions that already existed in our knowledge base. Engineers were spending considerable time investigating problems that their colleagues had already resolved months earlier.

The complexity arises because customers use entirely different terminology, context, and technical language to describe what ultimately traces back to the same root cause. For example, one customer might describe a timeout issue as “slow performance during peak hours,” while another reports “intermittent connectivity failures in batch processing.” Both issues could stem from the same underlying infrastructure bottleneck, but the symptom descriptions provide no obvious connection.

To address this, we implemented AI agents that excel at pattern matching using large language models. These AI agents significantly accelerate the translation between customer-reported symptoms and documented solutions in our systems. The AI instantly identifies when new problems match previously solved investigations, even when they were handled by different engineers months apart. This breakthrough in knowledge continuity across our organization means that engineers can leverage collective expertise instead of starting from scratch on familiar problems.
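The matching step might be sketched as a prompt that lists solved investigations and asks a model which one shares a root cause with the new symptom. Everything here is an assumption made for illustration: the prompt wording, the investigation IDs, and the `ask_llm` callable, which stands in for whatever model client is used. The source does not describe CCE's actual prompts or agent design.

```python
from typing import Callable, Dict, Optional

def build_match_prompt(new_symptom: str, solved: Dict[str, str]) -> str:
    """Assemble a prompt listing solved investigations and the new symptom."""
    lines = [
        "You match customer-reported symptoms to previously solved investigations.",
        "Reply with the ID of the investigation that shares the same root cause, or NONE.",
        "",
        "Solved investigations:",
    ]
    for inv_id, summary in solved.items():
        lines.append(f"- {inv_id}: {summary}")
    lines += ["", f"New symptom: {new_symptom}"]
    return "\n".join(lines)

def find_prior_solution(
    new_symptom: str,
    solved: Dict[str, str],
    ask_llm: Callable[[str], str],  # hypothetical: takes a prompt, returns text
) -> Optional[str]:
    """Return the matching investigation ID, or None if the model finds none."""
    answer = ask_llm(build_match_prompt(new_symptom, solved)).strip()
    # Accept only IDs that exist, so an invented ID is never passed along.
    return answer if answer in solved else None

# Usage with a stub in place of a real model call.
solved = {"INV-1": "Slow performance during peak hours; root cause: queue saturation"}
stub_llm = lambda prompt: "INV-1"
print(find_prior_solution("Intermittent connectivity failures in batch processing", solved, stub_llm))
```

Validating the reply against known IDs is a small guard that keeps a hallucinated reference from reaching an engineer.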

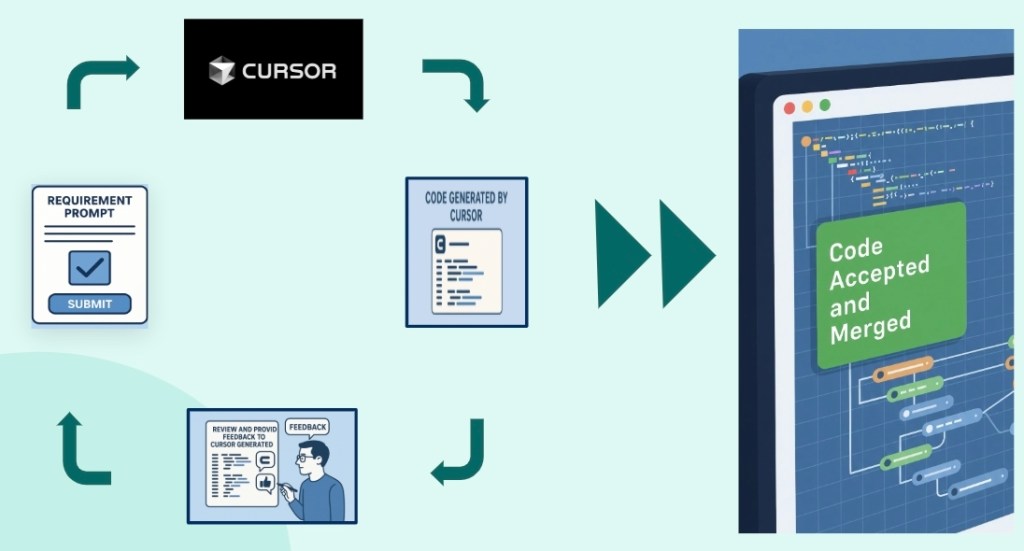

What debugging challenges led your team to adopt AI coding tools like Cursor and Windsurf for customer implementation troubleshooting?

One of the major bottlenecks in our debugging process was the excessive time engineers spent manually reproducing customer-specific issues using internal source code. When customers faced problems with compiled implementations, engineers had to meticulously analyze customer configurations and spend hours, if not days, trying to replicate these issues in basic development environments.

To streamline this process, our CCE engineers now leverage Cursor, Windsurf, and Claude CLI. These AI-powered development tools allow them to quickly create working reproductions that mirror customer problems using internal source code, drastically speeding up our debugging workflows. Instead of the labor-intensive and time-consuming manual methods, these tools provide a systematic and efficient way to identify issues within large codebases in a matter of minutes.

Additionally, they help pinpoint the exact code changes that led to customer issues, offer suggestions for fixes, and automatically generate unit and regression tests to prevent recurrence. This shift to AI-assisted debugging has fundamentally transformed our efficiency, enabling engineers to handle complex reproduction and analysis tasks that once required extensive manual effort and time. Now, they can focus more on solving problems and less on setting up the environment to do so.

What cross-domain expertise challenges emerge when training AI agents to handle multi-product Salesforce customer scenarios?

The efficiency challenge arises when AI agents, trained on extensive product documentation, provide responses that don’t align with the customer’s specific technical stack. This misalignment forces engineers to shift their troubleshooting approach, costing valuable time and delaying problem resolution.

This issue stems from the fact that our documentation and training data naturally include more detailed examples for certain product combinations. As a result, the AI tends to recommend solutions based on the most well-documented scenarios rather than analyzing the customer’s unique technical environment. For instance, a customer running a complex MuleSoft integration with custom APIs requires MuleSoft-specific debugging methods, not generic Service Cloud troubleshooting steps that might work in isolation but fail in their integrated setup.

Prompt engineering and context engineering are crucial for improving the accuracy of AI agent responses. By continuously refining our context engineering techniques, we ensure that the AI agents understand the specific product context and provide relevant solutions. This approach eliminates the inefficiency of following incorrect troubleshooting paths and allows engineers to focus immediately on the most pertinent fixes.
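As a rough illustration of context engineering, the sketch below filters guidance snippets down to the customer's product stack before they reach the agent. The snippet structure, field names, and prompt wording are assumptions for this example; the source does not specify how CCE assembles context.

```python
from typing import Dict, List

def build_context(customer_products: List[str], snippets: List[Dict[str, str]]) -> str:
    """Keep only guidance for products the customer runs, then format
    it as a context block for the agent prompt."""
    stack = {product.lower() for product in customer_products}
    relevant = [s for s in snippets if s["product"].lower() in stack]
    header = "Customer technical stack: " + ", ".join(customer_products)
    body = [f"[{s['product']}] {s['text']}" for s in relevant]
    return "\n".join([header, "Use only the guidance below:"] + body)

# Hypothetical knowledge snippets tagged by product.
snippets = [
    {"product": "MuleSoft", "text": "Check connector timeout settings on the custom API flow."},
    {"product": "Service Cloud", "text": "Review case assignment rules."},
]

print(build_context(["MuleSoft"], snippets))
```

With this filter, a MuleSoft customer's prompt carries only MuleSoft guidance, so well-documented but irrelevant Service Cloud steps never enter the context.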

What customer support automation metrics demonstrate reduced escalation times and executive involvement prevention?

CCE’s AI implementation has brought about significant performance improvements in several areas:

- Automated Decisions: We increased automation in Apex LAPs (single org change decisions) from 25% to 70%, effectively eliminating manual review bottlenecks.

- Response Times: When automated decisions were available, LAP request processing time was reduced from 48 hours to under 10 minutes, a 99.7% improvement that allows engineers to focus on higher-value tasks.

- Investigation Triage: Our Investigation Triage Agent has found over 200 bugs and over 500 directly relevant work items, saving engineering hours and improving time to close (TTC) for all of our customers.
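The response-time figure can be checked with simple arithmetic. The sketch below treats "under 10 minutes" as exactly 10 minutes, so the result is a lower bound on the improvement.

```python
def percent_reduction(before_minutes: float, after_minutes: float) -> float:
    """Percentage drop from the old processing time to the new one."""
    return (before_minutes - after_minutes) / before_minutes * 100

# 48 hours expressed in minutes versus a 10-minute automated decision.
lap_reduction = percent_reduction(48 * 60, 10)
print(f"{lap_reduction:.1f}%")  # prints "99.7%"
```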

However, it’s challenging to pinpoint the exact impact of specific AI efforts, as we ran multiple automation initiatives in parallel. Despite this, the overall trend clearly indicates significant efficiency gains, enabling our teams to address more customer issues with the same engineering resources.
