In our “Engineering Energizers” Q&A series, we highlight the engineering minds driving innovation across Salesforce. Today, we feature Madhavi Kavathekar, a Director of Software Engineering who oversees the development of Feedback and Audit Trail, an AI auditing system within Agentforce.

Discover how Madhavi’s team integrated this system with Data Cloud despite significant technical challenges, scaled it with Kafka-based ingestion to handle unpredictable AI traffic, and coordinated with eight to ten cross-functional teams. The solution now supports 500 enterprise customers and handles 20 million model interactions monthly while maintaining the highest standards of trust, security, and compliance.

What is the mission of the Feedback and Audit Trail team for building AI agent auditing systems in Agentforce’s platform?

The Feedback and Audit Trail team is dedicated to designing, building, and scaling backend systems that provide transparency into AI agent behavior within Agentforce. Our goal is to create a reliable, scalable, and secure foundation for capturing and processing generative AI interaction data, enabling both internal data scientists and external customers to understand, debug, and enhance AI agent performance. The Feedback and Audit Trail feature now supports over 500 customers, making it a vital part of our AI-driven solutions.

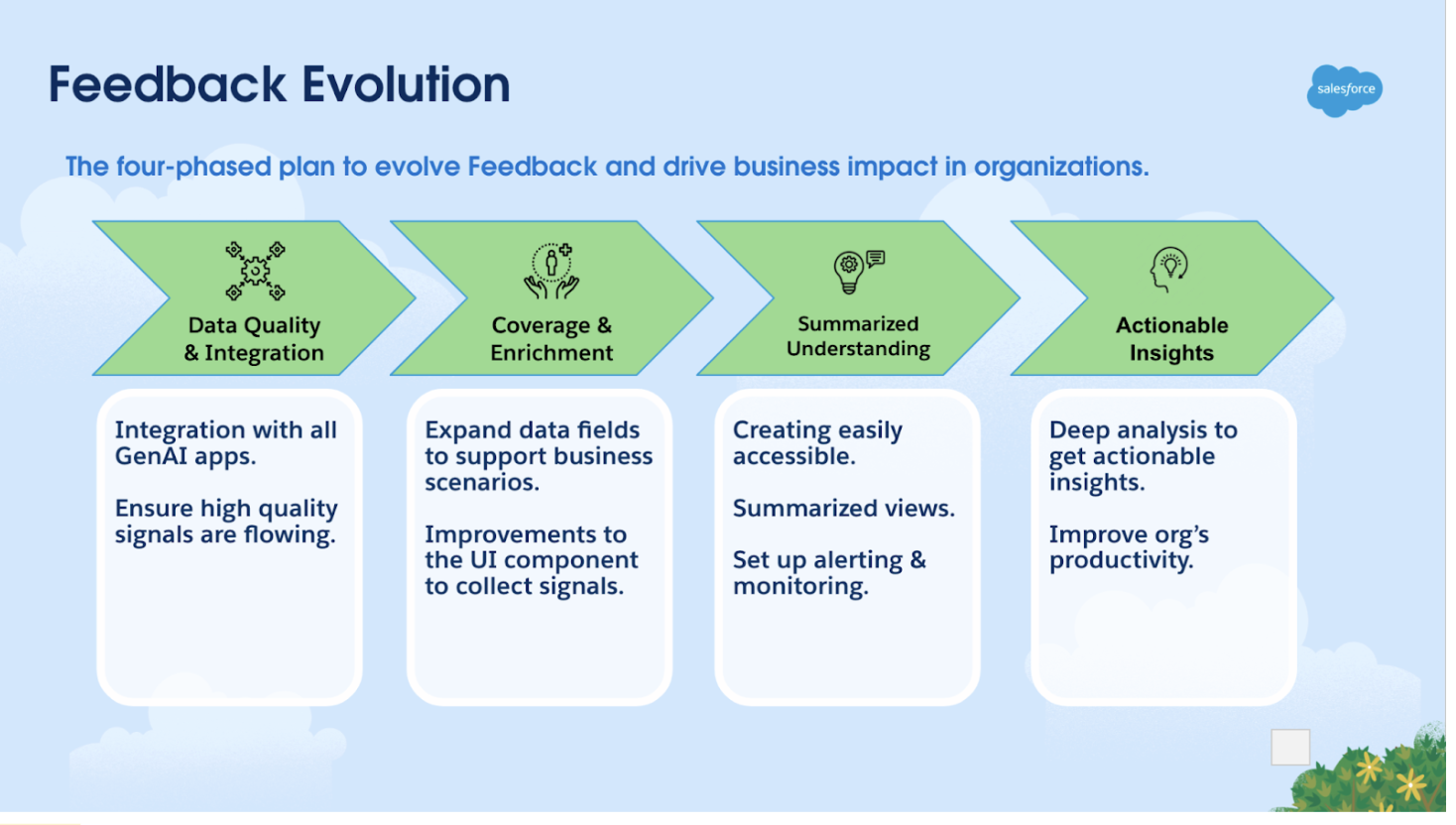

Evolution of collecting generative AI feedback for deriving business impact.

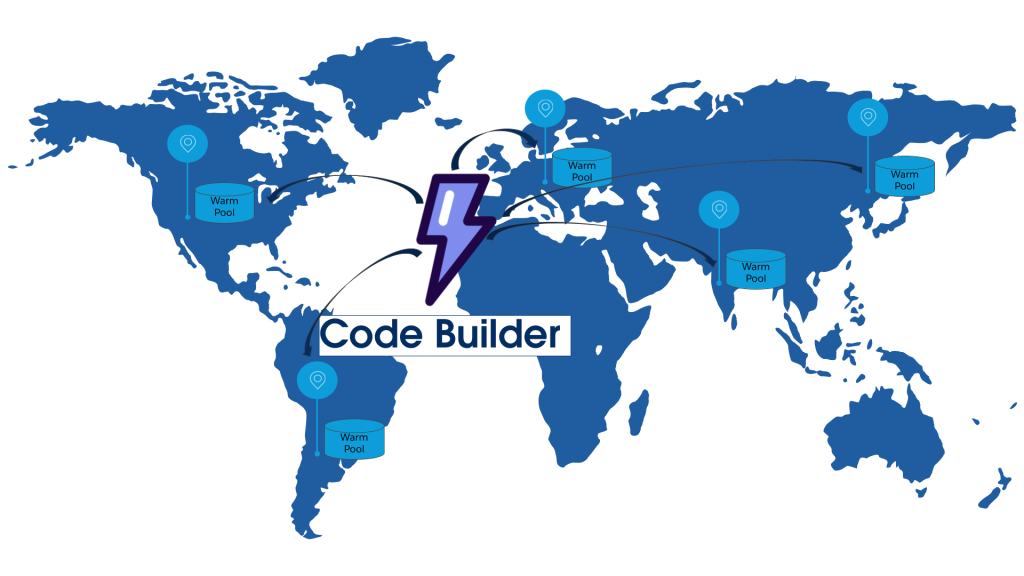

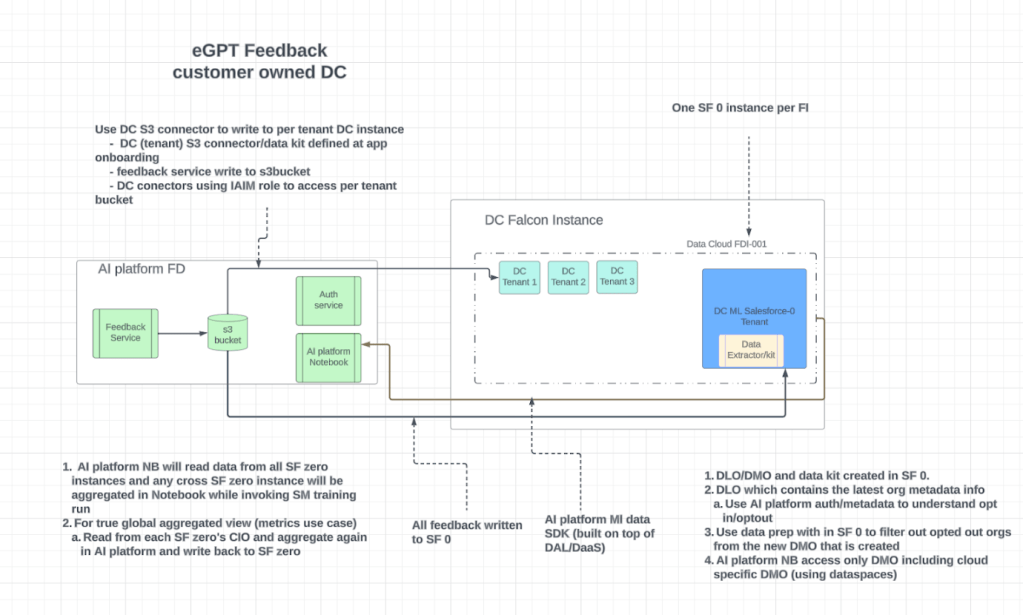

To achieve this, the team developed a robust data pipeline architecture, integrated deeply with Data Cloud, and implemented a Kafka-based ingestion model to handle unpredictable traffic patterns from global users. These efforts ensured that audit data is captured accurately and delivered efficiently at scale.

Collaboration was key, with the team working across eight to ten dependent groups to address feature gaps, API limitations, and architectural constraints. The Tiger Team structure, featuring representatives from each group, was instrumental in managing these dependencies. It helped identify and resolve issues early, driving alignment and ensuring the final product met our high standards of trust, security, and compliance.

What was the toughest technical challenge in integrating Feedback and Audit Trail with Data Cloud for AI auditing?

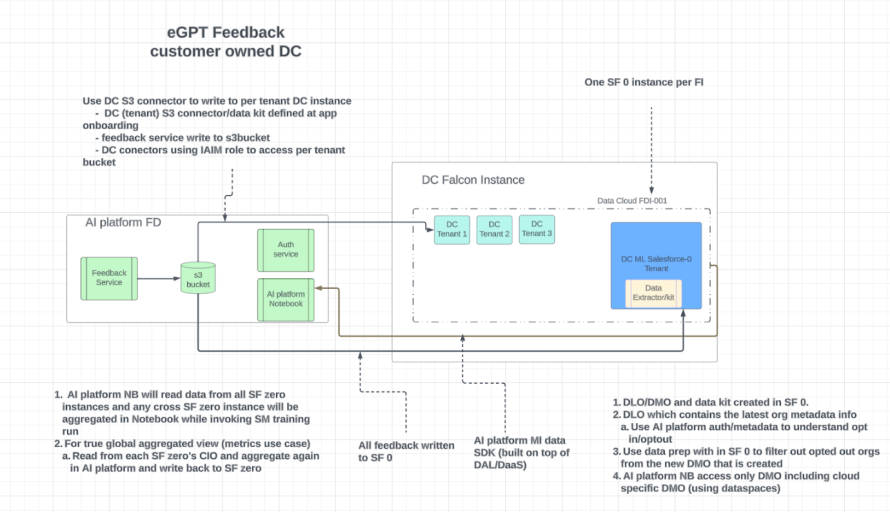

Integrating Feedback and Audit Trail with Data Cloud introduced significant technical challenges, especially as this was the first such integration. Data Cloud supported customer-owned S3 buckets, but our system required Salesforce-controlled S3 buckets, creating a fundamental mismatch. This led to the need for a community-supported solution. The team also faced frequent feature gaps, unexpected limitations, and non-standard scenarios, which required close collaboration with Data Cloud architects to resolve.

To address these challenges, the team adopted an iterative development approach. They built proof-of-concept components, conducted technical spikes, and validated integration patterns. A Tiger Team, consisting of representatives from each of the eight to ten dependent teams, managed cross-team dependencies and unblocked technical roadblocks. Weekly meetings and structured backlog grooming helped identify and resolve issues like file size constraints, ingestion bottlenecks, and edge-case behaviors, enabling steady progress.

The solution was the result of a coordinated, iterative effort, characterized by pragmatic engineering trade-offs under tight timelines.

High-level architecture for collecting feedback and making it available for customers in their Data Cloud instance.

How does the Feedback and Audit Trail system manage scalability challenges for AI model interactions at enterprise scale?

The system supports more than 500 customers and processes millions of LLM requests each month. Managing scalability has been a major challenge, primarily because of uneven traffic patterns (sharp spikes during business hours and minimal activity at night) and the unpredictable nature of AI workloads. The architecture relies on a Kafka pub-sub model for data ingestion, which needed significant tuning to absorb fluctuating data loads without overloading resources or adding latency.

To address these issues, the team implemented dynamic flow control mechanisms that adjust data consumption rates in real time. This prevents overloads during peak periods and minimizes wasted resources during quieter times. Working closely with Data Cloud, the team established best practices for file sizes, batch counts, and ingestion patterns so that the system can manage large volumes of AI feedback data without performance degradation.
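The article does not say which flow-control algorithm the team uses. As an illustration only, here is a minimal sketch of one common approach, additive-increase/multiplicative-decrease (AIMD), applied to a consumer's per-poll batch size. The class name, its parameters, and the use of downstream backlog as the overload signal are all hypothetical; how a real Kafka consumer would apply the computed size (for example, by pausing and resuming partitions) is left out.

```python
from dataclasses import dataclass


@dataclass
class FlowController:
    """Hypothetical AIMD-style controller for a consumer's per-poll batch size.

    Grows the batch additively while the downstream backlog stays at or below
    a target, and shrinks it multiplicatively when the backlog exceeds it.
    """
    min_batch: int = 100          # never request fewer records than this
    max_batch: int = 5_000        # never request more records than this
    step: int = 100               # additive increase per healthy poll
    backoff: float = 0.5          # multiplicative decrease factor on overload
    target_backlog: int = 10_000  # backlog above which we treat the sink as overloaded
    batch: int = 500              # current batch size

    def next_batch_size(self, backlog: int) -> int:
        """Return the number of records to request on the next poll."""
        if backlog > self.target_backlog:
            # Downstream is falling behind (e.g. a business-hours spike): back off sharply.
            self.batch = max(self.min_batch, int(self.batch * self.backoff))
        else:
            # Headroom available (e.g. overnight): ramp up gradually.
            self.batch = min(self.max_batch, self.batch + self.step)
        return self.batch
```

Starting from the default batch of 500, a poll that reports a backlog of 2,000 grows the batch to 600, and a following poll that reports 20,000 halves it to 300. AIMD is used here because it ramps up cautiously and backs off quickly, which suits traffic with sharp daytime spikes; other control schemes would work as well.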

Scalability is seen as an ongoing process, driven by simulation testing, performance monitoring, and continuous architectural improvements to meet the growing needs of customers.

What strategies are used in the Feedback and Audit Trail system to avoid regressions when scaling AI audit pipelines?

To prevent regressions in a multi-team, cross-cloud environment, structured collaboration and disciplined backlog management were essential. The Tiger Team acted as a central hub for aligning dependencies, highlighting conflicts, and ensuring that changes in one area, like Data Cloud ingestion patterns, didn’t disrupt other parts of the system.

Weekly meetings kept everyone updated on progress, identified blockers, and adjusted priorities as needed. Backlog grooming sessions focused on emerging challenges, such as optimizing Kafka flow control and updating ingestion schemas.

A shared understanding of the architecture, clear roles, and transparency were vital for managing risk. While the process wasn’t perfect, this structured approach significantly reduced unintended consequences and ensured that scaling efforts and new features did not undermine system stability or existing functions.

What ongoing R&D efforts are improving scalability, performance, and reliability in the Feedback and Audit Trail system for AI workloads?

R&D efforts are centered on scaling capacity to manage unpredictable AI traffic patterns while optimizing for cost and performance. A key focus is tuning Kafka flow control to ensure data ingestion matches processing capacity, preventing system overloads during peak times and resource wastage during low-activity periods.

Collaboration with Data Cloud teams is driving research into best practices for file sizes, batching strategies, and ingestion limits, helping the system align with platform constraints. Load simulations and benchmarking are used to validate assumptions and guide these tuning efforts. We are also exploring ways to support real-time ingestion for low-latency use cases such as Agent health monitoring.
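The specific file-size and batch-count limits agreed with Data Cloud are not given in the article. The sketch below only illustrates the general idea of closing a batch when either a byte budget or a record count is reached; `batch_into_files`, `max_bytes`, and `max_records` are hypothetical names, and the limits you pass in are placeholders rather than real platform values.

```python
from typing import Iterable, Iterator, List


def batch_into_files(
    records: Iterable[bytes],
    max_bytes: int,
    max_records: int,
) -> Iterator[List[bytes]]:
    """Hypothetical helper: group serialized records into file-sized batches.

    A batch is closed when adding the next record would exceed max_bytes
    or when the batch already holds max_records. A single record larger
    than max_bytes is emitted on its own rather than dropped.
    """
    batch: List[bytes] = []
    size = 0
    for rec in records:
        # Close the current batch before it would break either limit.
        if batch and (size + len(rec) > max_bytes or len(batch) >= max_records):
            yield batch
            batch, size = [], 0
        batch.append(rec)
        size += len(rec)
    # Flush whatever is left as the final (possibly smaller) file.
    if batch:
        yield batch
```

Bounding both bytes and record count keeps files from being too large for a single ingestion job while also avoiding a flood of tiny files during quiet periods; the right balance would come from the kind of benchmarking described above.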

Instead of adding new features, the priority is to refine and strengthen the architecture to support increasing AI agent workloads while maintaining stability and performance.

How does the Feedback and Audit Trail system balance rapid delivery of AI audit capabilities with maintaining trust, security, and compliance for enterprise customers?

Meeting tight timelines for delivering Feedback and Audit Trail required a careful balance between speed on one hand and trust and security on the other. Because it was one of the first systems built to support the LLM wave at Salesforce, the team had to meet contractual obligations that mandated a 30-day audit data retention window. To achieve this, the team prioritized essential features such as prompt capture, response logging, and user feedback (thumbs up/thumbs down) while postponing less critical features to future phases.
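To make the MVP scope concrete, here is a minimal sketch of an audit record holding a prompt, a response, and optional thumbs-up/thumbs-down feedback, plus a purge step for the 30-day window. Every name is hypothetical, and the sketch assumes the window means records older than 30 days may be removed; a production system would enforce retention through the platform's own policies rather than an in-memory filter.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import List, Optional

# Assumed interpretation of the 30-day retention window described above.
RETENTION = timedelta(days=30)


class Feedback(Enum):
    THUMBS_UP = "up"
    THUMBS_DOWN = "down"


@dataclass(frozen=True)
class AuditRecord:
    """Hypothetical MVP audit record: prompt, response, optional user feedback."""
    request_id: str
    prompt: str
    response: str
    created_at: datetime
    feedback: Optional[Feedback] = None


def purge_expired(records: List[AuditRecord], now: datetime) -> List[AuditRecord]:
    """Keep only records whose creation time falls inside the retention window."""
    cutoff = now - RETENTION
    return [r for r in records if r.created_at >= cutoff]
```

Keeping the record to these few fields mirrors the "minimal, compliant MVP" approach: richer metadata can be added in later phases without changing how retention is applied.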

Security and compliance were core design principles from the start. The system used existing Salesforce and Data Cloud standards to ensure that retention policies were met and data integrity was maintained. Rather than over-engineering for every possible scenario, the team focused on delivering a minimal, compliant minimum viable product (MVP) that could be refined and expanded over time. This pragmatic approach allowed the team to roll out a critical feature quickly without compromising trust, while also providing a foundation for future architectural improvements as AI workloads increased.

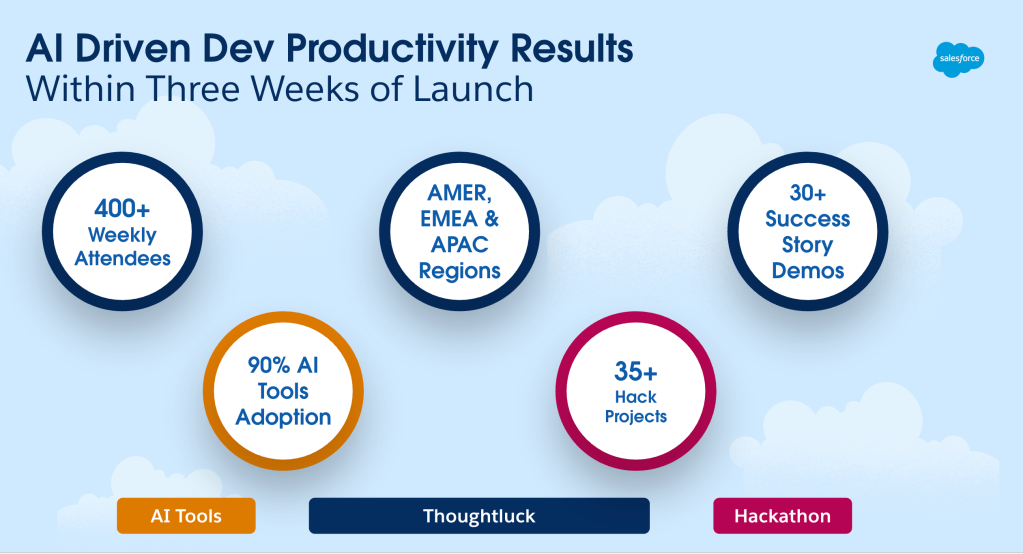

How are AI tools being used to improve developer productivity for building and maintaining AI auditing systems in Salesforce?

AI tools are primarily being used to streamline repetitive development tasks. Key areas where AI has added value include the generation of unit tests and the automation of API validation, reducing manual effort and allowing engineers to focus on higher-value work. Adoption has been mixed, with some engineers quickly embracing AI tools and others needing more encouragement to experiment.

AI tools are seen as accelerators, not replacements for engineering expertise. The emphasis is on using AI for routine tasks while maintaining high standards for design, architecture, and system integrity. Early explorations have also considered how AI might assist customers with creating prompts for generative AI features or tuning models, but these areas are still in the research phase. The current priority is to enhance developer efficiency with AI without introducing new risks or compromising system quality.

Learn more

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.