The Salesforce Charts team is constantly pushing for better runtime performance because of our intense data processing and rendering needs. In an attempt to improve performance I tried various ways of using Web Workers to facilitate chart rendering. This article will walk through the results of my experiments and provide recommendations for using Web Workers with long script executions on large datasets.

The Performance Issue

Our chart execution can be roughly divided into two processes: scripting and rendering. The rendering part draws asynchronously, frame by frame, with requestAnimationFrame, while the scripting part is a synchronous process that cannot be interrupted. As a result, good performance is limited to relatively small datasets and sequential chart generation. If a large dataset or multiple charts (see the image below for an example) are needed, users will see a blank, frozen page for however long it takes all the charts to finish scripting: the main thread has no free frames for requestAnimationFrame, so rendering is delayed until then. This is an obvious performance issue. One solution is to move the scripting part into Web Workers and run all the instances concurrently, leaving the main thread to handle only rendering.

The easy way out?

At the time of writing, Web Workers are stable and well supported in modern browsers, so it should be trivial to move the logic into a worker. However, there are still some limitations, including no DOM access. This is a big issue for us since we rely heavily on the canvas 2D context's measureText() to measure text when positioning components. Therefore, blindly porting all the logic to workers is not an option for us. If this constraint does not apply to your case, it will be much easier to leverage workers, and you will likely benefit more as well.

Utils as services

Fortunately, after profiling the scripting part, we realized that the time-consuming parts do not require any DOM access (or the DOM-dependent values can be pre-calculated). I ported those parts into utility services that can be executed inside workers. Some services only require simple parameters while others require more complex data to be transferred.
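One way to pre-calculate the DOM-dependent part is to measure every label on the main thread and hand only the resulting numbers to the worker. The sketch below is an assumption about how that could look, not the actual Charts implementation; `premeasureLabels` and the injected `measure` callback are hypothetical names.

```javascript
// Measure each distinct label once on the main thread so that a worker,
// which has no DOM access, can lay out text from plain numbers.
// `measure` is injected; in a browser it could wrap a canvas 2D context:
//   const ctx = document.createElement('canvas').getContext('2d');
//   const measure = (text) => ctx.measureText(text).width;
function premeasureLabels(labels, measure) {
  // No prototype, so labels such as "toString" cannot collide with inherited keys.
  const widths = Object.create(null);
  for (const label of labels) {
    if (!(label in widths)) {
      widths[label] = measure(label);
    }
  }
  return widths; // a plain lookup table of numbers, cheap to send to a worker
}
```

Because the result is a flat table of numbers, it serializes cheaply and the worker never needs to touch a canvas.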

Worker Creation

Creating a worker does not come for free (script fetching and compiling). Setting up a worker pool that reuses the workers makes sense most of the time. In most cases you should limit the pool to be no more than the hardware concurrency of the system.
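A reusable pool can be sketched roughly as follows. This is a minimal illustration, not the pool used by the Charts team: `WorkerPool` and the `createWorker` factory are hypothetical names, and the sketch assumes each worker replies exactly once per message.

```javascript
// A minimal pool: each worker handles one task at a time, extra tasks queue up.
// `createWorker` is a hypothetical factory; in a browser it could be
//   () => new Worker('chart-utils.js')   // the script name is made up
// and `size` could be capped at navigator.hardwareConcurrency.
class WorkerPool {
  constructor(createWorker, size) {
    // Workers are created once, up front, and then reused for every task.
    this.idle = Array.from({ length: size }, () => createWorker());
    this.queue = [];
  }

  // Queue a message and resolve with the worker's reply.
  run(message) {
    return new Promise((resolve, reject) => {
      this.queue.push({ message, resolve, reject });
      this.dispatch();
    });
  }

  // Hand queued tasks to idle workers until either list runs out.
  dispatch() {
    while (this.idle.length > 0 && this.queue.length > 0) {
      const worker = this.idle.pop();
      const task = this.queue.shift();
      const release = () => {
        this.idle.push(worker);
        this.dispatch();
      };
      worker.onmessage = (event) => {
        task.resolve(event.data);
        release();
      };
      worker.onerror = (error) => {
        task.reject(error);
        release();
      };
      worker.postMessage(task.message);
    }
  }
}
```

Taking a factory instead of a script URL keeps the pool independent of how the workers are built, which also makes it easy to exercise with stand-in workers.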

Serialize/Deserialize parameters

Data sent to a worker is copied rather than shared, so all the parameters must be serialized; in my case, to strings. Check out some performance numbers for sending serialized data to Web Workers. Most of the data I need to send to Web Workers consists of JSON-like objects, such as:

    const node = {
        x: 1,
        y: 2,
        color: 'red'
    }

Larger datasets mean more objects that must be serialized. Turns out that the time spent calling JSON.stringify almost canceled out the benefits of using Web Workers. I tried a number of approaches to alleviate this.

Less “set” more “get”

In our original implementation, every object carries its own copy of properties that are common to all objects. This inflates the size of the data significantly and leads to a huge payload to serialize/deserialize. Before I reduced the data size, serialization/deserialization of 4000 objects took around 20 to 30 ms and canceled out the benefit of porting a ~100 ms process to workers. Therefore, I centralized the shared properties and extended the getter to fall back to them.

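    // A method rather than a `get` accessor, since accessors cannot take parameters.
    prop(propName: string) {
        // Use `in` so that falsy values such as 0 or '' are still returned.
        if (propName in this._props) {
            return this._props[propName];
        } else {
            // Fall back to the centralized common properties
            // (`_commonProps` is a placeholder name).
            return this._commonProps[propName];
        }
    }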

Even though I am paying the tax for every property get, it is still faster than serializing the original list of objects. Moreover, the more services we move to workers, the more conversions are needed, and more time can be saved in this fashion.
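To make the idea concrete, here is a small self-contained sketch of splitting a list into one shared object plus per-node leftovers. It is an illustration under assumptions: `ChartNode`, `extractShared`, and the field names are hypothetical, and a property counts as shared only if every node holds the same value for it.

```javascript
const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Hypothetical node wrapper: per-node values live in `props`, values common
// to every node live in a single `shared` object.
class ChartNode {
  constructor(props, shared) {
    this._props = props;
    this._shared = shared;
  }

  // Look at the node's own values first, then fall back to the shared ones.
  prop(name) {
    return has(this._props, name) ? this._props[name] : this._shared[name];
  }
}

// Split plain objects into the properties they all agree on and the rest.
function extractShared(nodes) {
  const shared = {};
  if (nodes.length === 0) return { shared, rest: [] };
  for (const key of Object.keys(nodes[0])) {
    const value = nodes[0][key];
    if (nodes.every((node) => has(node, key) && node[key] === value)) {
      shared[key] = value;
    }
  }
  const rest = nodes.map((node) => {
    const own = {};
    for (const key of Object.keys(node)) {
      if (!has(shared, key)) own[key] = node[key];
    }
    return own;
  });
  return { shared, rest };
}
```

The shared object is serialized once per message instead of once per node, so the saving grows with the number of nodes; for a handful of nodes the extra wrapper can actually make the payload larger.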

AoS vs SoA

Our original implementation put the objects in an array:

    const list = [
        {x: 1, y: 2, color: 'red', text: 'foo'},
        {x: 3, y: 6, color: 'red', text: 'bar'},
        ...
    ]

This is usually referred to as an Array of Structures (AoS). An alternative representation is a Structure of Arrays (SoA):

    const structure = {
        x: [1, 3],
        y: [2, 6],
        color: ['red', 'red'],
        text: ['foo', 'bar']
    }
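Converting between the two layouts is mechanical. The following is a minimal sketch, assuming every object in the list has the same keys as the first one; `toSoA` and `itemAt` are illustrative names rather than functions from our codebase.

```javascript
// Convert an Array of Structures into a Structure of Arrays.
// Assumes every object in `list` has the same keys as the first one.
function toSoA(list) {
  const structure = {};
  if (list.length === 0) return structure;
  for (const key of Object.keys(list[0])) {
    structure[key] = list.map((item) => item[key]);
  }
  return structure;
}

// Rebuild one logical item from the SoA form.
function itemAt(structure, index) {
  const item = {};
  for (const key of Object.keys(structure)) {
    item[key] = structure[key][index];
  }
  return item;
}
```

Code that used to read `list[i].x` reads `structure.x[i]` instead, and `itemAt` is available where a whole object is still needed.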

AoS is the more natural representation, but SoA has performance benefits:

- Properties can be shared / compressed. In the example above, the color property can be shared and saved as color: 'red' instead of color: ['red', 'red'].

- Numeric properties saved as arrays can be stored in typed arrays, whose buffers are Transferable, instead of going through stringify. This gives more compact and efficient serialization / deserialization. For example:

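    // Send the small shared properties as a JSON string; x is only a placeholder here.
    worker.postMessage(JSON.stringify({
        x: '%X_ARRAY%',
        color: 'red'
    }));

    // Copy over the x array into a typed array, then transfer its buffer
    // instead of stringifying it.
    const x_array = new Int32Array([…]);
    worker.postMessage({'%X_ARRAY%': x_array}, [x_array.buffer]);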

- There is a feature being proposed for JavaScript (which is not well supported yet) called SIMD. It can optimize the application of the same transformation / instruction to multiple data. For example, to find the right edges of the rectangles in a chart, we could do something like the line below, and it would apply to all the rectangles in the buffer. If the data is in SoA form, we can expect to utilize SIMD to maximize performance.

    structure.right = structure.x.add(structure.w)
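The last two points can be sketched with plain typed arrays. This is an illustration under assumptions, not our production code: `packForTransfer` and `rightEdges` are hypothetical helpers, the numeric columns are assumed to fit in 32-bit integers, and the loop is the ordinary scalar equivalent of the SIMD-style `add` above.

```javascript
// Copy the numeric columns of an SoA into typed arrays and collect their
// buffers so they can be passed as the transfer list of postMessage.
// `numericKeys` names the columns assumed to hold 32-bit integers.
function packForTransfer(structure, numericKeys) {
  const message = { ...structure };
  const transfer = [];
  for (const key of numericKeys) {
    const column = Int32Array.from(structure[key]);
    message[key] = column;
    transfer.push(column.buffer);
  }
  return { message, transfer };
}

// Scalar version of `structure.x.add(structure.w)`: one pass over two columns.
function rightEdges(x, w) {
  const right = new Int32Array(x.length);
  for (let i = 0; i < x.length; i++) {
    right[i] = x[i] + w[i];
  }
  return right;
}

// In a browser, with `worker` being some Worker instance:
//   const { message, transfer } = packForTransfer(structure, ['x', 'w']);
//   worker.postMessage(message, transfer); // the buffers are moved, not copied
```

Once a buffer has been transferred it is no longer usable on the sending side, so anything the main thread still needs has to be kept in a separate copy.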

Stateless vs Stateful Worker

Originally I tried to use stateless workers but this resulted in a lot of repeated serialization and deserialization. By making the workers stateful I could now process a series of tasks with less overhead.
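A stateful worker can be sketched as a message handler that keeps each dataset after it has been sent once, so later tasks only carry a small reference. The message shapes (`type`, `id`, `column`) and the `min` / `max` tasks below are hypothetical, chosen only to illustrate the pattern.

```javascript
// Returns a message handler that remembers datasets between calls.
function createStatefulHandler() {
  const datasets = new Map(); // state that survives across messages

  return function handle(message) {
    if (message.type === 'load') {
      datasets.set(message.id, message.data); // pay the transfer cost once
      return { id: message.id, loaded: true };
    }
    const data = datasets.get(message.id);
    if (data === undefined) {
      return { id: message.id, error: 'dataset not loaded' };
    }
    switch (message.type) {
      case 'min':
        return { id: message.id, value: data[message.column].reduce((a, b) => Math.min(a, b), Infinity) };
      case 'max':
        return { id: message.id, value: data[message.column].reduce((a, b) => Math.max(a, b), -Infinity) };
      case 'release':
        datasets.delete(message.id); // free the memory when the chart is done
        return { id: message.id, released: true };
      default:
        return { id: message.id, error: `unknown task: ${message.type}` };
    }
  };
}

// Inside a worker script this could be wired up as:
//   const handle = createStatefulHandler();
//   self.onmessage = (event) => self.postMessage(handle(event.data));
```

The trade-off is that a task must now run on the worker that holds its data, so the scheduler has to route follow-up tasks to the same worker.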

Result

After applying the optimizations I ran a test on my 16-core machine. The test setup was 8 charts with 8 workers.

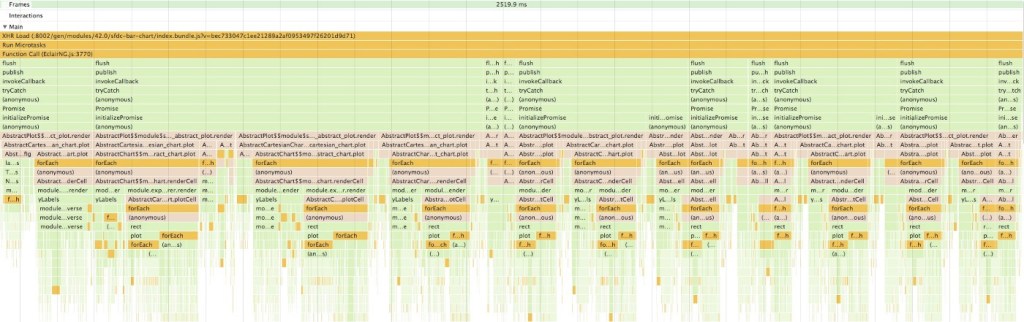

In my first attempt I only ported three util services (out of four parts). The first util service ran concurrently on eight workers (see the profiling diagram below). However, there was still other time-consuming logic on the main thread, which interfered with the scheduling of the second worker service:

Notice that the second util (the second yellowish section of each worker thread) was delayed, and that the second util services did not all start at the same time (as you can see from their staggering). The third service depends on heavy serialization (the bluish sections on the main thread), so its calls were also delayed and staggered due to scheduling contention.

Unfortunately, the result did not achieve the 8x speedup we hoped for. In the end we only achieved about a 20% speedup. I see the following possible reasons:

- Scheduling: Even a small amount of work that needs to be done on the main thread will delay the worker scheduling.

- Gluing work: Even after reducing the payload size, the time spent on stringify and parsing still cannot be overlooked. Since this work is done on the main thread, it blocks scheduling too (like the scheduling issue above).

- Worker setup time: Workers have overhead for fetching and compiling the script. However, this can be alleviated by caching and inline workers.

- Runtime optimization: Some JS runtimes optimize a code path if you run it multiple times in the same context. However, if we run each of them in a different context (thread), we have to pay the warm-up time for each context. You can see this in action by looking at the pink sections in the profiling diagram below, which are smaller on subsequent executions. The last one takes around 20% of the time of the first execution.
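The inline workers mentioned under worker setup time avoid a separate script fetch by building the worker from a Blob URL. The sketch below shows one way to do that; `inlineWorkerSource` and `createInlineWorker` are hypothetical helpers, while `Blob`, `URL.createObjectURL`, and `Worker` are the standard browser APIs.

```javascript
// Build the source text of a worker that applies `task` to every message.
// `task` must be an arrow function or function expression that does not use
// any outer variables, because only its source text reaches the worker.
function inlineWorkerSource(task) {
  return `const task = ${task.toString()};
self.onmessage = (event) => self.postMessage(task(event.data));`;
}

// Browser only: create a worker from the generated source without a network
// request for a separate script file.
function createInlineWorker(task) {
  const blob = new Blob([inlineWorkerSource(task)], { type: 'application/javascript' });
  return new Worker(URL.createObjectURL(blob));
}
```

This removes the fetch but not the compile step, so it complements a reusable worker pool rather than replacing it.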

Progressive Rendering

Even though the workers did not help much in the previous setup, they can still help in other cases. For example, we previously could not show multiple charts one after another as each became ready, because the main thread was busy. After adopting the workers, the main thread was freed up, so I can easily render the charts with some overlap between the scripting time of one chart and the rendering time of the previous chart.

This helps the perceived performance: the user sees the first chart as soon as it has been created instead of having to wait until all of the charts have been created and the main thread is free.

Conclusion

Even though Web Workers did not improve the chart rendering time as much as we hoped, they do open a different direction: perceived performance. Moving a synchronous system to workers is not trivial because of the limited APIs available to workers. I hope my experiment helps you evaluate whether or not you should split your execution with Web Workers. Please share your feedback; I am more than happy to discuss this topic further.
