In our insightful “Engineering Energizers” Q&A series, we delve into the inspiring journeys of engineering leaders who have achieved remarkable success in their specific domains. Today, we meet Indira Iyer, Senior Vice President of Salesforce Engineering, leading Salesforce Einstein development. Her team’s mission is to build Salesforce’s next-gen AI Platform, which empowers both internal teams and external customers to seamlessly build and utilize AI capabilities for predictive, generative, autonomous, and assistive workloads.

Read on to discover the strategies and techniques employed by Indira’s team as they tackled the challenging migration from legacy AI stacks to Salesforce’s new AI Platform. Additionally, learn how they managed the intricate technical considerations involved in building powerful generative AI capabilities on the platform…

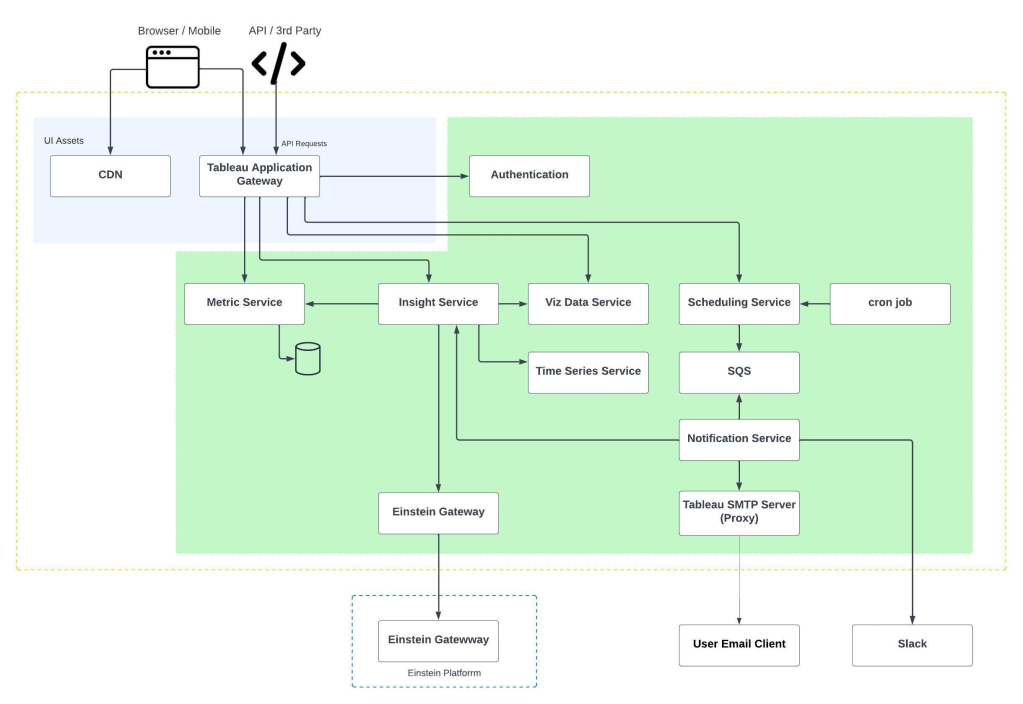

A look at the crucial role the new AI Platform plays within the larger Einstein 1 Platform ecosystem.

What was the primary motivation for migrating five different legacy AI stacks to Salesforce’s new AI Platform, and how does this migration benefit Einstein’s 20,000 customers?

Previously, we struggled to provide a consistent story to customers about the features and availability of our Einstein offerings across different geolocations and their compliance postures. This was because these features were built on separate, siloed stacks. Despite being branded as Einstein, they were actually a collection of different acquisitions and organically built stacks.

By migrating to the new AI Platform, we aimed to provide a unified and consistent experience for customers, with clear compliance, trust, and availability information for our AI capabilities. We also wanted to enable the reuse of feature sets across multiple clouds without duplicating effort.

For example, the call summarization feature, which is useful in sales, marketing, and service realms, was originally built by the Sales Einstein team on a stack that couldn’t be used by others. Similarly, capabilities for text summarization were specific to Service Einstein. By consolidating these capabilities under a next-generation platform, we could address integration challenges and leverage features across different applications.

This migration decision involved choosing between independently moving the five legacy stacks with limited capabilities or building a new, feature-rich, comprehensive next-generation platform that addressed the needs of the entire lifecycle of AI development. We opted for the latter approach.


How did your team overcome the challenges of migrating Einstein customers from different legacy stacks to the new AI Platform?

The specific challenges were twofold. First, the legacy stacks were each designed to cater to specific niche needs. This meant that migrating applications built on these legacy stacks and re-platforming them onto the new AI Platform required careful consideration and planning across all the key stakeholders.

Second, the challenge was to transparently migrate customers without any disruptions or hiccups. This involved replaying every step of customer provisioning to ensure a seamless transition. It was crucial to move their workloads automatically and transparently, without the customers being aware of the platform change. This was not a small task, as it involved migrating numerous demo orgs, trial orgs, and production orgs across different regions.

To overcome these challenges, our platform team took on the responsibility of building a migration framework. This framework allowed application and cloud teams to plug into it and specify the unique nuances of their workloads. By doing so, we were able to transfer the applications and workloads smoothly from the old legacy stacks to the new AI platform without disruption.
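The plug-in idea described above can be sketched in a few lines. This is only a minimal illustration, not the actual Salesforce framework: the class `MigrationFramework`, the `register` and `migrate` methods, and the step functions are all hypothetical names. It assumes the platform team owns a set of common steps and each application team registers extra steps for the nuances of its own workload.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


# All names here are hypothetical; this is not Salesforce's real API.
@dataclass
class Workload:
    org_id: str
    app: str
    stack: str  # the legacy stack that currently hosts the workload


# A migration step takes a workload and returns a short log message.
MigrationStep = Callable[[Workload], str]


@dataclass
class MigrationFramework:
    # Steps every workload goes through (assumed to be platform-owned).
    common_steps: List[MigrationStep] = field(default_factory=list)
    # Extra steps keyed by application name (assumed to be team-owned).
    app_steps: Dict[str, List[MigrationStep]] = field(default_factory=dict)

    def register(self, app: str, step: MigrationStep) -> None:
        """Let an application team plug in a workload-specific step."""
        self.app_steps.setdefault(app, []).append(step)

    def migrate(self, workload: Workload) -> List[str]:
        """Run common steps first, then the app-specific ones, in order."""
        steps = self.common_steps + self.app_steps.get(workload.app, [])
        return [step(workload) for step in steps]


def provision_tenant(w: Workload) -> str:
    return f"provisioned tenant for {w.org_id}"


def copy_call_transcripts(w: Workload) -> str:
    return f"copied call transcripts for {w.org_id} from {w.stack}"


framework = MigrationFramework(common_steps=[provision_tenant])
framework.register("call-summarization", copy_call_transcripts)

log = framework.migrate(Workload("org-001", "call-summarization", "sales-einstein"))
for line in log:
    print(line)
```

Keeping the common steps separate from the registered ones means a new application team only has to describe what is different about its workload, which is the division of labor the answer describes.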


Can you share any lessons learned from the first wave of migrations and how they influenced subsequent waves?

One important lesson was the need for a thorough inventory of applications and their usage. We had to identify the orgs we had and determine which ones required our focused efforts. We noticed that some demo orgs were created but never used, so we developed heuristics to assess their usage over the past six months before deciding whether to invest in migrating them. This was crucial because even provisioning results in compute usage, and it would be wasteful to allocate resources to unused orgs.
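A usage heuristic of this kind might look like the sketch below. The interview does not describe the real heuristics, so everything here is an assumption: the function names, the single "last used" timestamp per org, and the 182-day approximation of six months are all illustrative.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional

# Assumption: "six months" is approximated as 182 days.
USAGE_WINDOW = timedelta(days=182)


def recently_used(
    last_used: Optional[datetime],
    now: datetime,
    window: timedelta = USAGE_WINDOW,
) -> bool:
    """True if the org has recorded activity inside the usage window."""
    return last_used is not None and now - last_used <= window


def select_orgs_to_migrate(
    last_used_by_org: Dict[str, Optional[datetime]], now: datetime
) -> List[str]:
    """Return the org ids worth migrating, sorted for stable output."""
    return sorted(
        org for org, ts in last_used_by_org.items() if recently_used(ts, now)
    )


# Hypothetical inventory: org id -> last recorded usage (None = never used).
now = datetime(2024, 1, 1)
orgs = {
    "demo-active": datetime(2023, 11, 15),
    "demo-stale": datetime(2023, 3, 1),
    "demo-never-used": None,
}

selected = select_orgs_to_migrate(orgs, now)
print(selected)
```

Filtering before provisioning matters because, as noted above, provisioning itself consumes compute.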

Another lesson was the importance of carefully planning the order of migrations and addressing any failures encountered during the process. We applied this lesson across all migrations we performed. We explored ways to pre-set up orgs and have customers continue pointing to the legacy stack until the last possible moment. This involved tasks such as copying over models, provisioning orgs, and enabling customers to perform predictions using the legacy stack while we migrated data, models, and provisioned tenants. Once everything was set up, we seamlessly switched customers over to the new system without any disruptions.
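The "prepare everything first, switch last" pattern can be illustrated with a small state holder. This is a sketch under stated assumptions rather than the team's implementation: `OrgMigration`, the three step names, and the string-returning `predict` stub are hypothetical.

```python
from enum import Enum


class Stack(Enum):
    LEGACY = "legacy"
    NEW = "new"


class OrgMigration:
    """Tracks one org; it keeps serving from legacy until cut-over."""

    # Assumed preparation steps, taken from the tasks named in the text.
    REQUIRED = ("models_copied", "data_migrated", "tenant_provisioned")

    def __init__(self, org_id: str) -> None:
        self.org_id = org_id
        self.serving = Stack.LEGACY
        self.done = set()

    def complete(self, step: str) -> None:
        if step not in self.REQUIRED:
            raise ValueError(f"unknown step: {step}")
        self.done.add(step)

    def ready(self) -> bool:
        return self.done == set(self.REQUIRED)

    def cut_over(self) -> bool:
        """Switch to the new stack only when every step has finished."""
        if not self.ready():
            return False
        self.serving = Stack.NEW
        return True

    def predict(self, payload: str) -> str:
        # Stub: a real system would call the serving stack's endpoint.
        return f"{self.serving.value}:{payload}"


org = OrgMigration("org-001")
org.complete("models_copied")
early_switch = org.cut_over()          # refused, preparation incomplete
before = org.predict("lead-score")     # still answered by the legacy stack

org.complete("data_migrated")
org.complete("tenant_provisioned")
switched = org.cut_over()
after = org.predict("lead-score")
print(before, after)
```

Because predictions keep flowing through the legacy stack until `cut_over` succeeds, a failed preparation step leaves the customer exactly where they started.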


What were the main technical considerations in building the generative AI capabilities on top of the new AI Platform?

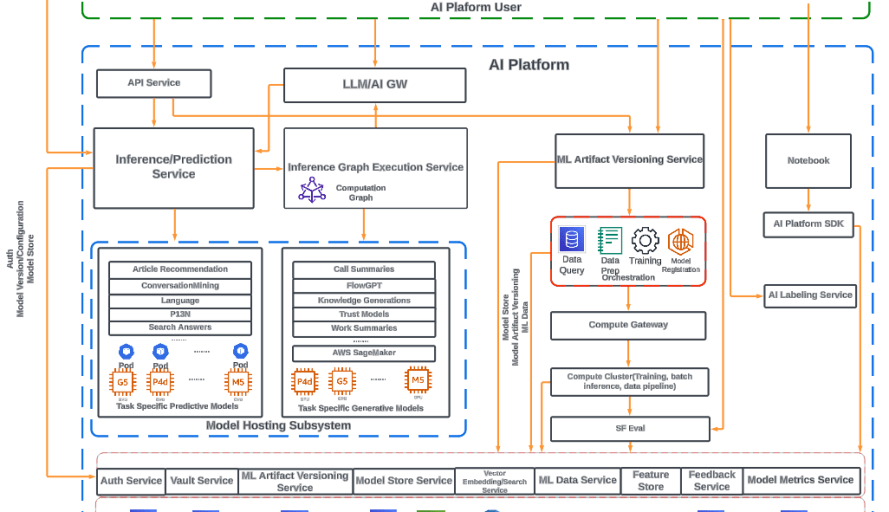

The primary technical consideration was to launch generative AI on the existing platform that hosts the majority of Salesforce’s AI capabilities. This approach allows us to leverage the innovations made in building the next-generation platform over the past few years. Additionally, we aimed to create reusable abstractions for common tasks, enabling the quick integration of generative AI capabilities into applications. While some net-new applications were built primarily on generative AI, we also saw existing predictive applications adding generative features, replacing the predictive model with a generative model, or both.

Incorporating the Large Language Model (LLM) Gateway into Einstein’s feature discovery and integrating it with the existing ecosystem of microservices was another important consideration.

The LLM Gateway supports generative AI request routing to multiple in-house and external LLM providers and is a key capability needed to support generative AI workloads.

This integration was facilitated by treating the LLM Gateway as just another microservice, similar to the other services already in place. Additionally, the team had existing integrations with cloud applications for predictive workloads, such as cloud-to-cloud connectivity and authentication, and the ability to provision applications and manage tenancy. These integrations, along with the incorporation of the Data Cloud, further simplified the overall process of building the generative AI capabilities on the new AI Platform.
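To make the routing role of a gateway concrete, here is a minimal sketch. It is not the LLM Gateway's real interface, which the interview does not describe: the `LLMGateway` class, its `register` and `generate` methods, and the two stub providers are hypothetical, and real providers would be network clients rather than local functions.

```python
from typing import Callable, Dict, Optional

# A provider is modeled as a function from prompt to completion text.
Provider = Callable[[str], str]


class LLMGateway:
    """Hypothetical single entry point that routes to a named provider."""

    def __init__(self, default: str) -> None:
        self._providers: Dict[str, Provider] = {}
        self._default = default

    def register(self, name: str, provider: Provider) -> None:
        self._providers[name] = provider

    def generate(self, prompt: str, provider: Optional[str] = None) -> str:
        name = provider or self._default
        if name not in self._providers:
            raise KeyError(f"no provider registered as {name!r}")
        return self._providers[name](prompt)


# Stub providers standing in for an in-house and an external model.
def in_house_model(prompt: str) -> str:
    return f"[in-house] {prompt}"


def external_model(prompt: str) -> str:
    return f"[external] {prompt}"


gateway = LLMGateway(default="in-house")
gateway.register("in-house", in_house_model)
gateway.register("external", external_model)

default_reply = gateway.generate("Summarize this call")
external_reply = gateway.generate("Summarize this call", provider="external")
print(default_reply)
print(external_reply)
```

Callers depend only on `generate`, so providers can be added or swapped behind the gateway without changing the applications that use it.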

A look inside the new AI Platform’s architecture.

Learn More

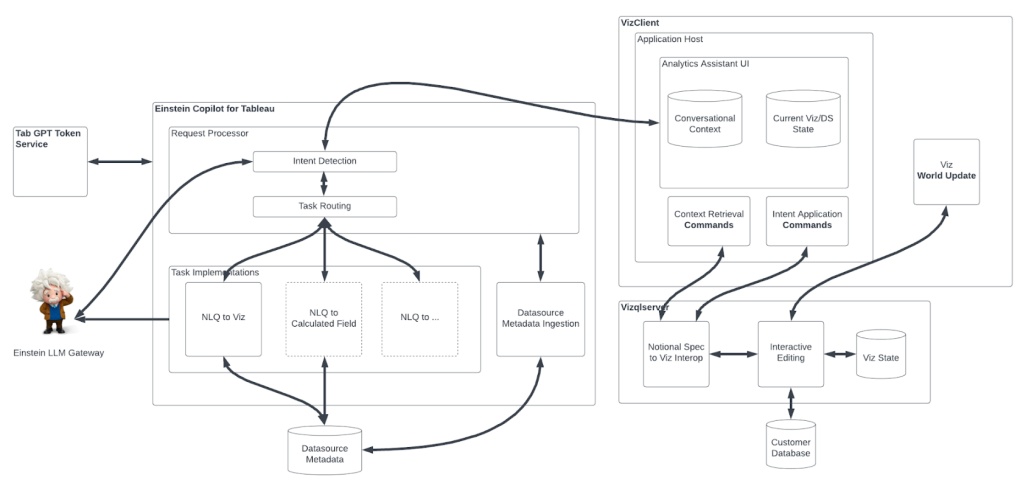

- Hungry for more Einstein stories? Check out how the new Einstein Copilot for Tableau is building the future of AI-driven analytics.

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.