In our “Engineering Energizers” Q&A series, we explore the journeys of engineering leaders who have made remarkable contributions in their fields. Today, we meet Venkat Krishnamani, a Lead Member of the Technical Staff for Salesforce Engineering and the lead engineer for Salesforce Einstein’s Machine Learning (ML) Console. This vital tool helps internal AI and ML engineers at Salesforce streamline AI model lifecycle management through an intuitive interface, boosting productivity and simplifying AI development.

Discover how Venkat’s team enhances developer efficiency, overcomes technical challenges, and incorporates user feedback to refine features.

How does ML Console enhance internal developer productivity and simplify AI model lifecycle management?

My team designed ML Console with a singular focus: to boost developer productivity in AI model management. ML Console does this by simplifying the AI model lifecycle across Salesforce Einstein. By tackling the complexities of operational processes, it allows developers to easily monitor, debug, and manage deployed models, tenants, applications, and pipelines, cutting down on time spent navigating intricate systems.

Key to ML Console’s success is its integration of an extensive suite of features within an intuitive interface. This not only streamlines workflow management but also provides developers with easy access to essential data, minimizing errors and enhancing efficiency. With tools like straightforward log access and actionable commands tailored for the dynamic requirements of modern AI projects, the ML Console is a critical asset for developers aiming to navigate the complexities of AI applications.

What were the main challenges in developing the UI for data observability in ML Console, and how were they resolved?

Obtaining, denormalizing, and surfacing live data proved to be a significant hurdle because it involved multiple services and multiple artifacts: models, applications, pipelines, and flows.

Another challenge we faced was determining how to bundle the data together and establish connections between different artifacts across various services. The sheer volume of data that needed to be surfaced for observability purposes also presented a major challenge. With potentially millions of records being generated daily, managing and interacting with such a large amount of data required careful planning.

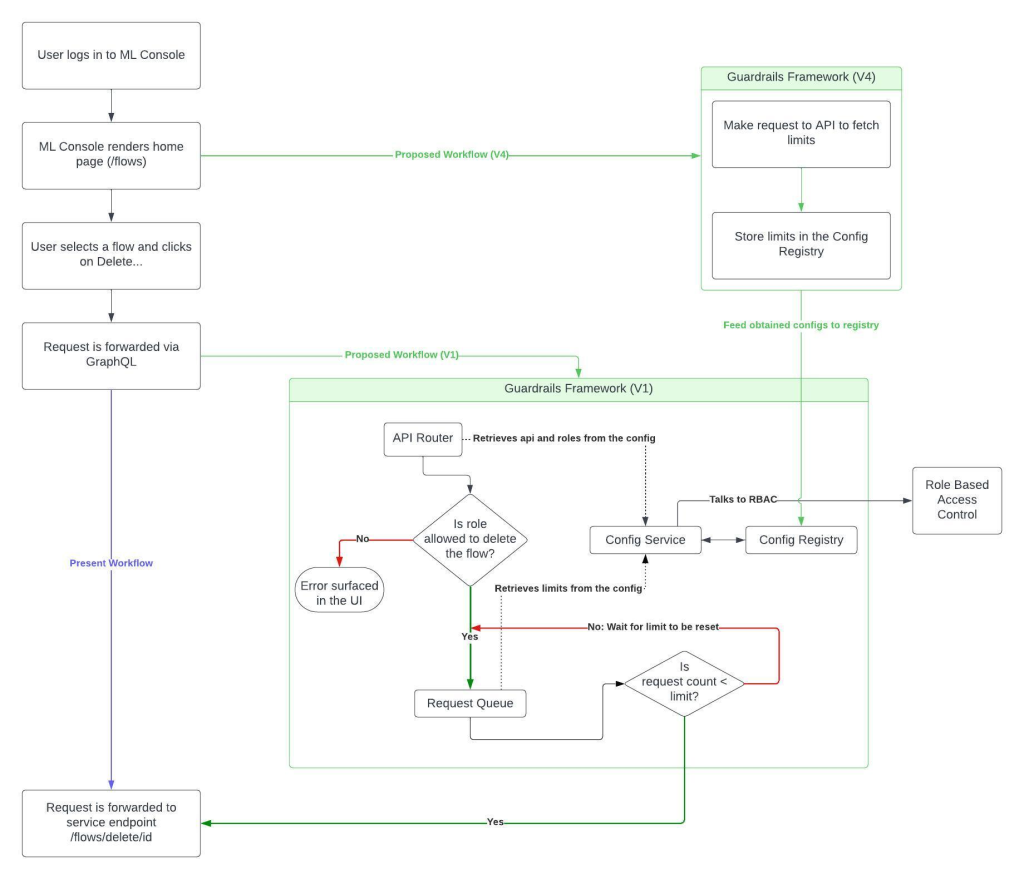

To address these challenges, we implemented a guardrails framework. This framework consists of several key components, including an API router, configuration history, and a request queue. By defining the APIs and connecting them together, we were able to establish connections across services. Configurations determine how these APIs run and how requests are prioritized, so users who need immediate access to data can get it through on-demand data refresh.

A detailed look at the guardrails framework.
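
To make the prioritization idea concrete, here is a minimal sketch of a request queue that serves on-demand refreshes ahead of scheduled ones. It is an illustration only, not ML Console's actual implementation: the names `RequestQueue`, `RefreshRequest`, `ON_DEMAND`, and `SCHEDULED` are hypothetical, and the real framework also involves an API router and configuration history that are not modeled here.

```python
import heapq
import itertools
from dataclasses import dataclass, field

# Hypothetical priority levels: a lower number is served first.
ON_DEMAND = 0
SCHEDULED = 1


@dataclass(order=True)
class RefreshRequest:
    priority: int
    sequence: int                        # tie-breaker: FIFO within a priority
    api_name: str = field(compare=False)


class RequestQueue:
    """Serve on-demand refresh requests before scheduled ones."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()

    def submit(self, api_name, priority):
        request = RefreshRequest(priority, next(self._counter), api_name)
        heapq.heappush(self._heap, request)

    def next_request(self):
        # Return the API name of the most urgent request, or None if empty.
        return heapq.heappop(self._heap).api_name if self._heap else None


queue = RequestQueue()
queue.submit("pipelines", SCHEDULED)
queue.submit("models", SCHEDULED)
queue.submit("applications", ON_DEMAND)  # a user asked for fresh data now

order = [queue.next_request() for _ in range(3)]
print(order)  # ['applications', 'pipelines', 'models']
```

The sequence counter keeps requests of equal priority in arrival order, so scheduled work is delayed by on-demand requests but never reordered among itself.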

How does your ML Console team address the resource-intensive nature of searching and filtering across millions of records, given the continuous data growth?

One approach we employ is data splitting. By categorizing and segmenting the data based on user preferences, we ensure that only relevant data is indexed for easy searching. Additionally, we are planning to create a separate list of the most frequently searched entities and records. This will enable instant access for users when performing searches.

In terms of data reliability, we focus on both retention and deletion. While important data is retained in the main index for daily interactions, older records that are no longer actively accessed are moved to a separate index. This ensures that the most crucial and frequently used data remains readily accessible while optimizing storage resources.
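
As a rough illustration of that retention policy, the sketch below partitions records into a main index and a separate index for older data based on when each record was last accessed. The 30-day threshold, the `last_accessed` field, and the function name `split_by_recency` are assumptions made for the example; the actual criteria ML Console uses are not described here.

```python
from datetime import datetime, timedelta


def split_by_recency(records, now, max_idle_days=30):
    """Partition records into (main, archive) by last access time.

    Records accessed within `max_idle_days` stay in the main index;
    everything older goes to the archive index.
    """
    cutoff = now - timedelta(days=max_idle_days)
    main, archive = [], []
    for record in records:
        target = main if record["last_accessed"] >= cutoff else archive
        target.append(record)
    return main, archive


now = datetime(2024, 6, 1)
records = [
    {"id": "model-a", "last_accessed": datetime(2024, 5, 30)},
    {"id": "pipeline-b", "last_accessed": datetime(2024, 1, 15)},
]
main_index, archive_index = split_by_recency(records, now)
print([r["id"] for r in main_index])     # ['model-a']
print([r["id"] for r in archive_index])  # ['pipeline-b']
```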

Enhancing search capabilities is equally important. We make search more feedback-driven by incorporating features like spinners, loading buttons, and estimated completion times. This gives users visibility into the progress and resource requirements of their searches.

How is real-time availability of data ensured despite the limitations and constraints of data storage?

Previously, every 24 hours, we had a scheduled job that collected and updated data from all services, making it available in ML Console. However, with the integration of Event Bus, we will enhance this process.

Instead of daily data pulls, we’ll leverage Event Bus’ streaming capabilities to identify and refresh only the modified data. This approach significantly reduces the number of data calls, minimizes API overload, and optimizes the system’s performance.

By refreshing only a small subset of the data, we can ensure real-time availability of information while mitigating the limitations and constraints of data storage. This not only improves efficiency but also reduces the strain on the system, resulting in a more streamlined and reliable experience for users.
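
The difference between a full daily pull and an event-driven refresh can be sketched in a few lines. This example does not use any real Event Bus API: change events are modeled as plain dictionaries with a hypothetical `record_id` key, and `fetch_record` stands in for whatever service call returns the latest copy of a record.

```python
def apply_change_events(cache, events, fetch_record):
    """Refresh only the records named in change events.

    Returns the number of fetches made, which is the number of
    distinct changed records rather than the size of the cache.
    """
    changed_ids = {event["record_id"] for event in events}  # dedupe repeats
    for record_id in changed_ids:
        cache[record_id] = fetch_record(record_id)
    return len(changed_ids)


# Stand-ins for a backing service and the console's local copy.
source = {"m1": "v2", "m2": "v1", "m3": "v1"}
cache = {"m1": "v1", "m2": "v1", "m3": "v1"}

# Two events for the same record should trigger a single fetch.
events = [{"record_id": "m1"}, {"record_id": "m1"}]
calls = apply_change_events(cache, events, source.__getitem__)
print(calls, cache["m1"])  # 1 v2
```

A full refresh of this three-record cache would make three calls; here only the one modified record is fetched, and the gap widens as the data set grows.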

Venkat shares why engineers should join Salesforce.

How does ML Console’s UI support AI model accuracy and empower developers to make informed decisions on model refinement?

Our UI enables efficient exploration of large language model (LLM) responses to determine the suitability of introducing a model into our ecosystem. It also facilitates exposing the model for inferencing via a prediction service and subsequently surfacing it in Einstein Studio. This is achieved through seamless integration with multiple key features:

- Performance assessment: The internal model hub is a dedicated page where users can view all deployed models, regardless of their organization. This centralized view allows developers to easily assess the performance of their models.

- Comparing model responses: With our UI, users can send a query to deployed LLMs and observe their responses on a single page. This streamlined approach simplifies the debugging process and facilitates targeted fine-tuning efforts.

- Model switching: Switching between LLMs is effortless through a dropdown menu in the model hub. This flexibility enables developers to interact with specific models and evaluate their performance.

- Toxicity analysis: Our UI provides information on the toxicity of model responses. This data helps developers frame their fine-tuning strategies and ensure that model outputs align with desired standards. For this, ML Console leverages Salesforce’s AI Trust Layer, an inferencing playground and model evaluation to deliver accurate data insights.
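
The side-by-side comparison described in the list above boils down to sending one prompt to several models and collecting the answers under each model's name. The sketch below shows that shape under heavy simplification: the two "models" are placeholder functions rather than real LLM endpoints, and `compare_responses` is a hypothetical helper, not part of ML Console.

```python
def compare_responses(prompt, models):
    """Send one prompt to every model and key the responses by model name."""
    return {name: generate(prompt) for name, generate in models.items()}


# Placeholder callables standing in for deployed LLM endpoints.
models = {
    "model-a": lambda prompt: prompt.upper(),
    "model-b": lambda prompt: prompt[::-1],
}

results = compare_responses("hello", models)
for name, response in results.items():
    print(f"{name}: {response}")
```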

A look at ML Console’s user-friendly UI.

How do you collect feedback from developers and internal teams to continuously enhance ML Console?

To continuously enhance the UI and address emerging challenges, we employ a variety of channels to gather feedback from developers and internal teams. These channels include:

- Slack: We have a dedicated channel where users can report issues, provide recommendations, and suggest improvements. This platform fosters open communication between developers and consumers of ML Console.

- AI platform internal demos: Every two weeks, we conduct demos to showcase new features and gather valuable feedback from participants. This allows us to understand how the UI is being received and identify areas for improvement.

- Workgroup meetings: We hold meetings with stakeholders and service teams to gather feedback and ensure alignment. These meetings address UI-related changes or upcoming developments, ensuring that our UI meets expectations and aligns with project goals.

- Roadmap discussions: We collect feedback through roadmap discussions, gathering input across different products and considering the needs and preferences of our users. This helps us produce unique content that can be utilized across the platform.

By utilizing these various channels, we can gather comprehensive feedback and make informed decisions to enhance the UI of our platform.

What’s the future of ML Console?

While ML Console started off providing functionality for internal developers, we see a lot of synergy and similar demands from external developers. We are actively collaborating with teams across Salesforce to combine our efforts and provide a unified experience for both external and internal developers.
