In our Engineering Energizers Q&A series, we profile engineers who have pioneered advancements in their fields. Today, we meet Rahul Singh, Vice President of Software Engineering, who leads the India-based Data Cloud team. His team focuses on delivering a robust, scalable, and efficient Data Cloud platform that consolidates customer data to enhance business insights and personalize customer interactions, meeting the diverse needs of Data Cloud customers.

Discover how Rahul’s team tackled major technical challenges — including optimizing platform scalability, reducing processing times, and managing high transaction rates — to deliver high-performance solutions…

What is your Data Cloud team’s mission?

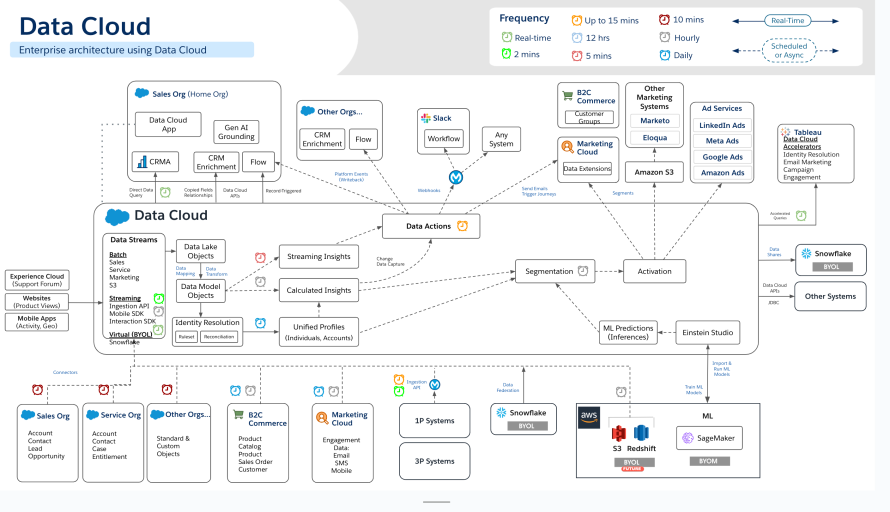

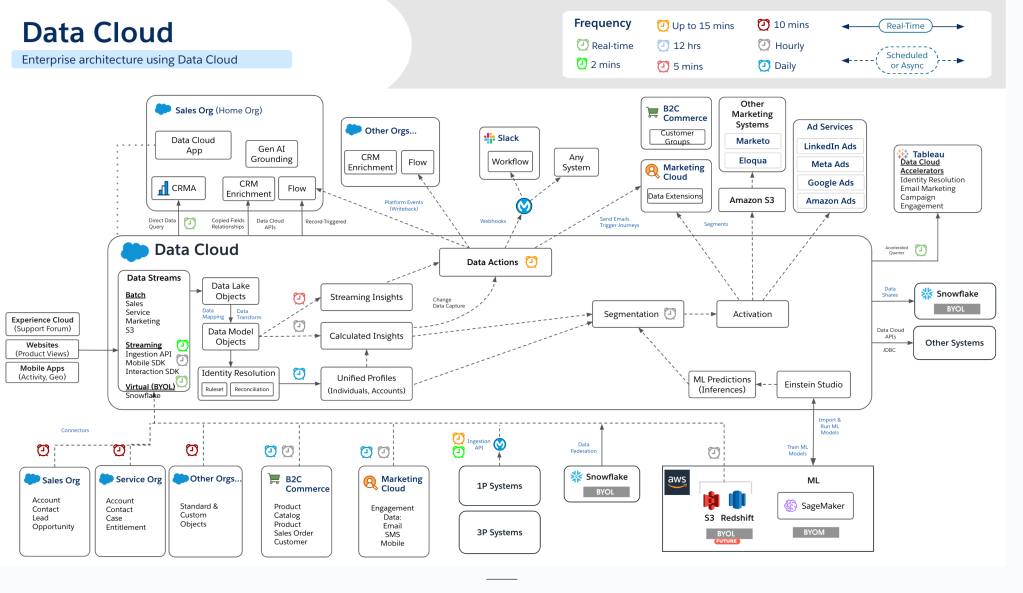

A significant part of our charter is to handle massive scales efficiently. We optimize our platform to support high transaction rates and large volumes of data with high reliability without compromising on performance. This involves implementing advanced technologies and innovative solutions that enhance our platform’s capabilities.

We are also committed to contributing to the open-source community. By sharing our advancements and collaborating with other experts, we drive further innovation and bring valuable insights back into our projects. This helps us stay ahead of the curve and ensures our solutions are cutting-edge.

What were some of the major technical challenges your team faced with Data Cloud?

One major challenge our team faced was enhancing our platform’s efficiency to manage large-scale operations. A key example involved one of our largest non-banking financial customers in India. They required end-to-end data processing within a very stringent 30- to 40-minute timeframe, even though our usual SLA is one to two hours per module.

This client serves nearly 500 million customers, necessitating rapid data processing from ingestion through to segmentation and activation in Data Cloud.

Additionally, we had to manage and orchestrate workflows across various services in Data Cloud. This required us to implement special tweaks and changes to our existing infrastructure to handle higher demand more efficiently.

How did your team overcome those challenges?

To meet the stringent SLA requirements of that customer, we had to make several optimizations across different parts of the platform. Our team collaborated extensively to address not just the individual SLA components but the overall end-to-end SLA.

This involved deep dives into various services to identify and eliminate bottlenecks. This ensured we could meet the required performance standards while bringing the entire process down to the required time frame.

Additionally, we implemented special optimizations to our infrastructure to handle bigger workloads more efficiently. This collaborative and detailed approach allowed us to support their scale needs with modern architecture. Ultimately, these efforts made it one of the largest and most complex sets of Data Cloud workloads we’ve had in India.

Lastly, by optimizing resource allocation and leveraging technologies such as autoscaling (horizontal and vertical), better price-performance compute (e.g., AWS Graviton), and spot/reserved instances, we designed highly cost-effective solutions without compromising on performance.

Diving deeper, what technical adjustments have you made to optimize the performance of Data Cloud?

We made several key adjustments:

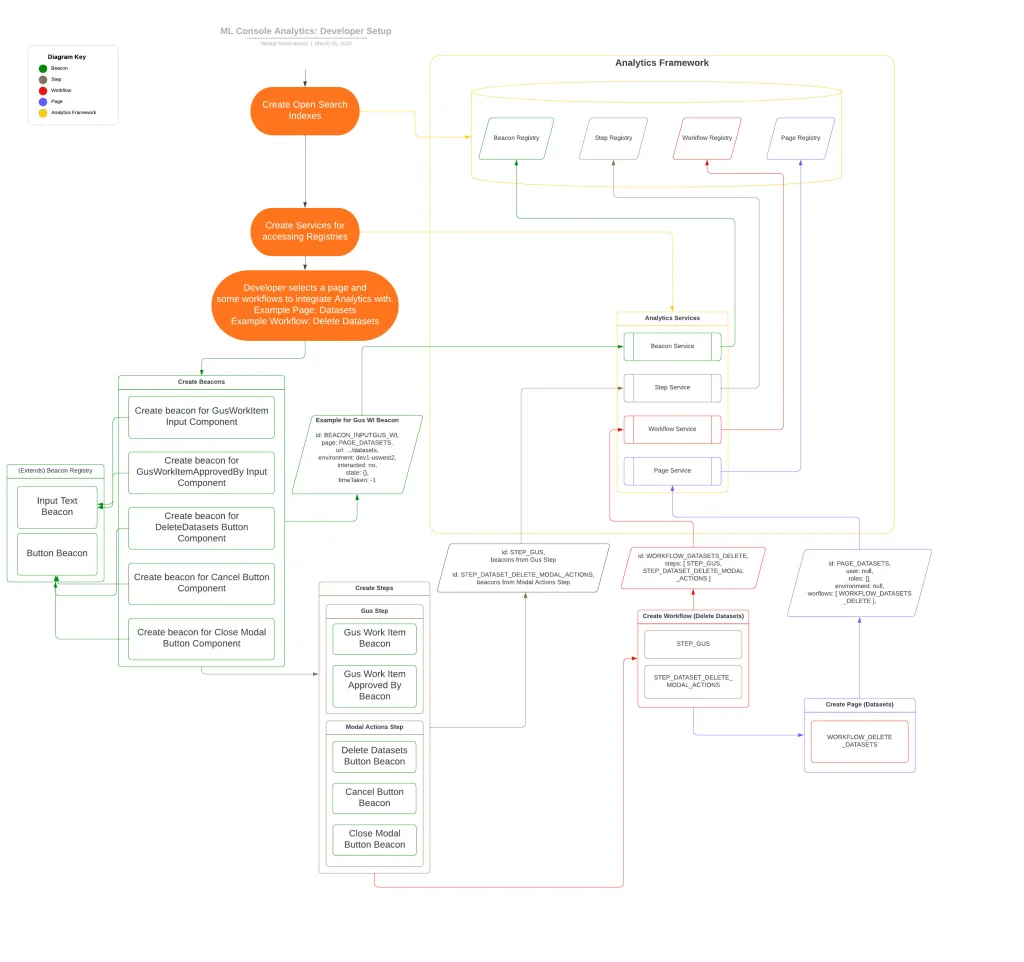

- Enhancing the orchestration of workflows. Every service inside Data Cloud must interact with our platform to run specific workloads. We implemented several critical changes to optimize this interaction.

- Streamlining tenant provisioning. By refining this process, we reduced the time to set up and configure new tenants, ensuring faster onboarding.

- Improving the admin services control plane. We enhanced how administrative tasks were managed, allowing our system to handle higher demand more effectively. These improvements ensured that administrative processes did not become bottlenecks, particularly under heavy load.

- Optimizing resource allocation and workload management. This included tuning how workloads were scheduled and executed, ensuring optimal performance even under peak demand.

By making these adjustments, we managed workloads more efficiently and met the stringent end-to-end SLAs required by the Indian customer. These optimizations were crucial in supporting large-scale operations and maintaining high performance standards across Data Cloud.

What role does customer feedback play in Data Cloud’s optimization process?

Working directly with customers provides us with real-world insights into their specific needs and challenges as they seek to scale their businesses. This close collaboration enables us to tailor our solutions to meet their requirements.

For example, a customer’s feedback on latency and processing times pushed us to re-evaluate and enhance our platform’s performance. By understanding their pain points and operational demands, we were able to make targeted improvements that significantly boosted efficiency.

Furthermore, regular feedback loops with our customers allowed us to stay aligned with their evolving needs. This continuous dialogue ensured that our optimizations were not just reactive but also proactive, anticipating future challenges and opportunities.

What innovations have you implemented in Data Cloud to manage workload distribution and improve data processing efficiency?

One significant innovation was the development of a sophisticated orchestration mechanism that dynamically manages workload distribution based on real-time signals. This mechanism ensured optimal resource utilization and minimized processing times, allowing us to handle large volumes of data more efficiently.
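To make the idea of signal-driven distribution concrete, here is a minimal sketch, not the actual Data Cloud orchestrator. It assumes each compute pool reports two hypothetical signals (queue depth and recent throughput) plus a health flag, and routes the next job to the healthy pool with the shortest estimated wait. The names `PoolSignal`, `estimated_wait_minutes`, and `pick_pool` are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class PoolSignal:
    """Hypothetical real-time snapshot of one compute pool."""
    name: str
    queued_jobs: int         # jobs currently waiting in this pool's queue
    jobs_per_minute: float   # recently observed throughput
    healthy: bool = True


def estimated_wait_minutes(pool: PoolSignal) -> float:
    """Rough wait estimate: queue depth divided by recent throughput."""
    if pool.jobs_per_minute <= 0:
        return float("inf")  # a stalled pool is never preferred
    return pool.queued_jobs / pool.jobs_per_minute


def pick_pool(pools: list[PoolSignal]) -> PoolSignal:
    """Choose the healthy pool with the shortest estimated wait."""
    candidates = [p for p in pools if p.healthy]
    if not candidates:
        raise RuntimeError("no healthy pool available")
    return min(candidates, key=estimated_wait_minutes)


if __name__ == "__main__":
    snapshot = [
        PoolSignal("pool-a", queued_jobs=30, jobs_per_minute=10.0),
        PoolSignal("pool-b", queued_jobs=8, jobs_per_minute=2.0),
        PoolSignal("pool-c", queued_jobs=0, jobs_per_minute=5.0, healthy=False),
    ]
    print(pick_pool(snapshot).name)  # pool-a (3.0 min wait vs 4.0 min)
```

A real orchestrator would likely weigh more signals (memory pressure, data locality, tenant priority), but the shape is the same: refresh the signals, score the candidates, dispatch.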

We have advanced our workload management by implementing techniques that leverage metadata and table statistics to analyze data volumes. This strategic approach has significantly enhanced the efficiency and speed of our data processing tasks, enabling more precise workload categorization. Consequently, our platform can now handle complex data sets with greater agility and accuracy.
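As a rough illustration of statistics-driven categorization, the sketch below sums hypothetical per-table sizes and assigns a job to a size class before it runs. The `TableStats` fields, the class names, and the byte thresholds are all assumptions made for this example; the interview does not describe the actual statistics or cutoffs used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TableStats:
    """Hypothetical per-table statistics, e.g. read from catalog metadata."""
    name: str
    row_count: int
    size_bytes: int


# Illustrative thresholds only; real cutoffs would be tuned per platform.
SMALL_MAX_BYTES = 1 * 1024**3      # up to 1 GiB
MEDIUM_MAX_BYTES = 100 * 1024**3   # up to 100 GiB


def categorize_workload(tables: list[TableStats]) -> str:
    """Classify a job by the total bytes it is expected to scan."""
    total_bytes = sum(t.size_bytes for t in tables)
    if total_bytes <= SMALL_MAX_BYTES:
        return "small"
    if total_bytes <= MEDIUM_MAX_BYTES:
        return "medium"
    return "large"


if __name__ == "__main__":
    # Made-up inputs for demonstration.
    job_inputs = [
        TableStats("profiles", row_count=500_000_000, size_bytes=250 * 1024**3),
        TableStats("segments", row_count=10_000, size_bytes=5 * 1024**2),
    ]
    print(categorize_workload(job_inputs))  # large
```

The benefit of classifying up front is that the scheduler can size compute from metadata alone, without first scanning the data.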

Our Data Cloud control plane design is continuously evolving to scale seamlessly and meet the increasing demands of our microservices. It supports a variety of functions, from processing large-scale data analytics with Spark and running complex queries with Trino and Hyper to managing unstructured multi-modal use cases with our newly introduced vector database. Our platform is equipped to handle any workload, from small Kubernetes jobs to large-scale deployments that utilize thousands of nodes of various types, ensuring elasticity.

To support this scalability, we utilize horizontal scaling of compute resources beyond the confines of a single AWS account and auto-scaling of EKS clusters. These capabilities are bolstered by efficient load balancing and request routing algorithms, catering to the growing needs of Data Cloud Everywhere.
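For readers unfamiliar with horizontal autoscaling, the sketch below shows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: scale the replica count by the ratio of the observed metric to its target, then round up. Whether Data Cloud uses this exact rule is not stated in the interview; the function name, the clamping bounds, and the example values are assumptions, and the real controller also applies a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 100,
) -> int:
    """Proportional scaling: ceil(current * observed / target), clamped to bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target -> scale out to 6.
    print(desired_replicas(4, 90.0, 60.0))   # 6
    # 10 replicas at 30% average CPU with a 60% target -> scale in to 5.
    print(desired_replicas(10, 30.0, 60.0))  # 5
```

The clamp matters in practice: the upper bound caps cost during a spike, and the lower bound keeps a warm baseline so that scale-out does not start from zero.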

We are dedicated to optimizing and developing new resource management and allocation algorithms, fine-tuning them to ensure that our compute resources are used most effectively, particularly in high-demand scenarios. This continuous enhancement fosters improved performance and adaptability.

How does your team ensure the scalability of Data Cloud to meet future demands?

Scalability is central to our design philosophy. We constantly test our systems under various stress scenarios to ensure they can handle future demands, identifying bottlenecks and fine-tuning for optimal performance.

In line with Salesforce’s vision, our goal is to deploy Data Cloud across all regions, preparing the platform to scale up to 100 times its current capacity. This means building solutions that are not only effective today but also capable of supporting significantly larger workloads in the future.

To achieve this, we are leveraging public cloud infrastructure through Hyperforce. This allows us to dynamically and elastically scale our resources, so that as customer demands increase, our platform grows alongside them and remains robust and responsive.

This flexibility enables efficient resource allocation and quick responses to changing demands. Additionally, continuous monitoring and analysis of system performance allow us to make necessary adjustments, maintaining scalability and robustness to meet future challenges effectively.

How does your team balance innovation with maintaining stability and reliability in Data Cloud?

Balancing innovation with stability is indeed a challenge. We achieve this through rigorous testing and a phased implementation approach. Before rolling out any new feature or optimization, we conduct extensive testing in controlled environments to ensure it doesn’t disrupt existing functionalities.

Our testing process involves multiple stages. Initially, new features are tested in isolated environments to identify potential issues. Once stable, they are gradually introduced into broader testing phases, simulating real-world conditions to verify performance and reliability.

Additionally, we actively collect continuous feedback from our customers during the pilot phase, where they serve as early adopters. This process allows us to gather valuable insights and pinpoint areas that require refinement. Establishing this feedback loop is crucial for ensuring the stability of new features before full-scale deployment.

Lastly, we focus on monitoring and analytics. Continuous monitoring allows us to quickly detect and address stability issues, maintaining high reliability while advancing innovation.
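The staged approach described in this answer can be pictured as a simple promotion gate. The sketch below is illustrative only: the stage names, the `next_stage` helper, and the 1% error budget are assumptions made for the example, not details from the interview. A feature advances one stage at a time, and only while its monitored error rate stays within budget.

```python
# Hypothetical stage names, ordered from most isolated to fully released.
STAGES = ["isolated", "broad_testing", "pilot", "full_rollout"]


def next_stage(current: str, error_rate: float, max_error_rate: float = 0.01) -> str:
    """Advance one stage if the observed error rate is within budget; otherwise hold."""
    idx = STAGES.index(current)  # raises ValueError for an unknown stage
    if error_rate > max_error_rate or idx == len(STAGES) - 1:
        return current
    return STAGES[idx + 1]


if __name__ == "__main__":
    print(next_stage("isolated", error_rate=0.002))  # broad_testing
    print(next_stage("pilot", error_rate=0.05))      # pilot (held back)
```

In a real pipeline the gate would likely combine several signals, including the customer feedback gathered during the pilot, rather than a single error rate.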

Learn More

- Hungry for more Data Cloud stories? Read this blog to learn about Data Cloud’s secret formula for processing one quadrillion records monthly.

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.