In our “Engineering Energizers” Q&A series, we delve into the journeys of engineering leaders who have made notable strides in their areas of expertise. This edition features Adithya Vishwanath, Vice President of Software Engineering at Salesforce. He leads the Data Cloud team, a pivotal platform that integrates diverse data sources, offering real-time insights and streamlined data management within Salesforce’s Customer 360.

Discover how Adithya and his team address challenges in managing unstructured data, from processing multiple data formats to resolving scalability concerns.

What is your team’s mission?

We transform the way Salesforce manages and processes data via Data Cloud. We are dedicated to crafting a robust platform that integrates flawlessly within the Salesforce ecosystem. Our key responsibilities encompass developing the infrastructure necessary for Data Cloud, ensuring seamless platform integration, and addressing the complexities of unstructured data.

Handling unstructured data means working with a variety of formats, including audio, video, and PDF files. Our task involves decomposing and processing these types to render them useful and accessible for Salesforce applications.

Our goal is to ensure that Data Cloud not only enhances the capabilities of Customer 360 but also establishes new benchmarks in data processing and integration across the Salesforce ecosystem. By concentrating on these critical areas, we are committed to delivering innovative solutions that propel the future of data management and utilization at Salesforce.

Adithya explains how his team uses cutting-edge tools to enhance their productivity.

What challenge did your team face with unstructured data and how was it addressed?

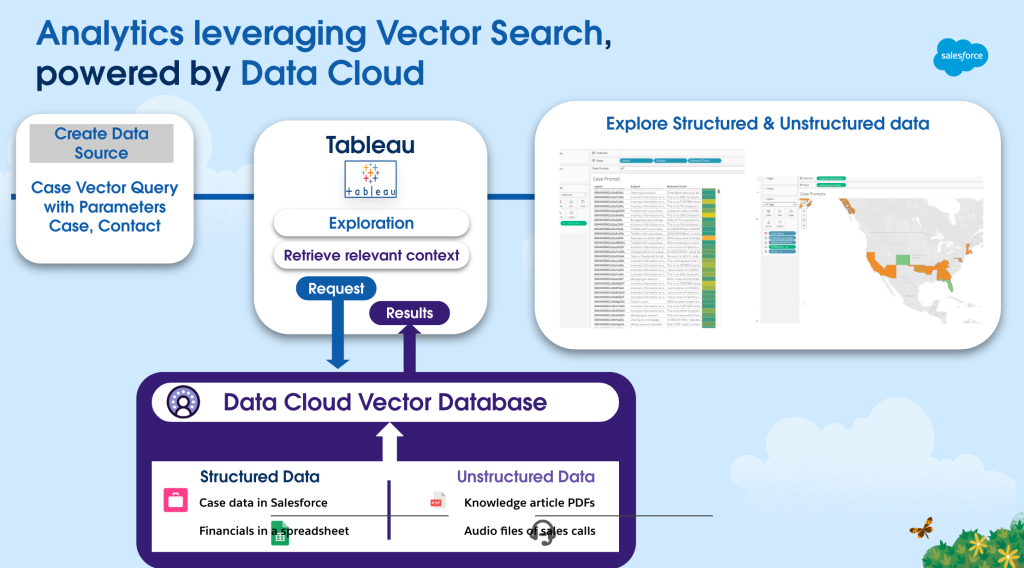

Unstructured data posed a distinct challenge due to its diversity and complexity. For example, when working with audio and video files, our team needed to develop a method for chunking and vectorizing the data. This involved breaking down the files into smaller, manageable segments and converting them into floating-point numbers to facilitate analysis.

A significant hurdle was ensuring accurate and efficient vector similarity searches across extensive datasets. To address this, we implemented advanced AI algorithms and optimized our infrastructure to manage the high volume of transactions effectively.

These improvements allowed us to quickly provide insights and extract pertinent information, ensuring that our users could access and utilize unstructured data efficiently. By upgrading our systems to support these intricate processes, we achieved substantial progress in managing unstructured data, thereby enhancing data analysis capabilities for our customers.

What was the biggest challenge your team faced during the initial development of Data Cloud?

One of the most formidable challenges we encountered was the integration of the monolithic Salesforce core ecosystem, which operates on a large-scale Java service, with our microservice-based Data Cloud ecosystem. The monolithic structure necessitated a rigorous quality cycle and comprehensive testing, whereas the microservices required a more agile development approach. Bridging these two systems to ensure a seamless customer experience presented a significant obstacle.

We had to ensure that despite the differing development life cycles, we could swiftly advance code on the microservices side without undermining the stability and performance of the monolithic core. This involved a meticulous balancing of quality controls, employing sophisticated sets of gates to manage releases while not impeding the agile processes inherent to the microservices.

Achieving this equilibrium was essential for upholding high standards in both development environments. The core challenge was to integrate these systems in a manner that supported both rapid innovation and consistent, reliable performance.

How did your team scale systems to manage unstructured data for Salesforce’s large customer base?

With Salesforce serving over 150,000 customers, each with potentially thousands of users interacting simultaneously, it was crucial to ensure that our infrastructure could support these transactions without any drop in performance.

To accomplish this, we scaled our operations by deploying models directly on Amazon EMR on EKS within Kubernetes clusters, leveraging their scalability and efficiency. This allowed us to run mini-models on every node and manage transactions independently, enabling us to process approximately 250 trillion transactions across Data Cloud tenants per week while maintaining high performance and reliability.

Furthermore, we concentrated on optimizing our data processing pipelines to handle unstructured data efficiently at scale. This involved refining our infrastructure and adopting robust data management practices. These efforts ensured that we could meet the demands of our large user base while continuing to provide seamless and efficient data processing.

What strategies did your team use to balance quality and speed in development?

Initially, our team faced challenges with this, particularly due to the different demands of our monolithic and microservice ecosystems. To address this, we introduced a series of quality gates designed to ensure that any component deemed ready could be released without compromising the integrity of the overall system. However, we soon discovered that excessive controls were hindering our agile processes.

To refine our strategy, we began focusing on identifying and prioritizing “holy grails” for each release — these were critical features that were essential and non-negotiable. This strategy allowed us to concentrate resources on these key tasks, ensuring high-quality results without compromising development speed.

Additionally, by scheduling QA teams in advance and optimizing our processes, we successfully achieved a balance that allowed for rapid yet dependable development. This approach helped us uphold high standards across both our monolithic and microservice systems, enabling us to deliver robust and reliable products while continuing to meet our swift development timelines.

Please share an example of a significant trade-off your team made during development.

During the development of sandbox support for Data Cloud, we faced a significant trade-off. Sandbox environments are essential for developers, allowing them to test and customize features in Data Cloud before full deployment. However, the implementation of this support required substantial resources, necessitating the reallocation of efforts from other projects.

For example, our development on a particular ecosystem had to be significantly reduced. Despite the system handling millions of transactions, we maintained only minimal support to focus on the sandbox initiative.

This decision was crucial as it allowed us to deliver an important feature without compromising the overall functionality and stability of Data Cloud. By strategically reallocating resources and prioritizing urgent needs, we managed to maintain our commitments and effectively deliver key capabilities to our customers.

Adithya previews an emerging engineering technology that his team is researching now.

What are your team’s future goals?

Looking ahead, our team is concentrating on four key areas: 1) enhancing unstructured data pipelines; 2) strengthening governance; 3) expanding remote Data Cloud features; and 4) improving developer experiences. Our ongoing efforts to improve unstructured data handling are crucial as we aim to advance our AI capabilities and develop a more comprehensive knowledge graph.

In terms of governance, we are committed to establishing robust data governance frameworks. This includes implementing role-based access, attribute access, and field-level masking to ensure data security and compliance across various applications.

Additionally, we are focusing on the development of remote Data Cloud features to better support our large-scale customers. This will enable them to manage multiple Salesforce instances more effectively. These initiatives are designed to foster innovation and maintain Data Cloud’s leading position in data processing and integration technology. By prioritizing these areas, we aim to provide advanced solutions that address the changing needs of our customers and the market at large.

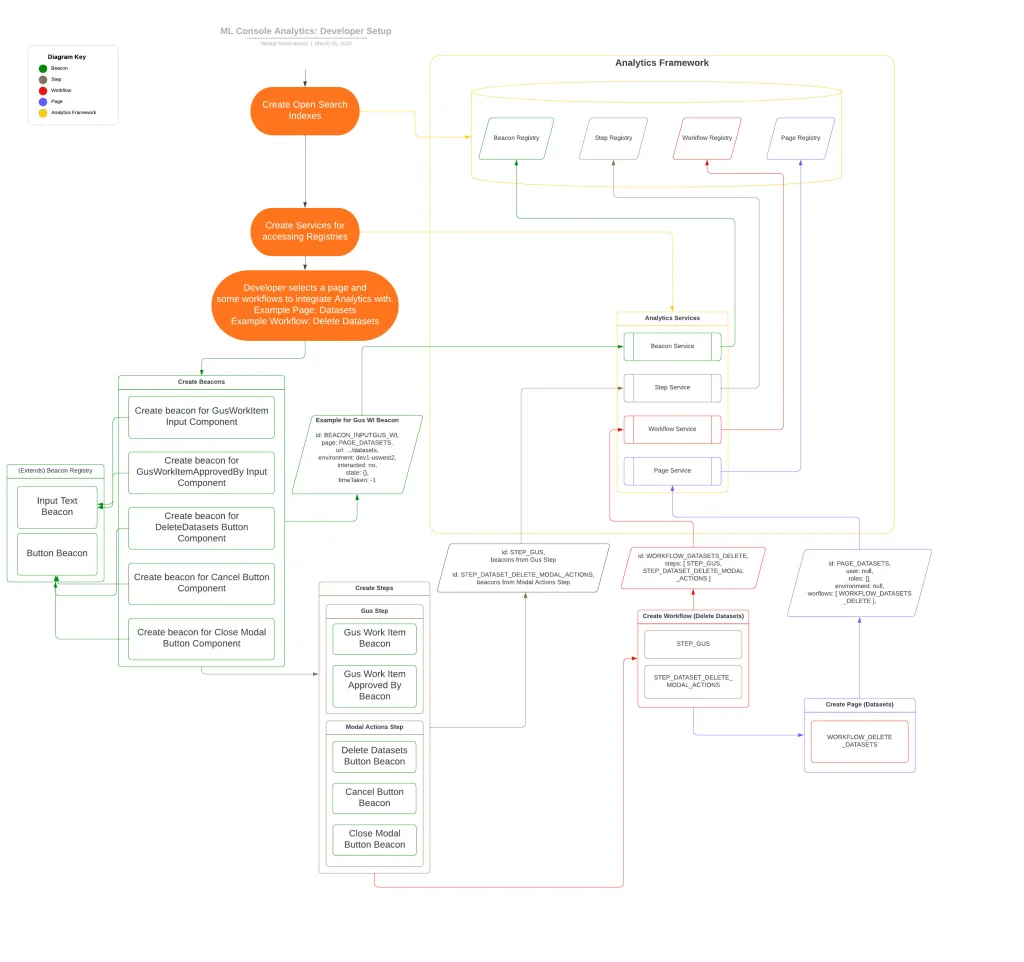

Lastly, we’re focused on improving the developer experience, enabling both internal and external app developers to use the Data Cloud platform through a variety of libraries, toolkits, and utilities.

Learn More

- Read this blog to learn how Data Cloud’s team are scaling massive data volumes and slashing performance bottlenecks.

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.