There has been a major buzz lately around transitioning to HTTP/2. Ever since the protocol draft was ratified, we’re hearing more and more about browsers, web servers and websites adding support for HTTP/2. Web performance experts encourage everybody to adopt the new standard and enjoy its empirically proven speed gains. There are many posts out there that explain what HTTP/2 is, so we’ll skip that and focus on one critical aspect that has not been addressed as well.

It is an old networking problem called “Head of Line Blocking” (HOLB). You can think of it as the long queue at the DMV office, where people are served in strict order. The “head of line” person is the one currently being served, and they can potentially block the whole line of waiting people. Now imagine a queue of data packets that must be processed in order: add variable processing times, network latency or some packet loss, and you have HOLB.
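To make the queueing intuition concrete, here is a minimal Python sketch, assuming a single server that handles items one at a time in strict arrival order (the function name `fifo_finish_times` is ours, purely for illustration). It computes when each item finishes and shows how one slow item at the head pushes back everyone behind it.

```python
from typing import List


def fifo_finish_times(service_times: List[float]) -> List[float]:
    """Finish time of each item in a strict-order queue served one at a time.

    An item can only start once every item ahead of it has finished,
    so a slow item at the head delays everyone behind it.
    """
    finish_times: List[float] = []
    clock = 0.0
    for service in service_times:
        clock += service  # wait for everything ahead, then get served
        finish_times.append(clock)
    return finish_times


# Four items that each need 1 time unit...
print(fifo_finish_times([1, 1, 1, 1]))   # [1.0, 2.0, 3.0, 4.0]
# ...versus the same queue when the head item needs 10 units.
print(fifo_finish_times([10, 1, 1, 1]))  # [10.0, 11.0, 12.0, 13.0]
```

Nothing about the last three items changed between the two runs; each finishes 9 units later purely because of the item ahead of it.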

HTTP/2 solves one kind of HOLB by multiplexing HTTP requests. Going back to the DMV analogy, this is like serving multiple folks in parallel. But how is that possible? The DMV employee cannot speak with all of them at the same time, right? But imagine them saying: “Please stand aside while the computer is processing your request. Next in line, please!” In this example, the slow computer is the cause of HOLB, and we work around it by reusing the computer’s processing time, which would otherwise be lost, to start handling a new request.

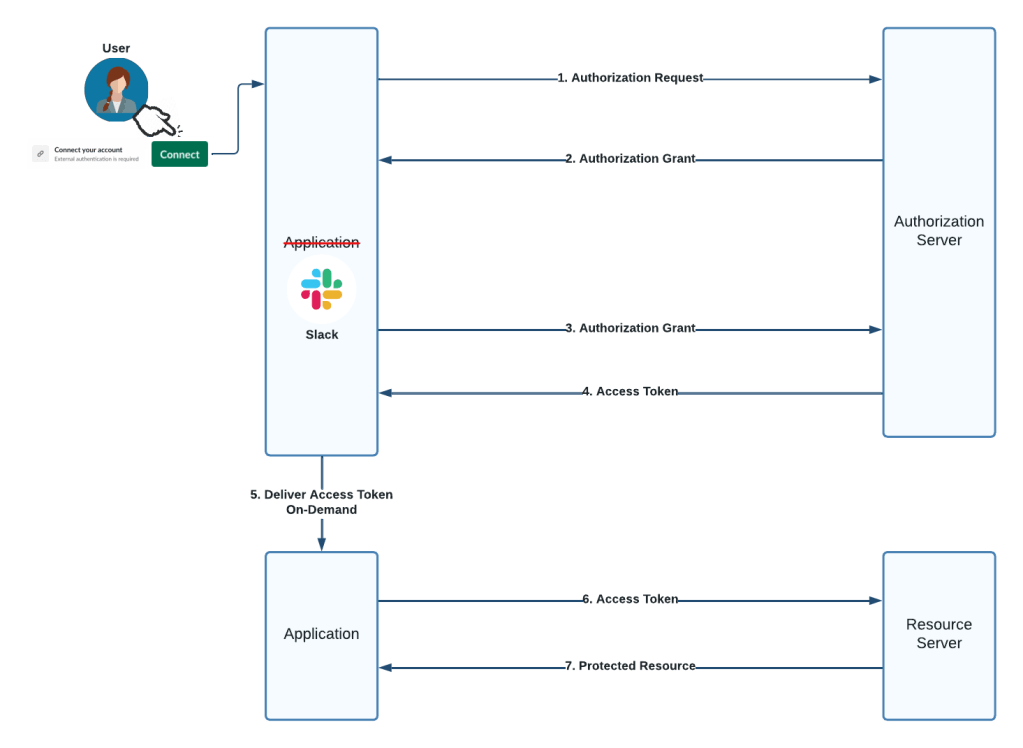

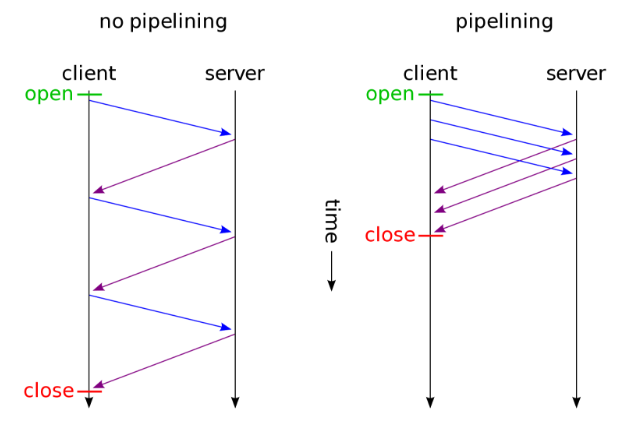

The “HTTP lost time” refers to the request-response round-trip and the server processing time: a new request cannot be started before we have received the response to the previous one, so the network latency and the complexity of each request dictate how long we sit idle. It is worth noting that HTTP/1.1 supports pipelining, but it did not really solve this kind of HOLB: responses must still be returned in the order the requests were sent, so a request that takes a lot of server processing time holds up all the responses behind it. With HTTP/2 multiplexing, we get something akin to the DMV example above, where multiple requests and responses can be in flight at the same time. So does HTTP/2 solve HOLB?
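The difference between the three modes can be sketched with a toy timing model in Python. It assumes a single connection, all requests known up front, negligible transmission time, and a server that processes pipelined requests concurrently; the function names and millisecond figures are hypothetical. Under those assumptions, `sequential` pays a full round-trip per request, `pipelined` pays one round-trip but must return responses in request order, and `multiplexed` lets each response return as soon as it is ready.

```python
from itertools import accumulate
from typing import List


def sequential(rtt: float, proc: List[float]) -> List[float]:
    # HTTP/1.1 without pipelining: the next request is sent only after
    # the previous response has fully arrived.
    return list(accumulate(rtt + p for p in proc))


def pipelined(rtt: float, proc: List[float]) -> List[float]:
    # HTTP/1.1 pipelining: all requests go out up front, but responses
    # must come back in request order, so each response waits for the
    # slowest request queued ahead of it (running max of processing times).
    return [rtt + m for m in accumulate(proc, max)]


def multiplexed(rtt: float, proc: List[float]) -> List[float]:
    # HTTP/2 multiplexing: each response is an independent stream and can
    # be returned in any order, so it only pays for its own processing.
    return [rtt + p for p in proc]


rtt = 100             # round-trip time in ms (illustrative)
proc = [500, 10, 10]  # server time per request in ms; the first one is slow
print(sequential(rtt, proc))   # [600, 710, 820]
print(pipelined(rtt, proc))    # [600, 600, 600]
print(multiplexed(rtt, proc))  # [600, 110, 110]
```

Pipelining removes the extra round-trips, but the two quick requests still wait behind the slow one; only multiplexing lets them finish after 110 ms.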

The full answer is “Yes and No”. There are actually two HOLB problems: one at the HTTP level and one at the TCP level. Just as the HTTP server processes requests, the TCP stack has to process packets and make sure all the bytes have been received, in order, before HTTP can consume them. Imagine a queue of packets that belong to several application requests. If the packet at the head of that queue is delayed or lost, every packet behind it waits, and you have HOLB at the TCP layer.

HTTP/2 does solve the problem at the HTTP level. However, it is layered on top of TCP just like HTTP/1.1, so it cannot solve TCP HOLB. Are there scenarios where it could even make things worse? Yes. Because of multiplexing, applications using HTTP/2 can send many more requests over a single TCP connection. Unexpectedly large variations in latency, or a loss affecting the segment at the TCP head of line, make HTTP/2 more likely to hit HOLB, and the impact is larger as well, since every stream on that connection stalls at once. Basically, the receiver sits idle until the head segment is recovered, while all subsequent segments are held back by the TCP stack. This means that an image might be fully downloaded and still not show up because of HOLB.
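A minimal Python model of the TCP side, assuming each segment belongs to exactly one HTTP/2 stream and that a lost segment simply shows up later once it is retransmitted (stream names and times are made up for illustration). TCP hands bytes to HTTP/2 strictly in order, so one late segment at the head delays every stream behind it.

```python
from typing import Dict, List, Tuple


def tcp_delivery_times(arrivals: List[float]) -> List[float]:
    """Time each segment is released to the application by an in-order byte stream.

    arrivals[k] is when segment k reached the receiver (a retransmitted
    segment just has a late arrival time). Segment k cannot be released
    before every earlier segment has been released.
    """
    delivered: List[float] = []
    ready = 0.0
    for arrival in arrivals:
        ready = max(ready, arrival)
        delivered.append(ready)
    return delivered


def stream_completion(segments: List[Tuple[str, float]]) -> Dict[str, float]:
    """Completion time per stream when all streams share one TCP connection."""
    delivered = tcp_delivery_times([arrival for _, arrival in segments])
    done: Dict[str, float] = {}
    for (stream, _), t in zip(segments, delivered):
        done[stream] = max(done.get(stream, 0.0), t)
    return done


# The "css" segment at the head is lost and only arrives (retransmitted) at
# t=300 ms; every "img" segment has already arrived by t=40 ms.
segments = [("css", 300.0), ("img", 20.0), ("js", 30.0), ("img", 40.0)]
print(stream_completion(segments))  # {'css': 300.0, 'img': 300.0, 'js': 300.0}
```

The image's bytes are all on the receiver by 40 ms, yet HTTP/2 cannot see them until 300 ms: the "downloaded but not shown" effect.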

TCP has some means of recovering from HOLB, but at a high cost: several seconds in bad cases. Retransmitting the “head of line” segment involves at least one round-trip, and these lost round-trips have a devastating impact on mobile performance.
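To see where “several seconds” can come from, here is a Python sketch of the retransmission timeout (RTO) computation described in RFC 6298: smoothed RTT and RTT variance with α = 1/8, β = 1/4, K = 4, a recommended 1-second floor, and doubling on each consecutive timeout. It models only the timeout path; fast retransmit can recover in roughly one round-trip when enough later segments arrive to trigger duplicate ACKs, and some real stacks use a lower floor than 1 s. The class and helper names are ours, for illustration only.

```python
from typing import Optional

ALPHA, BETA, K = 1 / 8, 1 / 4, 4  # RFC 6298 constants
MIN_RTO, MAX_RTO = 1.0, 60.0      # seconds: 1 s floor recommended, optional cap >= 60 s


class RtoEstimator:
    def __init__(self, granularity: float = 0.001) -> None:
        self.g = granularity               # clock granularity G, in seconds
        self.srtt: Optional[float] = None
        self.rttvar: Optional[float] = None
        self.rto = 1.0                     # initial RTO before any RTT sample

    def on_rtt_sample(self, r: float) -> None:
        if self.srtt is None or self.rttvar is None:
            # The first measurement initializes both estimators.
            self.srtt, self.rttvar = r, r / 2
        else:
            # RTTVAR is updated with the *old* SRTT, then SRTT itself.
            self.rttvar = (1 - BETA) * self.rttvar + BETA * abs(self.srtt - r)
            self.srtt = (1 - ALPHA) * self.srtt + ALPHA * r
        rto = self.srtt + max(self.g, K * self.rttvar)
        self.rto = min(max(rto, MIN_RTO), MAX_RTO)

    def on_timeout(self) -> None:
        # Exponential backoff: each consecutive timeout doubles the RTO.
        self.rto = min(self.rto * 2, MAX_RTO)


def stall_for_lost_head(est: RtoEstimator, lost_transmissions: int) -> float:
    """Total timer wait if the head segment's first N transmissions are all lost
    (ignores the one-way delay of the copy that finally gets through)."""
    waited = 0.0
    for _ in range(lost_transmissions):
        waited += est.rto
        est.on_timeout()
    return waited


est = RtoEstimator()
est.on_rtt_sample(0.1)              # a 100 ms path still gets the 1 s floor
print(est.rto)                      # 1.0
print(stall_for_lost_head(est, 3))  # 7.0  (1 + 2 + 4 seconds)
```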

This is probably why Google did not stop at SPDY (HTTP/2’s precursor) but went on to experiment with QUIC, one of whose stated goals is mitigating HOLB. There is also an IETF draft that proposes modifying TCP to tackle HOLB. While this is a recognized problem, there have not been good solutions that developers can readily adopt.

Salesforce has a powerful, field-tested approach to mitigating HOLB at the TCP layer: we decouple HTTP requests from TCP connections. Think of your transport as composed of multiple TCP connections (as many as the network context needs). Any part of an HTTP request can go over any TCP connection. So if one connection hits HOLB, this not only helps mitigate the impact on the affected requests, it also minimizes the impact on other application requests, which keep using healthy connections. The result is that you can enjoy the benefits of multiplexing and pipelining at the HTTP layer while minimizing the risk of HOLB.
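The core idea (any chunk of any request may ride any connection) can be sketched in Python. This is a hypothetical illustration under simple assumptions, not Salesforce’s implementation: chunks are assigned round-robin across a pool of connections, connections flagged as stalled are skipped for new chunks, and a request counts as affected if any of its chunks rides the stalled connection. How stalls are detected, and whether chunks already on a stalled connection are re-sent elsewhere, are left out.

```python
from itertools import cycle
from typing import List, Set, Tuple

Assignment = Tuple[str, int, int]  # (request id, chunk index, connection id)


class ChunkScheduler:
    """Hypothetical scheduler: spreads chunks of any request over a connection
    pool, round-robin, skipping connections currently flagged as stalled."""

    def __init__(self, num_connections: int) -> None:
        self.num_connections = num_connections
        self.stalled: Set[int] = set()
        self._rr = cycle(range(num_connections))

    def mark_stalled(self, conn: int) -> None:
        self.stalled.add(conn)

    def mark_healthy(self, conn: int) -> None:
        self.stalled.discard(conn)

    def pick_connection(self) -> int:
        # Try each connection at most once per pick; never choose a stalled one.
        for _ in range(self.num_connections):
            conn = next(self._rr)
            if conn not in self.stalled:
                return conn
        raise RuntimeError("all connections are stalled")

    def send(self, request_id: str, num_chunks: int) -> List[Assignment]:
        # Each chunk of the request may travel on a different connection.
        return [(request_id, i, self.pick_connection()) for i in range(num_chunks)]


def requests_affected(assignments: List[Assignment], stalled_conn: int) -> Set[str]:
    # Worst case: a request is delayed if any of its chunks rides the stalled connection.
    return {req for req, _, conn in assignments if conn == stalled_conn}


pool = ChunkScheduler(3)
sent = pool.send("page", 3) + pool.send("img", 2)
print(sorted(requests_affected(sent, stalled_conn=2)))  # ['page']
pool.mark_stalled(2)
print([conn for _, _, conn in pool.send("js", 3)])      # [0, 1, 0]

single = ChunkScheduler(1)  # everything on one connection, like a single HTTP/2 connection
sent_single = single.send("page", 3) + single.send("img", 2)
print(sorted(requests_affected(sent_single, stalled_conn=0)))  # ['img', 'page']
```

With one connection, a stall at its head touches every in-flight request; with a pool, the blast radius shrinks to the requests that happened to use the stalled connection, and new chunks route around it.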

HTTP/2 is an exciting new revision of the HTTP protocol, and there is no doubt about the performance gains it can deliver in the field. However, it also raises questions about how best to use its new features, and experience from real-world deployments will be our best guide.
