By Ruchita Jadav and Rishu Aggarwal.

In our Engineering Energizers Q&A series, we shine a spotlight on engineering innovators who are shaping the future of AI. Today, we feature Ruchita Jadav, whose team is revolutionizing the AI model serving at Salesforce. They are innovating on how to enhance model inferencing by researching and implementing new advancements in this field, thereby enhancing overall efficiency.

Discover how Ruchita’s team addressed the challenges of reducing AI infrastructure costs, improving GPU utilization across various AI workloads, and efficiently scaling AI infrastructure to meet growing business demands — all while ensuring smooth AI model deployment and maintaining cost efficiency.

What is your team’s mission?

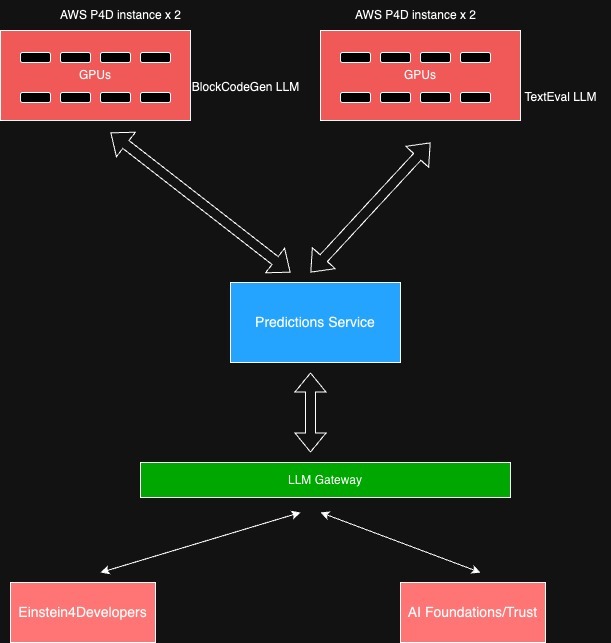

The Einstein AI Platform Model Serving team is dedicated to developing and managing services that power Large Language Models (LLMs) and other AI workloads within Salesforce AI. Our mission is to optimize cost efficiency, streamline model deployment, and enhance inference performance, ensuring seamless integration into products such as Agentforce4Developers and Agentforce4Flow.

A core part of our focus is on model onboarding, providing customers with a robust infrastructure to host a variety of ML models. We achieve this through an SDK and deployment pipeline that automates the deployment process while maintaining reliability at scale. We also continuously improve performance and scalability by enhancing our inference systems with advanced monitoring, observability, and maturity features to ensure reliable real-world operation.

To cater to different customer needs, we offer both self-served and fully-managed AI services, providing businesses with the flexibility they need to adopt and deploy AI. By integrating these capabilities, we help accelerate AI adoption within Salesforce, balancing performance, cost, and flexibility.

What was a major technical obstacle your team faced in optimizing GPU utilization?

One of the biggest hurdles we faced was efficiently managing GPU resources for models with vastly different performance and infrastructure needs. Our platform supports models ranging from a few gigabytes to 20–30 GB, each with unique production demands.

For larger models (20–30 GB) with lower traffic, we needed high-performance GPUs, but these models often underutilized multi-GPU instances. Conversely, medium-sized models (around 15 GB) with high traffic required low-latency, high-throughput processing, but they often faced over-provisioning and higher costs due to the same multi-GPU setup.

To tackle this, we partnered with AWS to introduce GPU-level isolation within multi-GPU instances, enabling multiple models to dynamically share GPU resources based on their actual demand. This solution has brought several key benefits:

- Optimized Resource Allocation: Models now share GPUs more efficiently, reducing unnecessary provisioning.

- Cost Reduction: By minimizing idle GPU resources, we’ve achieved significant infrastructure savings.

- Enhanced Performance for Smaller Models: Smaller models can now use high-power GPUs to meet their latency and throughput needs without incurring excessive costs.

By refining GPU allocation at the model level, we’ve improved resource efficiency and maintained performance across a wide range of AI workloads.

AI Platform inference via single model endpoints.

AI Platform inference via inference components endpoints.

How do you manage challenges related to scalability in AI engineering?

One of the biggest challenges in AI engineering is ensuring scalability while keeping costs under control and managing limited GPU resources. GPUs are expensive and often in short supply, so it’s crucial to scale AI systems efficiently without over-provisioning hardware. Our customers at Salesforce AI are constantly facing growing demands, which require us to optimize inference to support a wide range of business use cases.

To address these challenges, we leverage AWS SageMaker’s Inference Components. This technology, typically Multi-Container Endpoints, helps us make the most of our GPU resources on large AWS EC2 instances. It dynamically redistributes resources during traffic spikes, which means we don’t have to manually adjust the hardware every time demand changes.

The latest AWS EC2 instances, such as the P5, P5e, and P5en, are equipped with Nvidia H200 Tensor Core GPUs. These GPUs offer high performance and heavy computing capabilities, but they come with a significant cost, which often limits their usage.

However, with AWS SageMaker Inference Components, we can significantly reduce these costs by up to 8X. This is achieved by hosting multiple large language models (LLMs) on these powerful AWS EC2 instances.

These cost savings are substantial and open up new opportunities for using high-end, expensive GPUs in a cost-effective manner. Through the Salesforce AI Platform, customers can now leverage these advanced GPUs for serving ML inference without the usual financial constraints.

One of the key areas of development is focused on Inference Components, which are crucial for optimizing costs while providing scalable solutions. Currently, AWS SageMaker doesn’t offer a fully on-demand service, especially for high-power GPUs like the H100s and A100s. This limitation can create significant capacity constraints for Salesforce Clouds, requiring us to forecast traffic accurately and manually provision AWS resources.

To tackle this issue, we partner with AWS to enhance Inference Components in AI Platform with AWS provided auto-scaling capabilities. This allows each model to dynamically scale up or down within a container based on configured GPU limits. By hosting multiple models on the same endpoint and automatically adjusting capacity in response to traffic fluctuations, we can significantly reduce the costs associated with traffic spikes. This ensures that AWS EC2 capacity is only provisioned when necessary, leading to more efficient resource management and cost savings.

By leveraging these auto-scaling capabilities, Inference Components can set up endpoints with multiple copies of models and automatically adjust GPU resources as traffic fluctuates. This ensures that our AI models can handle varying workloads efficiently without compromising performance.

In summary, Inference Components help the Salesforce AI Platform and its customers achieve greater scalability by optimizing GPU usage. This approach allows our customers to make the most of their AI infrastructure investments, ensuring long-term sustainability and enabling Salesforce to serve more customers with the available GPU resources.

How did your team introduce new features to SageMaker while maintaining stability, performance, and usability?

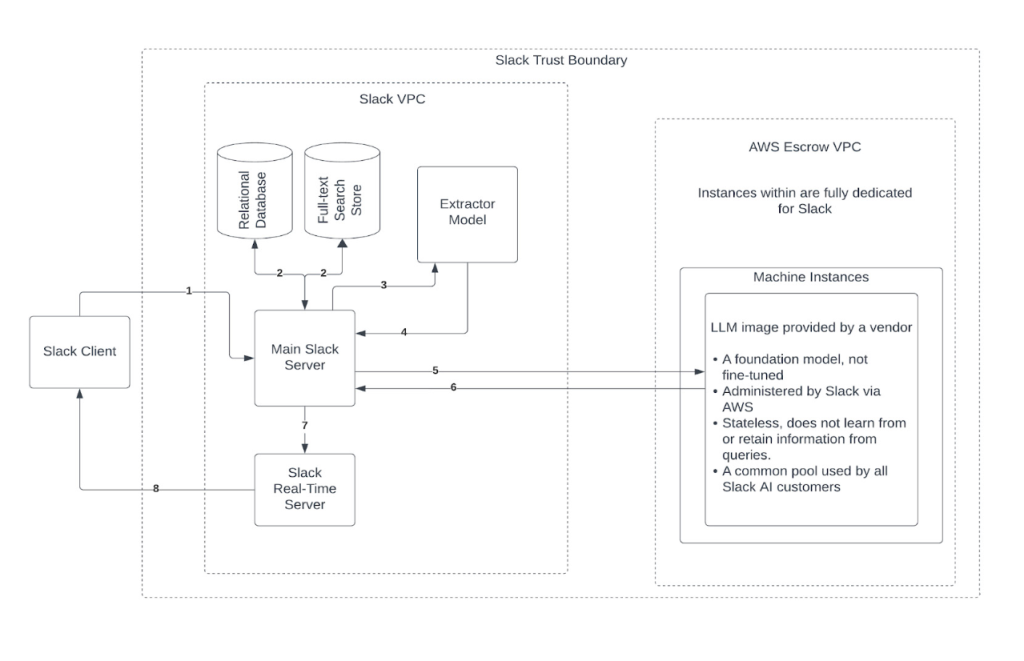

To expand hosting options on SageMaker without affecting stability, performance, or usability, we introduced the Inference Component (IC) alongside the existing Single Model Endpoint (SME).

The Single Model Endpoint (SME) provides dedicated hosting for each model, ensuring that each model runs on its own isolated instance with reserved resources. This setup guarantees consistent and predictable performance.

The Inference Component (IC) adds flexibility by allowing model packages to be decoupled from specific instances. Unlike SME, IC supports multi-model endpoints, enabling multiple models to share instance resources efficiently. Here’s how it works:

- Endpoint Creation: We first create the endpoint using predefined instance types.

- Dynamic Model Attachment: Model packages are then attached dynamically, spinning up individual containers as needed.

By offering both SME and IC, we give customers the choice between dedicated hosting for consistent performance and resource-efficient deployment for cost savings. This careful separation of functionalities ensures that the introduction of IC does not disrupt the existing SME deployments, maintaining overall system stability and performance.

What was the most significant technical challenge your team faced recently?

One of the most significant technical challenges the AI Platform team has faced is reducing the Cost to Serve (CTS) for AI business applications. As AI adoption continues to grow, ensuring long-term sustainability requires a financially viable and scalable approach, further complicated by the need to support multi-cloud environments for greater deployment flexibility.

With the surge in Generative AI in 2023, continuing over into 2025, the focus has shifted to latency improvements, cost optimization, and platform sustainability. Many AI customers struggle with high infrastructure costs, making it difficult to scale. To tackle this, the team has developed solutions that significantly lower CTS while maintaining performance and reliability.

A key innovation is the Multi-Container Endpoints, which represent a significant evolution from the traditional Single Model Endpoint approach. This technology allows multiple models to share AWS EC2 instances (such as P4Ds, G5.48XLs, and P5s) by optimizing GPU utilization. This can reduce inference costs by up to 80% compared to single-model deployments. This is particularly beneficial for LLM models with moderate to low traffic, making AI more accessible and cost-effective while enabling businesses to scale without incurring excessive infrastructure expenses.

Learn more

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.