By Claudia Santoro and Sandeep Pal.

In our Engineering Energizers Q&A series, we shine a spotlight on the innovative engineering minds at Salesforce. Today, we feature Claudia Santoro, SVP of Software Engineering, whose team developed MultiCloudJ — a cloud-agnostic Java SDK that allows Hyperforce service teams to deploy seamlessly across AWS, GCP, and Alibaba Cloud without the need for provider-specific code.

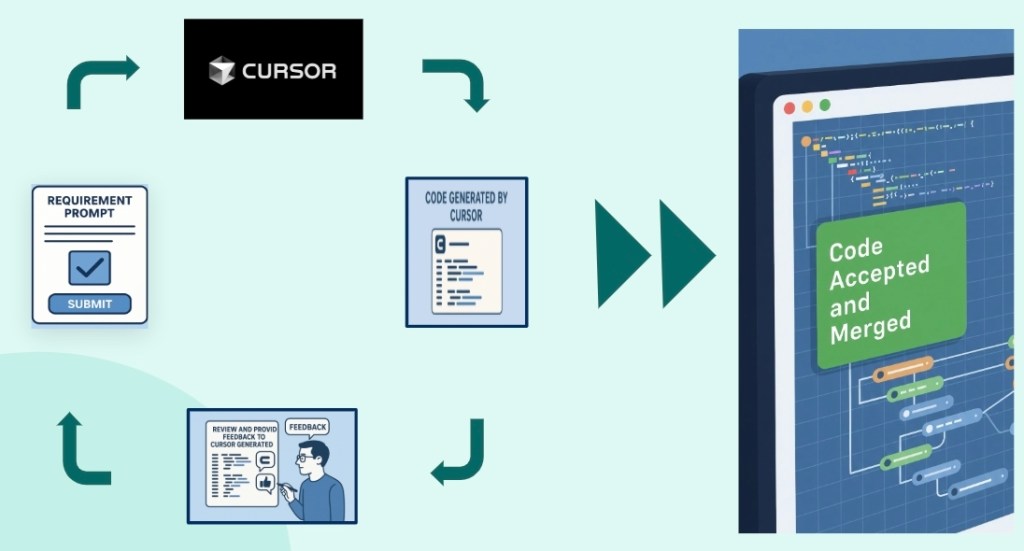

Discover how AI coding tools like Claude and Cursor accelerated MultiCloudJ’s development tasks from weeks to days, enabling the team to meet tight 3-4 month GCP onboarding deadlines. Learn how WireMock’s record/replay testing ensured consistent behavior across the three cloud providers without compromising security in CI. Additionally, explore how an AI-powered MCP server streamlined multi-week migration efforts into guided workflows that can be completed in just days.

What is your team’s mission building MultiCloudJ, the cloud-agnostic Java SDK for Salesforce?

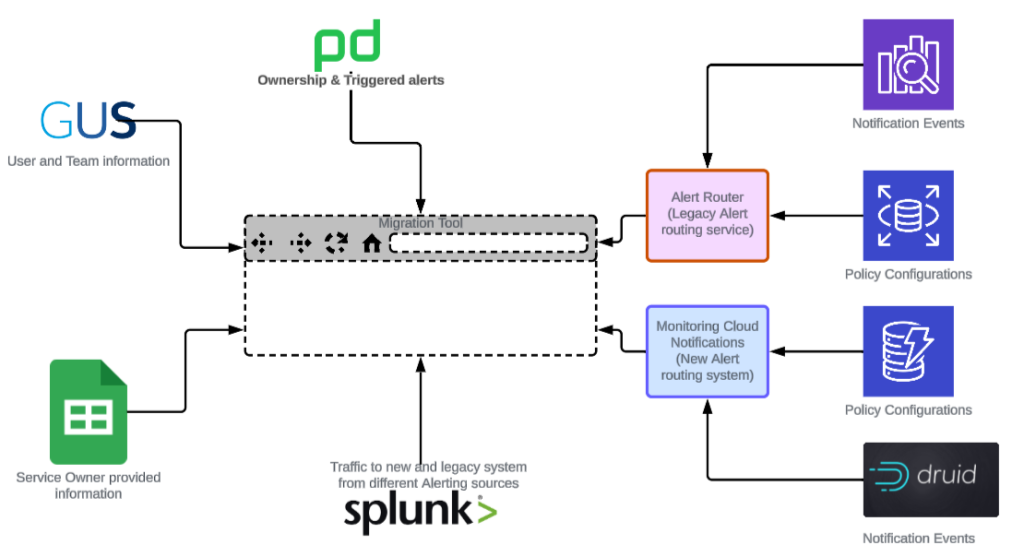

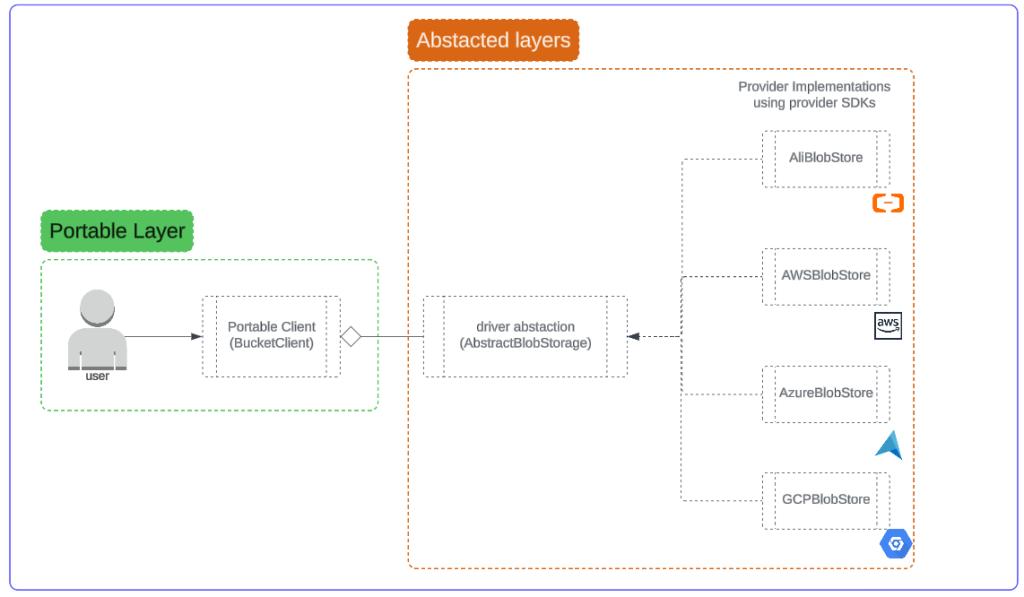

Our team developed MultiCloudJ to make our services cloud-agnostic by default. We designed a three-layer architecture to absorb semantic differences across cloud providers, so service teams never have to write provider-specific code paths.

- Client Layer: Provides a stable, portable API contract.

- Driver Layer: Bridges provider contracts and translates between our portable API and provider implementations.

- Provider Layer: Normalizes behavior, including status codes, pagination styles, checksums, conditional operations, and error mapping.

High-Level 3-Layer architecture of MultiCloudJ.

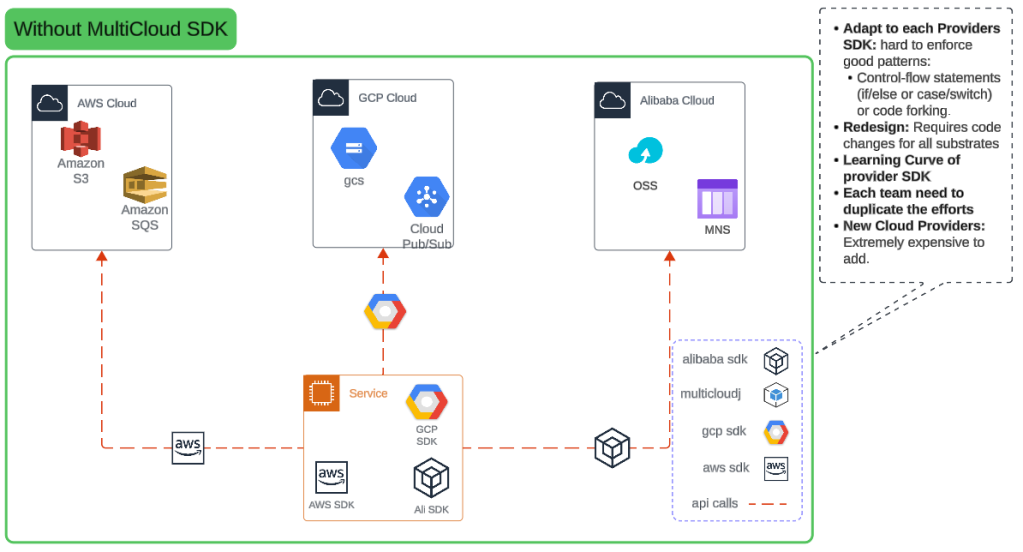
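To make the layering concrete, here is a minimal sketch of how the three layers could fit together for object storage. Every name in it (`BlobClient`, `BlobDriver`, `InMemoryBlobDriver`) is hypothetical and chosen for illustration; it is not MultiCloudJ's actual API, and the in-memory class merely stands in for a real provider implementation.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical names throughout; this is an illustration, not MultiCloudJ's real API.

/** Driver layer: the contract every provider implementation must satisfy. */
interface BlobDriver {
    void put(String key, byte[] data);
    Optional<byte[]> get(String key);
    void delete(String key); // expected to succeed even when the key is absent
}

/** Provider layer: one implementation per cloud; an in-memory stand-in here. */
final class InMemoryBlobDriver implements BlobDriver {
    private final Map<String, byte[]> objects = new HashMap<>();

    @Override public void put(String key, byte[] data) { objects.put(key, data.clone()); }

    @Override public Optional<byte[]> get(String key) {
        return Optional.ofNullable(objects.get(key)).map(bytes -> bytes.clone());
    }

    @Override public void delete(String key) { objects.remove(key); }
}

/** Client layer: the stable API that service code depends on. */
final class BlobClient {
    private final BlobDriver driver;

    BlobClient(BlobDriver driver) { this.driver = driver; }

    void upload(String key, byte[] data) { driver.put(key, data); }
    Optional<byte[]> download(String key) { return driver.get(key); }
    void delete(String key) { driver.delete(key); }
}
```

In a design like this, service code would only ever hold a `BlobClient`; swapping clouds means constructing it with a different driver.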

We built this architecture to solve a fundamental portability problem. Before MultiCloudJ, internal development teams maintained separate codebases, often in duplicate repositories, for each cloud provider. This multiplied the maintenance burden and slowed feature velocity. Cloud provider APIs look similar but behave differently in critical ways. For example, deleting a non-existent object returns a success status on AWS (S3 responds with 204 No Content) but 404 Not Found on GCP. Without MultiCloudJ, every service team had to solve these same semantic differences redundantly. A slightly more complex example is pagination in document stores: DynamoDB returns an explicit LastEvaluatedKey, whereas Google’s Firestore requires the caller to build a cursor from the last document of the page.
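As a rough illustration of the delete difference, a provider layer could map raw HTTP statuses onto a single portable outcome. The class and method names below are hypothetical, and the sketch assumes the layer can see the provider's status code; real provider SDKs typically surface a missing object as an exception instead.

```java
// Hypothetical sketch: names are illustrative, not MultiCloudJ's API.
final class DeleteNormalizer {
    private DeleteNormalizer() {}

    /** Returns true when a raw provider status should count as a successful delete. */
    static boolean isSuccessfulDelete(int httpStatus) {
        // 2xx: the provider removed the object or treated the call as a no-op.
        if (httpStatus >= 200 && httpStatus < 300) {
            return true;
        }
        // 404: the object was already gone, which is the outcome the caller wanted.
        return httpStatus == 404;
    }

    /** Maps a raw status to the portable contract: return normally or throw. */
    static void checkDelete(String key, int httpStatus) {
        if (!isSuccessfulDelete(httpStatus)) {
            throw new IllegalStateException("delete failed for " + key + ": HTTP " + httpStatus);
        }
    }
}
```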

In response, we offer portable APIs for object storage, pub/sub, and key-value stores. These APIs ensure that delete-missing operations are idempotent and return success everywhere, and pagination is unified through iterators. When we need to add a new cloud provider, we implement a new provider layer in MultiCloudJ — service teams don’t need to rewrite anything.
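One way pagination could be unified behind an iterator is sketched below. `Page` and `PagedIterator` are hypothetical names, not MultiCloudJ types. The idea is that each provider supplies a function from an opaque token to the next page: a DynamoDB-style implementation would pass along the LastEvaluatedKey it received, while a Firestore-style implementation would derive the token from the last document of the previous page.

```java
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.function.Function;

/** One page of results plus an opaque token for the next page (null when done). */
final class Page<T> {
    final List<T> items;
    final String nextToken;

    Page(List<T> items, String nextToken) {
        this.items = items;
        this.nextToken = nextToken;
    }
}

/** Walks all pages lazily; callers never see provider tokens or cursors. */
final class PagedIterator<T> implements Iterator<T> {
    private final Function<String, Page<T>> fetchPage; // token -> page; null token = first page
    private Iterator<T> current = Collections.emptyIterator();
    private String nextToken;
    private boolean started;

    PagedIterator(Function<String, Page<T>> fetchPage) {
        this.fetchPage = fetchPage;
    }

    @Override public boolean hasNext() {
        // Keep fetching while the current page is exhausted and more pages may exist.
        while (!current.hasNext() && (!started || nextToken != null)) {
            Page<T> page = fetchPage.apply(nextToken);
            started = true;
            current = page.items.iterator();
            nextToken = page.nextToken;
        }
        return current.hasNext();
    }

    @Override public T next() {
        if (!hasNext()) {
            throw new NoSuchElementException();
        }
        return current.next();
    }
}
```

Service code would then iterate results with an ordinary loop, regardless of which pagination style the underlying store uses.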

With AI-assisted onboarding via an MCP server and tools like Claude and Cursor, teams can quickly move from provider SDKs to our single, maintainable abstraction, accelerating the transition process.

Claudia shares what keeps her at Salesforce.

What technical challenges did AI coding tools solve building MultiCloudJ within aggressive 3-4 month GCP timelines?

We faced aggressive 3-4 month timelines to deliver MultiCloudJ for GCP onboarding while ensuring reliability across multiple cloud providers. The challenge was to determine which APIs to prioritize and catalog the behavioral differences across AWS, GCP, and Alibaba, while designing with Azure in mind. Our goal was to keep the portable API consistent and cover the majority of use cases required by service teams.

Our design choices were crucial for velocity. We chose provider SDKs over raw REST APIs to leverage built-in features like authentication, signing, retries, timeouts, and pagination. This allowed us to focus on the normalization layer, ensuring consistent behavior across different cloud providers. We also added custom features such as credential overrides and configurable retry policies to handle edge cases at Salesforce scale.
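The interview does not describe how the configurable retry policies are implemented. As a generic illustration only, a policy with exponential backoff could look like the sketch below; `RetryPolicy` and its parameters are hypothetical, and for brevity it retries on any exception, whereas a production policy would usually retry only errors known to be transient.

```java
import java.util.concurrent.Callable;

/** Hypothetical configurable retry policy with exponential backoff. */
final class RetryPolicy {
    private final int maxAttempts;
    private final long initialDelayMillis;
    private final double multiplier;

    RetryPolicy(int maxAttempts, long initialDelayMillis, double multiplier) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        this.maxAttempts = maxAttempts;
        this.initialDelayMillis = initialDelayMillis;
        this.multiplier = multiplier;
    }

    /** Delay before the retry that follows the given 1-based failed attempt. */
    long delayMillisAfter(int attempt) {
        return (long) (initialDelayMillis * Math.pow(multiplier, attempt - 1));
    }

    /** Runs the call, retrying on any exception until attempts are exhausted. */
    <T> T execute(Callable<T> call) throws Exception {
        Exception last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return call.call();
            } catch (Exception e) {
                last = e;
                if (attempt < maxAttempts) {
                    Thread.sleep(delayMillisAfter(attempt));
                }
            }
        }
        throw last; // non-null here because maxAttempts >= 1
    }
}
```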

AI coding tools like Claude and Cursor, along with robust CI practices, enabled faster iteration. These tools accelerated our development cycles, while unit and conformance tests provided over 80 percent coverage and kept quality high. This combination allowed us to develop faster, safer, and more confidently.

We conducted a thorough org-wide use-case analysis to prioritize the most common APIs. With three clouds already supported, the contracts and conformance harness are in place, making future additions smoother. AI-assisted workflows dramatically reduced development time, turning weeks of effort into days of focused work.

Claudia dives deeper into how Cursor boosts her team’s productivity.

What WireMock testing challenges emerged validating consistent multi-cloud behavior across AWS, GCP, and Alibaba providers?

Conformance tests were the most challenging yet crucial part of our project. We needed to ensure uniform behavior across different cloud providers, addressing specific differences like delete operations, pagination, and multipart uploads. However, testing these providers in CI was a hurdle because integration tests require credentials.

To solve this, we leveraged WireMock, an open-source tool, as a forward proxy. Using its record/replay feature, we recorded real HTTP transactions on developer machines with credentials and then replayed them in CI. This method keeps CI safe, deterministic, and fast, while still reflecting real-world traffic.
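The record/replay idea can be shown in a few lines of plain Java. This sketch deliberately does not use WireMock's API; `RecordReplay` is a hypothetical class that models a request as a string key and a response as a string body, purely to show why replayed runs need neither network access nor credentials.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical illustration of record/replay; not WireMock's API. */
final class RecordReplay {
    // Request key (for example "DELETE /bucket/key") mapped to the recorded response.
    private final Map<String, String> recordings = new HashMap<>();

    /** Record mode: forward to the real backend and remember each response. */
    Function<String, String> recording(Function<String, String> realBackend) {
        return request -> {
            String response = realBackend.apply(request);
            recordings.put(request, response);
            return response;
        };
    }

    /** Replay mode: answer only from recordings, with no network and no credentials. */
    Function<String, String> replaying() {
        return request -> {
            String response = recordings.get(request);
            if (response == null) {
                throw new IllegalStateException("no recording for " + request);
            }
            return response;
        };
    }
}
```

Failing loudly on an unrecorded request is a deliberate choice in this sketch: it keeps replayed runs deterministic instead of silently reaching out to a live service.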

Our test suite is designed to be cloud-agnostic. The conformance tests focus on the portable API and are written once. Each cloud provider only needs to configure its client and settings. The same test suite then validates consistent behavior across AWS, GCP, and Alibaba. If any provider deviates from the expected behavior, we catch it in CI before it goes live.
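A write-once conformance suite of this kind could be structured roughly as follows. The names (`KeyValueStore`, `KeyValueConformance`, `MapBackedStore`) are hypothetical, and the checks use plain assertions rather than a test framework so the sketch stays self-contained; each provider contributes only a factory for its configured client.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

/** Hypothetical portable contract under test. */
interface KeyValueStore {
    void put(String key, String value);
    String get(String key); // returns null when the key is absent
    void delete(String key);
}

/** Written once against the portable contract; each provider supplies only a factory. */
final class KeyValueConformance {
    private KeyValueConformance() {}

    static void run(String provider, Supplier<KeyValueStore> factory) {
        KeyValueStore store = factory.get();
        store.put("k", "v");
        check(provider, "get returns stored value", "v".equals(store.get("k")));
        store.delete("k");
        check(provider, "get after delete returns null", store.get("k") == null);
        store.delete("k"); // deleting a missing key must not throw
    }

    private static void check(String provider, String rule, boolean ok) {
        if (!ok) {
            throw new AssertionError(provider + " violates: " + rule);
        }
    }
}

/** Stand-in provider used only for this sketch. */
final class MapBackedStore implements KeyValueStore {
    private final Map<String, String> data = new HashMap<>();

    @Override public void put(String key, String value) { data.put(key, value); }
    @Override public String get(String key) { return data.get(key); }
    @Override public void delete(String key) { data.remove(key); }
}
```

Running the same suite per provider would then be one line each, for example `KeyValueConformance.run("in-memory", MapBackedStore::new)`.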

This testing strategy has earned significant trust. With over 80 percent coverage from both unit and conformance tests, we ensure deterministic cross-cloud behavior and catch issues early. For infrastructure that hundreds of services depend on, this level of testing rigor is essential for reliable adoption.

What AWS-to-portable code migration challenges did AI-powered automation solve for teams adopting cloud-agnostic infrastructure?

The migration posed two significant challenges. First, trust — service teams needed assurance that MultiCloudJ would behave consistently across different cloud providers before they committed to the move. Second, the transition from AWS-specific code to a portable contract required weeks of manual refactoring for each service. Given Hyperforce’s scale, with hundreds of services, we couldn’t expect every team to spend that much time on manual migration.

To build trust, we focused on rigorous test coverage and deterministic cross-cloud behavior. This gave teams the confidence they needed in the SDK’s reliability.

To make the migration practical at scale, we introduced an AI-powered MCP server for the Substrate SDK. When paired with AI coding tools like Cursor and Claude, the MCP server guides in-place refactoring, mapping provider calls to portable APIs and fixing patterns much more quickly than manual efforts. What used to take weeks now takes just a few days, thanks to this guided workflow.

Service teams can transition from AWS-specific code to a single, substrate-agnostic contract without forking repositories. They can migrate incrementally and safely within the same repo, maintaining code quality through the guided refactoring process.

Claudia explores an emerging AI tool that boosts her team’s efficiency.

What measurement challenges emerged quantifying AI acceleration impact, and what productivity gains did you deliver?

The measurement challenge was twofold: quantifying productivity in building the SDK and in enabling service teams to adopt it. We needed concrete metrics to show whether AI tools actually shortened development timelines and whether the MCP server truly sped up migrations, rather than just shifting the effort elsewhere. Without quantifiable data, we couldn’t prove that a single portable interface was more efficient than multiple provider-specific code paths at scale.

To address this, we tracked productivity gains in three key areas:

- Development Velocity: Using AI tools like Claude and Cursor, we reduced the development effort from weeks to days. This allowed us to meet aggressive GCP onboarding timelines. The compression in development time was significant, as we had to catalog behavioral differences across three cloud providers while maintaining over 80 percent test coverage.

- Migration Velocity: AI-assisted adoption transformed the migration process, turning weeks into days and months into weeks for initial pull requests. Engineering teams that once spent weeks manually refactoring AWS SDK calls now complete migrations in just a few days, thanks to the MCP server’s guided workflow.

- Sustained Productivity Multiplication: A single portable interface, as opposed to multiple provider-specific code paths, means less code to write, review, test, and maintain across hundreds of services. Once services are portable, onboarding a new cloud provider becomes a one-time SDK effort in MultiCloudJ. This change benefits teams across the board, turning what used to be a per-service migration headache into a centralized infrastructure task.

Provider-specific runtime implementation burden without MultiCloudJ.

How services use a single portable interface from MultiCloudJ for runtime operations.
