In our “Engineering Energizers” Q&A series, we highlight the engineering minds driving innovation across Salesforce. Today, we feature Scott Yancey, EVP of Software Engineering, who led a significant cultural and technical transformation within his team. Under his guidance, the organization achieved impressive AI adoption rates, even while dealing with complex technical limitations and organizational resistance.

Discover how the team broke down cultural barriers to AI adoption, addressed technical hurdles in large-scale codebases, and extended AI capabilities beyond code generation to enrich the entire development process.

What is your team’s mission as it relates to AI adoption and accelerating platform engineering productivity?

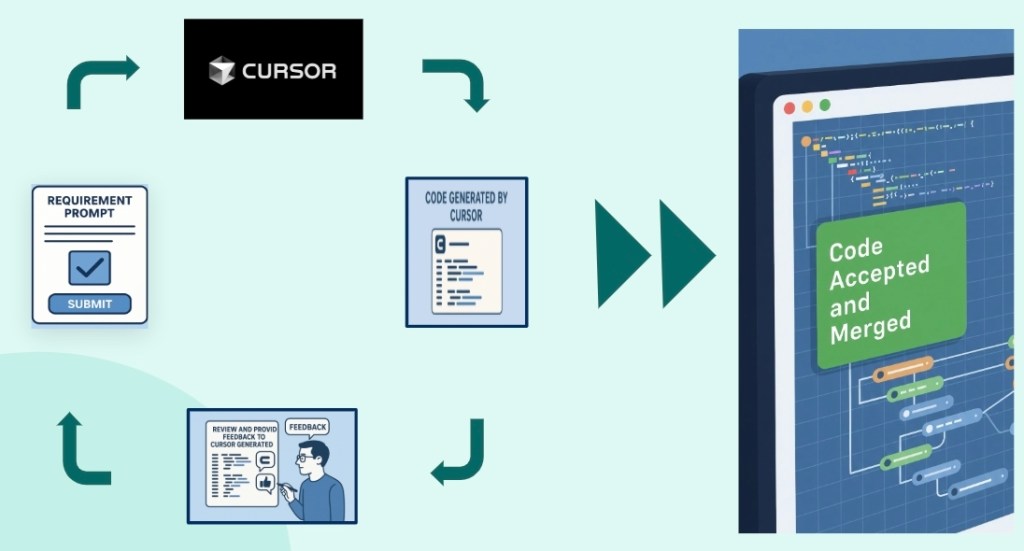

The team’s mission is to leverage AI to eliminate the inefficiencies, time-consuming redundancies, and laborious manual processes that often plague the end-to-end software development lifecycle. Our goal isn’t simply to increase developer productivity by 50% or achieve 100% adoption of Cursor-type tools; those are just proxies for our true objective. The real aim is to deliver more high-quality product to market safely and efficiently by replacing engineering toil with higher-order, more enjoyable work.

It’s easy to fall into the trap of thinking AI is only about generating lines of code in an IDE, but that’s just a small piece of the puzzle. AI can greatly streamline and reduce friction throughout the entire development process. While code generation is certainly a benefit, here are three highly specific yet diverse examples:

- The team deployed AI in log analysis workflows for production issue analysis. Engineers can now parse million-line production logs in minutes, a task that previously required hours of manual investigation. This not only saves time but also enhances the accuracy and reliability of the development process.

- The team implemented AI-powered UI scanners that analyze components against WCAG accessibility compliance rules. These scanners automatically flag issues such as contrast ratios, missing alt text, and keyboard navigation problems. Work that once took developers hours is now completed in just 10 minutes.

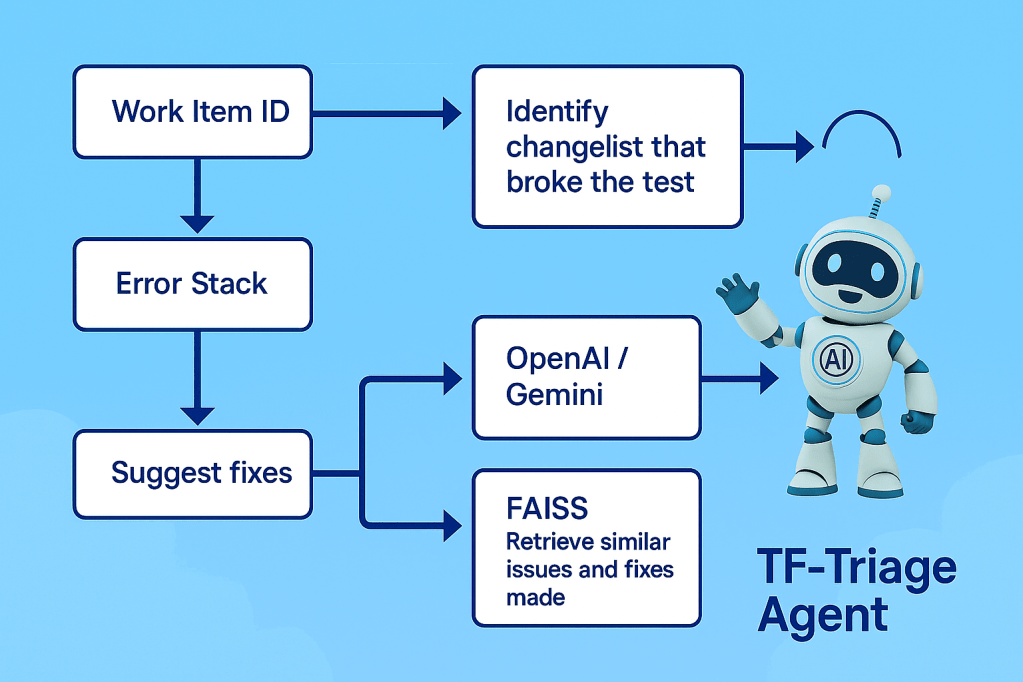

- The team created tools that efficiently triage and route production issues and automated test failures, saving hours of work by minimizing manual effort and reducing “hot potato” handoffs.
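Of the checks mentioned above, the contrast-ratio rule is fully specified by WCAG 2.x, so it can be shown concretely. The sketch below is not the team's scanner (the article does not describe its implementation). It only illustrates the deterministic rule such a scanner would need to evaluate for a foreground/background color pair. The function names and sample colors are illustrative assumptions.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color written as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1.0 (identical colors) up to 21.0 (black on white)."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(foreground: str, background: str) -> bool:
    """WCAG AA requires at least 4.5:1 for normal-size text."""
    return contrast_ratio(foreground, background) >= 4.5


if __name__ == "__main__":
    # Sample colors are arbitrary and chosen only to sit on either side of the threshold.
    for fg in ("#767676", "#777777"):
        print(fg, round(contrast_ratio(fg, "#ffffff"), 2), passes_aa_normal_text(fg, "#ffffff"))
```

A rule like this is cheap to run on every component. The AI-assisted part of a scanner would more plausibly sit around it, for example in locating the relevant color pairs in rendered UI or suggesting a fix.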

The team is dedicated to continuously evaluating emerging AI capabilities to identify new opportunities and use cases, as well as to refine and evolve these tools. Their goal is to eliminate development friction and accelerate product delivery workflows.

What was the biggest resistance challenge you faced from engineers who rejected AI adoption, and how did you overcome their technical objections?

I addressed engineer concerns by helping them see AI as a career enhancement opportunity. This isn’t just about learning how to use existing AI tools; it’s about rethinking how work can be done through an AI-based approach. I advocated that engineers who embrace these technologies and develop expertise with AI tools will become more valuable contributors, focus on higher-level problem-solving, and enjoy their work more by reducing low-value tasks. The key was communicating that AI expands their capabilities rather than replaces their expertise. Engineers will always remain the creative problem-solvers, deep analyzers, and optimizers, with AI serving as a powerful productivity multiplier.

To those with technical skepticism based on past “AI fails,” I emphasized the rapid evolution of AI technology. Improvements happen weekly, not annually. Engineers who had disappointing experiences months ago need to recognize that the underlying capabilities have matured significantly and continue to do so. AI will get some things wrong, but it will get a lot right, just like humans. Our team’s approach was to encourage a fresh evaluation of current tools and ongoing experimentation as the technology advances. This helps us identify where AI excels and where it falls short. Staying current with these emerging capabilities enhances every engineer’s professional development and ensures they are at the forefront of innovation.

What was the biggest technical challenge your team faced implementing AI tools?

The primary challenge we faced was Cursor’s inability to handle Salesforce’s massive monolithic codebase. A significant portion of my team works in the Core monolith, which consists of hundreds of thousands of files. Cursor simply couldn’t manage codebases of this scale, initially leaving many developers unable to use the tool effectively.

To overcome this, we developed module loading strategies and workspace configurations that restricted Cursor to isolated subsections of the Core monolith, so it loaded and had visibility into only those parts. By understanding Cursor’s implementation patterns, we designed workspace boundaries that aligned with the team ownership models established in the codebase architecture. Instead of loading the entire Core codebase, we configured Cursor to load only the specific modules or subfolders that individual teams actually work with.
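The article does not show the actual configuration, so the following is only a minimal sketch of one way to scope an editor to team-owned modules. It assumes that Cursor, as a VS Code-based editor, opens VS Code multi-root `.code-workspace` files. The repository root `core` and the module names are hypothetical.

```python
import json
from pathlib import Path


def build_workspace(repo_root: str, team_modules: list[str]) -> dict:
    """Return a multi-root workspace definition that lists only the given modules."""
    root = Path(repo_root)
    return {
        "folders": [
            {"name": module, "path": (root / module).as_posix()}
            for module in sorted(set(team_modules))  # de-duplicate, stable order
        ],
        "settings": {},
    }


def write_workspace(workspace: dict, destination: str) -> None:
    """Write the workspace definition as JSON, e.g. to 'billing.code-workspace'."""
    Path(destination).write_text(json.dumps(workspace, indent=2) + "\n", encoding="utf-8")


if __name__ == "__main__":
    # Hypothetical modules owned by a single team; not names from the real codebase.
    workspace = build_workspace("core", ["billing-api", "billing-ui", "shared-test-utils"])
    print(json.dumps(workspace, indent=2))
```

Generating the file from an ownership list, rather than writing it by hand, keeps the workspace boundary aligned with team ownership as modules change hands.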

Most developer commits happen within a small set of team-owned modules, so a scoped approach worked well for their daily workflows. We accepted that the 0.1% of work requiring large refactors across many modules would need different methods.

Initially, people assumed Cursor should work with the entire codebase at once, but it’s more practical to use Cursor for the majority of the code they work on daily. In other words, don’t dismiss Cursor (or any AI tool) because it can’t help with 20% of the work; adopt it because it can solve 80% of what you do.

What challenges did you face expanding AI adoption beyond code generation to automate non-coding workflows across the software development lifecycle?

The fundamental challenge was helping the organization understand that AI adoption extends far beyond code generation tools, which continue to get the most attention from the tech press and the executive class. Our initial leadership messaging focused loudly on adopting a specific code-generating tool like Cursor, which was needed to draw attention to AI tooling in a measurable way, and I used it as an opportunity to broaden the team’s perspective on AI’s overall potential impact.

The real opportunity lies in the fact that many developers spend only ~40% of their time actually writing code in large enterprise environments like Salesforce. The remaining ~60% of development time is dedicated to meetings, issue triaging, environment management, documentation writing, and many other non-coding activities. The team recognized that focusing solely on code generation tools meant missing out on the massive potential impact in the majority of developers’ daily workflows.
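One way to put numbers on this point is Amdahl's law, which bounds the overall gain when only part of a workload is accelerated. This framing is an addition for illustration, not something the team states. It takes the approximate 40/60 split above at face value and assumes non-coding time is unaffected. The 2x coding speedup is a hypothetical input.

```python
import math


def overall_speedup(fraction_accelerated: float, local_speedup: float) -> float:
    """Amdahl's law: end-to-end speedup when only part of the work gets faster."""
    if not 0.0 <= fraction_accelerated <= 1.0:
        raise ValueError("fraction_accelerated must be between 0 and 1")
    if local_speedup <= 0:
        raise ValueError("local_speedup must be positive")
    untouched = 1.0 - fraction_accelerated
    return 1.0 / (untouched + fraction_accelerated / local_speedup)


def speedup_ceiling(fraction_accelerated: float) -> float:
    """Upper bound on the overall speedup as the local speedup grows without limit."""
    if not 0.0 <= fraction_accelerated <= 1.0:
        raise ValueError("fraction_accelerated must be between 0 and 1")
    if fraction_accelerated == 1.0:
        return math.inf
    return 1.0 / (1.0 - fraction_accelerated)


if __name__ == "__main__":
    coding_share = 0.4  # approximate share of time spent writing code, from the text
    print(overall_speedup(coding_share, 2.0))  # hypothetical: coding becomes twice as fast
    print(speedup_ceiling(coding_share))       # limit if coding time dropped to zero
```

Under these assumptions, doubling coding speed yields a 1.25x overall gain, and even eliminating coding time entirely cannot exceed roughly 1.67x. Any larger gain has to come from the non-coding 60%.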

To address this, we fostered a culture of exploration and knowledge sharing around AI applications, with an emphasis on uses beyond code generation. The team built custom AI integrations for automated code review feedback, deployment pipeline optimization, and real-time accessibility compliance checking. They amplified success stories of engineers using AI for non-coding workflows, celebrated innovative implementations in public forums, and created environments where engineers felt encouraged to experiment with AI across their entire development experience. This approach helped shift the mindset from adopting a specific code-generation assistant to building a culture of integrating AI across all aspects of software development.

What challenges did you encounter proving to leadership that AI adoption was delivering measurable ROI beyond basic tool installation?

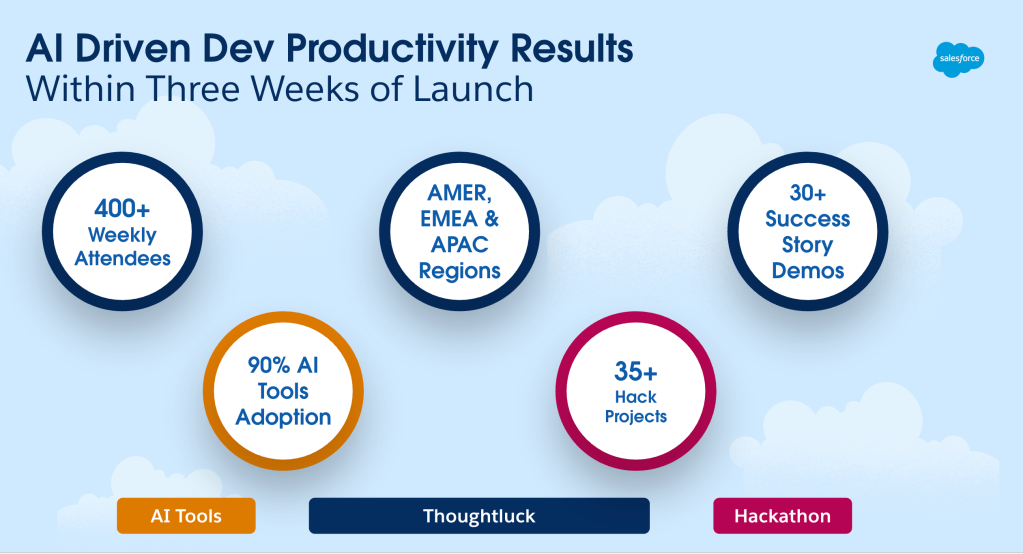

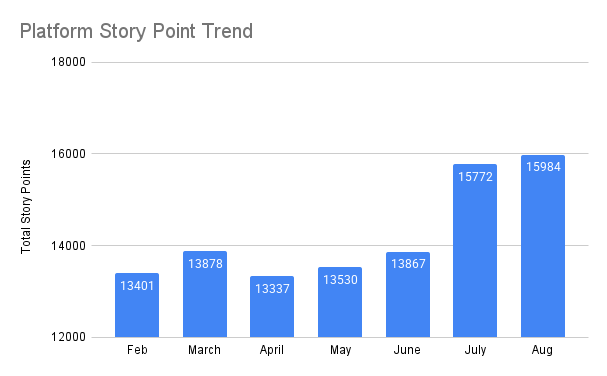

The team faced a significant challenge: the industry lacks reliable frameworks to measure AI’s impact on overall engineering productivity. There are no established metrics that can effectively gauge the relationship between broad AI adoption and improvements in developer efficiency, productivity, and business-impacting delivery. Despite this, the team, which includes over 1,300 engineers, achieved a rapid 20% increase in “Story Points” completed from April to the end of August.

Story Point estimation, derived from Agile methodology, is not perfect and can be subject to various flaws. However, at Salesforce, it is a widely adopted method for engineers to size their completed work. In the last six months, our Story Point processes and training remained unchanged, as did our engineering staffing levels. Therefore, I view this as the best available historical metric to track the overall product delivery to the market as our AI tool adoption began.

As broad-based AI adoption increased across the SDLC, team delivery output has surged by 19% in just 3 months.

Why do we believe AI tool adoption drove this surge? There were no other significant changes to processes or staffing during this period. With over 1,300 engineers involved, the sample size is substantial. What did change was the team’s mid-April focus on fostering continuous AI tool experimentation, knowledge sharing of successes and failures, and refining promising tools.

Additionally, we expanded our AI tool inventory from zero to over 50 tools and best practices in just two months, creating an environment where engineers are constantly exploring new applications and techniques for AI adoption. The team is confident that sustained experimentation, adoption, and refinement will continue to deliver real, quantifiable, and durable productivity gains, even if current metrics are not sufficient and need to evolve to fully capture the extent of this organizational transformation.

What scaling challenges did you encounter maintaining high AI adoption across your engineering organization, and what systems did you build to sustain engagement?

One of the biggest challenges the team faced was ensuring that AI tool adoption remained meaningful and not just a superficial point-in-time exercise. To tackle this, the team focused on building genuine engagement and recognition systems.

I actively engaged in technical discussions across multiple communication platforms, spotlighting innovative AI implementations. By celebrating individual engineers and sharing their achievements with senior leadership, I aimed to create a culture of recognition. When engineers saw their work being valued and recognized, not just by executives but by their peers as well, especially in large public forums and Slack channels, it naturally spurred motivation to keep experimenting.

This approach helped foster a sense of ownership and pride, encouraging the team to continue pushing the boundaries of AI tool adoption and driving continuous improvement.

The team maintained adoption rates of over 95% by treating AI exploration as a fundamental aspect of professional development, rather than an extra mandated task. This approach, which included a systematic recognition program, helped sustain high adoption levels, unlike the typical drop-off seen after initial exec-driven mandates in many organizations. By allowing engineers to dedicate work time to AI experimentation and by being flexible with delivery timelines, the team created a culture that supported long-term capability building. The strategy acknowledged that short-term investments in learning and tool development would eventually result in significant long-term productivity gains as these skills and tools matured within the organization.

Learn more

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.