By Tripti Sheth and Anshul Jain.

In our Engineering Energizers Q&A series, we shine a spotlight on the innovative engineers at Salesforce. Today, we feature Tripti Sheth, Senior Director of Software Engineering, who leads the Monitoring Cloud Notification Management team. Her team is responsible for migrating 4.3 million daily alert notifications to the Hyperforce infrastructure.

Discover how the team replaced outdated spreadsheets, which tracked 1,200 policy migrations and 250,000 alert notification configurations, with dynamic, AI-powered dashboards in just three days, work that would typically have taken six to eight weeks. The team also empowered backend engineers to develop advanced UI tools using Cursor, Windsurf, and Claude, and implemented automated validation frameworks that saved hundreds of hours across 1,000 services, compressing multi-release projects into a five-month delivery timeline.

What is your team’s mission in building AI-enhanced alert notification systems on Hyperforce?

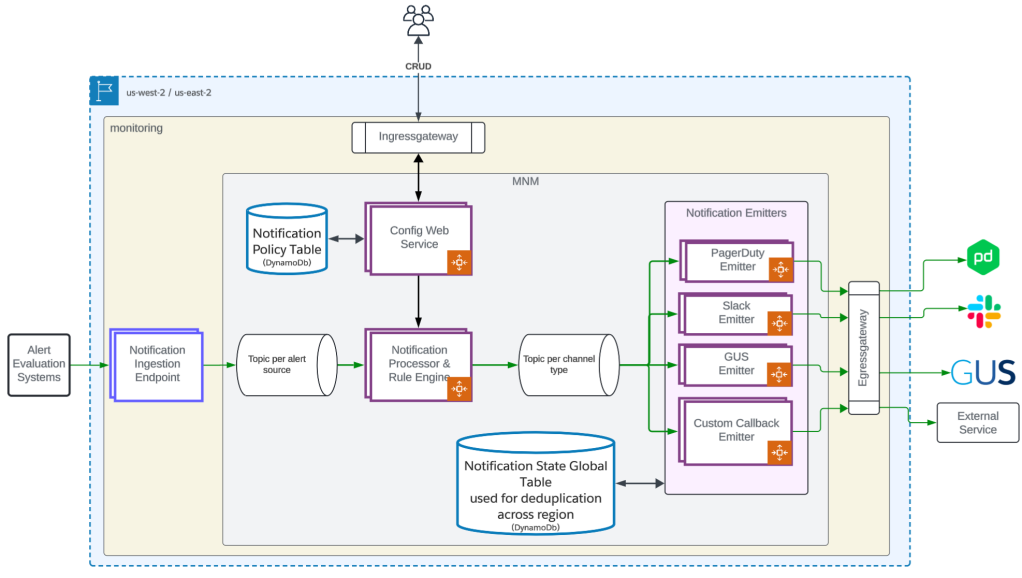

Our team engineers Monitoring Cloud Notification Management (MNM), a Hyperforce-native service designed for scalability, reliability, and high availability across two AWS regions. MNM provides unified notification processing and routing management, integrated with monitoring cloud telemetry stores that process metrics, traces, events, and alerts.

We focus on delivering a tier-1 system that processes 4.3 million notifications daily across two regions, ensuring that no alert notifications are dropped or delayed. MNM enables service owners to define a notification policy once for their team and reuse it across all their alerts. In the new platform, service owners can define rules for notification routing, grouping, suppression, and enrichment, with enhanced AI capabilities planned for the future.
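As a rough illustration of "define once, use across all alerts," the sketch below models a policy as a small data structure. It is not MNM's actual schema: the `Alert` and `NotificationPolicy` classes, their field names, and the destination names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    name: str
    service: str
    severity: str


@dataclass
class NotificationPolicy:
    """Hypothetical policy shape: one definition per team, applied to every alert."""
    team: str
    default_route: str
    routes: dict = field(default_factory=dict)      # severity -> destination
    group_by: tuple = ("service", "severity")       # alert fields that form the group key
    suppress_severities: frozenset = frozenset()    # severities that are never sent
    enrichment: dict = field(default_factory=dict)  # static fields added to each notification

    def apply(self, alert):
        """Return the notification to send, or None if the alert is suppressed."""
        if alert.severity in self.suppress_severities:
            return None
        return {
            "team": self.team,
            "alert": alert.name,
            "destination": self.routes.get(alert.severity, self.default_route),
            "group_key": tuple(getattr(alert, name) for name in self.group_by),
            **self.enrichment,
        }


policy = NotificationPolicy(
    team="payments",
    default_route="chat",
    routes={"critical": "pager"},
    suppress_severities=frozenset({"info"}),
    enrichment={"runbook": "payments-runbook"},
)
alerts = [
    Alert("HighLatency", "checkout", "critical"),
    Alert("DiskNoise", "checkout", "info"),
    Alert("ErrorRate", "checkout", "warning"),
]
notifications = [policy.apply(alert) for alert in alerts]
```

The same `policy` object handles all three alerts: the critical one is routed to the pager, the info one is suppressed, and the warning falls back to the default route.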

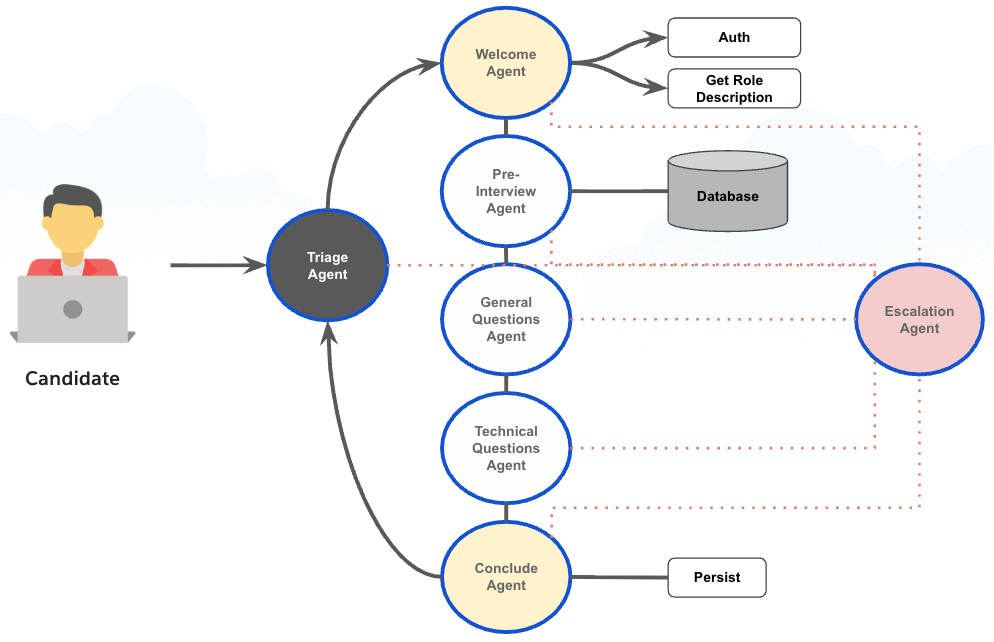

Monitoring Cloud notifications architecture.

To achieve this, our team has developed multiple microservices, including config-driven metadata and queue-based services. These microservices scale automatically based on Kafka topic lag and use an event-driven architecture, making the system highly resilient to downstream outages.
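To make lag-based scaling concrete, here is a minimal sketch of how a replica count could be derived from consumer lag. The function name, parameters, and thresholds are assumptions for illustration; the actual autoscaling rules used by MNM are not described here.

```python
import math


def desired_replicas(total_lag, lag_per_replica, partitions,
                     min_replicas=1, max_replicas=50):
    """Pick a consumer replica count from the current topic lag.

    total_lag       -- messages waiting across all partitions
    lag_per_replica -- backlog one replica is expected to absorb (assumed tuning value)
    partitions      -- partition count of the topic
    """
    if lag_per_replica <= 0:
        raise ValueError("lag_per_replica must be positive")
    needed = math.ceil(total_lag / lag_per_replica)
    # Within one consumer group, a partition is read by at most one consumer,
    # so replicas beyond the partition count would sit idle.
    upper = min(max_replicas, partitions)
    return max(min_replicas, min(needed, upper))


idle = desired_replicas(total_lag=0, lag_per_replica=1000, partitions=12)
busy = desired_replicas(total_lag=4500, lag_per_replica=1000, partitions=12)
flooded = desired_replicas(total_lag=100_000, lag_per_replica=1000, partitions=12)
```

Because the work sits in the topic until a consumer picks it up, a slow or unavailable downstream system shows up as growing lag rather than lost notifications, and the replica count follows the backlog.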

The notification processing services are deployed in both the us-west-2 and us-east-2 regions in an active-active configuration. Each region fully processes each notification. At the end of processing, right before sending the message to the external system, we perform a strongly consistent check against a DynamoDB global table to ensure that only one region sends the final notification.
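The "only one region sends" step can be sketched as a claim-then-send pattern. The example below uses an in-memory `ClaimStore` as a stand-in for the shared table so that it runs anywhere; the class, the `process` function, and the notification ID are hypothetical. In a real deployment the claim might be a conditional write (for example, a DynamoDB condition expression such as `attribute_not_exists`), but that mapping is an assumption rather than a description of MNM's implementation.

```python
import threading


class ClaimStore:
    """In-memory stand-in for a shared table that supports conditional writes."""

    def __init__(self):
        self._claims = {}
        self._lock = threading.Lock()

    def claim(self, notification_id, region):
        """Record the claim only if none exists yet; return True for the winner."""
        with self._lock:
            if notification_id in self._claims:
                return False
            self._claims[notification_id] = region
            return True


def process(store, notification_id, region, sent):
    """Each region does the full processing, then tries to claim the send."""
    # ... routing, grouping, suppression, and enrichment would happen here ...
    if store.claim(notification_id, region):
        sent.append((notification_id, region))  # stands in for the external send
        return True
    return False  # another region already claimed this notification


store = ClaimStore()
sent = []
results = [process(store, "n-1", region, sent)
           for region in ("us-west-2", "us-east-2")]
```

Both regions do the same work, but only the first successful claim leads to a send, so a regional outage does not require failover logic in the processing path.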

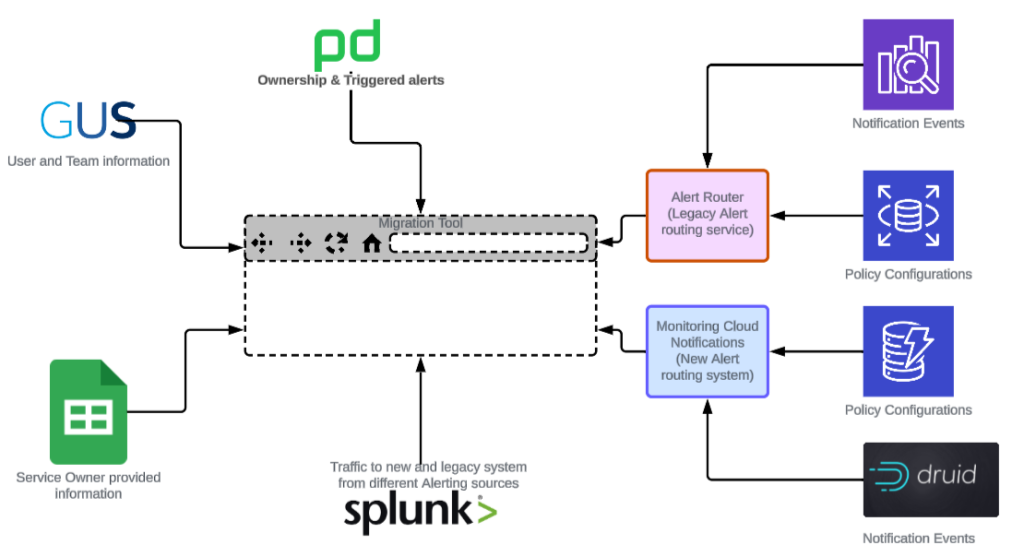

One of our key missions is to replace the legacy Alert Routing Service. This involves migrating 1,200 notification policies that span 400 teams and 250,000 alert configurations. Given the constraints of the safe-change compliant Hyperforce architecture, where engineers do not have direct database access to gauge the volume the migration has to support, we had to develop innovative migration tooling.

Migration challenges and questions from all stakeholders.

What challenges emerged managing 1,200 migrations before AI-powered automation tools?

The migration involved three main components:

- Complex Policy Topology: Thousands of routing rules developed over the years, with complex dependencies between policies.

- Alerting Notification Configurations: 250,000 alerting configurations needed to be migrated.

- Alert Evaluation Systems: More than 15 alert evaluation systems sent alerts to these policies, spanning first-party, Heroku, and Hyperforce stacks.

As we started migration activities, we quickly realized that the legacy system lacked clear ownership for these thousands of policies and alerts. There was also no clear understanding of which teams owned the alert evaluation systems that were sending thousands of alerts per minute to these policies. Further, service owners had created a complex web of dependencies between these policies over the years, which made it hard to plan a clean migration. The legacy and new systems also differed in their capabilities and in their underlying infrastructure and architecture, which essentially meant rewriting these policies rather than performing a simple database migration.

Our engineers wrote scripts on the fly to pull and process the data, which was then inserted into a spreadsheet and analyzed using complex formulas. As new dimensions were identified, we had to keep updating the sheets and the formulas, and spend a few hours every week to keep the data fresh. Additionally, presenting multi-dimensional data on the spreadsheet quickly became challenging.

To address these issues, we used AI assistants such as Cursor, Windsurf, and Claude to build tooling that connected:

- GUS for team ownership

- PagerDuty for on-call rotations

- Notification events and logs for system behavior, including alerting sources and traffic migration

- Legacy Alert Router for policy configurations

- MNM for migrated policies

This eliminated the need for manual data pulls and daily data joins, providing live and accurate data.

What challenges did your team face with planning this migration?

Our team excels in distributed systems engineering, where we focus on building scalable backend infrastructure rather than user interfaces. However, we realized the need for a visual tool to help all stakeholders understand the migration process and speak the same language. Because notifications must flow without disruption, we also needed to verify the reliability of migrated resources by replaying old notifications and checking for consistent behavior.

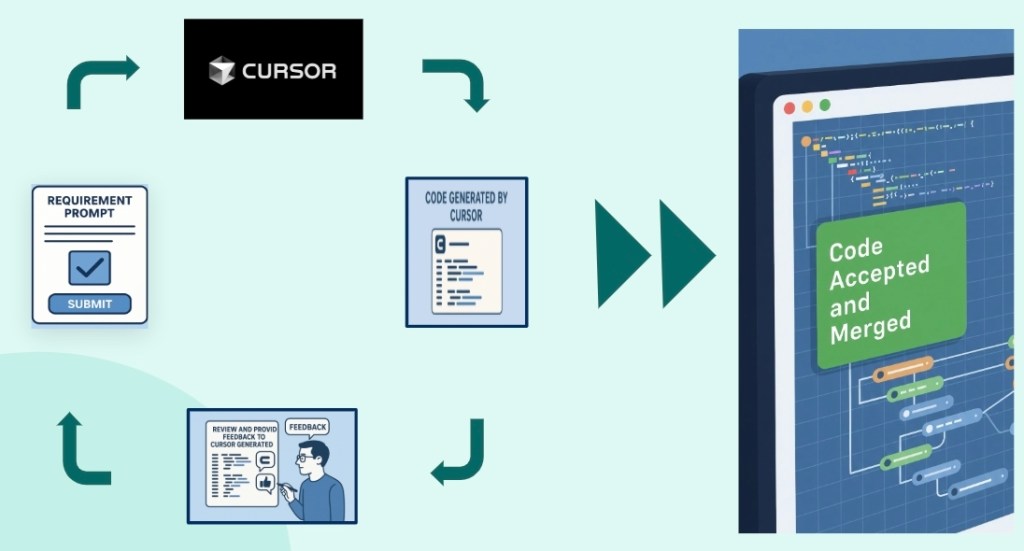

Over a single weekend, we harnessed Cursor, Windsurf, and Claude to build that tool. What began as a quick hack soon transformed into a daily-use tool. These assistants processed API signatures and sample responses, incorporating our business domain knowledge. In our environment, where engineers don’t have direct database access, API-first principles were essential and allowed the UI to pull the freshest data on the fly.

The various data sources used to build a consolidated view of the migration scope.

The tool automated several intricate workflows:

- It pulled all policies that needed migration and extracted the relevant user lists.

- It queried GUS to determine the teams associated with those users.

- It looked up Engineering Managers for users who were no longer active.

- For users belonging to multiple teams, it identified the most appropriate team using PagerDuty details and Slack channels.

- Finally, it merged the policy data with migration status and team ownership.
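The ownership-resolution steps above can be sketched roughly as follows. This is an illustration only: the lookups are plain dictionaries standing in for GUS and PagerDuty API responses, the sample names are invented, and the vote-counting rule for picking a team is an assumption rather than the tool's actual logic.

```python
def resolve_owner(policy_users, user_teams, active_users, manager_of, on_call_team):
    """Return the team most likely to own a policy, or None if nothing resolves.

    user_teams   -- person -> list of teams (stand-in for a GUS lookup)
    active_users -- set of people who are still active
    manager_of   -- inactive user -> engineering manager
    on_call_team -- person -> team they are on call for (stand-in for PagerDuty)
    """
    votes = {}
    for user in policy_users:
        # Inactive users are replaced by their engineering manager.
        person = user if user in active_users else manager_of.get(user)
        if person is None:
            continue
        teams = user_teams.get(person, [])
        if len(teams) > 1:
            # Multi-team users: prefer the team they are on call for.
            preferred = on_call_team.get(person)
            teams = [preferred] if preferred in teams else teams[:1]
        for team in teams:
            votes[team] = votes.get(team, 0) + 1
    if not votes:
        return None
    # Highest vote count wins; ties break alphabetically for determinism.
    return min(votes, key=lambda team: (-votes[team], team))


user_teams = {"ana": ["search"], "bo": ["search", "billing"], "mgr": ["billing"]}
active_users = {"ana", "bo", "mgr"}
manager_of = {"cy": "mgr"}           # "cy" has left; "mgr" was their manager
on_call_team = {"bo": "search"}
policies = {"p1": ["ana", "bo"], "p2": ["cy"], "p3": ["ghost"]}

owners = {
    policy_id: resolve_owner(users, user_teams, active_users, manager_of, on_call_team)
    for policy_id, users in policies.items()
}
```

Policies that resolve to `None`, like `p3` here, are the ones that would still need a human to track down an owner.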

Traditionally, writing the necessary scripts would have taken multiple days, plus an additional hour each day to keep the data up to date. Our engineering team, however, brought the migration tracking UI to life in just three days, work that would typically have required six weeks of development.

What code quality challenges emerged using AI coding tools like Cursor, Windsurf, and Claude together?

What began as a simple hack quickly turned into a daily-use tool. Initially, we started with Cursor, using straightforward prompts without any rules or structured knowledge. This approach led to unmanageable code, where each simple task added 1,000 lines with no modularization, reuse, or abstraction. Bugs that should have needed one-line fixes instead required changes across hundreds of lines.

We soon realized this method wasn’t sustainable. As engineers committed to best software design practices, we needed code that was modular, testable, and repeatable, free from duplication. We avoided complex architectures, focusing instead on simple, clean logic that both human developers and AI agents could understand.

This gave us the opportunity to work with different AI coding assistants and test their capabilities:

- Cursor: We began with simple prompts, without rules or structure, which resulted in unmanageable code.

- Windsurf: We used rules and memories, allowing it to learn from previous context and refactor code into more manageable structures.

- Claude: We started Claude with a well-documented CLAUDE.md file, which served as a permanent, shareable memory that we could upload to GitHub, ensuring consistency across team members.

We helped the AI understand the business context and domain knowledge of each data source. This evolution not only improved code quality but also shifted our mindset. Now, whenever we need a quick solution, we have the confidence that AI tools can assist us without us defaulting to a “no” response, which was our usual stance in the past.

What validation challenges arose before AI automated testing across 1,200 notification policies?

Given the criticality of alert notifications, we needed robust validation tools to compare the previous policy state with new configurations. An incorrect migration could result in service owners not receiving notifications about service disruptions, leading to customer issues and a loss of trust. The old and new systems shared some, but not all, capabilities, so verifying consistent behavior was essential.

The validation tool we developed provided an exceptionally clean framework with automated workflows:

- Retrieved Policies: It fetched policies from both the legacy and new systems.

- Retrieved Previous Alerts: It pulled all previous alerts from the legacy system.

- Replayed Alerts in Dry-Run Mode: It simulated alerts across both systems to observe their behavior.

- Documented Gaps: It identified and documented discrepancies, generating reports that showed differences in routing and suppression rules.

- Daily Validation: It ran validation checks for all 1,200 policies every day, so that any policy changes and new alerts were replayed to identify gaps.
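A minimal sketch of the replay-and-compare idea in the workflow above: each historical alert is run through both systems in dry-run mode, and any difference in the routing or suppression decision is recorded as a gap. The two `*_decide` functions are hypothetical stand-ins for the legacy and new rule engines, and the mismatch between them is deliberate so the example produces a report.

```python
def replay(alerts, legacy_decide, new_decide):
    """Dry-run each alert through both deciders and list the differences."""
    gaps = []
    for alert in alerts:
        expected = legacy_decide(alert)
        actual = new_decide(alert)
        if expected != actual:
            gaps.append({"alert": alert["name"], "legacy": expected, "new": actual})
    return gaps


def legacy_decide(alert):
    """Stand-in for the legacy router: returns (action, destination)."""
    if alert["severity"] == "info":
        return ("suppressed", None)
    return ("routed", "pager" if alert["severity"] == "critical" else "email")


def new_decide(alert):
    """Stand-in for the migrated policy, with a deliberate routing gap for warnings."""
    if alert["severity"] == "info":
        return ("suppressed", None)
    return ("routed", "pager" if alert["severity"] == "critical" else "chat")


history = [
    {"name": "HighLatency", "severity": "critical"},
    {"name": "DiskNoise", "severity": "info"},
    {"name": "ErrorRate", "severity": "warning"},
]
gaps = replay(history, legacy_decide, new_decide)
```

Nothing is sent during the replay; only the decisions are compared. An empty `gaps` list for a policy is the signal that it behaves the same in both systems for the alerts seen so far.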

By the time we were ready to build this tooling, we had already established a pattern and framework that allowed an AI coding assistant to take over the task and integrate it into the migration tool, which handled everything except root-cause analysis.

This transformation was significant. Traditionally, service owners would wait for new alerts, check various dashboards, and then eventually migrate — a process that could take days. With our new tool, they could switch in minutes after a single validation check.

The AI-assisted tool proactively identified gaps in the new service’s rule engine. While the tool provided visibility, human involvement remained crucial due to the critical nature of the task. Human judgment was essential for deciding when to reach out to service owners, which bugs to fix, and what should be deprecated.

The tool saved 600 to 800 validation hours across 1,200 services, reducing validation time from days to minutes. This turned an effort initially expected to take three to four quarters into a two-quarter project, with automated migrations targeted by December.
