In our “Engineering Energizers” Q&A series, we highlight the engineering minds driving innovation across Salesforce. Today, we feature Tanya Shukla, Senior Product Manager on the Hyperforce Secured Networking team. Her team dramatically improved cost-to-serve economics of Salesforce’s Hyperforce platform by redesigning key components to better leverage public cloud capabilities.

Discover how they redesigned a critical network path to support the migration of more than 90% of Salesforce traffic to Hyperforce, achieving significant cost savings while keeping service reliability seamless.

What is your team’s mission as it relates to cost-to-serve optimization for Hyperforce?

We optimize network infrastructure while ensuring the availability, scalability, and security that customers expect from Salesforce. Our primary focus is the egress gateway, a mission-critical service that enables all outbound customer connections across Salesforce’s Hyperforce platform.

The egress gateway is a vital component, sitting in the critical data path for every customer interaction. Without it, customers cannot receive responses from any Salesforce service, which makes cost optimization efforts particularly challenging. The team must strike a delicate balance between aggressively reducing expenses and maintaining a seamless customer experience. Any disruption could impact the entire platform’s ability to serve customer data, emphasizing the importance of ensuring reliability and performance.

Outbound traffic routing in Hyperforce (prior to optimization)

What AWS infrastructure challenges were creating Hyperforce bottlenecks, and how did your team resolve them?

The primary challenge emerged with migrating our first-party infrastructure design patterns to the public cloud. The economic model of the public cloud, which operates on a pay-as-you-go basis, differs significantly from our first-party model. Given the massive scale of Salesforce’s data, the traffic costs skyrocketed.

The egress gateway, designed as a forward proxy for internet-bound traffic, became a bottleneck during the Hyperforce migration. All internal traffic within Hyperforce defaulted to the egress gateway for routing, which drove up costs sharply and raised concerns about Hyperforce scalability and availability.

Initially, the team had limited visibility into traffic patterns, so we developed advanced analytics tools to gain better insight. The turning point came when our data analysis revealed that less than 5% of the traffic was legitimate external customer data, while 95% originated from internal access to other Salesforce or AWS services and should not have been routed through the expensive, guarded controls of the egress gateway.
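As a rough illustration of the kind of analysis described above, the sketch below classifies flow records by destination and computes each category's share of bytes. The record format, the suffix list, the host names, and the sample byte counts are all assumptions made for this example (the numbers are chosen only to mirror the 5%/95% split); none of it reflects Salesforce's actual tooling.

```python
from collections import defaultdict

# Hypothetical destination suffixes, used only for this illustration.
INTERNAL_SUFFIXES = (".amazonaws.com", ".salesforce.com", ".force.com")


def classify(destination: str) -> str:
    """Label a destination host as 'internal' or 'external'."""
    host = destination.lower().rstrip(".")
    return "internal" if host.endswith(INTERNAL_SUFFIXES) else "external"


def traffic_share(flows):
    """Return each category's share of total bytes as a fraction.

    `flows` is an iterable of (destination_host, bytes_sent) pairs.
    """
    totals = defaultdict(int)
    for destination, bytes_sent in flows:
        totals[classify(destination)] += bytes_sent
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {category: count / grand_total for category, count in totals.items()}


# Made-up sample records: (destination host, bytes sent).
sample_flows = [
    ("s3.us-east-1.amazonaws.com", 700),
    ("api.internal.salesforce.com", 250),
    ("partner.example.com", 50),
]
shares = traffic_share(sample_flows)
```

Measuring by bytes rather than by connection count is a design choice here, on the assumption that data transfer charges scale with volume.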

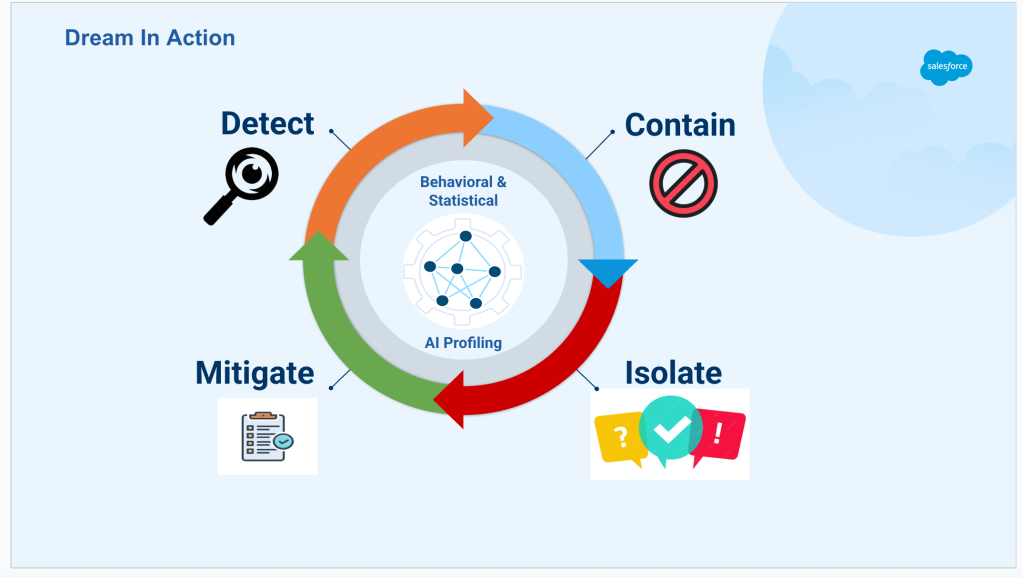

Once we had that visibility, we took a systematic, data-driven approach: we categorized traffic by destination endpoint and defined the optimal network path for each category:

- Same region traffic to AWS services – routed through AWS gateway endpoints (S3 traffic) or Private Linker for other AWS services

- Same region traffic to Salesforce services – routed through Service Mesh or Private Linker service

- Cross-region traffic to AWS services – routed through Private Linker service [cross-region support in progress]

- Cross-region traffic to Salesforce services – routed through Private Linker service
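The four categories above can be read as a small decision table. The following is a minimal sketch of that table, assuming a hypothetical `select_route` function and made-up path labels; it is not the team's implementation. Where the list names two possible paths, the sketch returns both candidates, because the source does not say how one is chosen over the other.

```python
from enum import Enum


class Owner(Enum):
    """Who operates the destination service (hypothetical labels)."""
    AWS = "aws"
    SALESFORCE = "salesforce"
    EXTERNAL = "external"


def select_route(owner: Owner, same_region: bool, is_s3: bool = False) -> tuple:
    """Return the candidate network paths for one traffic category."""
    if owner is Owner.EXTERNAL:
        # Internet-bound customer traffic stays on the egress gateway.
        return ("egress-gateway",)
    if owner is Owner.AWS:
        if same_region and is_s3:
            # Same-region S3 traffic uses an AWS gateway endpoint.
            return ("aws-gateway-endpoint",)
        # Other same-region AWS services, and cross-region AWS traffic
        # (support noted as in progress in the list above).
        return ("private-linker",)
    # Salesforce services.
    if same_region:
        return ("service-mesh", "private-linker")
    return ("private-linker",)
```

For example, `select_route(Owner.AWS, same_region=True, is_s3=True)` returns `("aws-gateway-endpoint",)`, while `select_route(Owner.SALESFORCE, same_region=False)` returns `("private-linker",)`.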

The Private Linker service was designed to manage AWS Private Links at scale and move internal traffic off the egress gateway path. Another AWS infrastructure limitation was the lack of support for cross-region data transfer over Private Links, so the team worked with AWS to enable it.

Outbound network traffic routing in Hyperforce (post optimization)

These optimized paths not only reduced costs but also improved latency, security (bypassing the internet), and availability for the Hyperforce platform while simplifying the network path.

What measurable financial improvements and performance gains did the optimization program achieve?

The team’s cost-to-serve optimization delivered impressive financial and operational results. We achieved cumulative savings of $20 million (recurring) over the last two years through architectural improvements and optimized traffic routing across the Hyperforce platform. From a traffic efficiency standpoint, the team increased the share of legitimate external traffic on the egress gateway from less than 5% to 40%, roughly an 8x improvement in resource utilization.

Internet-bound traffic grew while the unit cost of the egress gateway decreased.

This optimization also ensures that the egress gateway service serves its primary purpose of monitoring and securing external customer traffic while minimizing unnecessary hops, which reduces latency and expense for internal communications. It has also helped us scale efficiently, as more than 90% of Salesforce traffic migrated from first-party infrastructure to Hyperforce over the past two years.

These enhancements continue to drive operational efficiency through reduced infrastructure overhead and better resource allocation. The financial benefits recur annually, because traffic keeps following the optimized paths even as volume grows on the new routing architecture.

What operational constraints limited infrastructure optimization for a platform-critical service—and how did the team maintain reliability during architectural changes?

Running a mission-critical service posed significant optimization challenges. Any disruption to the egress gateway could halt customer connections to Salesforce services, making aggressive cost reductions too risky. The team had to maintain 99.99% uptime while revamping the traffic routing architecture.

To ensure reliability during the migration, we implemented a rigorous validation process. Each new routing path was thoroughly evaluated end-to-end before being considered for traffic migration. The team worked closely with service owners during the migration, rolling out changes gradually through our established environments — development, test, performance, staging, and production — validating use cases at each stage.
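The gradual rollout described above can be sketched as a simple promotion loop. This is an illustrative assumption about the shape of such a process, not the team's tooling: the `roll_out` function and the `validate` callback are hypothetical names, and only the environment order comes from the text.

```python
ENVIRONMENTS = ["development", "test", "performance", "staging", "production"]


def roll_out(validate, environments=ENVIRONMENTS):
    """Promote a routing change one environment at a time.

    `validate` is a caller-supplied function that takes an environment name
    and returns True when the new routing path passes its checks there.
    Returns the list of environments the change reached before stopping.
    """
    reached = []
    for env in environments:
        if not validate(env):
            # Stop promotion; later environments keep the old path.
            break
        reached.append(env)
    return reached
```

For instance, `roll_out(lambda env: env != "staging")` returns `["development", "test", "performance"]`, so a change that fails validation in staging never reaches production.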

Continuous end-to-end monitoring helped track latency and performance, ensuring a seamless migration. Throughout the process, we maintained dedicated Slack channels and office hours to address customer feedback, which helped keep customer trust strong and the system stable.

Learn more

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.