By Roni Ben-Oz and Menash Yamin.

In our Engineering Energizers Q&A series, we shine a spotlight on engineering leaders tackling complex technical challenges at Salesforce. Today, we feature Roni Ben-Oz, Senior Manager of Software Engineering, who heads the development of AI-powered relationship generation in Tableau and Data Cloud. Her team is revolutionizing the way users work with structured data by automating the identification of relationships, eliminating the need for manual configuration.

Discover how Roni’s team ensured that AI-generated relationships are accurate and aligned with real-world data, optimized real-time performance, and scaled their AI-driven modeling to handle massive datasets, pushing the boundaries of AI-powered data modeling in Tableau and Data Cloud.

What is your team’s mission?

The team automates and improves data modeling within the semantic layer in Tableau Next and Data Cloud, making it easier for users to create structured relationships between tables while building semantic models.

Relationship generation was developed to eliminate the complexity of data preparation, allowing users to focus on analysis rather than the manual definition of joins, foreign keys, or relationships. By embedding AI-powered automation directly into Tableau’s and Data Cloud’s semantic modeling layer, this feature helps to ensure ensures that relationship discovery aligns with complex data structures while maintaining accuracy and efficiency at scale.

Relationship generation operates at the foundational modeling level. This helps to ensure ensures that AI-powered queries and insights are built on a structured, AI-optimization schema, improving the accuracy and performance of analytical workloads.

The team collaborated with AI researchers, data platform teams, and product engineers to refine relationship discovery, optimize AI-generated suggestions, and seamlessly integrate the feature across Salesforce environments.

What were the biggest technical challenges in developing AI-powered relationship generation?

One of the biggest technical challenges was ensuring that AI-generated relationships accurately reflect real-world data. Unlike deterministic SQL joins, AI-based relationship inference requires a deep contextual understanding of schema metadata, column similarities, and user intent. The system needed to infer and validate relationships, even when explicit foreign keys or referential integrity constraints were missing.

Additionally, multiple valid relationships could exist between tables and the AI needed to determine the most contextually relevant ones while avoiding false positives. To address this, a multi-stage validation pipeline was developed to cross-reference column types, schema definitions, and query patterns. This pipeline filters out weak or irrelevant relationships, preserving the most likely useful ones.

The system also had to function seamlessly across Salesforce’s varied environments, including Tableau and Data Cloud. This required a modular architecture to standardize AI-driven relationship discovery across different database engines, metadata structures, and governance policies, helping to ensure adaptability and consistency across the Salesforce ecosystem.

What were the biggest technical hurdles in optimizing the latency of AI-powered relationship generation?

One of the biggest issues was addressing inefficiencies in large language model (LLM) processing and network delays associated with retrieving metadata from large datasets. Since relationship discovery must occur in real time, reducing response time was a critical priority.

To tackle LLM efficiency, the focus was on optimizing the input data. Initially, full schema definitions introduced unnecessary computational overhead. By restructuring LLM prompts to include only essential metadata, token consumption was significantly reduced, which in turn decreased inference time. A multi-level caching strategy was implemented to further enhance performance.

Additionally, a second-level cache dynamically adjusted the token output length, helping to ensure the AI returned only the most critical data while discarding verbose descriptions.

Schema ingestion was also redesigned to streamline preprocessing. Instead of sending full table schemas, the system now performs selective extraction of relevant fields. This approach minimizes the amount of data that needs to be processed. To handle complex schemas more efficiently, parallel processing was enabled, allowing multiple AI queries to execute simultaneously. This further reduced overall latency, helping to ensure a smooth and responsive user experience.

How was the cost-to-serve reduced while maintaining high accuracy?

Cost optimization efforts focus on minimizing LLM inference costs while preserving service quality. Every query incurs a cost based on input and output token usage, making efficiency a key priority. Here’s how cost-to-serve reduction has been achieved:

- Output Token Reduction: Redundant metadata and unnecessary elements in AI-generated responses have been eliminated. Additionally, the number of generated relationships is restricted. To further optimize efficiency, we have carefully selected an LLM model that strikes the right balance between performance, cost, and processing time. These optimizations ensure that only essential relationship details are retained, reducing the number of output tokens and lowering costs.

- Pre-Processing Optimization: The pre-processing phase has been optimized to handle common relationship cases without invoking the AI model. This optimization is based on a rule-based approach and heuristics, significantly reducing the volume of AI inferences and leading to substantial cost savings.

- Dynamic Rate Limiting: A dynamic rate-limiting system has been implemented, adjusting token allocation based on query complexity and user behavior. This prevents unnecessary AI calls, helping to ensure efficient resource usage.

- Caching for High-Confidence Relationships: Frequently used relationship mappings are stored in a cache. This eliminates the need for redundant AI queries, reducing costs and improving response times.

- Query Deduplication: Repeated analysis of the same schema is prevented, which can trigger unnecessary AI inference costs. By identifying and avoiding duplicate queries, each analysis is both efficient and cost-effective.

How were scalability challenges addressed in large-scale data processing and user concurrency?

Scalability in relationship generation is a complex challenge, especially with large datasets and high user concurrency. At the heart of our approach lies the semantic layer, which narrows the problem space by focusing on a subset of tables.

The auto-join feature within the semantic layer automates join definitions and eliminates the need for manual join type specification. This streamlines relationship generation, enhancing both efficiency and accuracy.

Another key challenge is the exponential increase in possible relationships with each additional table, making a brute-force approach impractical. To address this, we introduced an intelligent schema-cleaning mechanism that optimizes the dataset while maintaining high precision and recall, helping to ensure scalability without compromising accuracy.

We further optimized interactions with LLMs to minimize processing overhead. Instead of making multiple LLM calls, we rely on a single call, with a few parallel calls strategically used to power our smart cleaning mechanism. This approach strikes a balance between speed, accuracy, and cost-effectiveness, allowing us to scale efficiently while maintaining high performance.

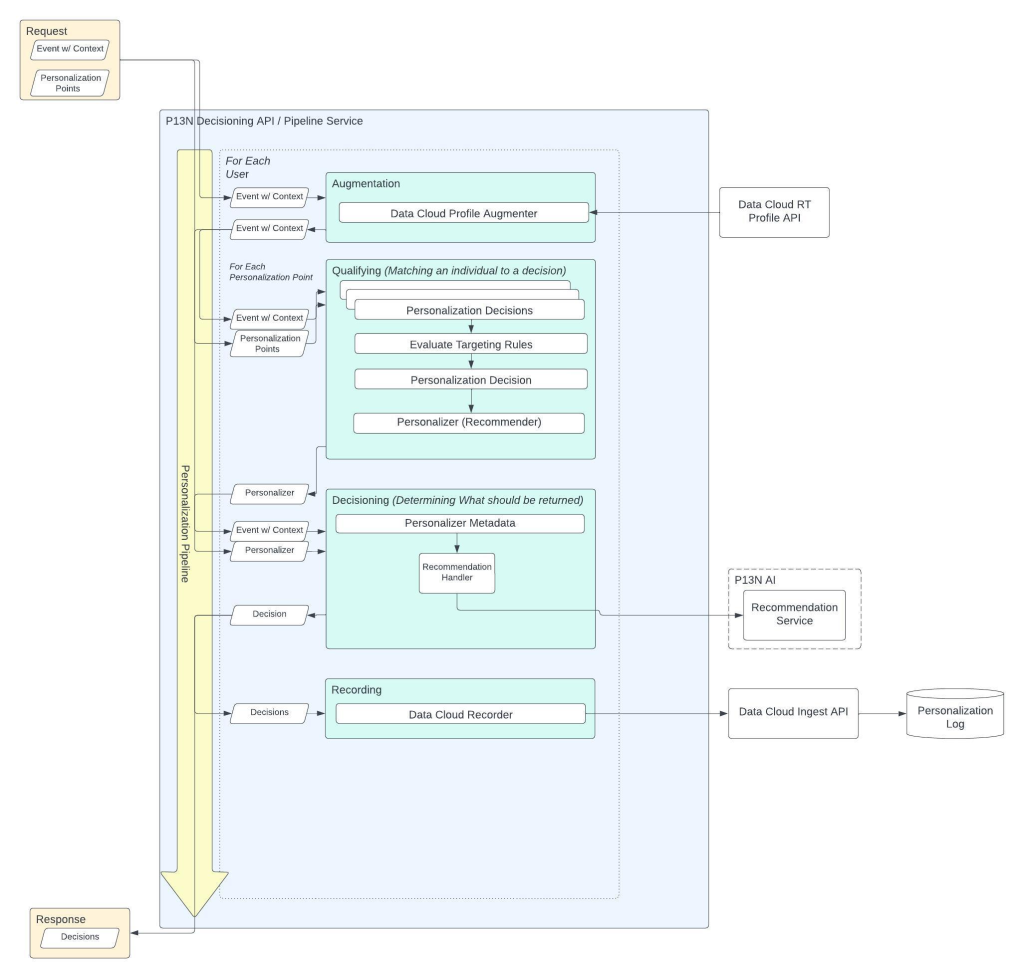

This diagram illustrates the high-level process of relationship generation, beginning with Salesforce Core, flowing through the Semantic AI Service and our Trusted AI Platform. It also highlights key integration points within our testing framework.

How is regression testing and AI accuracy validation handled?

To ensure the accuracy of AI relationship generation across updates, a robust validation pipeline is in place to detect regressions. Unlike traditional software, AI systems introduce stochastic variability, which complicates consistency validation. A dual-layer testing framework has been implemented:

- Automated Regression Testing: The first layer consists of automated regression testing within CI/CD pipelines, helping to ensure new deployments maintain validated relationship mappings. A baseline accuracy threshold is enforced, requiring a minimum of 80% regression test coverage before an update can be released. This prevents unexpected variations in AI-generated relationships.

- AI Benchmarking and Precision/Recall Tracking: The second layer involves AI benchmarking and precision/recall tracking. Each LLM update undergoes a 40-iteration benchmark test to measure precision, recall, and false positive rates. Given the non-deterministic nature of LLMs, accuracy monitoring extends beyond unit tests into statistical trend analysis. Production logs are analyzed to identify relationships frequently overridden or corrected by users, providing real-world accuracy feedback and enhancing future model iterations.

What security and compliance measures ensure trust in AI-driven relationship generation?

Ensuring trust in AI-driven relationship generation involves rigorous security and compliance measures. The system is built with a multi-layered security framework to protect enterprise datasets and adhere to data governance policies.

A significant challenge is preventing data leakage when schema information is sent to the AI model. To address this, a data masking layer was implemented. This layer redacts sensitive attributes, such as personally identifiable information (PII) and proprietary business logic, while maintaining the structural integrity of the data. This helps to ensure the AI model receives only the essential information needed for accurate relationship generation.

Additionally, role-based access control (RBAC) and object-level security (OLS) are integrated to filter relationship generation suggestions based on user permissions. Access control policies are enforced at the database level, restricting queries based on schema visibility and authentication tokens. An AI response filtering system is also in place to validate generated relationships against known metadata constraints.

Learn more

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.