By Yaheli Salina, Karthik Prabhu, and Omri Alon.

In our Engineering Energizers Q&A series, we highlight the engineering minds driving innovation across Salesforce. Today, we spotlight Software Engineer Yaheli Salina. She and her Agentforce Speech Foundations team developed Speech Invocable Action, a new AI tool that packages speech capabilities as standardized, repeatable actions available throughout the Salesforce ecosystem, including secure, native speech automation that stays within the platform trust boundary.

Explore how the team integrated native speech automation by developing speech-to-text as a primary action under rigid multi-tenant constraints, engineering protective barriers to stop automation errors from impacting Flows and Agentforce actions, and leveraging AI tools to speed up architectural exploration while maintaining high security standards.

What is your team’s mission as it relates to building native speech automation on the Salesforce platform?

The team simplifies speech capabilities by creating native building blocks within the Salesforce platform. Previously, speech-to-text required routing audio to third-party services, which forced users to manage credentials and accept security tradeoffs. That model created friction for enterprise environments that prioritize data residency and trust boundaries. By contrast, the current approach keeps audio data within the Salesforce trust boundary. Processing occurs through platform services, which preserves privacy while enabling hands-free automation.

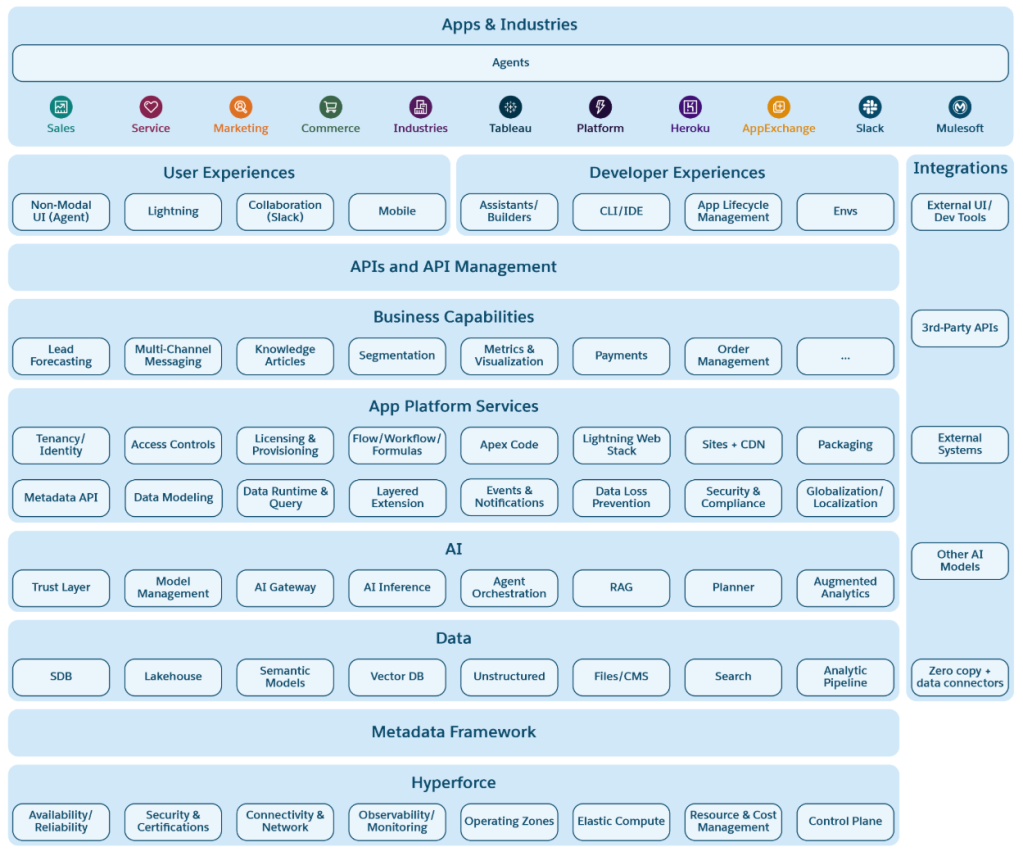

By offering speech capabilities as a suite of standard actions, the team makes voice accessible to all builders. Speech-to-text, text-to-speech, and translation are now standard composable actions. Admins and developers can trigger voice-driven logic in Flows or Agentforce without writing boilerplate code for audio streaming or WebSocket management. This shift turns speech into a reusable tool rather than a specialized integration.

The team aims to make voice a natural extension of workflows so users build speech-driven experiences with total confidence.

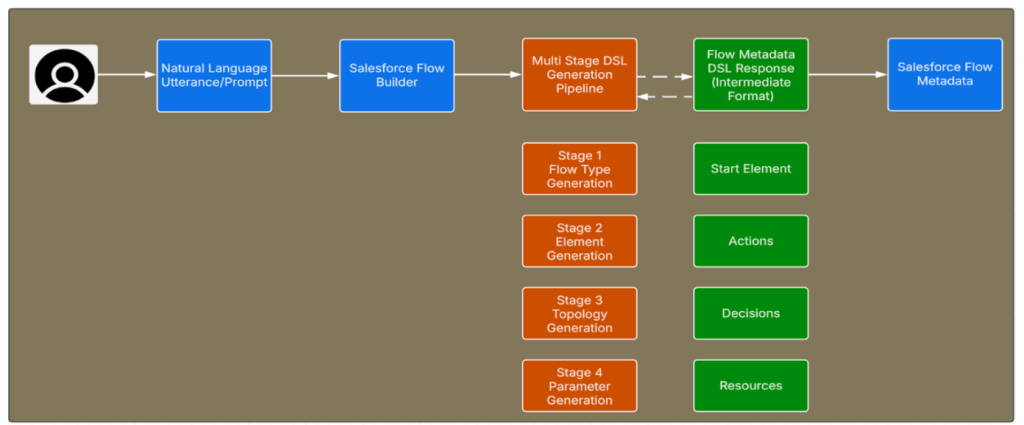

Inside the Speech Invocable Action architecture: Bridging Salesforce platform consumers with Agentforce Speech Foundations through standardized core actions.

What architectural constraints shaped how native speech automation was built inside the Salesforce platform?

Building inside the Salesforce platform presents architectural realities that differ from deploying external services. The platform operates as a large, multi-tenant system where thousands of features share memory, compute, and execution paths. Every new capability must coexist safely with all other platform processes.

Speech-to-text processing demands significant resources, especially memory during audio handling. Since these resources are shared, the team evaluates how speech actions behave when multiple Flows or Agentforce actions run concurrently. Each automation step is designed on the assumption that other platform workloads are competing for the same underlying resources.

To manage these demands, the team prioritizes disciplined resource management and rigorous performance testing. They validate usage patterns against Speech Foundations API limits and tune execution paths for maximum efficiency. These efforts maintain platform stability and ensure speech automation performs predictably under heavy loads.

How did reliability requirements influence the design of speech automation for Flows and Agentforce actions?

Speech automation often operates in synchronous contexts like Flows and Agentforce actions, where execution pauses until a task completes. A single failure in these scenarios can stall an entire automation or disrupt an agent interaction. This makes failure behavior as critical as success behavior.

The team uses a defensive design strategy to ensure predictable outcomes. The speech action returns structured error categories instead of generic system errors. This allows builders to handle issues explicitly. Downstream automation can then respond with intentional actions like retrying, branching to a fallback path, or logging the event.
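To make the idea of structured error categories concrete, here is a minimal sketch in Python. It is not the team's implementation: the category names, the `TranscriptionResult` shape, and the `next_step` helper are all hypothetical, chosen only to show how a categorized result lets downstream automation decide between retrying, falling back, or logging.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class SpeechErrorCategory(Enum):
    # Hypothetical categories; the article does not list the real ones.
    INVALID_INPUT = "invalid_input"
    LIMIT_EXCEEDED = "limit_exceeded"
    SERVICE_UNAVAILABLE = "service_unavailable"


@dataclass
class TranscriptionResult:
    # Hypothetical result shape: either a transcript or a categorized error.
    transcript: Optional[str] = None
    error_category: Optional[SpeechErrorCategory] = None
    error_message: Optional[str] = None

    @property
    def ok(self) -> bool:
        return self.error_category is None


def next_step(result: TranscriptionResult, attempt: int, max_attempts: int = 3) -> str:
    """Pick an explicit downstream step based on the error category."""
    if result.ok:
        return "continue"
    if result.error_category is SpeechErrorCategory.SERVICE_UNAVAILABLE:
        # Transient failure: retry until attempts run out, then fall back.
        return "retry" if attempt < max_attempts else "fallback"
    if result.error_category is SpeechErrorCategory.LIMIT_EXCEEDED:
        # Retrying immediately would likely hit the same limit.
        return "fallback"
    # Anything else (for example, bad input) will not succeed on retry.
    return "log_and_stop"
```

The design point is that the caller branches on a small, known set of categories rather than parsing a generic error message, so each failure has an intentional response.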

Extensive testing validates this approach through unit, integration, and end-to-end scenarios. These tests ensure the speech action behaves consistently when combined with other platform tools. With failure modes controlled, adding speech automation strengthens a workflow without compromising its reliability.

What delivery pressures shaped how the team executed this work with a small team?

The delivery of speech automation happened under fixed timelines and high operational expectations. Because this action operates deep within the platform, the team treated correctness and guardrails as non-negotiable requirements.

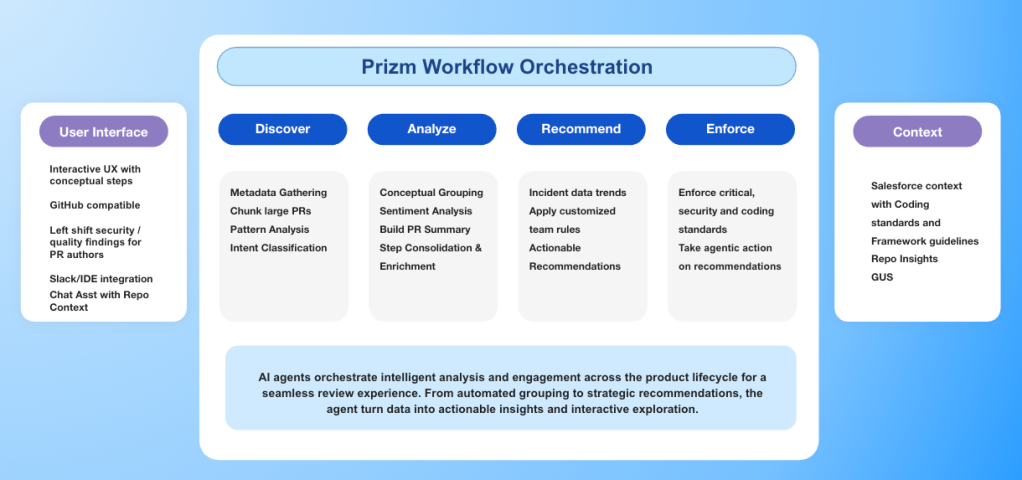

The Speech Foundations and Standard Actions teams designed for bulk processing from the beginning, which is crucial for scalability and efficient governor limit consumption in Salesforce’s multi-tenant environment. To implement speech tasks (such as transcription) within the complex codebase, the team used AI tools like Claude Code. This enabled a small team to deliver production-ready code that met these strict constraints on a tight timeline.
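As a rough illustration of what designing for bulk processing can look like, the sketch below handles a list of inputs in one invocation and groups them into chunks so the backend is called as few times as possible. It is an assumption-laden example, not the actual action: `transcribe_bulk`, the `transcribe_batch` callable, and the `max_batch_size` value are all hypothetical.

```python
from typing import Callable, List, Sequence


def transcribe_bulk(
    audio_items: Sequence[bytes],
    transcribe_batch: Callable[[List[bytes]], List[str]],
    max_batch_size: int = 10,  # hypothetical limit, not a documented value
) -> List[str]:
    """Transcribe many inputs with as few backend calls as possible.

    Returns one transcript per input, in input order.
    """
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    transcripts: List[str] = []
    for start in range(0, len(audio_items), max_batch_size):
        chunk = list(audio_items[start:start + max_batch_size])
        batch_result = transcribe_batch(chunk)
        # Guard against a backend that drops or duplicates results,
        # which would misalign outputs with their inputs.
        if len(batch_result) != len(chunk):
            raise RuntimeError("backend returned a different number of results than inputs")
        transcripts.extend(batch_result)
    return transcripts
```

With five inputs and a batch size of two, this makes three backend calls instead of five, and the output list stays aligned with the input list so each caller can find its own result by position.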

Testing focused on how builders use speech automation inside Flows and Agentforce actions. By validating real execution paths end-to-end, the team ensured the feature could ship confidently despite tight timelines.

How did AI tools change developer productivity while working inside an unfamiliar platform codebase?

Working within the Salesforce platform required navigating a massive codebase and complex internal APIs. Usually, onboarding to such an environment requires weeks of documentation review and trial-and-error exploration.

AI development tools transformed that experience. Tools like Claude and Cursor served as architectural guides and helped the team understand system components and existing patterns. This AI-assisted approach allowed the team to query the codebase, find relevant examples, and generate tests that met internal standards.

The team estimates AI shortened development and discovery time by seven to eight weeks. Beyond speed, AI changed how engineers learned, reasoned about, and extended a complex system at scale, and it reduced their cognitive overhead. This allowed the team to focus on speech automation logic rather than platform mechanics.
