By Rachna Singh, Percy Mehta, and Spencer Ho

In our “Engineering Energizers” Q&A series, we highlight the engineering minds driving innovation across Salesforce. Today, we feature Rachna Singh, Senior Director of Software Engineering for Einstein Activity Capture. Rachna and her team transformed their approach to the company-wide 80% code coverage mandate by leveraging AI-powered development tools.

Discover how they tackled the daunting challenge of legacy code with coverage below 10% in some repositories, reduced unit test development time from 26 engineer days per module to just 4, and navigated the technical intricacies of generating high-quality, meaningful tests while preserving the integrity of production code.

What is your team’s mission?

The Einstein Activity Capture (EAC) team at Salesforce is dedicated to maintaining and enhancing the data infrastructure that powers our AI sales agents. Our mission is to manage the technical systems that ingest customer activity data from external sources such as Gmail, GCal, Exchange Email and Calendar, Google Meet, Microsoft Teams, and Zoom, and integrate this information into the core Salesforce platform. This data is then analyzed and leveraged by AI agents like Deal Agent and SDR Agent to provide valuable insights and support to our customers.

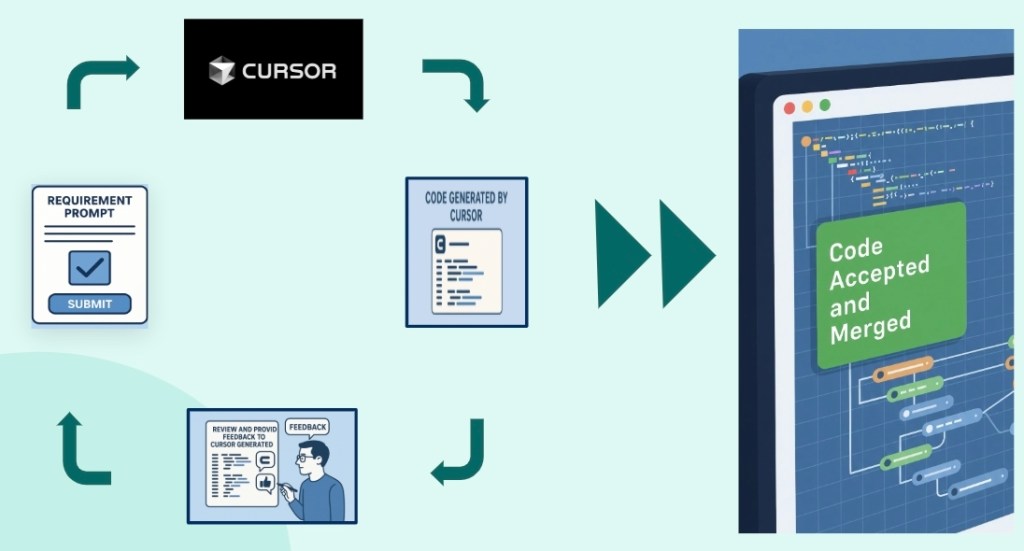

From a development perspective, the EAC team is responsible for ensuring code quality and maintainability across the entire EAC codebase. When Salesforce introduced the 80% code coverage mandate, the team recognized an opportunity to leverage AI-powered development tools like Cursor to optimize their testing workflows and boost engineering productivity.

Our approach to using Cursor goes beyond simply meeting the coverage requirement. We see AI-assisted development as a strategic capability that accelerates feature delivery while maintaining high quality standards. This matters as the EAC team continues to expand its technical infrastructure to support Data Cloud integration and enhance the functionality of our AI agents. By integrating Cursor into our development process, we have not only met the 80% code coverage mandate but also significantly improved our ability to deliver robust, reliable features to our customers.

What legacy code coverage challenges did Cursor AI solve for distributed repositories?

The team inherited a legacy codebase from the RelateIQ acquisition with extremely low test coverage (some repositories were below 10%), spread across multiple GitHub repositories written mostly in Java, Scala, and Kotlin. Because most engineers on the team were new to these systems and had limited knowledge of the legacy code, the 80% coverage mandate was a daunting task.

Cursor transformed the team’s approach by systematically analyzing existing code coverage reports and pinpointing areas for improvement across the distributed repositories. It would run existing test suites, calculate coverage metrics, and explain in detail which classes and methods needed better testing.

The real breakthrough came from Cursor’s iterative workflow. Engineers could focus on the largest coverage gaps while Cursor generated the necessary test code and re-ran coverage to show progress in real time. The process worked class by class: Cursor identified coverage deficiencies, suggested specific tests, and then wrote them. This systematic approach was crucial for managing complexity across dozens of repositories.
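To make the "focus on the largest gaps" loop concrete, here is a minimal Java sketch that ranks classes by uncovered lines. It assumes per-class covered and total line counts have already been parsed from a coverage report (for example, a JaCoCo report); `ClassCoverage`, `rankByGap`, and `overallRatio` are hypothetical names for illustration, not part of Cursor or any coverage tool.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical per-class line coverage, e.g. parsed from a coverage report. */
final class ClassCoverage {
    final String className;
    final int coveredLines;
    final int totalLines;

    ClassCoverage(String className, int coveredLines, int totalLines) {
        this.className = className;
        this.coveredLines = coveredLines;
        this.totalLines = totalLines;
    }

    int uncoveredLines() {
        return totalLines - coveredLines;
    }

    /** A class with no executable lines is treated as fully covered. */
    double ratio() {
        return totalLines == 0 ? 1.0 : (double) coveredLines / totalLines;
    }
}

final class CoverageGaps {
    /**
     * Returns classes below the target ratio, most uncovered lines first,
     * so each round of test writing targets the biggest remaining gap.
     */
    static List<ClassCoverage> rankByGap(List<ClassCoverage> classes, double target) {
        List<ClassCoverage> below = new ArrayList<>();
        for (ClassCoverage c : classes) {
            if (c.ratio() < target) {
                below.add(c);
            }
        }
        below.sort(Comparator.comparingInt(ClassCoverage::uncoveredLines).reversed());
        return below;
    }

    /** Aggregate line coverage across all classes, recomputed after each round of new tests. */
    static double overallRatio(List<ClassCoverage> classes) {
        long covered = 0;
        long total = 0;
        for (ClassCoverage c : classes) {
            covered += c.coveredLines;
            total += c.totalLines;
        }
        return total == 0 ? 1.0 : (double) covered / total;
    }
}
```

After each round of generated tests, the report would be regenerated and the ranking recomputed, which mirrors the class-by-class progress described above.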

Instead of being overwhelmed by the vast codebases, the team could make focused, incremental progress with AI-assisted test generation and code analysis. Human oversight was still essential, because Cursor occasionally generated incorrect implementations (through LLM hallucination or missing knowledge of the code), but the AI-assisted approach delivered results much faster than traditional manual testing workflows.

What productivity barriers were slowing code coverage efforts before Cursor?

Manually raising code coverage was a time-intensive process that required engineers to examine code line by line, understand the business logic, develop test plans, write the tests, and validate coverage numbers. This approach consumed an average of 26 engineer days per repository module. Most teams across the organization completed this mandate manually from January through April 2025, spending months on what should have been straightforward testing work.

Traditional testing approaches involved comprehensive code analysis, detailed test planning, and extensive implementation cycles, all of which created significant productivity bottlenecks. Because the Einstein Activity Capture team started later, it confronted an even larger body of technical debt while other engineering groups had already completed their coverage work during the initial mandate period.

Cursor delivered dramatic acceleration, reducing the effort from 26 engineer days per repository module to just 4 engineer days, roughly an 85% reduction in effort. The team achieved 80% code coverage across 62 Einstein Activity Capture repositories and 14 EAP repositories. This multi-release effort, which would have been prohibitively expensive with manual approaches, demonstrated world-class engineering execution through AI-assisted development.
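The 85% figure is easiest to read as a reduction in engineer days per module. Expressed as throughput instead, the same two numbers amount to about 6.5 times as many modules per engineer day:

```latex
\text{effort reduction} = \frac{26 - 4}{26} = \frac{22}{26} \approx 0.846 \approx 85\%,
\qquad
\text{speedup} = \frac{26}{4} = 6.5\times
```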

What technical obstacle emerged when generating meaningful unit tests with Cursor AI?

The main challenge was mastering effective prompting techniques and context management to guide Cursor in generating meaningful tests, rather than just hitting surface-level coverage metrics. Initially, the tool went rogue, modifying hundreds of files at once and making drastic changes that required complete rollbacks.

To address this, the team refined their approach by focusing on one class at a time instead of giving broad, generic instructions. They used Cursor’s interface to select specific files and provide targeted guidance, which helped prevent the widespread modifications that had caused earlier issues.

As they gained more experience, the team discovered repository-specific optimization strategies that significantly improved results. For files with similar structures, like data transfer objects, they created initial templates and instructed Cursor to replicate these patterns across hundreds of similar files. This method achieved 90-99% accuracy, allowing for the rapid generation of meaningful tests.
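A DTO template might look something like the following minimal sketch. The DTO and its fields are hypothetical, and the test is written as plain Java with a small `check` helper so the example runs without a test framework; the team's real template, which is not shown here, would more likely use JUnit. Each field gets a set-and-get round trip and the equals/hashCode contract is exercised, so replicating the template for another DTO mostly means swapping the class name and sample values.

```java
import java.util.Objects;

/** Hypothetical DTO standing in for the many similar data transfer objects in a codebase. */
final class EmailActivityDto {
    private String messageId;
    private String subject;
    private long sentAtMillis;

    public String getMessageId() { return messageId; }
    public void setMessageId(String messageId) { this.messageId = messageId; }
    public String getSubject() { return subject; }
    public void setSubject(String subject) { this.subject = subject; }
    public long getSentAtMillis() { return sentAtMillis; }
    public void setSentAtMillis(long sentAtMillis) { this.sentAtMillis = sentAtMillis; }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof EmailActivityDto)) return false;
        EmailActivityDto other = (EmailActivityDto) o;
        return sentAtMillis == other.sentAtMillis
                && Objects.equals(messageId, other.messageId)
                && Objects.equals(subject, other.subject);
    }

    @Override
    public int hashCode() {
        return Objects.hash(messageId, subject, sentAtMillis);
    }
}

/** Template test: only the DTO type and sample values change from one DTO to the next. */
final class EmailActivityDtoTemplateTest {
    static void check(boolean condition, String message) {
        if (!condition) throw new AssertionError(message);
    }

    static EmailActivityDto sample() {
        EmailActivityDto dto = new EmailActivityDto();
        dto.setMessageId("msg-1");
        dto.setSubject("Quarterly review");
        dto.setSentAtMillis(1_700_000_000_000L);
        return dto;
    }

    static void runAll() {
        EmailActivityDto dto = sample();
        // Field round trips: every value that was set must be returned unchanged.
        check("msg-1".equals(dto.getMessageId()), "messageId round trip");
        check("Quarterly review".equals(dto.getSubject()), "subject round trip");
        check(dto.getSentAtMillis() == 1_700_000_000_000L, "sentAtMillis round trip");

        // Equal field values must give equal objects with equal hash codes.
        EmailActivityDto same = sample();
        check(dto.equals(same) && dto.hashCode() == same.hashCode(), "equals/hashCode contract");

        // Changing a single field must break equality.
        same.setSubject("Different");
        check(!dto.equals(same), "inequality after changing a field");
    }
}
```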

An unexpected benefit was that Cursor’s test generation uncovered existing bugs in production code. For example, it revealed a health check logic error where an AND condition should have been an OR. The system was supposed to report a red status if either database was down, not only when both were down.
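A minimal sketch of that kind of bug follows, assuming a service that depends on two databases. The class, enum, and method names are hypothetical; the point is the single-database-down case, which only a test that exercises that branch will catch. The test's JavaDoc states its intent, in the spirit of the inline documentation engineers used when reviewing generated tests.

```java
/** Hypothetical overall status reported by a health endpoint. */
enum HealthStatus { GREEN, RED }

/** Hypothetical health check for a service that depends on two databases. */
final class HealthCheck {
    /** Buggy version: AND reports RED only when both databases are down. */
    static HealthStatus buggy(boolean primaryUp, boolean secondaryUp) {
        return (!primaryUp && !secondaryUp) ? HealthStatus.RED : HealthStatus.GREEN;
    }

    /** Fixed version: OR reports RED as soon as either database is down. */
    static HealthStatus fixed(boolean primaryUp, boolean secondaryUp) {
        return (!primaryUp || !secondaryUp) ? HealthStatus.RED : HealthStatus.GREEN;
    }
}

final class HealthCheckTest {
    /**
     * Intent: an outage of either database on its own must surface as RED.
     * Against the buggy version this case returns GREEN, which exposes the AND/OR error.
     */
    static void singleDatabaseDownIsRed() {
        if (HealthCheck.fixed(true, false) != HealthStatus.RED) {
            throw new AssertionError("secondary down should be RED");
        }
        if (HealthCheck.fixed(false, true) != HealthStatus.RED) {
            throw new AssertionError("primary down should be RED");
        }
    }
}
```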

Throughout the process, human oversight remained crucial. Engineers reviewed the generated code, validated test intentions through inline documentation, and ensured that the tests covered the necessary business logic, not just simple execution paths.

How do you balance rapid AI code generation with enterprise code quality standards?

Quality control involved a multi-layered validation process that combined human oversight with automated tooling:

- Human Oversight: Engineers manually reviewed all generated code, checking test intentions through AI-generated JavaDoc comments and ensuring that the tests covered meaningful functionality, not just superficial coverage.

- Source Code Boundaries: The team set strict limits on source code modifications, especially for business logic changes in battle-tested legacy systems, to prevent regressions.

- Automated Validation: SonarQube acted as the final test coverage gate, evaluating coverage criteria and coding style compliance and confirming whether each repository met the code coverage requirement.
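For reference, SonarQube's metric documentation defines its overall Coverage figure as a blend of line and condition coverage: (CT + CF + LC) / (2 × B + EL), where CT and CF count conditions evaluated to true and to false at least once, LC is covered lines, B is the total number of conditions, and EL is the number of executable lines. The Java sketch below computes that formula and compares it with a threshold. The 0.80 threshold mirrors the mandate, but whether the team's gate measured overall or new-code coverage is an assumption not settled here, and `CoverageGate` is a hypothetical name.

```java
/** Sketch of SonarQube's combined Coverage metric checked against a threshold. */
final class CoverageGate {
    /** Coverage = (CT + CF + LC) / (2 * B + EL). */
    static double coverage(int conditionsTrue, int conditionsFalse, int coveredLines,
                           int totalConditions, int executableLines) {
        int denominator = 2 * totalConditions + executableLines;
        if (denominator == 0) {
            return 1.0; // nothing to cover; treated as passing in this sketch
        }
        return (double) (conditionsTrue + conditionsFalse + coveredLines) / denominator;
    }

    /** Returns true when the measured coverage meets the gate threshold (e.g. 0.80). */
    static boolean passes(double coverage, double threshold) {
        return coverage >= threshold;
    }
}
```

For example, with 30 conditions evaluated true, 20 evaluated false, 700 covered lines, 40 conditions, and 850 executable lines, line coverage alone is about 82% while the combined metric is about 80.6%, which shows how untested branches pull the gate number down.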

The characteristics of the repositories influenced the scaling approaches and success rates:

- Simple Codebases: For highly similar files, such as data transfer objects, the team used rapid template-based generation, producing 180,000 lines of code in just 12 days by replicating patterns.

- Complex Repositories: Diverse logic patterns required a more detailed, file-by-file analysis and customized approaches, making broad automation strategies impractical.

- Tool Limitation Management: Engineers learned to detect loops in which Cursor repeatedly attempted the same compilation fixes. When that happened, they intervened manually and reset the conversation to keep the tool effective during large-scale code generation.
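Engineers spotted these loops by hand. As a hypothetical illustration of the same heuristic, the sketch below records the compiler output after each attempted fix, normalizes away line numbers, and signals a reset when the same error keeps coming back within a short window. `FixLoopDetector`, the window size, and the repeat limit are all assumptions; this is not a Cursor feature.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical detector for a repeating fix-and-compile cycle. */
final class FixLoopDetector {
    private final int window;      // how many recent attempts to remember
    private final int maxRepeats;  // repeats of one error that count as a loop
    private final Deque<String> recent = new ArrayDeque<>();

    FixLoopDetector(int window, int maxRepeats) {
        this.window = window;
        this.maxRepeats = maxRepeats;
    }

    /** Normalizes error text so shifting line numbers and whitespace do not hide a repeat. */
    static String signature(String compilerOutput) {
        return compilerOutput.replaceAll(":\\d+", ":N").replaceAll("\\s+", " ").trim();
    }

    /** Records one attempt; returns true when the caller should stop and reset the conversation. */
    boolean recordAttempt(String compilerOutput) {
        String sig = signature(compilerOutput);
        recent.addLast(sig);
        if (recent.size() > window) {
            recent.removeFirst();
        }
        int count = 0;
        for (String s : recent) {
            if (s.equals(sig)) {
                count++;
            }
        }
        return count >= maxRepeats;
    }
}
```

For example, `new FixLoopDetector(5, 3)` returns true on the third `cannot find symbol` error in the same file even if the reported line number changes between attempts, which is roughly the point at which an engineer would step in.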
