By Sangeeta Patnaik, Deepu Mungamuri, and Raghav Bhandari

In our “Engineering Energizers” Q&A series, we highlight the engineering minds driving innovation across Salesforce. Today, we feature Sangeeta Patnaik, who played a key role in one of Salesforce’s largest internal migrations, rewriting 57,000 unit tests from xUnit to Jest.

Discover how her Application Intelligence team moved past the limitations of super prompting to a scalable, modular AI agent architecture, maintained trust through dual-run validation and manual escalation, and built custom tooling that achieved a 99.9% pass rate, turning an estimated six-year manual effort into a nine-month project.

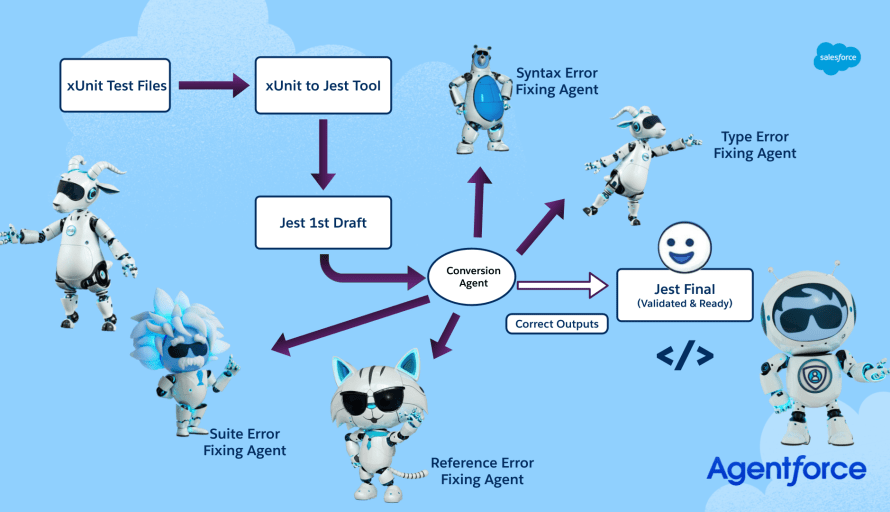

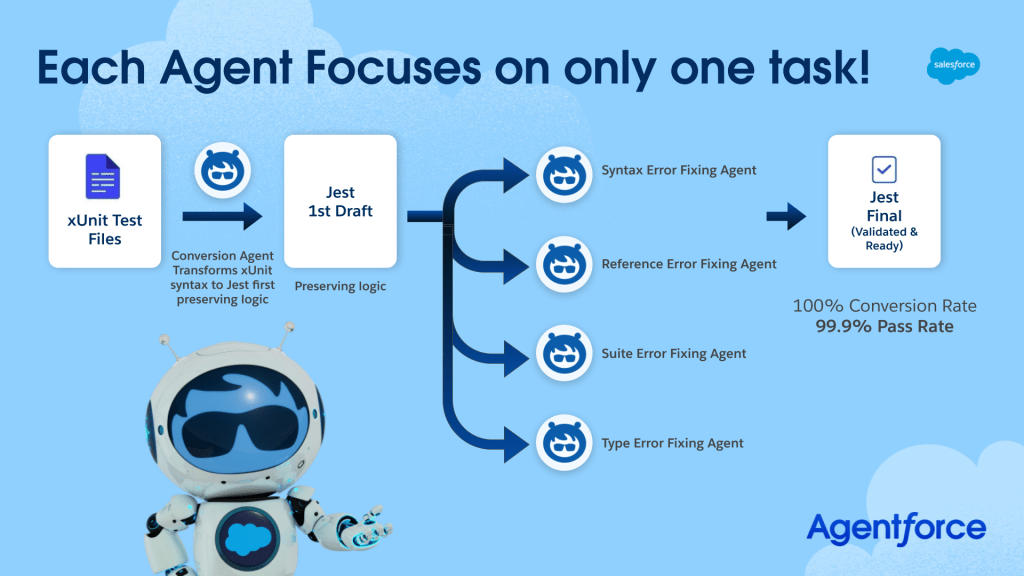

Illustration of the AI agents built and used to migrate 57,000 unit tests from xUnit to Jest.

What is the team’s mission, and how did the migration align with it?

The Application Intelligence team is dedicated to boosting developer productivity by modernizing foundational tools and automating large-scale migrations. One of our major initiatives involved retiring the legacy xUnit testing framework and transitioning all existing tests to Jest. This move was crucial because Jest offers better support for Lightning Web Components (LWC) and aligns with current JavaScript and TypeScript standards.

xUnit, the default testing framework for Aura components, was outdated, lacked community support, and was harder to maintain as frontend architecture evolved. As LWC adoption grew, the reliance on xUnit became a significant bottleneck, creating operational overhead and complexity. Transitioning to Jest was essential to improve CI/CD reliability and test performance and to align with the platform strategy.

The project successfully migrated 57,000 unit tests across numerous teams. The goal was to modernize the testing framework without disrupting developers. Instead of asking engineers to rewrite tests or learn new tools, the team delivered fully converted Jest files directly into their pipelines, ready for immediate use. This approach eliminated the need for retraining, ensuring a smooth transition and minimal friction. By modernizing the testing framework, the team enabled engineers to work more efficiently on a scalable foundation, supporting Salesforce’s mission to enhance developer productivity and innovation.

What technical challenges or trade-offs did the team face when applying AI to this large-scale migration — and how did the team adapt the approach?

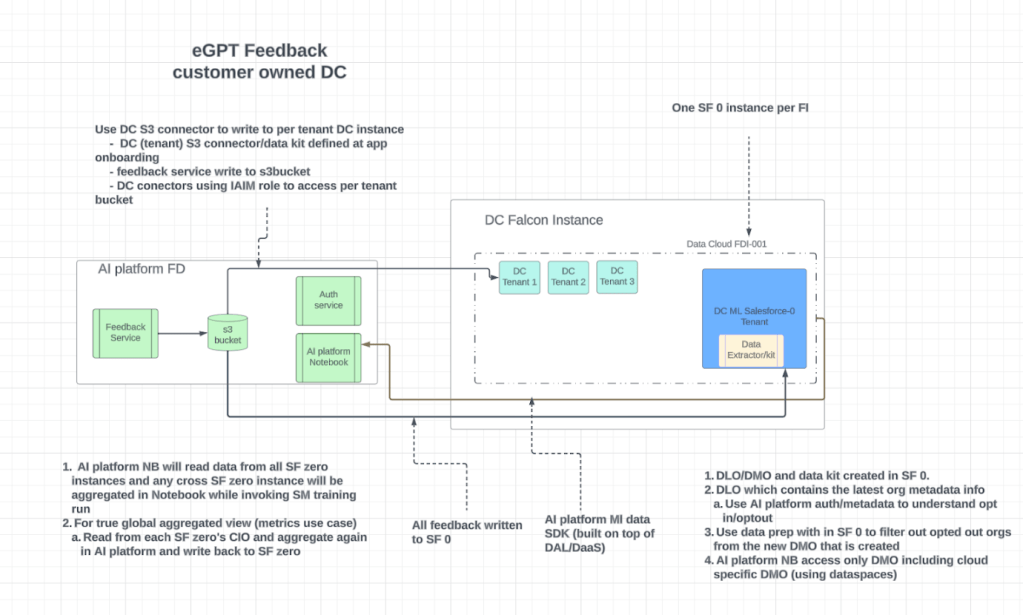

Initially, the team used a single monolithic “super prompt” to handle all test conversions at once. It worked for simple cases but struggled with complex files, producing inconsistent output that was difficult to debug.

To address these issues, the team shifted to a new architecture of sequential AI agents built on Agentforce for Developers. Each agent handled a specific task, such as fixing syntax errors, resolving type mismatches, or applying Jest-specific patterns, and then passed the file to the next agent in the chain for further processing.

This chain-of-responsibility model broke the problem into manageable pieces, scaled reliably, and added fault tolerance through retry logic and manual fallback. By abandoning the super prompt early, the team reduced error rates and gained flexibility. With Agentforce, the project was completed in nine months instead of an estimated six years (72 months) of manual work, an 8x acceleration in delivery speed.
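To make the chain-of-responsibility idea concrete, here is a minimal TypeScript sketch, assuming each agent can be modeled as a function that either returns a revised test file or reports a failure. The names `Agent`, `runChain`, and `maxRetries`, and the toy agents, are hypothetical; in the real pipeline each stage was an AI agent built on Agentforce for Developers, not a string transformation.

```typescript
// Hypothetical model of one agent: it either returns revised source or a failure reason.
type AgentResult = { ok: true; source: string } | { ok: false; reason: string };

interface Agent {
  name: string;
  run(source: string): AgentResult;
}

type ChainOutcome =
  | { status: "converted"; source: string }
  | { status: "manual_review"; failedAgent: string; reason: string; source: string };

// Pass the file through each agent in order. A failing agent is retried up to
// maxRetries extra times; if it still fails, the file is escalated for manual review.
function runChain(agents: Agent[], source: string, maxRetries = 2): ChainOutcome {
  let current = source;
  for (const agent of agents) {
    let result: AgentResult = agent.run(current);
    for (let attempt = 0; !result.ok && attempt < maxRetries; attempt++) {
      result = agent.run(current);
    }
    if (!result.ok) {
      return { status: "manual_review", failedAgent: agent.name, reason: result.reason, source: current };
    }
    current = result.source; // the next agent works on this agent's output
  }
  return { status: "converted", source: current };
}

// Toy usage with deterministic stand-in agents.
let flakyCalls = 0;
const toyAgents: Agent[] = [
  // Fails on its first call and succeeds on the retry, exercising the retry branch.
  { name: "syntax", run: (s) => (++flakyCalls < 2 ? { ok: false, reason: "transient error" } : { ok: true, source: s.trim() }) },
  // Always succeeds.
  { name: "types", run: (s) => ({ ok: true, source: s.replace(": any", "") }) },
];
// Always fails, so any file reaching it is escalated.
const alwaysFails: Agent = { name: "assertions", run: () => ({ ok: false, reason: "unrecognized pattern" }) };

const converted = runChain(toyAgents, "  let x: any = 1  ");
const escalated = runChain([...toyAgents, alwaysFails], "let y = 2");
console.log(converted.status, escalated.status);
```

Because each agent owns one class of fix, a failure points to a specific stage (`failedAgent`), which keeps escalations far easier to debug than a single super prompt.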

How did the team ensure quality and trust in the AI-generated test code — especially in edge cases?

To ensure reliability across tens of thousands of code files, the team implemented a multi-layered quality assurance model. It preserved all original xUnit test files instead of deleting them, so each converted test could be compared directly against its source at any point.

A dedicated engineer reviewed the AI-generated test cases for accuracy, behavior alignment, and adherence to team-specific patterns. Manual intervention was required for only about 5% of test cases, mainly those with deeply embedded business logic or inconsistent test setup, which were handled individually to ensure correctness.

Each Jest test created by the Agentforce for Developers system was internally validated by the App Intelligence Tool team to ensure conversion accuracy, and no test was put into use without verification. Because validation was centralized, additional sanity checks by owning teams at the project’s conclusion were optional, which reduced the overall effort across multiple scrum teams.

To build trust, the team ran a two-month dual-run strategy, where both xUnit and Jest pipelines operated in parallel for every deployment. This allowed teams to detect any divergences or failures during real-world CI/CD cycles, catching edge cases that initially escaped review. The AI-generated tests achieved a 99.9% pass rate, validating the integrity of the multi-agent architecture and the safeguards in place.
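The comparison at the heart of a dual run can be sketched as a diff of per-test outcomes from the two pipelines for the same deployment. This is a minimal sketch, assuming each pipeline reports a pass/fail result per test name; `RunResult`, `findDivergences`, and the sample test names are hypothetical.

```typescript
// Hypothetical per-test result reported by one pipeline for one deployment.
type RunResult = "pass" | "fail";
// "missing" marks a test that appears in only one of the two runs.
type Outcome = RunResult | "missing";

interface Divergence {
  test: string;
  xunit: Outcome;
  jest: Outcome;
}

// Report every test whose outcome differs between the xUnit and Jest runs.
function findDivergences(xunit: Map<string, RunResult>, jest: Map<string, RunResult>): Divergence[] {
  // Union of test names seen in either run.
  const names = new Set<string>();
  xunit.forEach((_, name) => names.add(name));
  jest.forEach((_, name) => names.add(name));

  const result: Divergence[] = [];
  for (const test of Array.from(names).sort()) {
    const x: Outcome = xunit.get(test) ?? "missing";
    const j: Outcome = jest.get(test) ?? "missing";
    if (x !== j) result.push({ test, xunit: x, jest: j });
  }
  return result;
}

// Toy usage: one test regressed in Jest, and one exists only in the Jest run.
const xunitRun = new Map<string, RunResult>([
  ["cart.total", "pass"],
  ["cart.empty", "pass"],
  ["user.login", "fail"],
]);
const jestRun = new Map<string, RunResult>([
  ["cart.total", "pass"],
  ["cart.empty", "fail"],
  ["user.login", "fail"],
  ["user.logout", "pass"],
]);
const report = findDivergences(xunitRun, jestRun);
console.log(report);
```

A test that fails in both frameworks is not reported, since that points to a pre-existing failure rather than a conversion problem; a test present in only one run is reported as `missing`, which catches tests dropped or duplicated during conversion.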

What internal tooling or workflows did the team build to make the AI-powered migration efficient and reliable?

The team developed a purpose-built internal migration pipeline using Agentforce for Developers GPT and integrated it with the Agentforce platform. The pipeline required no changes from the developers who owned the test cases; it handled all the heavy lifting internally and delivered converted files directly into the source repositories for immediate use.

The migration began with an initial pass that converted about 75% of the tests using our xUnit-to-Jest tool, with a pass rate of roughly 64%. With additional retries and minimal manual intervention, we raised conversion coverage to around 95% and the pass rate to approximately 78%. The final 5% of tests were converted manually, one at a time with help from Agentforce, to ensure completeness and accuracy. Notably, about 20% of the overall improvement in pass rate came directly from the AI agents’ ability to resolve complex issues during conversion.

The pipeline was designed to be sequential, with each agent focusing on a specific task:

- The first agent identified syntax-level issues.

- The second agent handled type errors.

- Subsequent agents corrected references, transformed assertions, and addressed framework-specific syntax.

Each agent worked on the output of the agent before it, iteratively refining the tests much like the passes of a compiler pipeline. If an agent failed, the test case was routed to a retry branch, and persistent failures were flagged for manual review, so the process stayed resilient and unblocked.

Illustration of an automated, modular pipeline used for converting legacy xUnit test files into validated Jest test files using AI-powered agents.
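As an illustration of what the assertion-transformation stage produces, the sketch below rewrites a few single-line xUnit-style assertions into Jest `expect` calls. It assumes assertions of the form `Assert.Equal(expected, actual)`, `Assert.True(value)`, and `Assert.False(value)`, and maps `Equal` to Jest's `toEqual`; these input forms and the helper names `splitArgs` and `toJestAssertion` are assumptions. The team performed this step with AI agents, and a deterministic rewriter like this covers only simple cases, which is why unrecognized input returns `null` for retry or manual review.

```typescript
// Split an argument list on top-level commas, ignoring commas nested in (), [] or {}.
// Assumption: string literals containing brackets or commas are not handled.
function splitArgs(args: string): string[] {
  const parts: string[] = [];
  let depth = 0;
  let start = 0;
  for (let i = 0; i < args.length; i++) {
    const c = args[i];
    if (c === "(" || c === "[" || c === "{") depth++;
    else if (c === ")" || c === "]" || c === "}") depth--;
    else if (c === "," && depth === 0) {
      parts.push(args.slice(start, i).trim());
      start = i + 1;
    }
  }
  parts.push(args.slice(start).trim());
  return parts;
}

// Rewrite one assumed xUnit-style assertion line into a Jest expectation.
// Returns null when the line is not recognized, so the caller can escalate it.
function toJestAssertion(line: string): string | null {
  const m = /^\s*Assert\.(Equal|True|False)\((.*)\);?\s*$/.exec(line);
  if (!m) return null;
  const [, kind, rawArgs] = m;
  const args = splitArgs(rawArgs);
  switch (kind) {
    case "Equal": // assumed argument order: (expected, actual)
      return args.length === 2 ? `expect(${args[1]}).toEqual(${args[0]});` : null;
    case "True":
      return args.length === 1 ? `expect(${args[0]}).toBe(true);` : null;
    case "False":
      return args.length === 1 ? `expect(${args[0]}).toBe(false);` : null;
    default:
      return null;
  }
}

// Toy usage.
const equalLine = toJestAssertion("Assert.Equal(3, sum(1, 2));");
const trueLine = toJestAssertion("Assert.True(isValid(cart));");
const unknownLine = toJestAssertion("Assert.Throws(fn);");
console.log(equalLine, trueLine, unknownLine);
```

Returning `null` instead of guessing mirrors the pipeline's design: a stage that cannot handle its input hands it off rather than emitting a plausible but wrong test.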

What lessons from this migration are shaping how the team scales AI-powered tools — and how will the team maintain speed and accuracy going forward?

This migration project set a clear and repeatable pattern for scaling AI in developer productivity initiatives. The most crucial takeaway was architectural: to solve specific problems, you need specialized agents, not generic prompts. The broad prompt approach faltered as the project grew, while the modular agent model handled complex cases reliably.

Another vital insight was the importance of predictability. Engineers didn’t just need functional tests; they needed results that were consistent and reliable. Achieving this required a structured approach, fine-tuned prompts, human validation, and robust fallback mechanisms. The team viewed AI as a partner, not a quick fix, ensuring it was well-coordinated, clearly defined, and closely monitored.

Looking ahead, this architectural pattern will be instrumental in broader migrations, from legacy system upgrades to automated documentation generation and the consolidation of scattered systems into a more streamlined, centralized platform.

By reusing the pipeline logic and adapting agents to new tasks, the team can maintain speed. Accuracy will be upheld through version-controlled prompt libraries and detailed error handling. AI didn’t replace the engineers’ expertise; it amplified it, and now they have a proven blueprint for scaling this approach.
