In our “Engineering Energizers” Q&A series, we explore the challenges and innovations that are shaping the future of engineering. Today, we spotlight Shreya Aggarwal and her AI Cloud team, who are transforming the way customer support agents assist customers. By leveraging AI-powered article recommendations, they enable faster and more informed responses, tailored specifically to each case.

Dive into how Shreya’s team tackled the complexities of building a scalable AI platform, integrating predictive and generative AI for seamless recommendations, and safeguarding customer data with robust security measures.

What is your team’s mission?

Our team’s mission is to empower customer support agents by providing AI-driven solutions that enhance efficiency and customer satisfaction. At the heart of this mission are article recommendations, which enable agents to quickly access relevant knowledge base articles tailored to specific cases. This streamlines the support process, reduces resolution times, and allows agents to deliver more informed responses.

The work is organized into three core tracks:

- Case-based Recommendations: This track utilizes predictive AI to analyze case descriptions and recommend articles that address specific customer issues. These targeted suggestions help agents resolve problems more efficiently and with greater accuracy.

- Conversational Recommendations: Leveraging generative AI and Data Cloud, this track delivers real-time, context-aware article suggestions during voice, text, or chat interactions. This ensures that agents have the necessary information at their fingertips, enhancing the quality of their conversations and improving customer satisfaction.

- Knowledge-grounded Email Generation: This track automates the creation of personalized email drafts from recommended articles. The system incorporates linguistic and cultural nuances to simplify agents’ tasks, making it easier for them to communicate effectively with customers.

Each of these tracks equips agents with the tools they need to deliver faster, more precise support, significantly enhancing the customer experience.

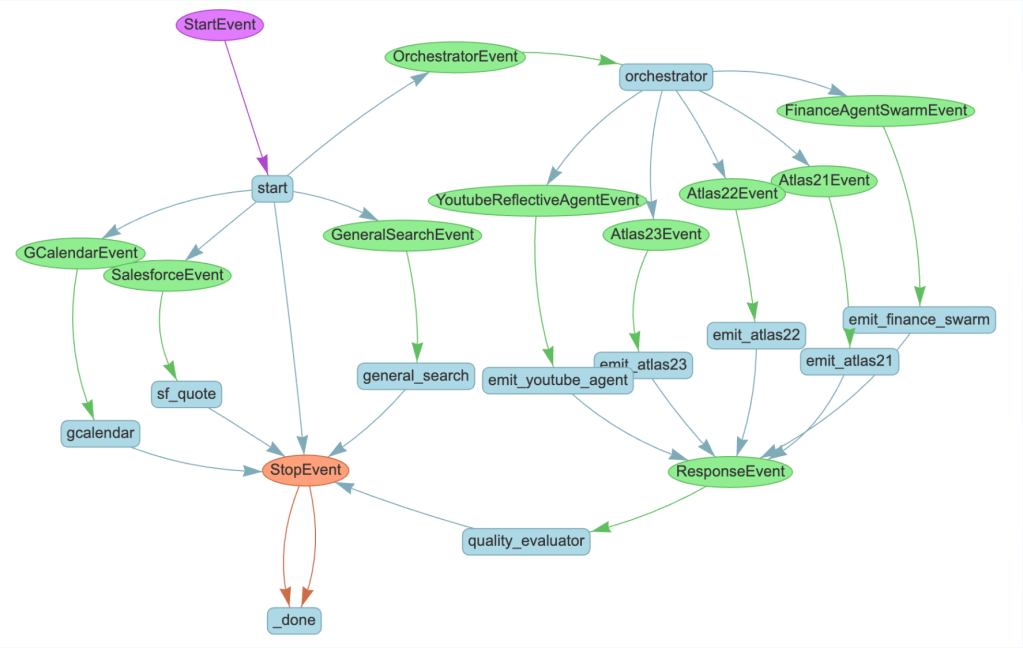

Article recommendations using generative AI to support user conversation flow.

What are the most significant technical challenges the team has faced?

One of the biggest challenges was migrating from an older AI platform, which relied on outdated message-queue workflows, to the new AI Platform, which uses a microservices-based architecture with asynchronous APIs. The goal was to reduce inefficiencies, enhance real-time capabilities, and enable scalability. However, the transition came with several significant hurdles:

- Data Pipeline Restructuring: The sequential pipelines in the older AI platform often caused performance bottlenecks during high-traffic periods. To tackle this, the team used tracing tools like OpenTelemetry to monitor the pipelines and identify inefficiencies. They then rewrote the pipelines to support parallel processing, ensuring stable performance across all workloads.

- Maintaining Service Continuity: Switching platforms posed a risk of service disruption for 2,500 active customers. To avoid downtime, the team implemented a phased migration, running both the older AI system and the new AI Platform simultaneously. This allowed for a gradual shift of traffic while closely monitoring performance metrics to quickly identify and resolve any issues.

- Debugging Schema Mismatches: Differences in data schemas could have caused production downtime for some orgs if they were not handled before the migration. The team developed automated validation scripts to detect inconsistencies before the system went live. These scripts caught 90% of errors during testing, significantly reducing the need for manual corrections.

- Improving Real-Time Capabilities: The batch processing in the older AI system introduced delays in recommendations. By adopting event-driven APIs in the new AI Platform, the team eliminated these delays, enabling real-time processing and faster response times.
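The phased migration in the list above depends on sending a controlled share of traffic to the new platform while the old one keeps serving the rest. The following is a minimal sketch of one common way to do that, assuming a hash-based percentage rollout keyed on an org identifier. The function names, the `org_id` format, and the `"legacy"`/`"new"` labels are hypothetical and are not taken from the team's implementation.

```python
import hashlib


def rollout_bucket(org_id: str) -> int:
    """Map an org ID to a stable bucket in the range [0, 100)."""
    digest = hashlib.sha256(org_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def choose_platform(org_id: str, new_platform_percent: int) -> str:
    """Decide which platform serves this org at the current rollout stage.

    Hypothetical helper: orgs whose bucket falls below the rollout
    percentage go to the new platform, everyone else stays on the old one.
    """
    if not 0 <= new_platform_percent <= 100:
        raise ValueError("new_platform_percent must be between 0 and 100")
    if rollout_bucket(org_id) < new_platform_percent:
        return "new"
    return "legacy"


if __name__ == "__main__":
    for percent in (0, 10, 50, 100):
        print(percent, choose_platform("org-42", percent))
```

Because the bucket is derived from a hash rather than a random draw, an org that has moved to the new platform stays there as the percentage increases, which keeps per-org metrics comparable during the rollout.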

Through these targeted strategies, the team successfully completed the migration in just a few months, resulting in a scalable and modern infrastructure that is well-prepared for future AI innovations.
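To make the schema validation step more concrete, here is a small sketch of what an automated pre-migration check could look like. It assumes each schema can be represented as a mapping from field name to type name. The `find_schema_mismatches` function and the sample fields are illustrative assumptions, not the team's actual scripts.

```python
from typing import Dict, List


def find_schema_mismatches(legacy: Dict[str, str], target: Dict[str, str]) -> List[str]:
    """Compare two {field_name: type_name} schemas and describe each difference."""
    problems: List[str] = []
    for field, legacy_type in sorted(legacy.items()):
        if field not in target:
            problems.append(f"missing in target: {field}")
        elif target[field] != legacy_type:
            problems.append(f"type changed: {field} ({legacy_type} -> {target[field]})")
    # Fields that exist only in the target schema are reported separately.
    for field in sorted(set(target) - set(legacy)):
        problems.append(f"unexpected in target: {field}")
    return problems


if __name__ == "__main__":
    # Hypothetical sample schemas for a case record.
    legacy_schema = {"case_id": "string", "priority": "int", "subject": "string"}
    target_schema = {"case_id": "string", "priority": "string", "language": "string"}
    for problem in find_schema_mismatches(legacy_schema, target_schema):
        print(problem)
```

Running a check like this per org before switching traffic would surface mismatches while they are still cheap to fix.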

Depiction of data flowing into and out of Data Cloud.

How do predictive and generative AI models integrate into the article recommendation system, and what challenges does this integration present?

Predictive and generative AI models are seamlessly integrated to optimize article recommendations at different stages of customer interactions. Predictive models use structured data, such as case metadata, to precompute relevant article recommendations. Generative models, on the other hand, handle unstructured, dynamic data, like chat logs or voice inputs, to provide real-time, context-aware suggestions. While these models serve different purposes, their integration presented several challenges that required innovative solutions:

- Real-Time Coordination: Ensuring that the precomputed outputs from predictive models align with the live results from generative models was a significant challenge. To address this, a shared embedding layer was implemented, which maintains semantic consistency between the two types of outputs, preventing mismatched recommendations.

- Managing Large Datasets: Scaling semantic analysis across millions of articles created performance bottlenecks. The team adopted approximate nearest neighbor (ANN) search algorithms to significantly reduce computational overhead while maintaining the accuracy of recommendations.

- Error Handling and Hallucinations: Generative models sometimes produced inaccurate or irrelevant outputs. To mitigate this, the team developed fallback mechanisms. When generative results fail validation, the system defaults to the outputs from predictive models, ensuring that agents receive reliable suggestions.

- Latency Optimization: The high computational demands of generative AI introduced delays. The team optimized model serving pipelines and cached frequently used embeddings to reduce response times and improve system responsiveness.
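The source does not say which ANN algorithm the team adopted. As an illustration of the general idea, the sketch below uses random-hyperplane locality-sensitive hashing, one simple member of that family: vectors are hashed into buckets, and a query is compared only against the vectors in its own bucket instead of the full collection. The `HyperplaneIndex` class, the article IDs, and the toy three-dimensional vectors are all hypothetical.

```python
import math
import random
from collections import defaultdict
from typing import DefaultDict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class HyperplaneIndex:
    """Toy ANN index based on random-hyperplane hashing (illustrative only)."""

    def __init__(self, dim: int, num_planes: int = 8, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]
        self.buckets: DefaultDict[Tuple[bool, ...], List[Tuple[str, List[float]]]] = defaultdict(list)

    def _signature(self, vector: Sequence[float]) -> Tuple[bool, ...]:
        # One bit per hyperplane: which side of the plane the vector lies on.
        return tuple(sum(p * v for p, v in zip(plane, vector)) >= 0.0 for plane in self.planes)

    def add(self, article_id: str, vector: Sequence[float]) -> None:
        self.buckets[self._signature(vector)].append((article_id, list(vector)))

    def query(self, vector: Sequence[float], top_k: int = 3) -> List[str]:
        # Only the query's own bucket is scanned, so true neighbors that
        # hashed to another bucket can be missed. That is the "approximate" part.
        candidates = self.buckets.get(self._signature(vector), [])
        ranked = sorted(candidates, key=lambda item: cosine(vector, item[1]), reverse=True)
        return [article_id for article_id, _ in ranked[:top_k]]


if __name__ == "__main__":
    index = HyperplaneIndex(dim=3)
    index.add("kb-reset-password", [1.0, 0.0, 0.0])
    index.add("kb-billing-dispute", [0.0, 1.0, 0.0])
    print(index.query([2.0, 0.0, 0.0], top_k=1))
```

The trade-off is the one the bullet above describes: fewer comparisons per query in exchange for a small chance of missing a relevant article. Production systems typically rely on a dedicated ANN library rather than a hand-rolled index like this one.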

By addressing these challenges, the system now combines the efficiency of predictive models with the adaptability of generative models, delivering robust and accurate recommendations tailored to customer needs.
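The fallback behavior described above can be sketched in a few lines. This is a simplified illustration that assumes a single validation rule: every generated suggestion must refer to an article that actually exists in the knowledge base. The function name, its parameters, and that rule are assumptions made for the example, and the real validation logic is not described in the source.

```python
from typing import Callable, List, Set


def recommend_with_fallback(
    generate: Callable[[str], List[str]],
    precomputed: List[str],
    known_article_ids: Set[str],
    conversation: str,
) -> List[str]:
    """Return generative suggestions if they pass validation, else predictive ones."""
    try:
        candidates = generate(conversation)
    except Exception:
        # Any failure in the generative path falls back to the precomputed list.
        return precomputed
    # Hypothetical validation rule: non-empty, and every ID must be a known article.
    is_valid = bool(candidates) and all(a in known_article_ids for a in candidates)
    return candidates if is_valid else precomputed


if __name__ == "__main__":
    known = {"kb-1", "kb-2", "kb-3"}
    predictive = ["kb-1"]
    print(recommend_with_fallback(lambda text: ["kb-2", "kb-3"], predictive, known, "reset my password"))
    print(recommend_with_fallback(lambda text: ["kb-999"], predictive, known, "reset my password"))
```

In the second call the generated ID does not exist in the knowledge base, so the agent sees the predictive recommendation instead.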

How is customer data security and trust ensured while developing AI-driven solutions?

Customer data security is a fundamental aspect of the article recommendation system. To protect sensitive information, the team has implemented robust data masking protocols. These protocols ensure that private data, such as credit card numbers or personal identifiers, remains secure throughout the processing stages, from the input data sent to large language models (LLMs) to the outputs delivered to agents.
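As a rough illustration of data masking before text reaches an LLM, the sketch below replaces card numbers and email addresses with placeholder tokens. It assumes a regex-based approach with a Luhn checksum to reduce false positives on card numbers. The patterns, the `[CARD]` and `[EMAIL]` tokens, and the function names are hypothetical, and a production masking system would cover many more identifier types.

```python
import re

# Hypothetical patterns: 13 to 19 digits with optional space or hyphen separators,
# and a deliberately simple email matcher.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")
EMAIL_PATTERN = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def mask_sensitive(text: str) -> str:
    """Replace likely card numbers and email addresses with placeholder tokens."""

    def mask_card(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        # Leave digit runs that fail the checksum untouched (e.g. order numbers).
        return "[CARD]" if luhn_valid(digits) else match.group()

    text = CARD_PATTERN.sub(mask_card, text)
    return EMAIL_PATTERN.sub("[EMAIL]", text)


if __name__ == "__main__":
    print(mask_sensitive("Card 4111 1111 1111 1111 was declined, email jo@example.com"))
```

Applying the same function to model outputs as well as inputs matches the end-to-end coverage described above, from the data sent to the LLM to what the agent finally sees.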

Toxicity detection algorithms are used to flag any potentially harmful content generated by AI models. Prompt templates are rigorously tested to minimize errors or hallucinations, ensuring that agents receive reliable and high-quality outputs. Additionally, transparency is reinforced through feedback mechanisms, which allow agents to report issues like irrelevant or insufficient recommendations.

By embedding security and trust into the workflows, the system maintains its reliability, adheres to ethical standards, and provides agents with dependable tools for customer support.

How has the migration to the new AI Platform enhanced scalability for the article recommendation system?

The migration has fundamentally transformed how article recommendations are processed and delivered. The previous AI system relied on a queue-based system that handled data sequentially, leading to bottlenecks as customer volumes grew. In contrast, the new AI Platform uses a microservices-based architecture with asynchronous APIs, enabling parallel processing and near-instant responses. Key technical improvements include:

- Reduced Latency: The shift from batch processing to real-time API calls has cut response times by over 60%.

- Improved Fault Tolerance: Redundant microservices were introduced to isolate failures, ensuring that issues in one service do not affect others.

- Scalability Enhancements: Stateless services were designed to dynamically allocate compute resources, allowing the system to handle increasing data volumes with ease.

These advancements required significant refactoring of the codebase, the introduction of distributed caches to manage session state, and the addition of load balancers to keep the system reliable under high traffic. As a result, the system now handles more than 435 million knowledge articles monthly and is well-prepared to meet future demands.
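The difference between sequential queue handling and asynchronous, parallel requests can be shown with a short `asyncio` sketch. It is only an illustration of the pattern: `fetch_recommendation` is a hypothetical stand-in for a non-blocking call to a recommendation service, and the 50 ms delay is an arbitrary placeholder rather than a measured figure.

```python
import asyncio
import time
from typing import List


async def fetch_recommendation(case_id: str) -> str:
    """Hypothetical stand-in for a non-blocking call to a recommendation service."""
    await asyncio.sleep(0.05)  # simulated network latency
    return f"article-for-{case_id}"


async def recommend_all(case_ids: List[str]) -> List[str]:
    """Issue all requests concurrently; results keep the order of case_ids."""
    return await asyncio.gather(*(fetch_recommendation(c) for c in case_ids))


if __name__ == "__main__":
    cases = [f"case-{n}" for n in range(10)]
    start = time.perf_counter()
    results = asyncio.run(recommend_all(cases))
    elapsed = time.perf_counter() - start
    # Handled one at a time, ten 50 ms calls would take about half a second.
    print(f"{len(results)} recommendations in {elapsed:.2f}s")
```

Because the requests overlap while each one waits on I/O, total time stays close to the latency of a single call instead of growing with the number of cases, which is the effect the move away from sequential processing is meant to achieve.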
