In our “Engineering Energizers” Q&A series, we spotlight engineering leaders who are revolutionizing technology and delivering groundbreaking solutions. Today, we introduce Swapna Kasula, Principal Software Engineer at Salesforce, who leads the AI Apps team within AI Cloud. Her team is dedicated to developing and implementing AI-powered solutions across Salesforce’s product suite, aligning with the company’s broader goal of integrating advanced AI capabilities into its offerings.

Discover how Swapna’s team addresses errors in Retrieval-Augmented Generation (RAG), overcomes scalability challenges to handle millions of concurrent requests efficiently, and mitigates Large Language Model (LLM) hallucination to ensure that AI outputs are accurate and trustworthy.

What is your team’s mission?

The team is committed to delivering innovative AI solutions that revolutionize customer experiences. Our mission is to scale predictive and generative AI applications by enhancing machine learning pipelines, improving model quality, conducting experiments, automating testing and evaluation, and using real-time monitoring to proactively address customer issues. This mission aligns with Salesforce’s broader objective of empowering businesses with reliable, high-performing AI solutions. Our team develops applications that drive efficiency and accuracy across various workflows, including:

- Service Replies

- Service Email

- Knowledge Grounded Email Responses

- Article Recommendations

- Case Classification

- Case Wrap-up & Voice Wrap-up

These apps are seamlessly integrated into Salesforce products, ensuring both scalability and reliability. For instance, Service Replies assist agents in crafting consistent and accurate responses more quickly, while Case Classification ensures that tickets are routed efficiently. By merging advanced technology with practical usability, our team bridges the gap between AI innovation and customer needs, embodying Salesforce’s dedication to practical, scalable solutions.

Swapna explains how her team approaches innovation.

What are the most significant technical challenges your team has faced recently?

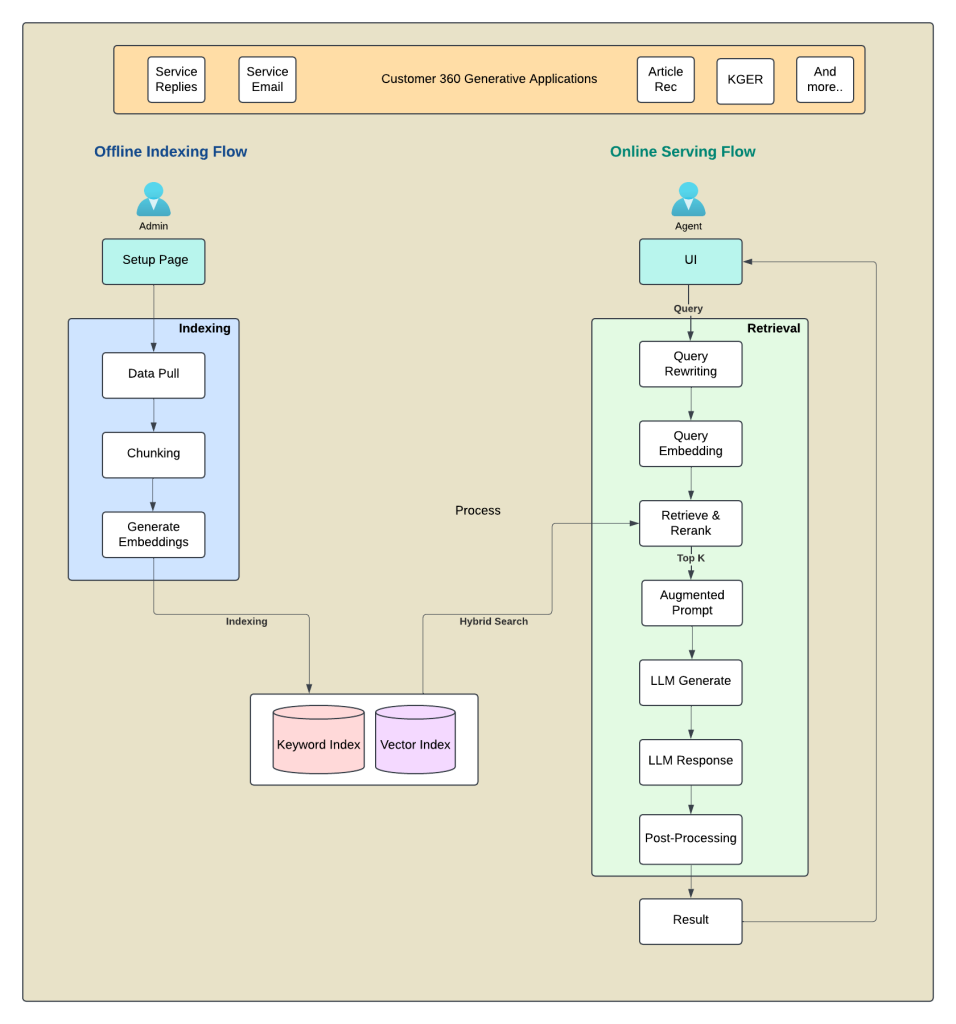

One of the most significant challenges was errors in Retrieval-Augmented Generation (RAG) processes. RAG is essential for generating accurate responses, but retrieval errors sometimes resulted in irrelevant or low-quality outputs. To address this, advanced observability tools were used to detect these errors, and regular evaluations were implemented to ensure retrieved content remained accurate and contextually relevant. For instance, augmented indexing techniques were leveraged to improve document matching, and query embeddings were optimized for more precise retrieval.
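To make the idea of regular retrieval evaluation concrete, here is a minimal sketch that ranks documents by cosine similarity to a query embedding and scores the result with recall@k against a labeled set of relevant documents. The vectors, document IDs, and function names are hypothetical and chosen for illustration; the interview does not describe the team's actual metrics or tooling.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, index, k):
    """Return the k document IDs whose embeddings are closest to the query."""
    ranked = sorted(index, key=lambda doc_id: cosine(query_vec, index[doc_id]), reverse=True)
    return ranked[:k]


def recall_at_k(retrieved, relevant):
    """Fraction of the labeled relevant documents that were retrieved."""
    if not relevant:
        return 0.0
    return len(set(retrieved) & set(relevant)) / len(relevant)


# Hypothetical toy index: document ID -> embedding.
index = {
    "kb-reset-password": [0.9, 0.1, 0.0],
    "kb-billing-cycle": [0.1, 0.9, 0.1],
    "kb-unlock-account": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]  # assumed embedding of "I can't log in"
relevant = {"kb-reset-password", "kb-unlock-account"}

retrieved = top_k(query, index, k=2)
score = recall_at_k(retrieved, relevant)
print(retrieved, score)
```

Tracking a score like this over a fixed set of labeled queries is one way a drop in retrieval quality could be noticed before it shows up as irrelevant generated output.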

Another major challenge was large language model (LLM) hallucination, where models generate plausible but incorrect outputs. To mitigate this, the retrieval mechanism was enhanced and task-specific fine-tuning was applied. Advanced prompt engineering techniques, such as the chain-of-thought approach, were employed to encourage transparency in model reasoning. Additionally, fine-tuning incorporated real-world user feedback, so the models learned directly from usage patterns and addressed high-impact errors. Real-time monitoring allowed for the quick identification and resolution of inaccuracies. Together, these approaches addressed critical issues of accuracy and trust, ensuring reliable performance across applications.

Customer 360 RAG-powered Generative AI Apps Indexing and Serving flow.

What are the most significant scalability challenges your team has faced?

Managing computational resources to handle millions of concurrent requests was one of the primary challenges. To address this, autoscaling techniques were implemented to dynamically allocate resources based on demand, ensuring systems could handle fluctuations in workload without sacrificing performance. Containerized solutions were also designed to optimize resource allocation across deployments.
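The interview does not say which autoscaler or metric the team uses. As one illustration of demand-based scaling, the sketch below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentMetric / targetMetric), with a tolerance band to avoid reacting to small fluctuations. The function name, replica bounds, and load figures are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_load, target_load,
                     min_replicas=1, max_replicas=100, tolerance=0.1):
    """Proportional scaling rule in the style of the Kubernetes HPA.

    current_load and target_load are per-replica averages of the same
    metric (for example, requests per second). All bounds are assumptions.
    """
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    ratio = current_load / target_load
    # Inside the tolerance band, keep the current size to avoid thrashing.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Each replica is seeing 150 req/s against a target of 100 req/s.
print(desired_replicas(current_replicas=4, current_load=150, target_load=100))
```

The clamp to `min_replicas` and `max_replicas` keeps a noisy metric from scaling the service to zero or past a budgeted ceiling.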

Another critical challenge was latency reduction. By deploying services across geographically distributed regions, faster data processing and lower response times were achieved for users worldwide. These deployments relied on Kubernetes clusters, allowing for flexible orchestration of microservices and minimizing resource contention. Additionally, real-time observability tools such as performance dashboards enabled continuous monitoring of throughput and response times, ensuring bottlenecks were identified and resolved proactively.

To further enhance stability during peak workloads, load-balancing algorithms were incorporated to optimize resource allocation across clusters. These combined strategies allowed the AI Apps team to overcome scalability challenges effectively, delivering high-performing, reliable applications that meet Salesforce’s standards for global reliability.
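The specific load-balancing algorithms are not named in the interview. As a sketch of one common choice, the following implements least-connections selection, which sends each new request to the backend with the fewest requests in flight. The class and backend names are hypothetical.

```python
class LeastConnectionsBalancer:
    """Route each request to the backend with the fewest active requests."""

    def __init__(self, backends):
        # Map backend name -> number of requests currently in flight.
        self.active = {name: 0 for name in backends}

    def acquire(self):
        """Pick the least-loaded backend and count the new request against it."""
        name = min(self.active, key=self.active.get)
        self.active[name] += 1
        return name

    def release(self, name):
        """Mark one request on the given backend as finished."""
        if self.active[name] > 0:
            self.active[name] -= 1


balancer = LeastConnectionsBalancer(["cluster-a", "cluster-b", "cluster-c"])
first_three = [balancer.acquire() for _ in range(3)]
balancer.release("cluster-b")   # cluster-b finishes its request first
next_pick = balancer.acquire()  # so it receives the next one
print(first_three, next_pick)
```

Compared with plain round-robin, this approach adapts when some requests, such as long LLM generations, hold a backend far longer than others.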

What ongoing research and development efforts are aimed at improving the capabilities of your AI applications?

The team is dedicated to advancing their applications through continuous research and development. A key focus area is data processing, where advanced indexing and retrieval techniques are employed to optimize search query relevance and response accuracy. The team also experiments with chunking methodologies to improve the structuring of large datasets, ensuring outputs remain accurate and contextually rich.
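As a rough illustration of what a chunking methodology can look like, here is a minimal sketch of fixed-size chunking with overlap, where consecutive chunks share a few words so that context is not lost at chunk boundaries. Word-based splitting, the function name, and the default sizes are assumptions for the example; the interview does not specify which chunking strategies the team evaluates.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into word chunks of chunk_size, sharing `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a chunk has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


article = " ".join(f"w{i}" for i in range(10))  # stand-in for a knowledge article
for chunk in chunk_words(article, chunk_size=4, overlap=1):
    print(chunk)
```

Experiments of the kind described above would typically vary `chunk_size` and `overlap` (or switch to sentence or section boundaries) and compare the effect on retrieval quality.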

User feedback is another pivotal element in shaping application improvements. Explicit signals, such as thumbs-up or thumbs-down ratings, are combined with implicit indicators such as whether a response was accepted, modified or declined. The team is exploring how to use these feedback signals to refine and regenerate better responses through reinforcement learning techniques. Another focus is assessing various re-ranker models to dynamically prioritize higher-quality responses, especially for edge cases.
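To show how explicit and implicit signals might be combined, the sketch below folds both into a single scalar score per response, the kind of signal that a reward-driven training or re-ranking step could consume. It does not implement reinforcement learning itself, and the weights, outcome values, and names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed scores for implicit outcomes; real values would need tuning.
IMPLICIT_REWARD = {"accepted": 1.0, "modified": 0.3, "declined": -1.0}


@dataclass
class Feedback:
    response_id: str
    outcome: str                   # "accepted", "modified", or "declined"
    thumbs: Optional[bool] = None  # True = thumbs-up, False = thumbs-down


def reward(feedback, explicit_weight=0.6):
    """Blend explicit and implicit feedback into a score in [-1, 1]."""
    implicit = IMPLICIT_REWARD[feedback.outcome]
    if feedback.thumbs is None:
        # No explicit rating was given, so rely on the implicit outcome alone.
        return implicit
    explicit = 1.0 if feedback.thumbs else -1.0
    return explicit_weight * explicit + (1 - explicit_weight) * implicit


events = [
    Feedback("r1", "accepted"),
    Feedback("r2", "modified", thumbs=False),
    Feedback("r3", "declined", thumbs=False),
]
for event in events:
    print(event.response_id, round(reward(event), 2))
```

Weighting the explicit rating more heavily reflects the assumption that a deliberate thumbs-down is a stronger signal than an edit, which may only indicate a stylistic preference.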

Automation is also critical in development. The team has established pipelines for automated testing and evaluation, consistently validating model performance. These pipelines support iterative improvements while ensuring the reliability of updates, keeping the AI Apps team at the forefront of innovation.

Swapna dives deeper into her team’s R&D work.

How do you balance the need for rapid deployment with maintaining high standards of trust and security?

Balancing rapid deployment with trust requires a structured approach. The AI Apps team leverages continuous integration and deployment (CI/CD) pipelines to automate rigorous deployment processes. These pipelines ensure that updates meet Salesforce’s stringent quality standards before they are released.

Incremental rollouts, supported by feature flags, allow the team to release updates gradually. This process minimizes the potential impact of unforeseen issues and enables quick interventions when necessary. Feature flagging not only allows for phased rollouts but also facilitates rapid rollbacks if a critical issue is detected.
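A common way to implement this kind of phased rollout is deterministic percentage bucketing: each user is hashed into one of 100 buckets, and the flag is on for buckets below the current rollout percentage. The sketch below assumes that approach; the flag name, user IDs, and function are hypothetical and do not represent the team's feature-flag system.

```python
import hashlib


def is_enabled(flag, user_id, rollout_percent):
    """Return True if this user falls inside the flag's rollout percentage."""
    # Hash flag and user together so each flag gets an independent bucketing.
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < rollout_percent


users = [f"user-{i}" for i in range(1000)]
for percent in (5, 25, 100):
    enabled = sum(is_enabled("new-reply-model", u, percent) for u in users)
    print(f"{percent}% rollout -> {enabled} of {len(users)} users enabled")
```

Because a user's bucket never changes, raising the percentage only adds users, and setting it back to 0 acts as an immediate rollback for everyone.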

Real-time monitoring tools such as Splunk and Grafana are essential to this process, tracking application health and alerting the team to anomalies like performance drops or unexpected behaviors. For instance, when upgrading a model for Service Replies, the team first tested it with a small subset of users to evaluate performance before scaling deployment. These strategies ensure innovations are delivered quickly while maintaining the security and reliability Salesforce users expect.

What strategies does your team use to ensure that enhancements in one area of your project don’t compromise other areas?

To prevent disruptions, the AI Apps team employs a modular architecture that allows components like data pipelines, backend services and user interfaces to be developed independently. This approach minimizes the risk of cross-impact when updates are made in one area. For instance, an update to improve model retrieval accuracy is designed to operate without affecting frontend interfaces or storage systems.

Comprehensive testing and validation is another key strategy. The team conducts unit, integration, end-to-end and performance testing to ensure changes function cohesively across the system. Impact analyses are also used to identify potential effects of updates, such as increased latency or resource consumption. Regression testing ensures that even minor updates do not inadvertently break downstream dependencies.

Lastly, we foster collaboration among cross-functional teams to ensure that enhancements are aligned with overall project goals and user needs. This holistic approach helps us avoid focusing on one area at the expense of others, ensuring that all components of the system work together cohesively.
