In our “Engineering Energizers” Q&A series, we delve into the journeys of engineering leaders who have made significant strides in their fields. Today, we feature Neha Gupta, Senior Manager of Software Engineering at Salesforce, who heads Data Cloud’s Data Graph team, tackling large-scale data processing challenges. Neha’s team is tasked with accelerating data lookups from all the data available in different datasets, ensuring swift retrieval of customer information for real-time decision-making.

Explore how Neha’s team overcomes the challenges of scaling their systems to handle billions of records, optimizing data retrieval speeds to provide fast lookups, and maintaining system availability under demanding conditions.

What is your team’s mission?

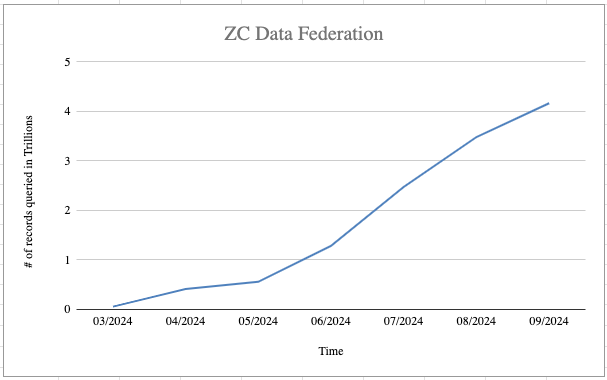

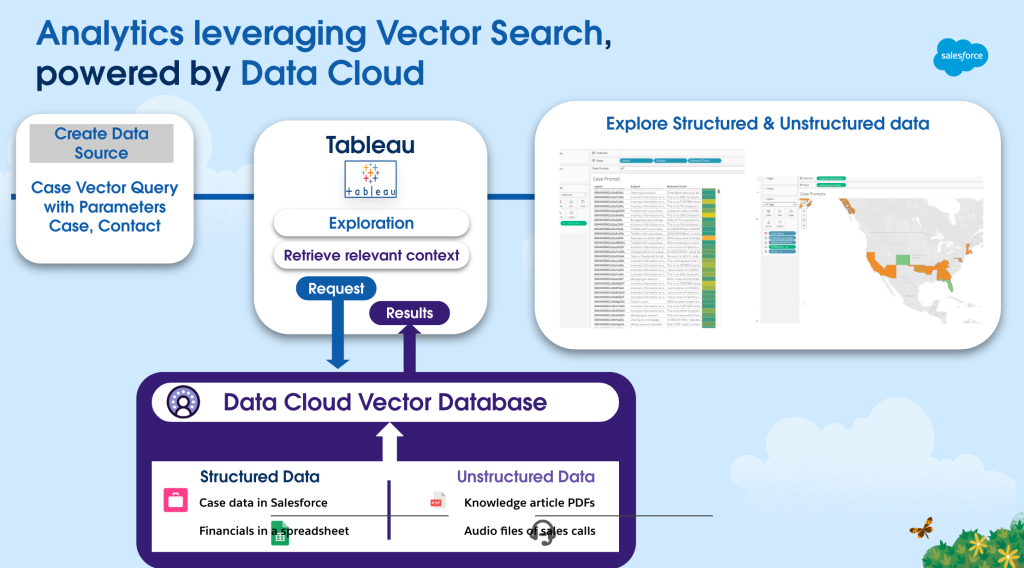

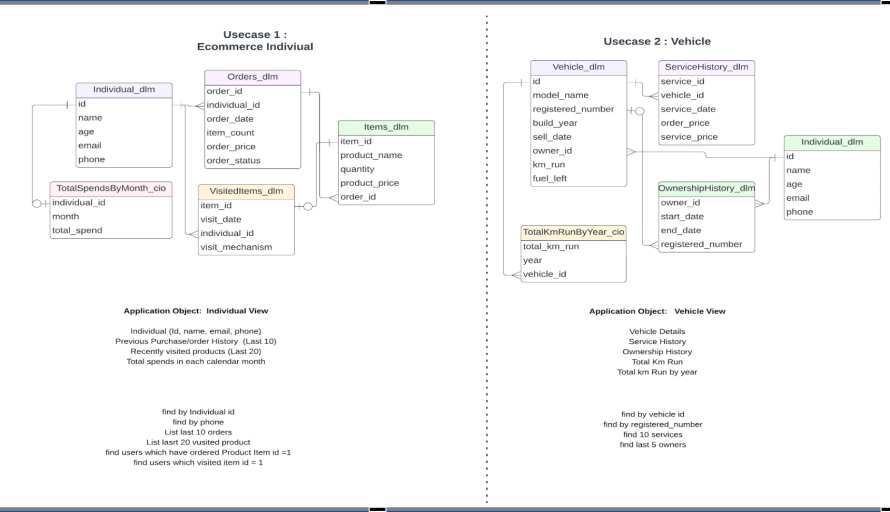

Our team’s mission revolves around Data Graph, a critical feature within Data Cloud. The team addresses complex big data challenges by facilitating faster, more efficient data lookups and insights for our customers. Data Graph pre-computes views of extensive datasets, enabling sub-second lookups across multiple tables and records, eliminating the need for customers to manually query each data point.

In essence, Data Graph organizes data into a structure that allows customers to retrieve comprehensive information swiftly, even when dealing with billions of records, to support real-time decision-making. Whether it’s customer profiles, purchase history, or engagement details, the system ensures access within milliseconds. For example, if a business needs to review all interactions with a client from the past 10 days, Data Graph can pre-process and store this data, making it instantly available when required.

Neha dives deeper into her team’s mission.

How do you ensure scalability in Data Graph to handle massive datasets while maintaining efficiency?

Scalability is fundamental to Data Graph’s design. When developing new features or enhancements, the system undergoes stress testing to its limits. If customers typically process hundreds of millions of records, the system is built to handle significantly larger volumes, ensuring room for growth without performance loss.

Customers often import data across 10 to 25 or more objects simultaneously, and Data Graph is engineered to manage these complex datasets efficiently. To guarantee scalability, performance testing simulates large-scale, real-world scenarios. This process identifies and resolves potential issues before they impact customers.

By ensuring the system can handle massive datasets while maintaining efficiency, Data Graph delivers scalable solutions that meet the needs of both small and large businesses. This allows for growth without data processing bottlenecks.

What performance improvements has your team made in processing large datasets, and what challenges did you overcome

A major achievement has been reducing the time required to process large datasets. Initially, processing 200 million records could take up to three hours. After optimizing systems, code, and architecture, the time reduced to 90 minutes, marking a substantial improvement.

To achieve this enhancement, several key challenges were tackled. The main hurdle was ensuring efficient handling of vast data across multiple tables while delivering results in near-real-time. Early attempts often led to failures, especially with large-scale data joins and complex relationships between different datasets. Through several iterations and system optimizations, processing speed improved and system failures reduced.

The system now supports an extremely high processing capacity, handling enormous volumes of data daily. Overcoming these challenges resulted in a system capable of managing massive amounts of data without compromising speed.

Neha shares what keeps her at Salesforce.

How do you ensure that new enhancements in Data Graph are deployed without affecting system performance or security?

A careful approach ensures that any new enhancement in Data Graph does not negatively affect other parts of the system. Several best practices are relied upon, including automated testing that detects regression bugs—issues where new code unintentionally disrupts existing functionality. Performance testing ensures that any enhancement maintains or improves the overall system’s efficiency.

In addition to testing, a gated release process is used, where each enhancement must pass several verification “gates” before it can move forward. These gates test the feature in isolation, then alongside other system components to ensure compatibility. Once this is completed, the enhancement undergoes further checks for performance and security. Automated tests simulate various scenarios, checking for performance under load, security vulnerabilities, and potential regression issues.

This structured release process allows for quick movement, ensuring features are deployed efficiently while maintaining the high security and trust standards customers rely on.

Neha explores what makes Salesforce Engineering’s culture unique.

How does Data Graph manage data refresh cycles?

Non-real-time Data Graph is currently refreshed daily, but customers can adjust the refresh cycle to hourly, every 4 hours, weekly, or monthly. We are actively working to shorten the refresh time for slower-moving data, aiming to offer sub-hourly updates. This is a significant challenge, as customers often process millions or even billions of records, which must be handled swiftly without impacting system performance. In contrast, the Real-Time Data Graph updates with sub-second latency, using engagement data from web and mobile sources.

To optimize the process, hourly incremental updates are being introduced. These updates allow for the refresh of only the newly added or changed data instead of reprocessing the entire dataset. This approach will significantly reduce the overall time required to update data graphs and meet the growing data needs of customers.

How do you incorporate customer feedback into the ongoing development and optimization of Data Graph?

Since Data Graph is a relatively new feature, gathering customer feedback is an essential part of its development. Several methods are used to capture feedback, starting with internal partners at Salesforce who use Data Graph for products like Sales Cloud and Marketing Cloud. Regular office hours are held with these teams to discuss performance and gather feedback on pain points or areas for improvement.

Externally, Data Graph is being piloted with select customers to gather real-world usage data. This feedback helps understand how customers are using the feature and what additional capabilities they need. Updates are prioritized in quarterly release cycles, and when necessary, urgent changes are implemented to address customer needs sooner.

By continuously gathering and acting on customer feedback, Data Graph evolves to meet the changing needs of businesses.

Learn More

- To dive deeper into Data Graph, read this blog.

- Learn Data Cloud’s secret for scaling massive data volumes in this blog.

- Stay connected — join our Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.