Elastic Kubernetes Service (EKS) is an Amazon Web Services (AWS) offering that provides a managed Kubernetes service. It significantly reduces the time needed to deploy, manage, and scale the infrastructure required to run production-scale Kubernetes clusters.

AWS has simplified EKS networking significantly with its container network interface (CNI) plugin. With no network overlays, a Kubernetes pod (container) gets an IP address from the same Virtual Private Cloud (VPC) subnet as an Elastic Compute Cloud (EC2) instance would. This means that any workload, be it a container, Lambda function, or EC2 instance, can talk to any other without the need for Network Address Translation (NAT).

Each EC2 instance can have multiple elastic network interfaces (ENIs) attached to it, and each interface can be assigned multiple IP addresses from the VPC’s private address space.

- The first interface, called the primary interface, is assigned an IP address called the primary IP address.

- All other interfaces are called secondary interfaces and hold secondary IP addresses.

The CNI plugin, which runs as a pod on each Kubernetes node (EC2 instance), is responsible for the networking on each node. By default, it assigns each new pod a private IP address from the VPC private subnet that the Kubernetes node is in while attaching the IP address to one of the secondary ENIs of the node.

The plugin consists of two primary components:

- IPAM daemon (ipamD), responsible for attaching ENIs to instances, assigning secondary IP addresses to these ENIs, and so on

- CNI plugin, responsible for setting up the virtual ethernet (veth) interfaces, attaching them to the pod, and setting up the bridge between the veth and the host network

Problem

By default, AWS reserves a large pool of IP addresses for each EKS (Kubernetes) node so that they are always available to that node. This pool of IPs, also known as the “warm pool,” is attached to the secondary interface of the node. Its size is determined by the EC2 instance type, and it cannot be shared with any other AWS service or node.

For example, an instance of type m3.2xlarge can have up to four ENIs, and each ENI can be assigned up to 30 IP addresses. With default CNI configuration, when a worker node first joins the cluster, ipamD ensures that, along with an active (primary) ENI holding the primary and secondary IP addresses, there’s also a spare ENI attached to the node working in standby mode at all times. The standby ENI and its IP addresses will be utilized when there are no more secondary IP addresses on the primary ENI. Larger instance types have a higher limit on the number of ENIs that can be attached and hence can hold more secondary IP addresses (for more information, see IP Addresses Per Network Interface Per Instance Type document).

So the total number of IPs reserved for an instance of type m3.2xlarge at any point in time will be 60:

- Primary ENI (active): 1 primary IP + 1*29 secondary IPs

- Secondary ENI (standby): 1 primary IP + 1*29 secondary IPs

This is a particularly large number of IPs for a single Kubernetes node, which might run only a few resource-intensive applications and daemon set pods. We try to size our EC2 instances so that a single replica of the application runs on a given node at any time (although, of course, some exceptions apply). Adding all the daemon sets (monitoring, logging, networking, etc.), the average number of pods on a node in our environment hovers around 5–7, hence requiring no more than 10 IPs per node.

With this default behavior, roughly 50 IPs per node are unused, which is inefficient and, if not properly planned or triaged, can quickly lead to VPC subnet exhaustion.
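To make the arithmetic above concrete, here is a minimal sketch that reproduces the 60 reserved and 50 unused IPs per node, and then shows how few nodes fit into a subnet at that rate. The /24 subnet is a hypothetical example, not something from our environment; the function names are ours, and the only outside fact used is that AWS reserves 5 addresses in every VPC subnet.

```python
# Figures for m3.2xlarge come from the text above; the /24 subnet is hypothetical.
IPS_PER_ENI = 30          # addresses per ENI on an m3.2xlarge
WARM_ENI_TARGET = 1       # default: one standby ENI
IPS_NEEDED_PER_NODE = 10  # upper bound for the 5-7 pods we see per node


def default_reserved_ips(ips_per_eni: int, warm_eni_target: int) -> int:
    """IPs held by a node with one active ENI plus its standby ENIs."""
    return (1 + warm_eni_target) * ips_per_eni


def usable_subnet_ips(prefix_len: int) -> int:
    """Usable IPv4 addresses in a VPC subnet (AWS reserves 5 per subnet)."""
    return 2 ** (32 - prefix_len) - 5


reserved = default_reserved_ips(IPS_PER_ENI, WARM_ENI_TARGET)
unused = reserved - IPS_NEEDED_PER_NODE
subnet_ips = usable_subnet_ips(24)

print(reserved)                           # 60
print(unused)                             # 50
print(subnet_ips // reserved)             # 4 nodes per /24 with defaults
print(subnet_ips // IPS_NEEDED_PER_NODE)  # 25 nodes per /24 at 10 IPs each
```

In this hypothetical /24, the default settings let only a handful of nodes join before the subnet runs dry, even though most of the reserved addresses never serve a pod.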

Solution

AWS provides a couple of CNI config variables that you can set to avoid such scenarios. The config variable WARM_IP_TARGET specifies the number of free IPs that ipamD should attempt to keep available for pod assignment on the node at all times. WARM_ENI_TARGET, on the other hand, specifies the number of ENIs ipamD must keep ready to be attached to the node at all times.

NOTE: AWS defaults the value of WARM_ENI_TARGET to 1 in the CNI config.

For example, if the WARM_IP_TARGET variable is set to a value of 10, then ipamD attempts to keep 10 free IP addresses available at all times. If the ENIs on the node are unable to provide these free addresses, ipamD attempts to allocate more interfaces until WARM_IP_TARGET number of free IP addresses are available in the reserved pool.

$ diff -up aws-k8s-cni-without-warm-ip-pool.yaml aws-k8s-cni-with-warm-ip-pool.yaml

+ - "name": "WARM_IP_TARGET"

+ "value": "10"
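The WARM_IP_TARGET rule described above can be sketched as a small function. This is a simplified model of the behavior as we understand it, not ipamD's actual code: the function name `ips_to_request` is hypothetical, and the sketch ignores ENI limits and the release of surplus addresses.

```python
def ips_to_request(allocated: int, assigned: int, warm_ip_target: int) -> int:
    """How many more IPs a node would need so that `warm_ip_target` stay free.

    allocated: IPs currently attached to the node's ENIs
    assigned:  IPs already handed out to pods
    """
    free = allocated - assigned
    return max(0, warm_ip_target - free)


# Fresh node with 10 IPs and no pods: the warm pool is already full.
print(ips_to_request(allocated=10, assigned=0, warm_ip_target=10))  # 0

# Four pods have taken addresses, so four more are needed to refill the pool.
print(ips_to_request(allocated=10, assigned=4, warm_ip_target=10))  # 4
```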

To understand this better, let’s look at a scenario where we launch an m3.2xlarge EKS node (which supports a maximum of 4 ENIs and 30 IPs per ENI) with WARM_IP_TARGET set to 10. Now ipamD will ensure that there are 10 free IPs on the primary ENI at any point in time, and, since there’s at least one pod using a secondary IP on the primary ENI, ipamD will honor the WARM_ENI_TARGET=1 config and attach a second ENI holding 10 more IPs.

With this setting, we have 20 IP addresses reserved at any point in time, a savings of 40 IPs over the previous setting:

- Primary ENI (active): 1 primary IP + 1*9 secondary IPs

- Secondary ENI (standby): 1 primary IP + 1*9 secondary IPs

If the number of pods scheduled to run on the node increases above 10, then ipamD will start allocating IP addresses to the primary ENI in blocks of 10. This continues until it has allocated 30 IPs, which is the maximum number of IPs that an ENI can hold. If another pod is then scheduled on this node, ipamD will start allocating IPs from the secondary ENI, and a third ENI is added to the node due to the WARM_ENI_TARGET config variable. Once the 30 IPs on the secondary ENI are depleted, IPs will be allocated from the third ENI.

Optimizing further, let’s set the WARM_ENI_TARGET environment variable to 0 in the CNI config:

$ diff -up aws-k8s-cni-with-warm-ip-pool.yaml aws-k8s-cni-with-warm-ip-pool-without-eni-pool.yaml

- "name": "WARM_ENI_TARGET"

- "value": "1"

+ "value": "0"

After applying the above settings, the secondary ENI is no longer kept in standby mode. It will only be attached when all of the secondary IPs on the primary ENI have been consumed, thus saving 10 more IPs.

- Primary ENI (active): 1 primary IP + 1*9 secondary IPs

With these two optimizations, we now keep 50 IP addresses per node from sitting idle. The ENIs and corresponding IPs are released back to the VPC subnet when the pods no longer exist on that node.
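The three configurations discussed so far can be compared side by side. The sketch below simply restates the per-node numbers from this section; the 100-node cluster at the end is a hypothetical figure used only to show how the savings add up.

```python
def reserved_ips(attached_enis: int, ips_per_eni: int) -> int:
    """Total IPs a node holds, counting each ENI's primary IP."""
    return attached_enis * ips_per_eni


# (attached ENIs, IPs held per ENI) for a freshly joined m3.2xlarge node
configs = {
    "default (WARM_ENI_TARGET=1)": reserved_ips(2, 30),
    "WARM_IP_TARGET=10, WARM_ENI_TARGET=1": reserved_ips(2, 10),
    "WARM_IP_TARGET=10, WARM_ENI_TARGET=0": reserved_ips(1, 10),
}

baseline = configs["default (WARM_ENI_TARGET=1)"]
for name, ips in configs.items():
    print(f"{name}: {ips} reserved, {baseline - ips} saved per node")

HYPOTHETICAL_NODES = 100  # assumption for illustration only
best = configs["WARM_IP_TARGET=10, WARM_ENI_TARGET=0"]
print((baseline - best) * HYPOTHETICAL_NODES)  # 5000 IPs across the cluster
```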

Performance

Let’s measure the time taken for a pod launch and see how this is affected in the scenario where there’s a need to attach a new ENI.

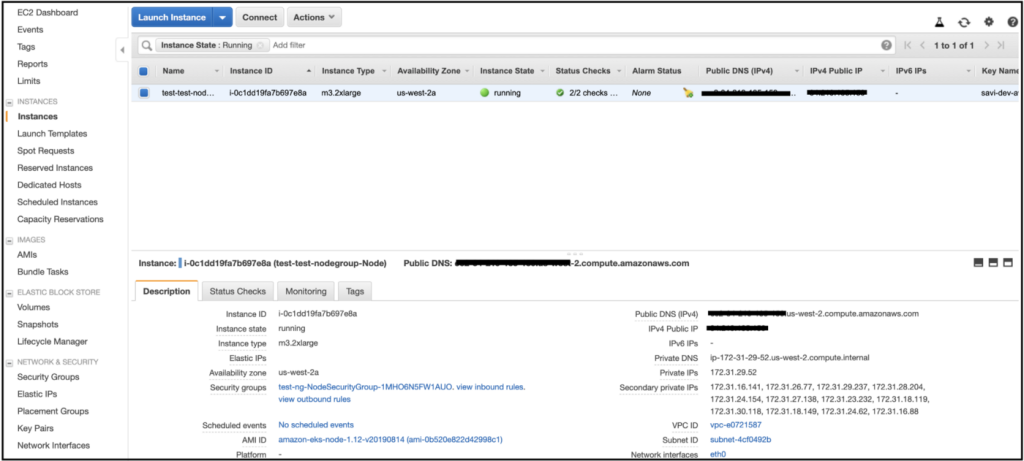

NOTE: The screenshots and CLI outputs below are from a test cluster that was created solely for this blog post.

DEFAULT SETTINGS

- In this setting, WARM_ENI_TARGET=1 and WARM_IP_TARGET is not set.

- The EKS node is of type m3.2xlarge and has at least 1 pod scheduled on it. Hence, during the node attach process, the instance has 2 ENIs (active and standby) attached and 60 IP addresses allocated (2 primary IPs + 2*29 secondary IPs).

$ aws ec2 describe-instances --instance-ids i-030f0defdcee570de | jq '.Reservations[0].Instances[0].NetworkInterfaces[0].PrivateIpAddresses' | grep PrivateIpAddress | wc -l

30

$ aws ec2 describe-instances --instance-ids i-030f0defdcee570de | jq '.Reservations[0].Instances[0].NetworkInterfaces[1].PrivateIpAddresses' | grep PrivateIpAddress | wc -l

30

- On creating a deployment with 15 pod replicas on the node, we observed that it took about 19 seconds to mark them as “Running.”

2020-08-20T01:19:13Z minus 2020-08-20T01:18:54Z = 19 seconds
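The `aws ... | jq ... | grep ... | wc -l` pipelines used in this section count the private IPs on one ENI at a time. As an alternative, here is a minimal sketch that counts them for every ENI in one pass over the `describe-instances` JSON. The function name and the sample addresses are made up for illustration; the key path is the same one the `jq` filters use.

```python
from typing import List


def ips_per_eni(response: dict) -> List[int]:
    """Return the number of private IPs on each ENI of the first instance."""
    instance = response["Reservations"][0]["Instances"][0]
    return [
        len(eni["PrivateIpAddresses"])
        for eni in instance["NetworkInterfaces"]
    ]


# Trimmed-down, made-up response with two ENIs (2 IPs and 1 IP).
sample = {
    "Reservations": [{"Instances": [{"NetworkInterfaces": [
        {"PrivateIpAddresses": [
            {"PrivateIpAddress": "10.0.0.10"},
            {"PrivateIpAddress": "10.0.0.11"},
        ]},
        {"PrivateIpAddresses": [
            {"PrivateIpAddress": "10.0.0.20"},
        ]},
    ]}]}]
}

print(ips_per_eni(sample))  # [2, 1]
```

To use it on a real node, you could save the output of `aws ec2 describe-instances --instance-ids <instance-id>` to a file and pass the result of `json.load` to the function.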

OPTIMIZED SETTINGS

- Here, we set WARM_ENI_TARGET=0 and WARM_IP_TARGET=10.

- Running the same experiment, the instance now has 13 IPs allocated to it when it joins the EKS cluster (1 primary IP + 1*12 secondary IPs = 13 IPs). Note that the 3 extra secondary IPs are used by pods running on the node.

$ aws ec2 describe-instances --instance-ids i-0c1dd19fa7b697e8a | jq '.Reservations[0].Instances[0].NetworkInterfaces[0].PrivateIpAddresses' | grep PrivateIpAddress | wc -l

13

$ aws ec2 describe-instances --instance-ids i-0c1dd19fa7b697e8a | jq '.Reservations[0].Instances[0].NetworkInterfaces[1].PrivateIpAddresses' | grep PrivateIpAddress | wc -l

0

- With the WARM_IP_TARGET config variable set, the time taken to deploy 15 pods is:

2020-08-20T02:01:55Z minus 2020-08-20T02:01:36Z = 19 seconds

- The 10 IPs in the warm pool are consumed by the first 10 pods, but there’s still a need for 5 more. So ipamD now allocates extra IPs (3 + 15 + 10 = 28 IPs) so that we have 10 free IP addresses at all times. Also noticeable is that there isn’t any delay in getting those extra IPs reserved for the node.

$ aws ec2 describe-instances --instance-ids i-0c1dd19fa7b697e8a | jq '.Reservations[0].Instances[0].NetworkInterfaces[0].PrivateIpAddresses' | grep PrivateIpAddress | wc -l

28

$ aws ec2 describe-instances --instance-ids i-0c1dd19fa7b697e8a | jq '.Reservations[0].Instances[0].NetworkInterfaces[1].PrivateIpAddresses' | grep PrivateIpAddress | wc -l

0

- On further scaling the deployment to 20 replicas, the five new pods get IP addresses from the warm pool. To satisfy the warm pool condition (the primary ENI cannot serve 23 secondary IPs and keep 10 free IPs in reserve at the same time), a secondary ENI is now attached to the instance.

$ aws ec2 describe-instances --instance-ids i-0c1dd19fa7b697e8a | jq '.Reservations[0].Instances[0].NetworkInterfaces[0].PrivateIpAddresses' | grep PrivateIpAddress | wc -l

28

$ aws ec2 describe-instances --instance-ids i-0c1dd19fa7b697e8a | jq '.Reservations[0].Instances[0].NetworkInterfaces[1].PrivateIpAddresses' | grep PrivateIpAddress | wc -l

6
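The IP counts in this experiment follow the same sum each time: the 3 addresses in use when the node joined, plus one per replica, plus the warm pool of 10. The sketch below applies that sum to each step and checks it against the 30-IP limit of a single ENI. It is a simplified model of what we observed, not ipamD's actual logic, and the names are ours.

```python
MAX_IPS_PER_ENI = 30  # m3.2xlarge limit
WARM_IP_TARGET = 10
BASELINE_IPS = 3      # addresses already in use when the node joined


def ips_wanted(replicas: int) -> int:
    """IPs the node needs: baseline + one per replica + the warm pool."""
    return BASELINE_IPS + replicas + WARM_IP_TARGET


def fits_on_primary(replicas: int) -> bool:
    """True if the primary ENI alone can hold everything."""
    return ips_wanted(replicas) <= MAX_IPS_PER_ENI


for replicas in (0, 15, 20):
    print(replicas, ips_wanted(replicas), fits_on_primary(replicas))
# 0 13 True
# 15 28 True
# 20 33 False
```

At 20 replicas the node wants more addresses than one ENI can hold, which is the point at which the second ENI showed up in the CLI output.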

- With the WARM_IP_TARGET config variable set and the deployment scaled to 20 pods, the time taken to deploy 20 pods is:

2020-08-20T02:11:29Z minus 2020-08-20T02:11:09Z = 20 seconds

- So we lost about a second when a new ENI had to be attached. On the other hand, we saved 50 IPs per node, which can be used to serve other EKS or EC2 workloads.
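For reference, the three timings above can be recomputed from the timestamps with a few lines of Python. The labels in the dictionary are ours; the timestamps are the ones recorded in this section.

```python
from datetime import datetime

FMT = "%Y-%m-%dT%H:%M:%SZ"


def elapsed_seconds(start: str, end: str) -> int:
    """Whole seconds between two UTC timestamps in the format above."""
    delta = datetime.strptime(end, FMT) - datetime.strptime(start, FMT)
    return int(delta.total_seconds())


runs = {
    "default, 15 pods": ("2020-08-20T01:18:54Z", "2020-08-20T01:19:13Z"),
    "optimized, 15 pods": ("2020-08-20T02:01:36Z", "2020-08-20T02:01:55Z"),
    "optimized, 20 pods (new ENI)": ("2020-08-20T02:11:09Z", "2020-08-20T02:11:29Z"),
}

for label, (start, end) in runs.items():
    print(f"{label}: {elapsed_seconds(start, end)} seconds")
# default, 15 pods: 19 seconds
# optimized, 15 pods: 19 seconds
# optimized, 20 pods (new ENI): 20 seconds
```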

Conclusion

Networking in AWS is generally simple but can get complicated if things are not configured correctly. VPC subnet exhaustion is a fairly common problem for many who run Kubernetes in the cloud (overlay networking mode excluded). It’s even more of a challenge when combined with the inability to add or update subnets registered with the EKS control plane.

This optimization helps minimize the impact of IP exhaustion when running production-scale Kubernetes clusters on AWS.